Haoyuan Tian

A Cross-Scene Benchmark for Open-World Drone Active Tracking

Dec 01, 2024

Abstract: Drone Visual Active Tracking aims to autonomously follow a target object by controlling the motion system based on visual observations, providing a more practical solution for effective tracking in dynamic environments. However, accurate Drone Visual Active Tracking using reinforcement learning remains challenging due to the absence of a unified benchmark, the complexity of open-world environments with frequent interference, and the diverse motion behavior of dynamic targets. To address these issues, we propose DAT, a unified cross-scene, cross-domain benchmark for open-world drone active tracking. The DAT benchmark provides 24 visually complex environments for assessing the cross-scene and cross-domain generalization abilities of algorithms, together with high-fidelity modeling of realistic robot dynamics. Additionally, we propose R-VAT, a reinforcement learning-based drone tracking method that aims to improve target-tracking performance in complex scenarios. Specifically, inspired by curriculum learning, we introduce a Curriculum-Based Training strategy that progressively improves the agent's tracking performance in vast environments with complex interference. We also design a goal-centered reward function that gives the drone agent precise feedback, preventing targets farther from the center of view from receiving higher rewards than closer ones. This allows the drone to adapt to the diverse motion behavior of open-world targets. Experiments demonstrate that R-VAT achieves about a 400% improvement over the SOTA method on the cumulative reward metric.
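The abstract states the key property of the goal-centered reward but not its formula. As an illustration only, a reward with that property (a target farther from the image center never earns more than a closer one) can be sketched as follows. The function name, the half-diagonal normalization, the linear falloff, and the lost-target penalty are all hypothetical choices, not R-VAT's actual reward.

```python
import math


def goal_centered_reward(target_xy, image_size, max_reward=1.0, lost_penalty=-1.0):
    """Hypothetical goal-centered reward (not the R-VAT formula).

    The reward depends only on the target's distance from the image center
    and strictly decreases as that distance grows, so a target farther from
    the center can never score higher than a closer one.
    """
    if target_xy is None:
        # Assumed penalty when the target is not visible at all.
        return lost_penalty
    width, height = image_size
    cx, cy = width / 2.0, height / 2.0
    # Normalize by the half-diagonal so in-frame distances fall in [0, 1].
    half_diag = math.hypot(cx, cy)
    d = min(math.hypot(target_xy[0] - cx, target_xy[1] - cy) / half_diag, 1.0)
    # Linear falloff: max_reward at the center, 0 at the image corners.
    return max_reward * (1.0 - d)


if __name__ == "__main__":
    size = (640, 480)
    for point in [(320, 240), (400, 240), (600, 50), None]:
        print(point, round(goal_centered_reward(point, size), 3))
```

Any strictly decreasing function of the normalized distance (for example, a Gaussian) would preserve the same ordering; the linear form is used here only because it is the easiest to inspect.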

Automatic bony structure segmentation and curvature estimation on ultrasound cervical spine images -- a feasibility study

Dec 19, 2023

Abstract: The loss of cervical lordosis is a common degenerative disorder known to be associated with abnormal spinal alignment. In recent years, ultrasound (US) imaging has been widely applied in the assessment of spine deformity and has shown promising results. The objectives of this study are to automatically segment bony structures from the 3D US cervical spine image volume and to assess cervical lordosis on the key sagittal frames. In this study, a portable ultrasound imaging system was used to acquire the cervical spine image volume. An nnU-Net was trained to segment bony structures on the transverse images and validated by 5-fold cross-validation. The volume data were reconstructed from the segmented image series. An energy function reflecting the intensity levels and integrity of the bony structures was designed to extract proxy key sagittal frames on both the left and right sides for cervical curve measurement. The mean absolute difference (MAD), standard deviation (SD), and correlation between the left- and right-side spine curvatures were calculated to evaluate the proposed method quantitatively. The nnU-Net model achieved a Dice similarity coefficient (DSC) of 0.973 in segmenting the ROI. For the measurement of 22 lamina curve angles, the MAD, SD, and correlation between the left and right sides of the cervical spine were 3.591°, 3.432°, and 0.926, respectively. The results indicate that our method segments the cervical spine automatically with high accuracy and reliability, and they show the potential of 3D ultrasound imaging for diagnosing the loss of cervical lordosis.

Towards Artistic Image Aesthetics Assessment: a Large-scale Dataset and a New Method

Mar 27, 2023

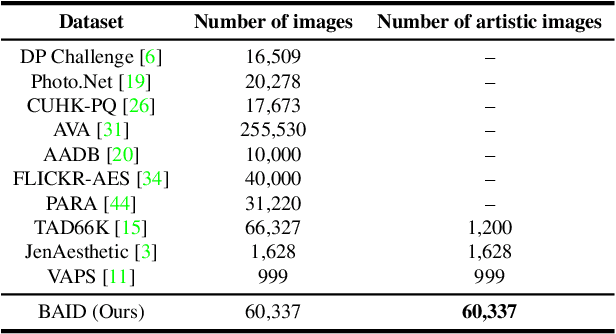

Abstract: Image aesthetics assessment (IAA) is a challenging task due to its highly subjective nature. Most current studies rely on large-scale datasets (e.g., AVA and AADB) to learn a general model for all kinds of photographic images. However, little light has been shed on measuring the aesthetic quality of artistic images, and the existing datasets contain relatively few artworks. This gap is a major obstacle to the aesthetic assessment of artistic images. To fill the gap in artistic image aesthetics assessment (AIAA), we first introduce a large-scale AIAA dataset, the Boldbrush Artistic Image Dataset (BAID), which consists of 60,337 artistic images covering various art forms, with more than 360,000 votes from online users. We then propose a new method, SAAN (Style-specific Art Assessment Network), which can effectively extract and utilize style-specific and generic aesthetic information to evaluate artistic images. Experiments demonstrate that our proposed approach outperforms existing IAA methods on the proposed BAID dataset in quantitative comparisons. We believe the proposed dataset and method can serve as a foundation for future AIAA work and inspire more research in this field. Dataset and code are available at: https://github.com/Dreemurr-T/BAID.git
