Haoran Ma

An LLM-LVLM Driven Agent for Iterative and Fine-Grained Image Editing

Aug 24, 2025

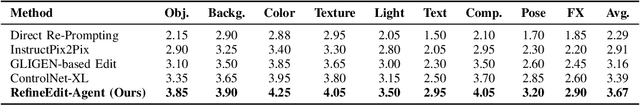

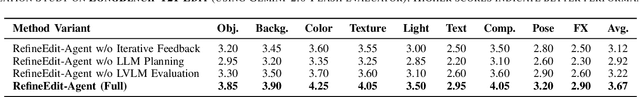

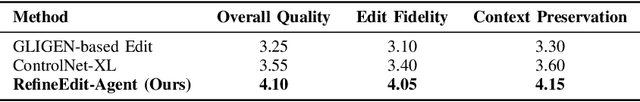

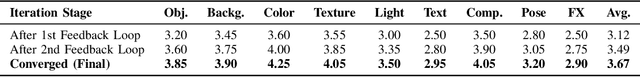

Abstract:Despite the remarkable capabilities of text-to-image (T2I) generation models, real-world applications often demand fine-grained, iterative image editing that existing methods struggle to provide. Key challenges include granular instruction understanding, robust context preservation during modifications, and the lack of intelligent feedback mechanisms for iterative refinement. This paper introduces RefineEdit-Agent, a novel, training-free intelligent agent framework designed to address these limitations by enabling complex, iterative, and context-aware image editing. RefineEdit-Agent leverages the powerful planning capabilities of Large Language Models (LLMs) and the advanced visual understanding and evaluation prowess of Vision-Language Large Models (LVLMs) within a closed-loop system. Our framework comprises an LVLM-driven instruction parser and scene understanding module, a multi-level LLM-driven editing planner for goal decomposition, tool selection, and sequence generation, an iterative image editing module, and a crucial LVLM-driven feedback and evaluation loop. To rigorously evaluate RefineEdit-Agent, we propose LongBench-T2I-Edit, a new benchmark featuring 500 initial images with complex, multi-turn editing instructions across nine visual dimensions. Extensive experiments demonstrate that RefineEdit-Agent significantly outperforms state-of-the-art baselines, achieving an average score of 3.67 on LongBench-T2I-Edit, compared to 2.29 for Direct Re-Prompting, 2.91 for InstructPix2Pix, 3.16 for GLIGEN-based Edit, and 3.39 for ControlNet-XL. Ablation studies, human evaluations, and analyses of iterative refinement, backbone choices, tool usage, and robustness to instruction complexity further validate the efficacy of our agentic design in delivering superior edit fidelity and context preservation.
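The closed-loop idea described in this abstract (plan, edit, evaluate, then re-plan from feedback) can be illustrated with a minimal sketch. Everything below is a hypothetical stand-in and not the paper's implementation: an "image" is modeled as a set of attribute strings, `toy_plan` plays the role of the LLM planner, `toy_apply` the editing tool, and `toy_evaluate` the LVLM evaluator. The names `EditStep`, `refine_loop`, and the tool name `"inpaint"` are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass(frozen=True)
class EditStep:
    tool: str    # hypothetical tool name chosen by the planner
    target: str  # what the tool should change


Image = Set[str]  # toy stand-in: an "image" is the set of attributes it shows


def refine_loop(
    image: Image,
    goals: List[str],
    plan: Callable[[List[str]], List[EditStep]],
    apply_step: Callable[[Image, EditStep], Image],
    evaluate: Callable[[Image, List[str]], Tuple[float, List[str]]],
    threshold: float = 1.0,
    max_rounds: int = 5,
) -> Tuple[Image, List[float]]:
    """Plan, edit, evaluate; repeat until the evaluator is satisfied."""
    scores: List[float] = []
    score, missing = evaluate(image, goals)
    for _ in range(max_rounds):
        if score >= threshold:
            break
        # The planner only sees the evaluator's feedback (unmet goals).
        for step in plan(missing):
            image = apply_step(image, step)
        score, missing = evaluate(image, goals)
        scores.append(score)
    return image, scores


def toy_evaluate(image: Image, goals: List[str]) -> Tuple[float, List[str]]:
    # Stand-in for an LVLM evaluator: fraction of goals met, plus unmet goals.
    missing = [g for g in goals if g not in image]
    return 1.0 - len(missing) / len(goals), missing


def toy_plan(missing: List[str]) -> List[EditStep]:
    # Stand-in for an LLM planner: address one unmet goal per round.
    return [EditStep(tool="inpaint", target=missing[0])]


def toy_apply(image: Image, step: EditStep) -> Image:
    # Stand-in for an editing tool: the edit always succeeds.
    return image | {step.target}


final_image, scores = refine_loop(
    {"dog"}, ["dog", "red collar", "snow"], toy_plan, toy_apply, toy_evaluate
)
```

In this toy setting the loop needs two rounds because the planner fixes one unmet goal per round. A real system would replace the three stubs with model calls and would have to handle edits that fail or damage previously satisfied goals.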

Environment-Aware and Human-Cooperative Swing Control for Lower-Limb Prostheses in Diverse Obstacle Scenarios

Jul 01, 2025

Abstract:Current control strategies for powered lower limb prostheses often lack awareness of the environment and the user's intended interactions with it. This limitation becomes particularly apparent in complex terrains. Obstacle negotiation, a critical scenario exemplifying such challenges, requires both real-time perception of obstacle geometry and responsiveness to user intention about when and where to step over or onto, to dynamically adjust swing trajectories. We propose a novel control strategy that fuses environmental awareness and human cooperativeness: an on-board depth camera detects obstacles ahead of swing phase, prompting an elevated early-swing trajectory to ensure clearance, while late-swing control defers to natural biomechanical cues from the user. This approach enables intuitive stepping strategies without requiring unnatural movement patterns. Experiments with three non-amputee participants demonstrated 100 percent success across more than 150 step-overs and 30 step-ons with randomly placed obstacles of varying heights (4-16 cm) and distances (15-70 cm). By effectively addressing obstacle navigation -- a gateway challenge for complex terrain mobility -- our system demonstrates adaptability to both environmental constraints and user intentions, with promising applications across diverse locomotion scenarios.

Navigating Heat Exposure: Simulation of Route Planning Based on Visual Language Model Agents

Mar 17, 2025

Abstract:Heat exposure significantly influences pedestrian routing behaviors. Existing methods such as agent-based modeling (ABM) and empirical measurements fail to account for individual physiological variations and environmental perception mechanisms under thermal stress. This results in a lack of human-centred, heat-adaptive routing suggestions. To address these limitations, we propose a novel Vision Language Model (VLM)-driven Persona-Perception-Planning-Memory (PPPM) framework that integrates street view imagery and urban network topology to simulate heat-adaptive pedestrian routing. Through structured prompt engineering on the Gemini-2.0 model, eight distinct heat-sensitive personas were created to model mobility behaviors during heat exposure, with empirical validation through a questionnaire survey. Results demonstrate that simulation outputs effectively capture inter-persona variations, achieving highly significant congruence with observed route preferences and highlighting differences in the factors driving agents' decisions. Our framework is highly cost-effective, with simulations costing 0.006 USD and taking 47.81 s per route. This Artificial Intelligence-Generated Content (AIGC) methodology advances urban climate adaptation research by enabling high-resolution simulation of thermal-responsive mobility patterns, providing actionable insights for climate-resilient urban planning.

ConServe: Harvesting GPUs for Low-Latency and High-Throughput Large Language Model Serving

Oct 02, 2024

Abstract:Many applications are leveraging large language models (LLMs) for complex tasks, and they generally demand low inference latency and high serving throughput for interactive online jobs such as chatbots. However, the tight latency requirement and high load variance of applications pose challenges to serving systems in achieving high GPU utilization. Due to the high costs of scheduling and preemption, today's systems generally use separate clusters to serve online and offline inference tasks, and dedicate GPUs to online inference to avoid interference. This approach leads to underutilized GPUs because one must reserve enough GPU resources for the peak expected load, even if the average load is low. This paper proposes to harvest stranded GPU resources for offline LLM inference tasks such as document summarization and LLM benchmarking. Unlike online inference, these tasks usually run in a batch-processing manner with loose latency requirements, making them a good fit for stranded resources that are available only briefly. To enable safe and efficient GPU harvesting without interfering with online tasks, we built ConServe, an LLM serving system that contains (1) an execution engine that preempts running offline tasks upon the arrival of online tasks, (2) an incremental checkpointing mechanism that minimizes the amount of recomputation required by preemptions, and (3) a scheduler that adaptively batches offline tasks for higher GPU utilization. Our evaluation demonstrates that ConServe achieves strong performance isolation when co-serving online and offline tasks while reaching much higher GPU utilization. When colocating practical online and offline workloads on popular models such as Llama-2-7B, ConServe achieves 2.35$\times$ higher throughput than state-of-the-art online serving systems and reduces serving latency by 84$\times$ compared to existing co-serving systems.
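To make the interplay of preemption and incremental checkpointing more concrete, here is a toy simulation. It is only a sketch under strong assumptions and does not reflect ConServe's actual engine: time advances in discrete ticks, one offline token is generated per free tick, an online arrival preempts the offline job for one tick, and a preempted job rolls back to its last checkpoint. The names `OfflineJob`, `run`, and `checkpoint_every` are hypothetical.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class OfflineJob:
    total_tokens: int
    done: int = 0          # tokens generated so far
    checkpointed: int = 0  # tokens whose state has been saved
    recomputed: int = 0    # tokens lost to preemption and generated again


def run(job: OfflineJob, online_arrivals: Set[int],
        checkpoint_every: int, max_ticks: int = 1000) -> int:
    """Simulate ticks and return the tick at which the offline job finishes."""
    for tick in range(max_ticks):
        if job.done >= job.total_tokens:
            return tick
        if tick in online_arrivals:
            # Online work takes the GPU: un-checkpointed progress is lost.
            job.recomputed += job.done - job.checkpointed
            job.done = job.checkpointed
            continue
        job.done += 1
        if job.done % checkpoint_every == 0:
            job.checkpointed = job.done  # save state incrementally
    raise RuntimeError("job did not finish within max_ticks")


# Same workload, with frequent checkpoints versus effectively none.
with_ckpt = OfflineJob(total_tokens=10)
ticks_with = run(with_ckpt, online_arrivals={5}, checkpoint_every=2)

without_ckpt = OfflineJob(total_tokens=10)
ticks_without = run(without_ckpt, online_arrivals={5}, checkpoint_every=100)
```

With a checkpoint every two tokens, the single preemption at tick 5 costs one token of recomputation; without checkpoints it costs all five tokens generated so far. The sketch ignores the cost of writing checkpoints, which a real system has to balance against the recomputation saved.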

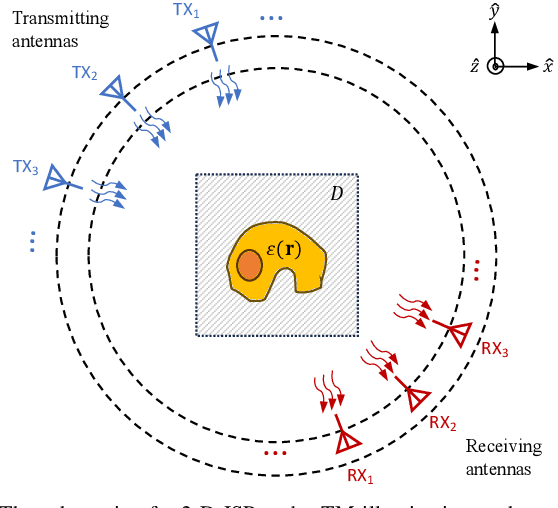

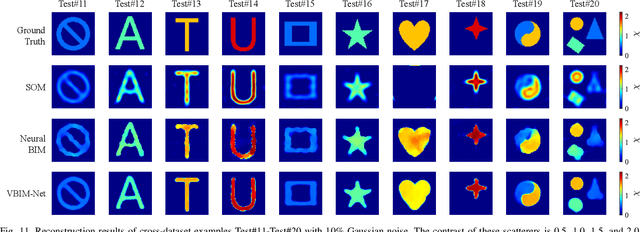

VBIM-Net: Variational Born Iterative Network for Inverse Scattering Problems

May 29, 2024

Abstract:Recently, studies have shown the potential of integrating field-type iterative methods with deep learning (DL) techniques in solving inverse scattering problems (ISPs). In this article, we propose a novel Variational Born Iterative Network, namely, VBIM-Net, to solve the full-wave ISPs with significantly improved flexibility and inversion quality. The proposed VBIM-Net emulates the alternating updates of the total electric field and the contrast in the variational Born iterative method (VBIM) by multiple layers of subnetworks. We embed the calculation of the contrast variation into each of the subnetworks, converting the scattered field residual into an approximate contrast variation and then enhancing it by a U-Net, thus avoiding the requirement of matched measurement dimension and grid resolution as in existing approaches. The total field and contrast of each layer's output are supervised in the loss function of VBIM-Net, which guarantees the physical interpretability of variables of the subnetworks. In addition, we design a training scheme with extra noise to enhance the model's stability. Extensive numerical results on both synthetic and experimental data verify the inversion quality, generalization ability, and robustness of the proposed VBIM-Net. This work may provide some new inspiration for the design of efficient field-type DL schemes.

How does spatial structure affect psychological restoration? A method based on Graph Neural Networks and Street View Imagery

Nov 30, 2023

Abstract:The Attention Restoration Theory (ART) presents a theoretical framework with four essential indicators (being away, extent, fascination, and compatibility) for comprehending urban and natural restoration quality. However, previous studies relied on non-sequential data and non-spatial dependent methods, which overlook the impact of spatial structure, defined here as the positional relationships between scene entities, on restoration quality. The past methods also make it challenging to measure restoration quality on an urban scale. In this work, a spatial-dependent graph neural network (GNN) approach is proposed to reveal the relation between spatial structure and restoration quality on an urban scale. Specifically, we constructed two different types of graphs at the street and city levels. The street-level graphs, using sequential street view images (SVIs) of road segments to capture position relationships between entities, were used to represent spatial structure. The city-level graph, modeling the topological relationships of roads as non-Euclidean data structures and embedding urban features (including Perception-features, Spatial-features, and Socioeconomic-features), was used to measure restoration quality. The results demonstrate that: 1) the spatial-dependent GNN model outperforms traditional methods (Acc = 0.735, F1 = 0.732); 2) spatial structure portrayed through sequential SVI data significantly influences restoration quality; 3) spaces with the same restoration quality exhibited distinct spatial structure patterns. This study clarifies the association between spatial structure and restoration quality, providing a new perspective to improve urban well-being in the future.

A natural language processing-based approach: mapping human perception by understanding deep semantic features in street view images

Nov 29, 2023

Abstract:In the past decade, using Street View images and machine learning to measure human perception has become a mainstream research approach in urban science. However, because this approach uses only shallow image information, it is difficult to comprehensively understand the deep semantic features of human perception of a scene. In this study, we proposed a new framework based on a pre-trained natural language model to understand the relationship between human perception and the sense of a scene. Firstly, Place Pulse 2.0 was used as our base dataset, which contains a variety of human-perceived labels, namely, beautiful, safe, wealthy, depressing, boring, and lively. An image captioning network was used to extract the description information of each street view image. Secondly, a pre-trained BERT model was fine-tuned with an added regression function for the six human perceptual dimensions. Furthermore, we compared the performance of five traditional regression methods with our approach and conducted a migration experiment in Hong Kong. Our results show that human perception scoring by deep semantic features performed better than previous studies by machine learning methods with shallow features. The use of deep scene semantic features provides new ideas for subsequent human perception research, as well as better explanatory power in the face of spatial heterogeneity.

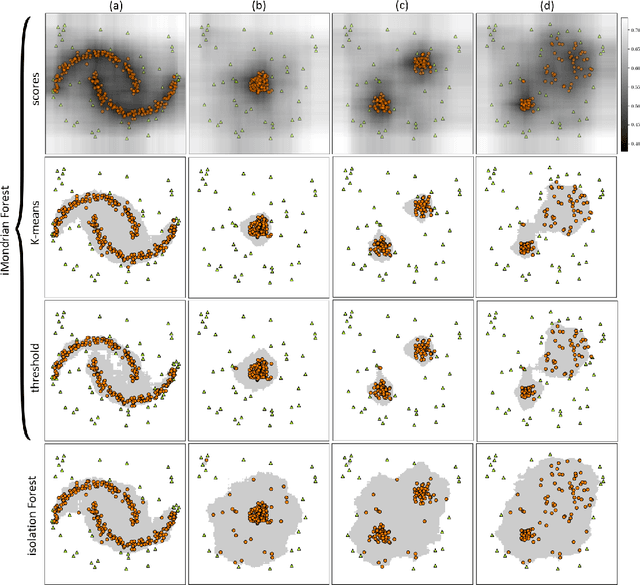

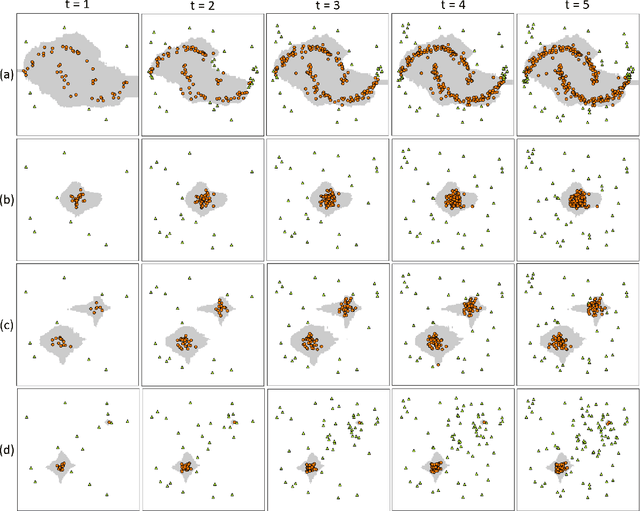

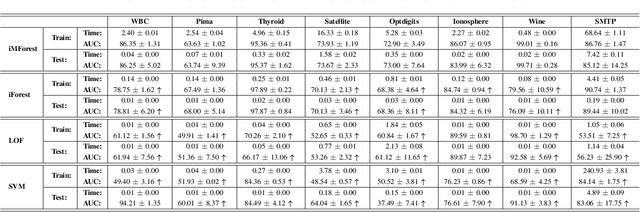

Isolation Mondrian Forest for Batch and Online Anomaly Detection

Mar 08, 2020

Abstract:We propose a new method, named isolation Mondrian forest (iMondrian forest), for batch and online anomaly detection. The proposed method is a novel hybrid of isolation forest and Mondrian forest which are existing methods for batch anomaly detection and online random forest, respectively. iMondrian forest takes the idea of isolation, using the depth of a node in a tree, and implements it in the Mondrian forest structure. The result is a new data structure which can accept streaming data in an online manner while being used for anomaly detection. Our experiments show that iMondrian forest mostly performs better than isolation forest in batch settings and has better or comparable performance against other batch and online anomaly detection methods.
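The "idea of isolation, using the depth of a node in a tree" can be illustrated with the standard batch isolation forest score, $s(x) = 2^{-E[h(x)]/c(n)}$, where $h(x)$ is the path length of $x$ and $c(n)$ normalizes by the average path length for a sample of size $n$. The sketch below implements that standard batch method only; it is not the iMondrian forest, and it has no online updates. The function names, the dict-based tree layout, and the toy data are assumptions made for illustration.

```python
import math
import random


def c(n: int) -> float:
    """Average path length of an unsuccessful BST search among n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # approximation of H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random dimension at a random threshold."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return {
        "dim": dim,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def path_length(tree, x, depth=0):
    """Depth at which x lands, plus c(size) for unsplit leaves."""
    if "size" in tree:
        return depth + c(tree["size"])
    child = tree["left"] if x[tree["dim"]] < tree["split"] else tree["right"]
    return path_length(child, x, depth + 1)


def build_forest(data, n_trees, sample_size, rng):
    max_depth = math.ceil(math.log2(sample_size))
    return [
        build_tree(rng.sample(data, sample_size), 0, max_depth, rng)
        for _ in range(n_trees)
    ]


def anomaly_score(forest, x, sample_size):
    """Scores near 1 suggest anomalies; scores well below 0.5 suggest inliers."""
    mean_path = sum(path_length(t, x) for t in forest) / len(forest)
    return 2.0 ** (-mean_path / c(sample_size))


rng = random.Random(0)
data = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
data.append((8.0, 8.0))  # one obvious outlier far from the cluster
forest = build_forest(data, n_trees=100, sample_size=64, rng=rng)
inlier_score = anomaly_score(forest, (0.0, 0.0), 64)
outlier_score = anomaly_score(forest, (8.0, 8.0), 64)
```

Points that are easy to separate end up at shallow depths, so `outlier_score` comes out higher than `inlier_score`. According to the abstract, the iMondrian forest applies this depth-based scoring inside a Mondrian forest structure so that streaming data can be accepted online, which this batch sketch does not attempt.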
