Hao Jianye

Differentiable Logic Machines

Feb 24, 2021

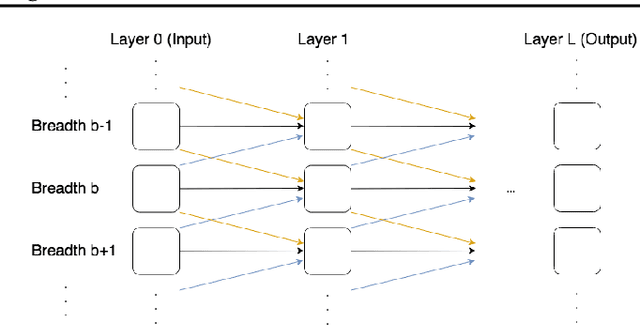

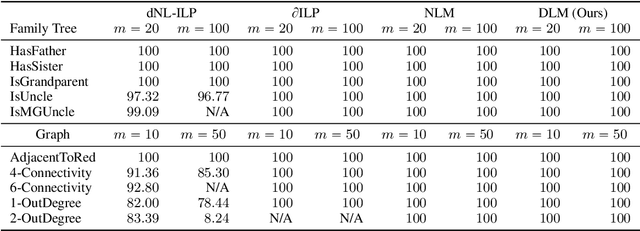

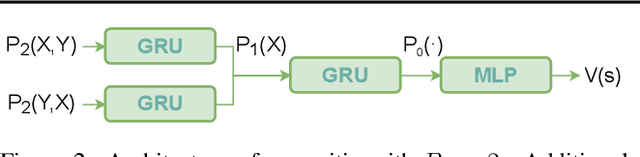

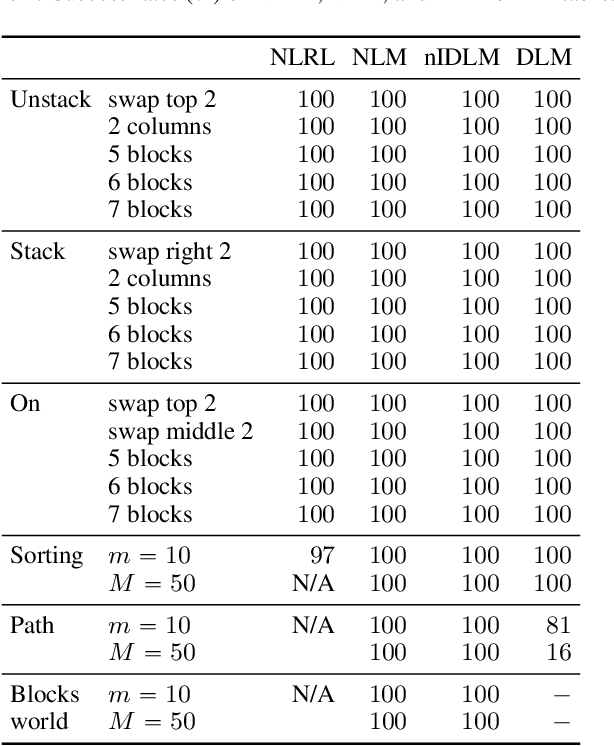

Abstract: The integration of reasoning, learning, and decision-making is key to building more general AI systems. As a step in this direction, we propose a novel neural-logic architecture that can solve both inductive logic programming (ILP) and deep reinforcement learning (RL) problems. Our architecture defines a restricted but expressive continuous space of first-order logic programs by assigning weights to predicates instead of rules. It is therefore fully differentiable and can be trained efficiently with gradient descent. In addition, for the deep RL setting with actor-critic algorithms, we propose a novel, efficient critic architecture. Compared to state-of-the-art methods on both ILP and RL problems, our approach achieves excellent performance while providing a fully interpretable solution and scaling much better, especially during the testing phase.

HEBO: Heteroscedastic Evolutionary Bayesian Optimisation

Dec 07, 2020

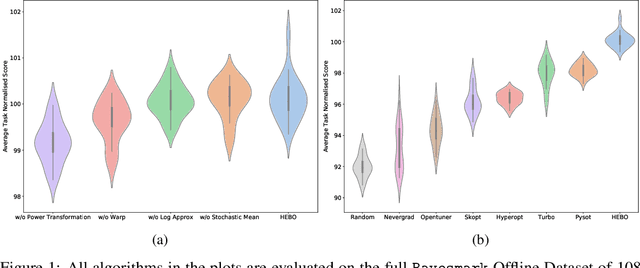

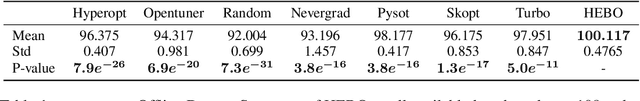

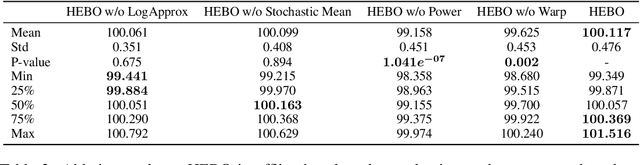

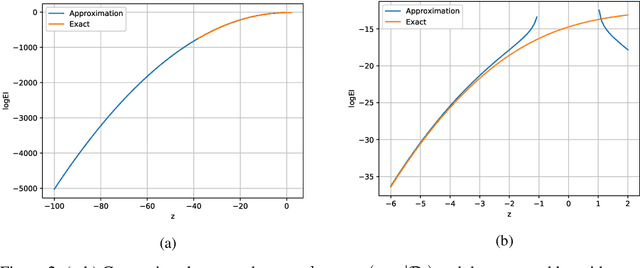

Abstract: We introduce HEBO (Heteroscedastic Evolutionary Bayesian Optimisation), which won the NeurIPS 2020 black-box optimisation competition. We present non-conventional modifications to the surrogate model and the acquisition maximisation process, and show that this combination outperforms all baselines provided by the `Bayesmark` package. Lastly, we perform an ablation study to highlight the components that contributed to the success of HEBO.
