Hanlin Dong

KiteRunner: Language-Driven Cooperative Local-Global Navigation Policy with UAV Mapping in Outdoor Environments

Mar 11, 2025

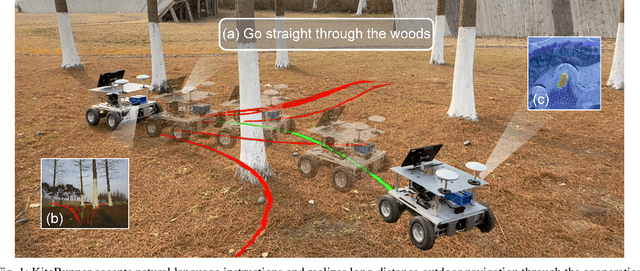

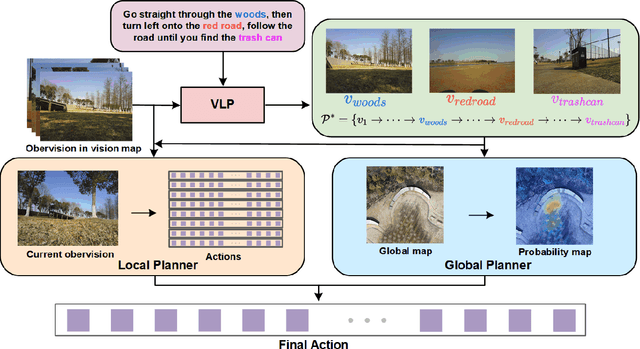

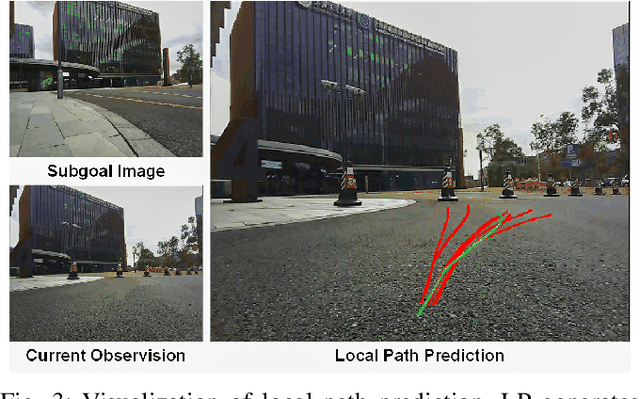

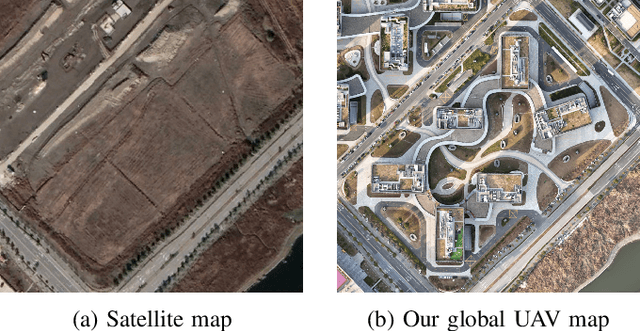

Abstract: Autonomous navigation in open-world outdoor environments faces challenges in integrating dynamic conditions, long-distance spatial reasoning, and semantic understanding. Traditional methods struggle to balance local planning, global planning, and semantic task execution, while existing large language models (LLMs) enhance semantic comprehension but lack spatial reasoning capabilities. Although diffusion models excel in local optimization, they fall short in large-scale long-distance navigation. To address these gaps, this paper proposes KiteRunner, a language-driven cooperative local-global navigation strategy that combines UAV orthophoto-based global planning with diffusion model-driven local path generation for long-distance navigation in open-world scenarios. Our method innovatively leverages real-time UAV orthophotography to construct a global probability map, providing traversability guidance for the local planner, while integrating large models like CLIP and GPT to interpret natural language instructions. Experiments demonstrate that KiteRunner achieves 5.6% and 12.8% improvements in path efficiency over state-of-the-art methods in structured and unstructured environments, respectively, with significant reductions in human interventions and execution time.

Dual-BEV Nav: Dual-layer BEV-based Heuristic Path Planning for Robotic Navigation in Unstructured Outdoor Environments

Jan 30, 2025

Abstract: Path planning with strong environmental adaptability plays a crucial role in robotic navigation in unstructured outdoor environments, especially in the case of low-quality location and map information. The path planning ability of a robot depends on the identification of the traversability of global and local ground areas. In real-world scenarios, the complexity of outdoor open environments makes it difficult for robots to identify the traversability of ground areas that lack a clearly defined structure. Moreover, most existing methods have rarely analyzed the integration of local and global traversability identifications in unstructured outdoor scenarios. To address this problem, we propose a novel method, Dual-BEV Nav, which first introduces Bird's Eye View (BEV) representations into local planning to generate high-quality traversable paths. Then, these paths are projected onto the global traversability map generated by the global BEV planning model to obtain the optimal waypoints. By integrating the traversability from both local and global BEV, we establish a dual-layer BEV heuristic planning paradigm, enabling long-distance navigation in unstructured outdoor environments. We test our approach through both public dataset evaluations and real-world robot deployments, yielding promising results. Compared to baselines, Dual-BEV Nav improved temporal distance prediction accuracy by up to 18.7%. In the real-world deployment, under conditions significantly different from the training set and with notable occlusions in the global BEV, Dual-BEV Nav successfully completed a 65-meter-long outdoor navigation. Further analysis demonstrates that the local BEV representation significantly enhances the rationality of the planning, while the global BEV probability map ensures the robustness of the overall planning.
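The projection step described in this abstract (local candidate paths scored against a global traversability map to pick a waypoint) can be illustrated with a toy sketch. This is not the authors' implementation: the grid representation, the mean-probability score, and the names `path_score` and `select_waypoint` are all assumptions made for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]        # hypothetical global traversability map, values in [0, 1]
Path = List[Tuple[int, int]]    # hypothetical path as (row, col) cells of that map


def path_score(prob_map: Grid, path: Path) -> float:
    """Mean traversability along a path; cells outside the map count as 0."""
    if not path:
        return 0.0
    rows, cols = len(prob_map), len(prob_map[0])
    total = 0.0
    for r, c in path:
        if 0 <= r < rows and 0 <= c < cols:
            total += prob_map[r][c]
    return total / len(path)


def select_waypoint(prob_map: Grid, candidates: List[Path]) -> Tuple[int, int]:
    """Return the end cell of the candidate path with the highest score."""
    usable = [p for p in candidates if p]
    if not usable:
        raise ValueError("no non-empty candidate paths")
    best = max(usable, key=lambda p: path_score(prob_map, p))
    return best[-1]


prob_map = [
    [0.9, 0.9, 0.1],
    [0.9, 0.2, 0.1],
    [0.9, 0.9, 0.9],
]
down_left_edge = [(0, 0), (1, 0), (2, 0)]   # stays on high-probability cells
diagonal = [(0, 0), (1, 1), (2, 2)]         # crosses a low-probability cell
waypoint = select_waypoint(prob_map, [down_left_edge, diagonal])
```

Averaging is only one possible scoring rule; a minimum over the path would penalize a single untraversable cell more strongly.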

Relaxed Rotational Equivariance via $G$-Biases in Vision

Aug 25, 2024

Abstract: Group Equivariant Convolution (GConv) can effectively handle data with rotational symmetry. It assumes uniform and strict rotational symmetry across all features, as the transformations under the specific group. However, real-world data rarely conforms to strict rotational symmetry, a phenomenon commonly referred to as Rotational Symmetry-Breaking in the system or dataset, and GConv cannot adapt effectively to it. Motivated by this, we propose a simple but highly effective method to address this problem, which utilizes a set of learnable biases called the $G$-Biases under the group order to break strict group constraints and achieve Relaxed Rotational Equivariant Convolution (RREConv). We conduct extensive experiments to validate Relaxed Rotational Equivariance on rotational symmetry groups $\mathcal{C}_n$ (e.g. $\mathcal{C}_2$, $\mathcal{C}_4$, and $\mathcal{C}_6$ groups). Further experiments demonstrate that our proposed RREConv-based methods achieve excellent performance compared to existing GConv-based methods in classification and detection tasks on natural image datasets.
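As a rough illustration of the idea of relaxing a strict group constraint with per-element biases, the sketch below builds a $\mathcal{C}_4$ filter bank from one base kernel and optionally adds one bias matrix per rotation. The abstract does not say where the $G$-Biases enter the layer, so adding them to the rotated kernels is an assumption, and `rot90` and `c4_filter_bank` are hypothetical helper names.

```python
from typing import List, Optional

Matrix = List[List[float]]


def rot90(m: Matrix) -> Matrix:
    """Rotate a square matrix by 90 degrees counterclockwise."""
    n = len(m)
    return [[m[j][n - 1 - i] for j in range(n)] for i in range(n)]


def c4_filter_bank(kernel: Matrix, biases: Optional[List[Matrix]] = None) -> List[Matrix]:
    """Return four filters, one per rotation in C4.

    With biases=None the filters are exact rotations of one kernel (strict
    weight tying). With biases given (assumed: one matrix per rotation), each
    rotated kernel is offset independently, which breaks the strict tying.
    """
    n = len(kernel)
    bank = []
    current = [row[:] for row in kernel]
    for k in range(4):
        if biases is None:
            bank.append([row[:] for row in current])
        else:
            bank.append([[current[i][j] + biases[k][i][j] for j in range(n)]
                         for i in range(n)])
        current = rot90(current)
    return bank


kernel = [[1.0, 2.0], [3.0, 4.0]]
zero = [[0.0, 0.0], [0.0, 0.0]]
biases = [zero, [[0.5, 0.0], [0.0, 0.0]], zero, zero]

strict = c4_filter_bank(kernel)
relaxed = c4_filter_bank(kernel, biases)
```

In the strict bank each filter is the rotation of the previous one; in the relaxed bank that relation no longer holds exactly, and with all-zero biases the two banks coincide.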

SBDet: A Symmetry-Breaking Object Detector via Relaxed Rotation-Equivariance

Aug 21, 2024

Abstract: Introducing Group Equivariant Convolution (GConv) empowers models to explore symmetries hidden in visual data, improving their performance. However, in real-world scenarios, objects or scenes often exhibit perturbations of a symmetric system, specifically a deviation from a symmetric architecture, which can be characterized by a non-trivial action of a symmetry group, known as Symmetry-Breaking. Traditional GConv methods are limited by the strict operation rules in the group space, only ensuring features remain strictly equivariant under limited group transformations, making it difficult to adapt to Symmetry-Breaking or non-rigid transformations. Motivated by this, we introduce a novel Relaxed Rotation GConv (R2GConv) with our defined Relaxed Rotation-Equivariant group $\mathbf{R}_4$. Furthermore, we propose a Relaxed Rotation-Equivariant Network (R2Net) as the backbone and further develop the Symmetry-Breaking Object Detector (SBDet) for 2D object detection built upon it. Experiments demonstrate the effectiveness of our proposed R2GConv in natural image classification tasks, and SBDet achieves excellent performance in object detection tasks with improved generalization capabilities and robustness.
