Hadrien Bertrand

TorchXRayVision: A library of chest X-ray datasets and models

Oct 31, 2021

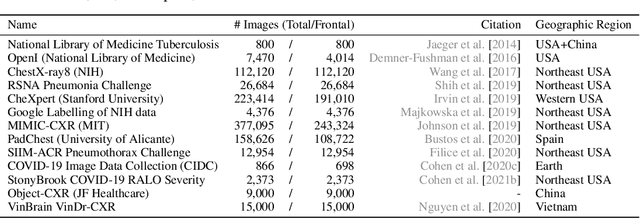

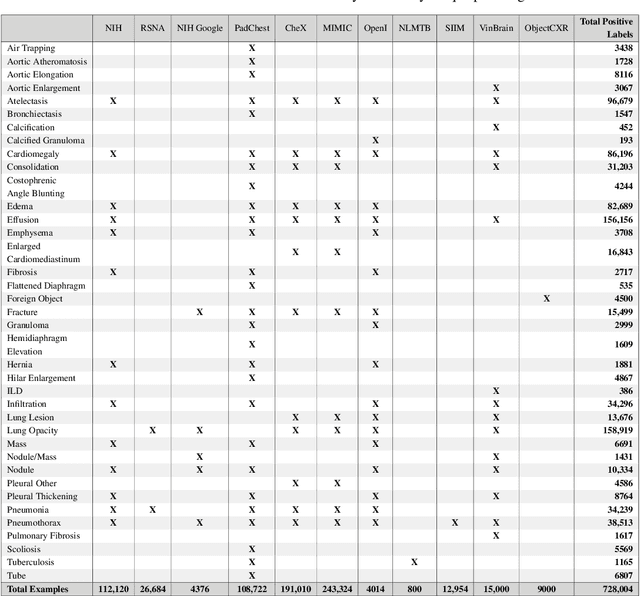

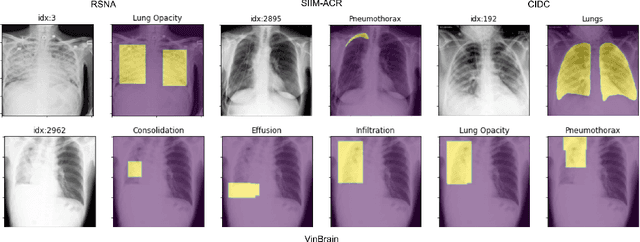

Abstract: TorchXRayVision is an open-source software library for working with chest X-ray datasets and deep learning models. It provides a common interface and common pre-processing chain for a wide set of publicly available chest X-ray datasets. In addition, a number of classification and representation learning models with different architectures, trained on different data combinations, are available through the library to serve as baselines or feature extractors.

Quantifying the Value of Lateral Views in Deep Learning for Chest X-rays

Feb 07, 2020

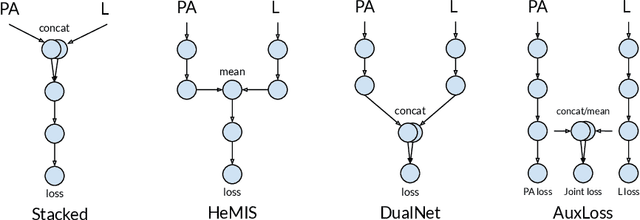

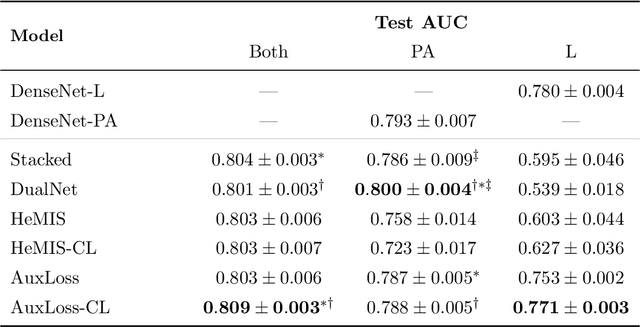

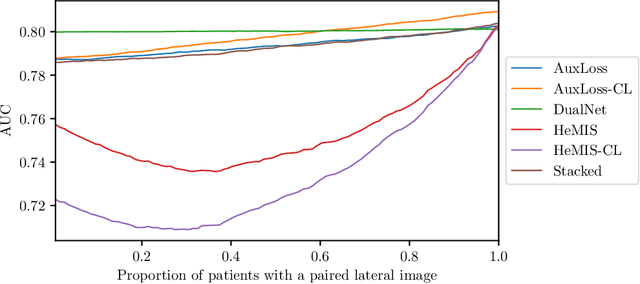

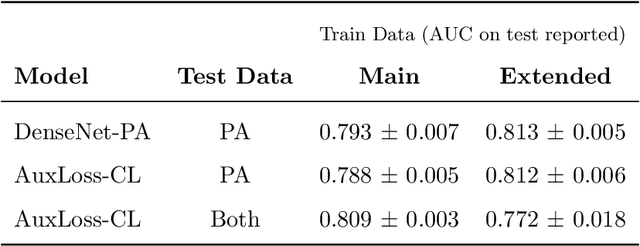

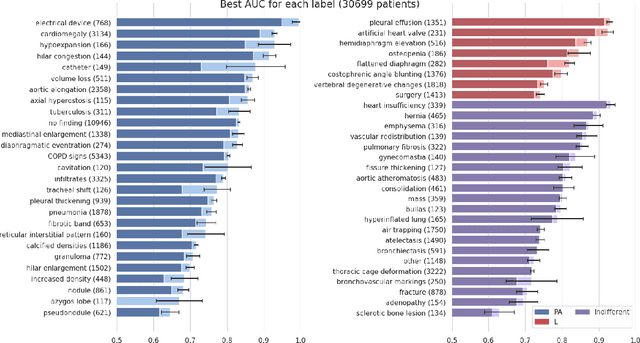

Abstract: Most deep learning models in chest X-ray prediction utilize the posteroanterior (PA) view due to the lack of other views available. PadChest is a large-scale chest X-ray dataset that has almost 200 labels and multiple views available. In this work, we use PadChest to explore multiple approaches to merging the PA and lateral views for predicting the radiological labels associated with the X-ray image. We find that different merging methods lead the model to utilize the lateral view differently. We also find that including the lateral view increases performance for 32 labels in the dataset, while being neutral for the others. The increase in overall performance is comparable to the one obtained by using only the PA view with twice the number of patients in the training set.

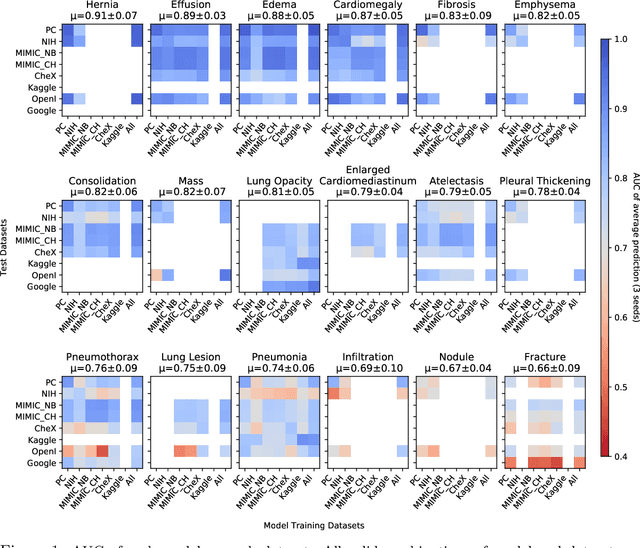

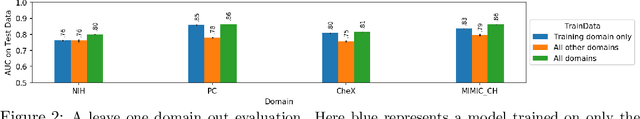

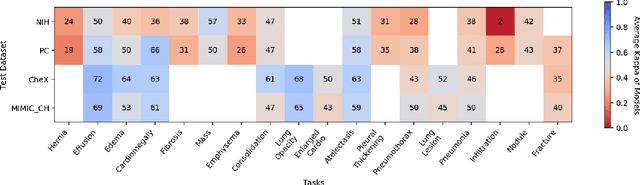

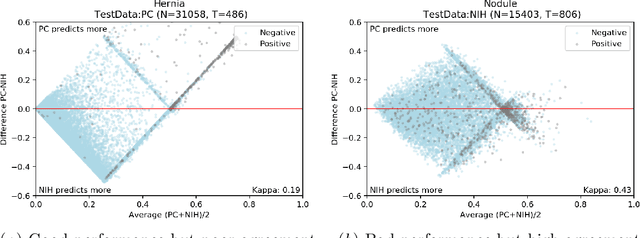

On the limits of cross-domain generalization in automated X-ray prediction

Feb 06, 2020

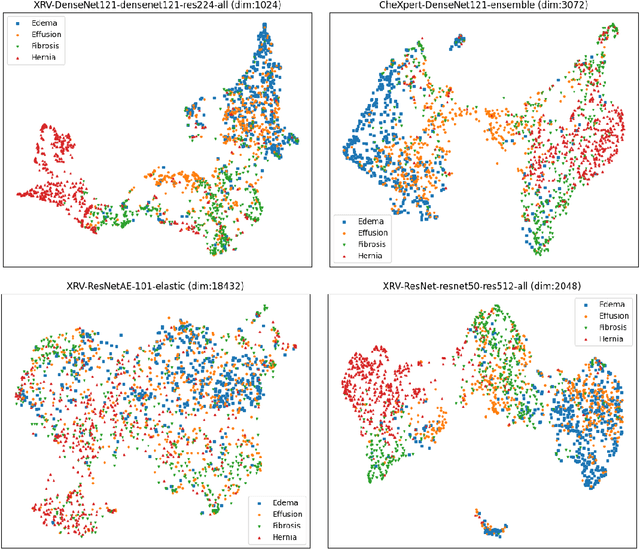

Abstract: This large-scale study focuses on quantifying which X-ray diagnostic prediction tasks generalize well across multiple different datasets. We present evidence that the issue of generalization is not due to a shift in the images but instead a shift in the labels. We study the cross-domain performance, agreement between models, and model representations. We find interesting discrepancies between performance and agreement: some models that both achieve good performance disagree in their predictions, while other models agree yet achieve poor performance. We also test for concept similarity by regularizing a network to group tasks across multiple datasets together and observe variation across the tasks.
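The distinction between performance and agreement can be illustrated with a small sketch. It assumes that agreement is measured as the fraction of examples on which two models make the same binary prediction, which may differ from the metric used in the paper. The labels and predictions are toy values made up for illustration, not results from the study.

```python
def accuracy(labels, preds):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)


def agreement(preds_a, preds_b):
    """Fraction of examples on which two models predict the same class."""
    return sum(int(a == b) for a, b in zip(preds_a, preds_b)) / len(preds_a)


# Toy ground truth and predictions (hypothetical values).
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

# Two models with equally good accuracy that err on different examples.
model_a = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
model_b = [0, 1, 1, 1, 1, 1, 0, 0, 0, 0]

# Two models that mostly agree with each other but are both poor.
model_c = [0, 0, 0, 1, 1, 1, 1, 1, 0, 0]
model_d = [0, 0, 0, 0, 1, 1, 1, 1, 0, 0]

print("a vs b:", accuracy(labels, model_a), accuracy(labels, model_b),
      agreement(model_a, model_b))
print("c vs d:", accuracy(labels, model_c), accuracy(labels, model_d),
      agreement(model_c, model_d))
```

In this toy setup, models a and b share the same accuracy yet agree on fewer examples than models c and d, which agree closely while both performing poorly.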

Do Lateral Views Help Automated Chest X-ray Predictions?

Apr 17, 2019

Abstract: Most convolutional neural networks in chest radiology use only the frontal posteroanterior (PA) view to make a prediction. However, the lateral view is known to help the diagnosis of certain diseases and conditions. The recently released PadChest dataset contains paired PA and lateral views, allowing us to study for which diseases and conditions the performance of a neural network improves when provided a lateral X-ray view as opposed to a frontal posteroanterior (PA) view. Using a simple DenseNet model, we find that using the lateral view increases the AUC of 8 of the 56 labels in our data and achieves the same performance as the PA view for 21 of the labels. We find that using the PA and lateral views jointly doesn't trivially lead to an increase in performance but suggest further investigation.
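A per-label AUC comparison of this kind might look like the following minimal sketch. It computes AUC with the pairwise rank (Mann-Whitney) formulation and reports which view scores higher for each label. The function names, the `tol` parameter, the label names, and the scores are hypothetical and chosen for illustration; they are not taken from the paper or its code.

```python
def auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def compare_views(targets, pa_scores, lateral_scores, tol=0.0):
    """Return, per label, which view has the higher AUC (or 'same')."""
    result = {}
    for name, y in targets.items():
        diff = auc(y, lateral_scores[name]) - auc(y, pa_scores[name])
        if diff > tol:
            result[name] = "lateral"
        elif diff < -tol:
            result[name] = "pa"
        else:
            result[name] = "same"
    return result


# Toy data for two hypothetical labels (made-up scores).
targets = {"label_1": [0, 0, 1, 1], "label_2": [0, 1, 0, 1]}
pa_scores = {"label_1": [0.1, 0.4, 0.35, 0.8], "label_2": [0.2, 0.9, 0.3, 0.7]}
lateral_scores = {"label_1": [0.1, 0.2, 0.7, 0.9], "label_2": [0.1, 0.8, 0.4, 0.6]}

print(compare_views(targets, pa_scores, lateral_scores))
```

A real comparison would also need confidence intervals or repeated runs before calling two AUCs "the same"; the `tol` threshold here is only a stand-in for that.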

Classification of MRI data using Deep Learning and Gaussian Process-based Model Selection

Jan 16, 2017

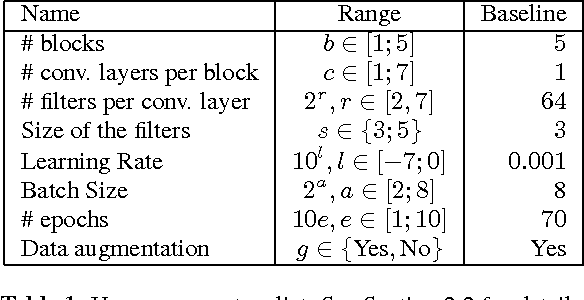

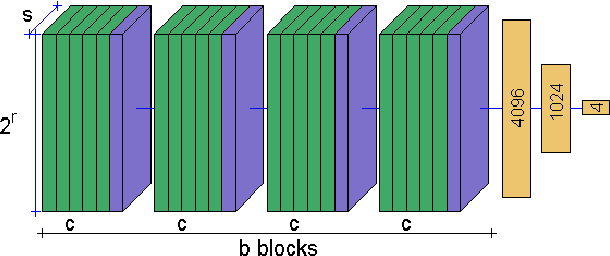

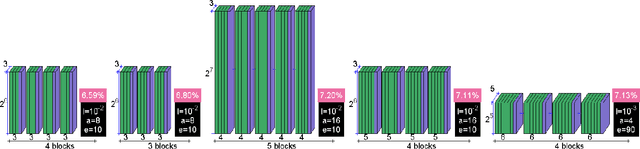

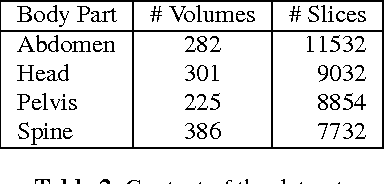

Abstract: The classification of MRI images according to the anatomical field of view is a necessary task to solve when faced with the increasing quantity of medical images. In parallel, advances in deep learning make it a suitable tool for computer vision problems. Using a common architecture (such as AlexNet) provides quite good results, but not sufficient for clinical use. Improving the model is not an easy task, due to the large number of hyper-parameters governing both the architecture and the training of the network, and to the limited understanding of their relevance. Since an exhaustive search is not tractable, we propose to optimize the network first by random search, and then by an adaptive search based on Gaussian Processes and Probability of Improvement. Applying this method to a large and varied MRI dataset, we show a substantial improvement between the baseline network and the final one (up to 20% for the most difficult classes).
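The Probability of Improvement criterion mentioned above can be sketched as follows. Given a Gaussian posterior with mean `mu` and standard deviation `sigma` at a candidate configuration, it is the probability that the candidate beats the best score seen so far. The sketch assumes the posterior means and standard deviations come from a Gaussian Process fitted elsewhere; the candidate names and numbers are hypothetical, and the `xi` margin is a common optional parameter rather than something stated in the abstract.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def probability_of_improvement(mu, sigma, best, xi=0.0):
    """P(f(x) > best + xi) when f(x) ~ N(mu, sigma^2), for maximization."""
    if sigma <= 0.0:
        # No uncertainty left: improvement is either certain or impossible.
        return 1.0 if mu > best + xi else 0.0
    return normal_cdf((mu - best - xi) / sigma)


def pick_next(candidates, best, xi=0.0):
    """Return the name of the candidate with the highest PI."""
    return max(
        candidates,
        key=lambda c: probability_of_improvement(c[1], c[2], best, xi),
    )[0]


# Hypothetical (name, posterior mean, posterior std) triples, as a GP
# fitted on earlier random-search results might produce them.
candidates = [
    ("config_a", 0.88, 0.01),
    ("config_b", 0.90, 0.05),
    ("config_c", 0.85, 0.10),
]
best_so_far = 0.90  # best validation score observed so far (made up)

next_config = pick_next(candidates, best_so_far)
print(next_config)
```

With `xi=0`, Probability of Improvement tends to favor candidates close to the current best; a positive `xi` pushes the search toward more uncertain regions.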
