H. Wang

Learning novel representations of variable sources from multi-modal $\textit{Gaia}$ data via autoencoders

May 22, 2025

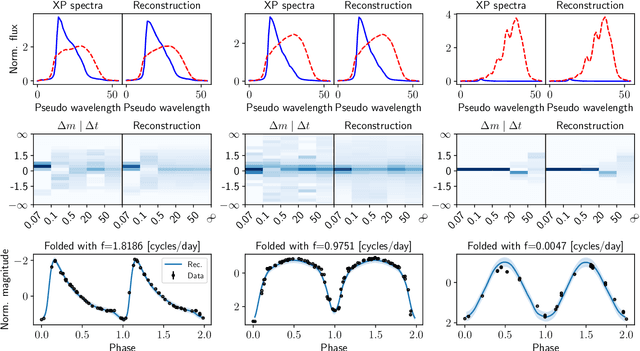

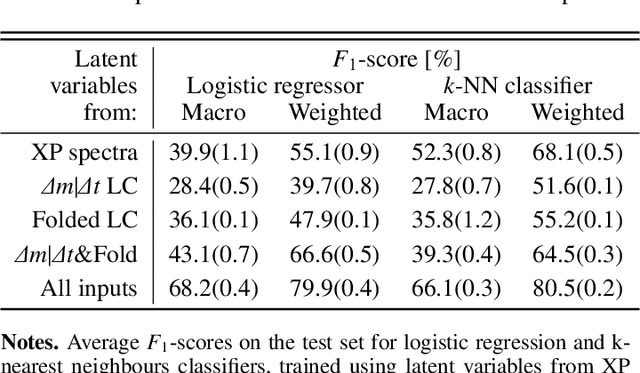

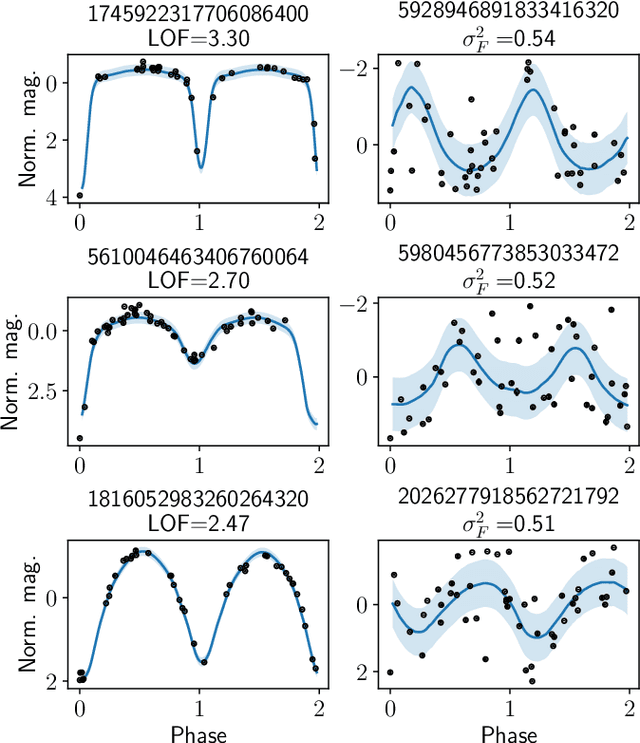

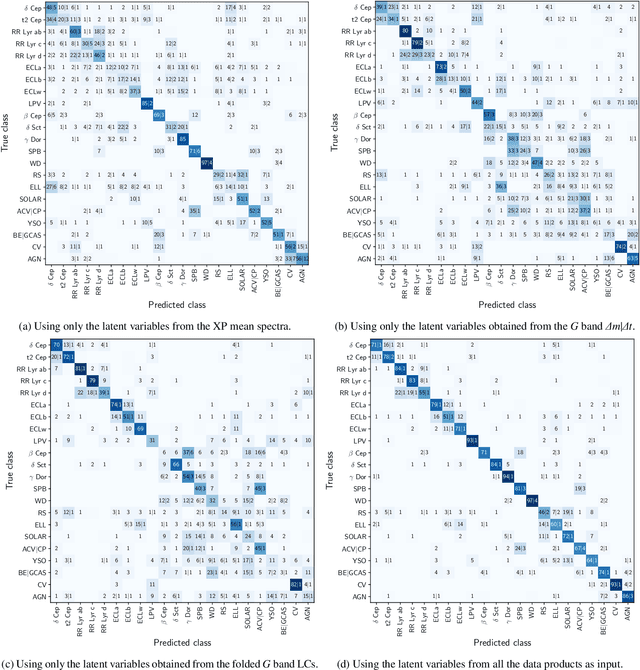

Abstract:Gaia Data Release 3 (DR3) published for the first time epoch photometry, BP/RP (XP) low-resolution mean spectra, and supervised classification results for millions of variable sources. This extensive dataset offers a unique opportunity to study their variability by combining multiple Gaia data products. In preparation for DR4, we propose and evaluate a machine learning methodology capable of ingesting multiple Gaia data products to achieve an unsupervised classification of stellar and quasar variability. A dataset of 4 million Gaia DR3 sources is used to train three variational autoencoders (VAE), which are artificial neural networks (ANNs) designed for data compression and generation. One VAE is trained on Gaia XP low-resolution spectra, another on a novel approach based on the distribution of magnitude differences in the Gaia G band, and the third on folded Gaia G band light curves. Each Gaia source is compressed into 15 numbers, representing the coordinates in a 15-dimensional latent space generated by combining the outputs of these three models. The learned latent representation produced by the ANN effectively distinguishes between the main variability classes present in Gaia DR3, as demonstrated through both supervised and unsupervised classification analysis of the latent space. The results highlight a strong synergy between light curves and low-resolution spectral data, emphasising the benefits of combining the different Gaia data products. A two-dimensional projection of the latent variables reveals numerous overdensities, most of which strongly correlate with astrophysical properties, showing the potential of this latent space for astrophysical discovery. We show that the properties of our novel latent representation make it highly valuable for variability analysis tasks, including classification, clustering and outlier detection.
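Two of the inputs named in this abstract, folded light curves and magnitude differences, can be illustrated with a minimal sketch. It assumes the period is already known and uses plain NumPy. The function names `fold_light_curve` and `magnitude_differences` are hypothetical, and the paper's actual preprocessing may differ.

```python
import numpy as np

def fold_light_curve(times, mags, period):
    """Fold a light curve on a known period.

    Returns phases in [0, 1) and the magnitudes, both sorted by phase.
    """
    times = np.asarray(times, dtype=float)
    mags = np.asarray(mags, dtype=float)
    phase = np.mod(times, period) / period
    order = np.argsort(phase, kind="stable")
    return phase[order], mags[order]

def magnitude_differences(mags):
    """All pairwise magnitude differences m_j - m_i with j > i.

    Assumption: a histogram of these values stands in for the
    'distribution of magnitude differences' named in the abstract.
    """
    mags = np.asarray(mags, dtype=float)
    i, j = np.triu_indices(len(mags), k=1)
    return mags[j] - mags[i]

# Toy observations (made-up values, not Gaia data).
phase, folded = fold_light_curve([0.0, 0.5, 1.25, 2.75],
                                 [10.0, 11.0, 12.0, 13.0], period=1.0)
diffs = magnitude_differences([1.0, 2.0, 4.0])
```

Folding maps every observation onto a single cycle, so a periodic source traces one coherent curve regardless of when each point was observed.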

Foresee and Act Ahead: Task Prediction and Pre-Scheduling Enabled Efficient Robotic Warehousing

Dec 09, 2024

Abstract:In warehousing systems, to enhance logistical efficiency amid surging demand volumes, much focus is placed on how to reasonably allocate tasks to robots. However, the robots' labor is still inevitably wasted to some extent. In response, we propose a pre-scheduling enhanced warehousing framework that predicts task flow and acts in advance. It consists of task flow prediction and hybrid task allocation. For task prediction, we notice that it is possible to provide a spatio-temporal representation of task flow, so we introduce a periodicity-decoupled mechanism tailored to the generation patterns of aggregated orders, and then further extract spatial features of the task distribution with a novel combination of graph structures. In hybrid task allocation, we consider the known tasks and predicted future tasks simultaneously and optimize the allocation dynamically. In addition, we consider factors such as predicted task uncertainty and sector-level efficiency evaluation in warehousing to realize more balanced and rational allocations. We validate our task prediction model on datasets derived from real factories, achieving SOTA performance. Furthermore, we implement our complete scheduling system in a real-world robotic warehouse for months of lifelong validation, demonstrating improvements of more than 50% in key warehousing metrics such as the empty running rate.

Carbon price fluctuation prediction using blockchain information A new hybrid machine learning approach

Nov 05, 2024

Abstract:In this study, a novel hybrid machine learning approach is proposed for carbon price fluctuation prediction. Specifically, a research framework integrating DILATED Convolutional Neural Networks (CNN) and a Long Short-Term Memory (LSTM) neural network is proposed. The advantage of the combined framework is that it makes feature extraction more efficient. Then, based on the DILATED CNN-LSTM framework, L1 and L2 parameter norm penalties are adopted as regularization methods for prediction. Given the high correlation between energy indicator prices and blockchain information reported in previous literature, we primarily include indicators related to blockchain information through the regularization process. Based on the above methods, this paper uses a dataset to carry out the carbon price prediction. The experimental results show that the DILATED CNN-LSTM framework is superior to the traditional CNN-LSTM architecture, and that blockchain information can effectively predict the price. Among the parameter norm penalties, Ridge Regression (RR) as L2 regularization performs better than the Smoothly Clipped Absolute Deviation Penalty (SCAD) as L1 regularization in price forecasting. Thus, the proposed RR-DILATED CNN-LSTM approach can effectively and accurately predict the fluctuation trend of the carbon price. Therefore, the new forecasting methods and theoretical ecology proposed in this study provide a new basis for trend prediction and for evaluating digital asset policy, represented by the carbon price, for both academia and practitioners.
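To make the dilated convolution concrete, here is a minimal NumPy sketch of a single one-dimensional dilated filter with no padding. The function name `dilated_conv1d` and the toy inputs are hypothetical. This is not the paper's architecture, which stacks such layers with an LSTM.

```python
import numpy as np

def dilated_conv1d(x, w, dilation):
    """Apply one dilated filter to a 1-D series (no padding).

    Output element t is sum_k w[k] * x[t + k * dilation], i.e. the
    cross-correlation form commonly used in deep learning libraries.
    """
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    span = (len(w) - 1) * dilation  # distance covered by the filter taps
    out = np.empty(len(x) - span)
    for t in range(len(out)):
        out[t] = sum(w[k] * x[t + k * dilation] for k in range(len(w)))
    return out

# Toy price series (made-up values): a two-tap filter with dilation 2
# compares each value with the one two steps later.
result = dilated_conv1d([1, 2, 3, 4, 5, 6], [1, -1], dilation=2)
```

Increasing the dilation widens the receptive field without adding weights, which is one common reason for preferring dilated over ordinary convolutions on time series.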

Probing many-body Bell correlation depth with superconducting qubits

Jun 25, 2024

Abstract:Quantum nonlocality describes a stronger form of quantum correlation than that of entanglement. It refutes Einstein's belief of local realism and is among the most distinctive and enigmatic features of quantum mechanics. It is a crucial resource for achieving quantum advantages in a variety of practical applications, ranging from cryptography and certified random number generation via self-testing to machine learning. Nevertheless, the detection of nonlocality, especially in quantum many-body systems, is notoriously challenging. Here, we report an experimental certification of genuine multipartite Bell correlations, which signal nonlocality in quantum many-body systems, up to 24 qubits with a fully programmable superconducting quantum processor. In particular, we employ energy as a Bell correlation witness and variationally decrease the energy of a many-body system across a hierarchy of thresholds, below which an increasing Bell correlation depth can be certified from experimental data. As an illustrating example, we variationally prepare the low-energy state of a two-dimensional honeycomb model with 73 qubits and certify its Bell correlations by measuring an energy that surpasses the corresponding classical bound with up to 48 standard deviations. In addition, we variationally prepare a sequence of low-energy states and certify their genuine multipartite Bell correlations up to 24 qubits via energies measured efficiently by parity oscillation and multiple quantum coherence techniques. Our results establish a viable approach for preparing and certifying multipartite Bell correlations, which provide not only a finer benchmark beyond entanglement for quantum devices, but also a valuable guide towards exploiting multipartite Bell correlation in a wide spectrum of practical applications.

VCounselor: A Psychological Intervention Chat Agent Based on a Knowledge-Enhanced Large Language Model

Mar 20, 2024

Abstract:Conversational artificial intelligence can already independently engage in brief conversations with clients with psychological problems and provide evidence-based psychological interventions. The main objective of this study is to improve the effectiveness and credibility of the large language model in psychological intervention by creating a specialized agent, VCounselor, to address the limitations observed in popular large language models such as ChatGPT in domain applications. We achieved this goal by proposing a new affective interaction structure and knowledge-enhancement structure. To evaluate VCounselor, this study compared the general large language model, the fine-tuned large language model, and VCounselor's knowledge-enhanced large language model. The general large language model and the fine-tuned large language model were also provided with an avatar so that they could be compared with VCounselor as agents. The comparison results indicated that the affective interaction structure and knowledge-enhancement structure of VCounselor significantly improved the effectiveness and credibility of the psychological intervention, and that VCounselor significantly promoted positive tendencies in clients' emotions. The conclusion of this study strongly supports that VCounselor has a significant advantage in providing psychological support to clients, being able to analyze the patient's problems with relative accuracy and provide professional-level advice that enhances support for clients.

Application of quantum-inspired generative models to small molecular datasets

Apr 21, 2023

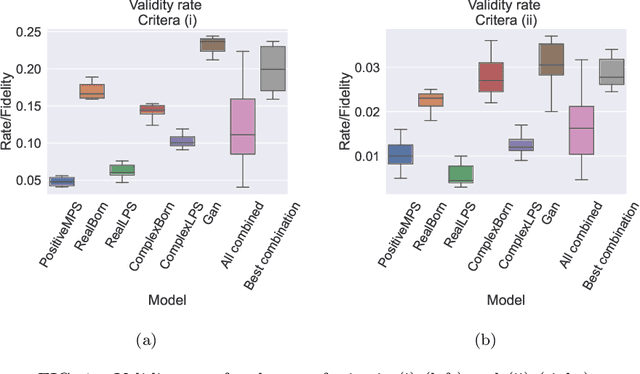

Abstract:Quantum and quantum-inspired machine learning has emerged as a promising and challenging research field due to the increased popularity of quantum computing, especially with near-term devices. Theoretical contributions point toward generative modeling as a promising direction to realize the first examples of real-world quantum advantages from these technologies. A few empirical studies also demonstrate such potential, especially when considering quantum-inspired models based on tensor networks. In this work, we apply tensor-network-based generative models to the problem of molecular discovery. In our approach, we utilize two small molecular datasets: a subset of $4989$ molecules from the QM9 dataset and a small in-house dataset of $516$ validated antioxidants from TotalEnergies. We compare several tensor network models against a generative adversarial network using different sample-based metrics, which reflect their learning performances on each task, and multiobjective performances using $3$ relevant molecular metrics per task. We also combined the output of the models and demonstrate empirically that such a combination can be beneficial, advocating for the unification of classical and quantum(-inspired) generative learning.

Towards emotion recognition for virtual environments: an evaluation of EEG features on benchmark dataset

Oct 25, 2022

Abstract:One of the challenges in virtual environments is the difficulty users have in interacting with these increasingly complex systems. Ultimately, endowing machines with the ability to perceive users' emotions will enable a more intuitive and reliable interaction. Consequently, using the electroencephalogram as a bio-signal sensor, the affective state of a user can be modelled and subsequently utilised in order to achieve a system that can recognise and react to the user's emotions. This paper investigates features extracted from electroencephalogram signals for the purpose of affective state modelling based on Russell's Circumplex Model. Investigations are presented that aim to provide the foundation for future work in modelling user affect to enhance interaction experience in virtual environments. The DEAP dataset was used within this work, along with a Support Vector Machine and Random Forest, which yielded reasonable classification accuracies for Valence and Arousal using feature vectors based on statistical measurements, band power from the beta and three other EEG frequency bands, and High Order Crossing of the EEG signal.
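A band power feature of the kind mentioned in this abstract can be sketched with a plain FFT periodogram. Everything specific here is an assumption for illustration: the function name `band_power`, the 128 Hz sampling rate, the band edges (13 to 30 Hz for beta, 4 to 8 Hz for theta), and the synthetic test signal. None of these values are taken from the paper.

```python
import numpy as np

def band_power(signal, fs, f_lo, f_hi):
    """Sum of one-sided periodogram power in the band [f_lo, f_hi).

    Uncalibrated sketch: useful for comparing bands, not as an
    absolute physical power estimate.
    """
    signal = np.asarray(signal, dtype=float)
    n = len(signal)
    spectrum = np.fft.rfft(signal - signal.mean())  # remove DC offset first
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    power = np.abs(spectrum) ** 2 / n ** 2
    mask = (freqs >= f_lo) & (freqs < f_hi)
    return power[mask].sum()

# Synthetic 4-second signal: a 20 Hz sine, which lies inside the assumed beta band.
fs = 128
t = np.arange(4 * fs) / fs
eeg_like = np.sin(2 * np.pi * 20 * t)

beta_power = band_power(eeg_like, fs, 13, 30)
theta_power = band_power(eeg_like, fs, 4, 8)
```

In a feature vector, one such value per band and per channel would typically be concatenated with the statistical measurements before being passed to the classifier.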

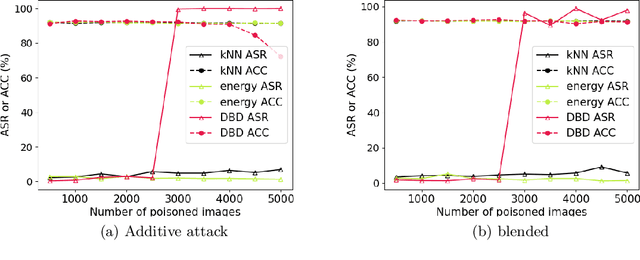

Training set cleansing of backdoor poisoning by self-supervised representation learning

Oct 19, 2022

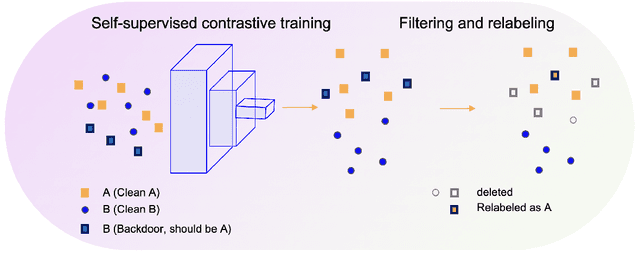

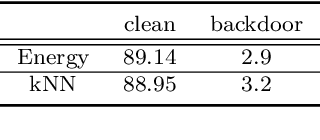

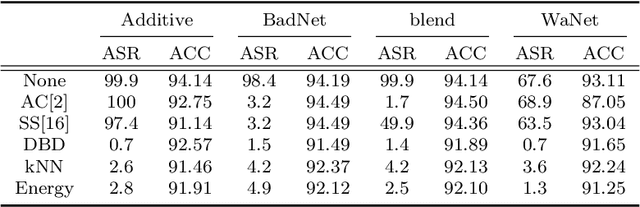

Abstract:A backdoor or Trojan attack is an important type of data poisoning attack against deep neural network (DNN) classifiers, wherein the training dataset is poisoned with a small number of samples that each possess the backdoor pattern (usually a pattern that is either imperceptible or innocuous) and which are mislabeled to the attacker's target class. When trained on a backdoor-poisoned dataset, a DNN behaves normally on most benign test samples but makes incorrect predictions to the target class when the test sample has the backdoor pattern incorporated (i.e., contains a backdoor trigger). Here we focus on image classification tasks and show that supervised training may build a stronger association between the backdoor pattern and the associated target class than that between normal features and the true class of origin. By contrast, self-supervised representation learning ignores the labels of samples and learns a feature embedding based on images' semantic content. We thus propose to use unsupervised representation learning to avoid emphasising backdoor-poisoned training samples and learn a similar feature embedding for samples of the same class. Using a feature embedding found by self-supervised representation learning, a data cleansing method, which combines sample filtering and re-labeling, is developed. Experiments on CIFAR-10 benchmark datasets show that our method achieves state-of-the-art performance in mitigating backdoor attacks.

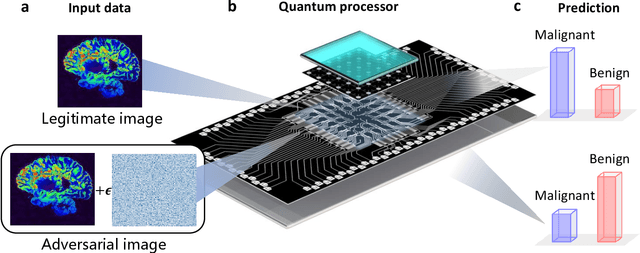

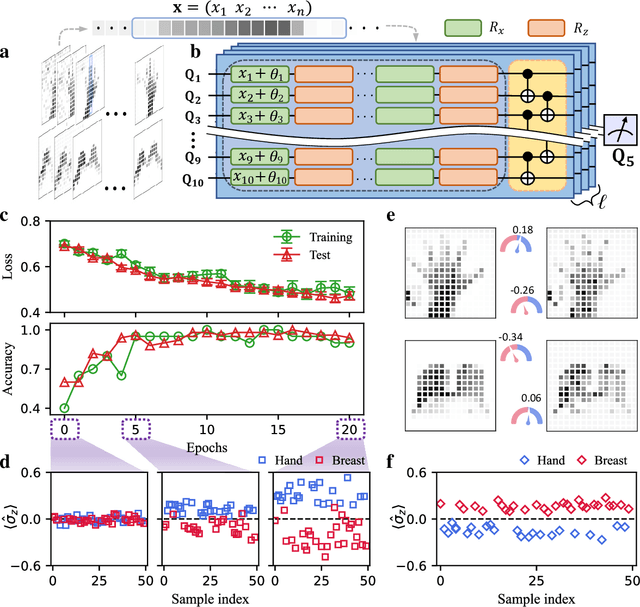

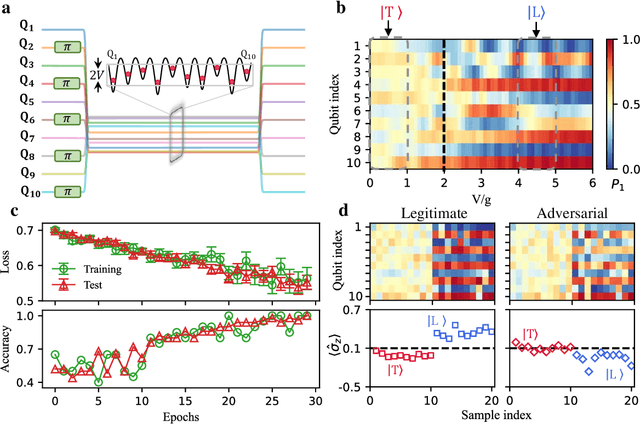

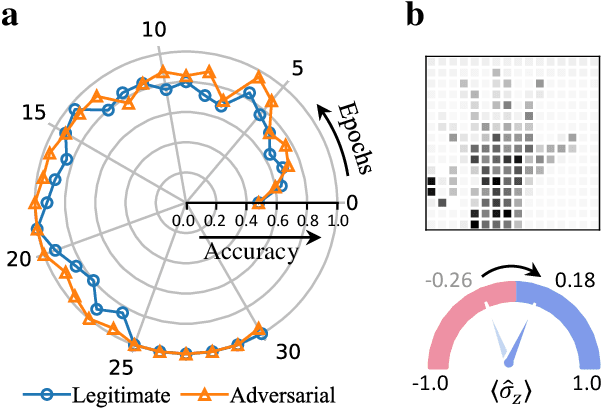

Experimental quantum adversarial learning with programmable superconducting qubits

Apr 04, 2022

Abstract:Quantum computing promises to enhance machine learning and artificial intelligence. Different quantum algorithms have been proposed to improve a wide spectrum of machine learning tasks. Yet, recent theoretical works show that, similar to traditional classifiers based on deep classical neural networks, quantum classifiers would suffer from the vulnerability problem: adding tiny carefully-crafted perturbations to the legitimate original data samples would facilitate incorrect predictions at a notably high confidence level. This will pose serious problems for future quantum machine learning applications in safety and security-critical scenarios. Here, we report the first experimental demonstration of quantum adversarial learning with programmable superconducting qubits. We train quantum classifiers, which are built upon variational quantum circuits consisting of ten transmon qubits featuring average lifetimes of 150 $\mu$s, and average fidelities of simultaneous single- and two-qubit gates above 99.94% and 99.4% respectively, with both real-life images (e.g., medical magnetic resonance imaging scans) and quantum data. We demonstrate that these well-trained classifiers (with testing accuracy up to 99%) can be practically deceived by small adversarial perturbations, whereas an adversarial training process would significantly enhance their robustness to such perturbations. Our results reveal experimentally a crucial vulnerability aspect of quantum learning systems under adversarial scenarios and demonstrate an effective defense strategy against adversarial attacks, which provide a valuable guide for quantum artificial intelligence applications with both near-term and future quantum devices.

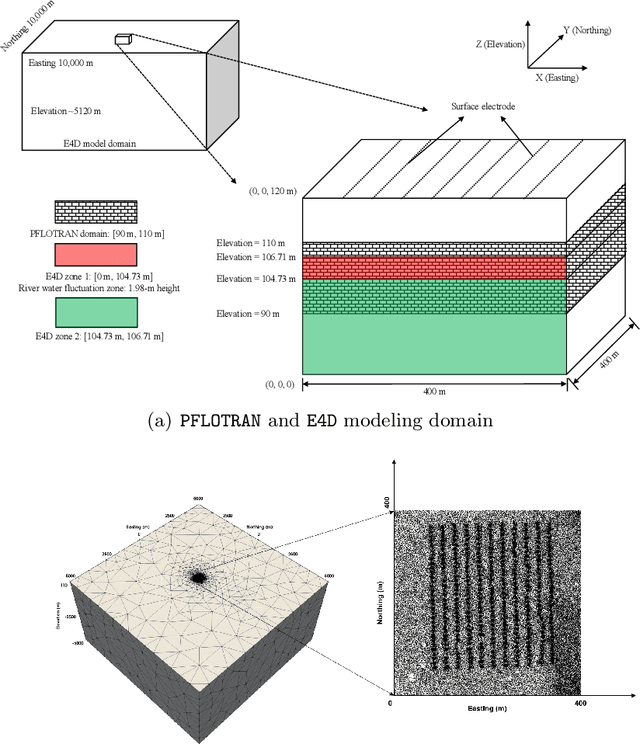

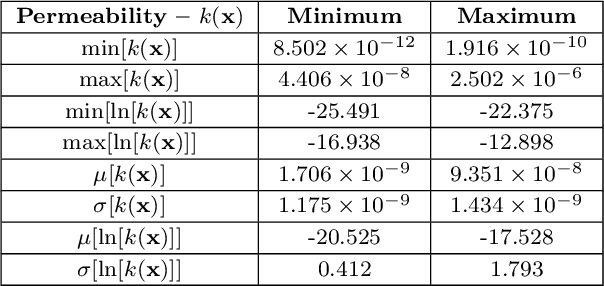

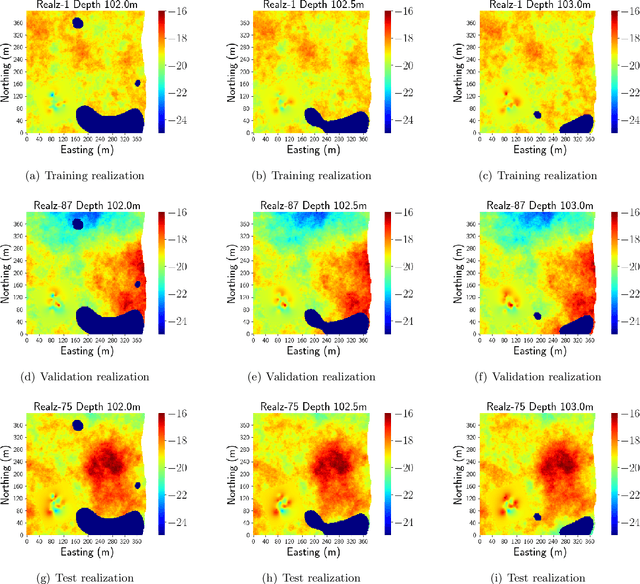

Deep Learning to Estimate Permeability using Geophysical Data

Oct 08, 2021

Abstract:Time-lapse electrical resistivity tomography (ERT) is a popular geophysical method to estimate three-dimensional (3D) permeability fields from electrical potential difference measurements. Traditional inversion and data assimilation methods are used to ingest this ERT data into hydrogeophysical models to estimate permeability. Due to ill-posedness and the curse of dimensionality, existing inversion strategies provide poor estimates and low resolution of the 3D permeability field. Recent advances in deep learning provide us with powerful algorithms to overcome this challenge. This paper presents a deep learning (DL) framework to estimate the 3D subsurface permeability from time-lapse ERT data. To test the feasibility of the proposed framework, we train DL-enabled inverse models on simulation data. Subsurface process models based on hydrogeophysics are used to generate this synthetic data for deep learning analyses. Results show that the proposed weakly supervised learning can capture salient spatial features in the 3D permeability field. Quantitatively, the average mean squared error (in terms of the natural log) on the strongly labeled training, validation, and test datasets is less than 0.5. The $R^2$-score (global metric) is greater than 0.75, and the percent error in each cell (local metric) is less than 10%. Finally, an added benefit in terms of computational cost is that the proposed DL-based inverse model is at least $O(10^4)$ times faster than running a forward model. Note that traditional inversion may require multiple forward model simulations (e.g., on the order of 10 to 1000), which are very expensive. These computational savings ($O(10^5)$ to $O(10^7)$) make the proposed DL-based inverse model attractive for subsurface imaging and real-time ERT monitoring applications, owing to its fast yet reasonably accurate estimates of the permeability field.
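The quoted range of computational savings follows from multiplying the speedup over a single forward run by the number of forward runs a traditional inversion needs. The short sketch below reproduces that arithmetic. The helper name `inversion_speedup` is hypothetical, and the figures are order-of-magnitude values from the abstract, not measured timings.

```python
def inversion_speedup(single_run_speedup, forward_runs):
    """Speedup over a traditional inversion that needs `forward_runs`
    forward simulations, given the speedup over one forward run."""
    return single_run_speedup * forward_runs

# Order-of-magnitude inputs from the abstract: about 10^4 times faster
# than one forward model, and 10 to 1000 forward runs per inversion.
low = inversion_speedup(10**4, 10)
high = inversion_speedup(10**4, 1000)
```

With these inputs, `low` is 10^5 and `high` is 10^7, matching the stated range.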
