Guangzhi Ma

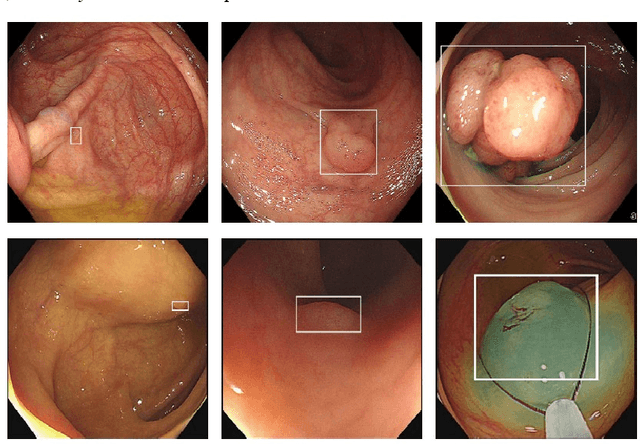

YOLO-OB: An improved anchor-free real-time multiscale colon polyp detector in colonoscopy

Dec 14, 2023

Abstract: Colon cancer is expected to become the second leading cause of cancer death in the United States in 2023. Although colonoscopy is one of the most effective methods for early prevention of colon cancer, up to 30% of polyps may be missed by endoscopists, thereby increasing patients' risk of developing colon cancer. Deep neural networks have proven to be an effective means of enhancing the polyp detection rate. However, the variation in polyp size brings the following problems: (1) it is difficult to design an efficient and sufficient multi-scale feature fusion structure; (2) matching polyps of different sizes with fixed-size anchor boxes is challenging. These problems reduce the performance of polyp detection and also lower the model's training and detection efficiency. To address these challenges, this paper proposes a new model called YOLO-OB. Specifically, we developed a bidirectional multiscale feature fusion structure, BiSPFPN, which enhances the feature fusion capability across different depths of a CNN. We employed the ObjectBox detection head, which uses a center-based anchor-free box regression strategy that can detect polyps of different sizes on feature maps of any scale. Experiments on the public dataset SUN and the self-collected colon polyp dataset Union demonstrated that the proposed model significantly improved various performance metrics of polyp detection, especially the recall rate. Compared to the state-of-the-art results on the public dataset SUN, the proposed method achieved a 6.73 percentage point increase in recall rate, from 91.5% to 98.23%. Furthermore, YOLO-OB achieved real-time polyp detection at 39 frames per second on an RTX3090 graphics card. The implementation of this paper can be found here: https://github.com/seanyan62/YOLO-OB.
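As a brief illustration of the recall metric quoted in this abstract, the sketch below computes recall from true-positive and false-negative counts and the gap between the two reported rates (91.5% and 98.23%). The function name `recall` and the example counts are hypothetical and chosen only for illustration; they are not taken from the paper or its repository.

```python
def recall(true_positives: int, false_negatives: int) -> float:
    """Recall = TP / (TP + FN): the fraction of real polyps that were detected."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("recall is undefined when there are no positive samples")
    return true_positives / total


# Hypothetical counts, for illustration only: 915 of 1000 polyps detected.
example_recall = recall(true_positives=915, false_negatives=85)

# Gap between the two recall rates reported in the abstract, in percentage points.
improvement_points = round(98.23 - 91.5, 2)
```

Note that the gap is an absolute difference in percentage points, not a relative improvement over the 91.5% baseline.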

Multi-class Classification with Fuzzy-feature Observations: Theory and Algorithms

Jun 09, 2022

Abstract: The theoretical analysis of multi-class classification has proved that existing multi-class classification methods can train a classifier with high classification accuracy on the test set when the instances in the training and test sets are precise and share the same distribution, and when enough instances can be collected in the training set. However, one limitation of multi-class classification has not been solved: how to improve classification accuracy when only imprecise observations are available. Hence, in this paper, we propose a novel framework to address a new realistic problem called multi-class classification with imprecise observations (MCIMO), where we need to train a classifier with fuzzy-feature observations. First, we give a theoretical analysis of the MCIMO problem based on fuzzy Rademacher complexity. Then, two practical algorithms based on support vector machines and neural networks are constructed to solve the proposed new problem. Experiments on both synthetic and real-world datasets verify the rationality of our theoretical analysis and the efficacy of the proposed algorithms.

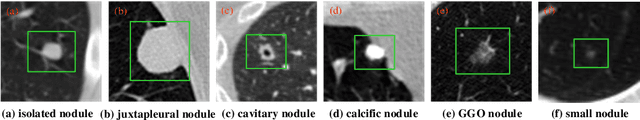

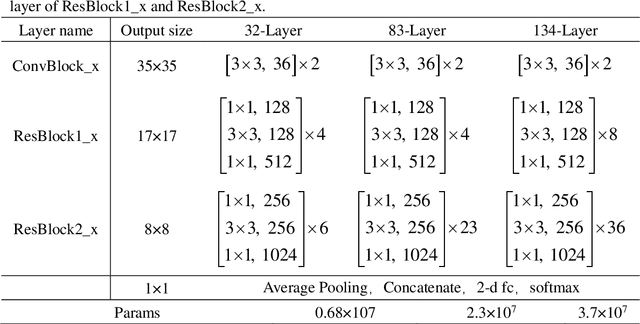

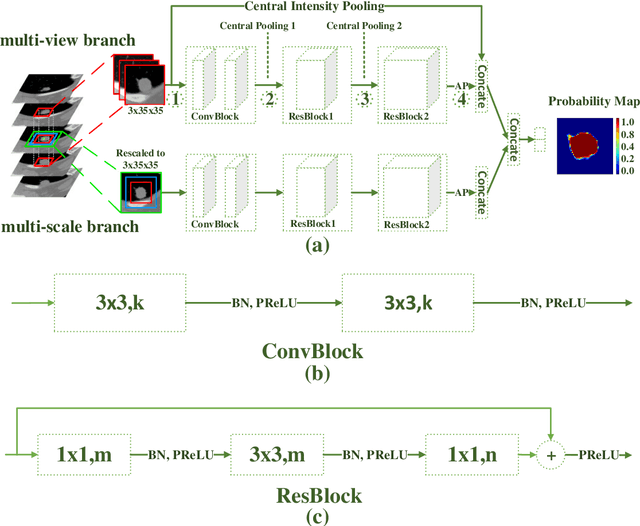

Dual-branch residual network for lung nodule segmentation

May 21, 2019

Abstract: Accurate segmentation of lung nodules in computed tomography (CT) images is critical to lung cancer analysis and diagnosis. However, due to the variety of lung nodules and the visual similarity between nodules and their surroundings, robust nodule segmentation is a challenging problem. In this study, we propose the Dual-branch Residual Network (DB-ResNet), which is a data-driven model. Our approach integrates two new schemes to improve the generalization capability of the model: 1) the proposed model can simultaneously capture multi-view and multi-scale features of different nodules in CT images; 2) we combine intensity features with convolutional neural network (CNN) features. We propose a pooling method, called the central intensity-pooling layer (CIP), to extract the intensity features of the center voxel of the block, and then use the CNN to obtain the convolutional features of the center voxel of the block. In addition, we designed a weighted sampling strategy based on the nodule boundary, which selects voxels according to a weighting score, to increase the accuracy of the model. The proposed method has been extensively evaluated on the LIDC dataset containing 986 nodules. Experimental results show that DB-ResNet achieves superior segmentation performance with an average dice score of 82.74% on the dataset. Moreover, we compared our results with those of four radiologists on the same dataset. The comparison showed that our average dice score was 0.49% higher than that of human experts. This suggests that our proposed method performs on par with experienced radiologists.
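The dice score reported in this abstract measures the overlap between a predicted segmentation mask and a reference mask. A minimal sketch of the standard Dice coefficient follows, assuming binary masks flattened into sequences of 0/1; the function name `dice_score`, the empty-mask convention, and the toy masks are illustrative assumptions, not the authors' evaluation code.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels marked as nodule in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total nodule voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total


# Toy masks (hypothetical): 3 voxels each, 2 of them overlapping.
predicted_mask = [0, 1, 1, 1, 0, 0]
reference_mask = [0, 1, 1, 0, 1, 0]
score = dice_score(predicted_mask, reference_mask)  # 2 * 2 / (3 + 3)
```

A score of 1.0 means the two masks coincide exactly and 0.0 means they share no voxels, so the reported 82.74% corresponds to a Dice coefficient of about 0.83 averaged over nodules.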

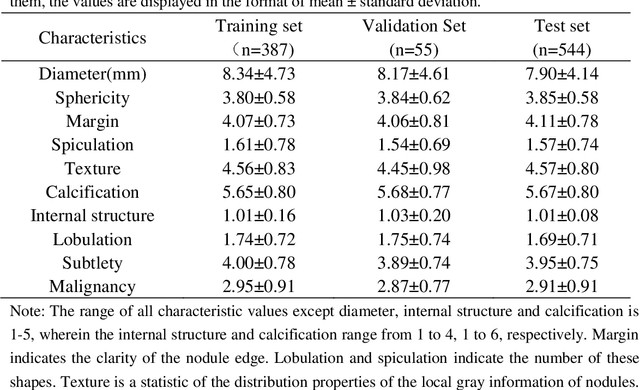

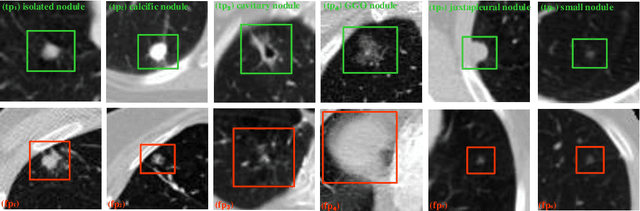

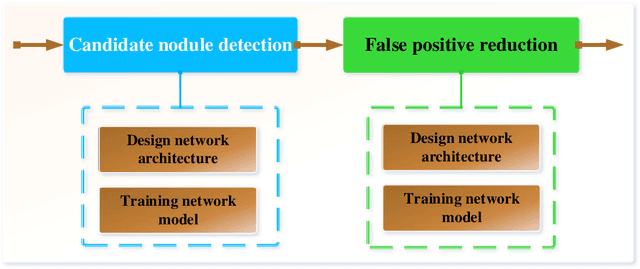

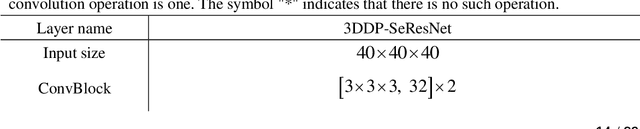

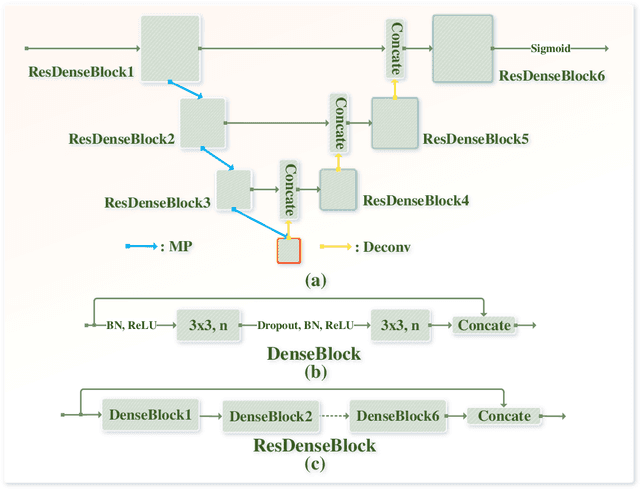

Two-Stage Convolutional Neural Network Architecture for Lung Nodule Detection

May 09, 2019

Abstract: Early detection of lung cancer is an effective way to improve the survival rate of patients. Accurate detection of lung nodules in computed tomography (CT) images is a critical step in the diagnosis of lung cancer. However, due to the heterogeneity of lung nodules and the complexity of the surrounding environment, robust nodule detection has been a challenging task. In this study, we propose a two-stage convolutional neural network (TSCNN) architecture for lung nodule detection. The CNN architecture in the first stage is based on an improved UNet segmentation network to establish an initial detection of lung nodules. In addition, to obtain a high recall rate without introducing excessive false positive nodules, we propose a novel sampling strategy and use offline hard mining for training and prediction according to the proposed cascaded prediction method. The CNN architecture in the second stage is based on the proposed dual pooling structure, which is built into three 3D CNN classification networks for false positive reduction. Since network training requires a significant amount of training data, we adopt a data augmentation method based on random masks. Furthermore, we have improved the generalization ability of the false positive reduction model by means of ensemble learning. The proposed method has been experimentally verified on the LUNA dataset. Experimental results show that the proposed TSCNN architecture can obtain competitive detection performance.
