Guang Yu

Video Abnormal Event Detection by Learning to Complete Visual Cloze Tests

Aug 05, 2021

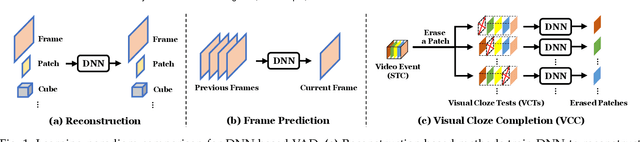

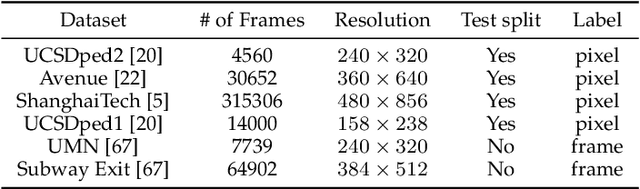

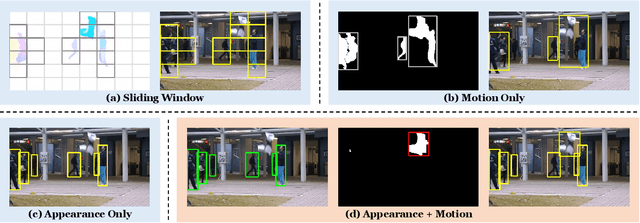

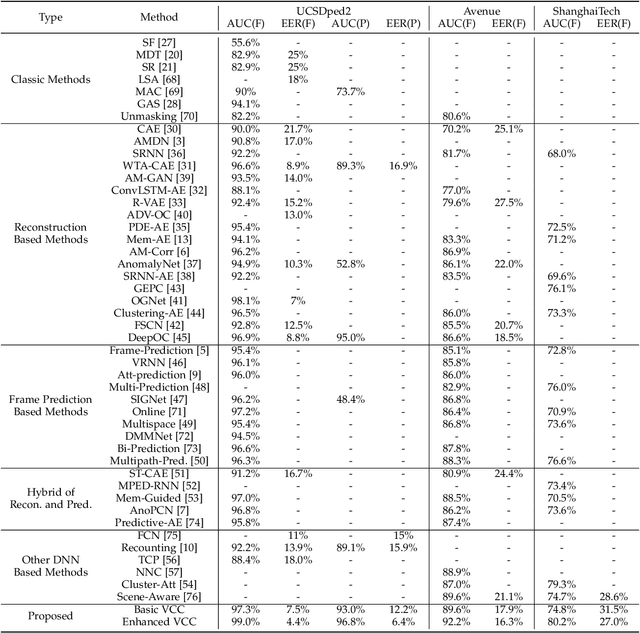

Abstract: Video abnormal event detection (VAD) is a vital semi-supervised task that requires learning with only roughly labeled normal videos, as anomalies are often practically unavailable. Although deep neural networks (DNNs) enable great progress in VAD, existing solutions typically suffer from two issues: (1) The precise and comprehensive localization of video events is ignored. (2) The video semantics and temporal context are under-explored. To address these issues, we draw on the cloze test prevalent in education and propose a novel approach named visual cloze completion (VCC), which performs VAD by learning to complete "visual cloze tests" (VCTs). Specifically, VCC first localizes each video event and encloses it in a spatio-temporal cube (STC). To achieve both precise and comprehensive localization, appearance and motion are used as mutually complementary cues to mark the object region associated with each video event. For each marked region, a normalized patch sequence is extracted from temporally adjacent frames and stacked into the STC. By likening each patch and the patch sequence of an STC to a visual "word" and "sentence" respectively, we can deliberately erase a certain "word" (patch) to yield a VCT. DNNs are then trained to infer the erased patch from video semantics, so as to complete the VCT. To fully exploit the temporal context, each patch in the STC is erased in turn to create multiple VCTs, and the erased patch's optical flow is also inferred to integrate richer motion clues. Meanwhile, a new DNN architecture is designed as a model-level solution to utilize video semantics and temporal context. Extensive experiments demonstrate that VCC achieves state-of-the-art VAD performance. Our code and results are available at https://github.com/yuguangnudt/VEC_VAD/tree/VCC
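The step of erasing each patch of an STC in turn can be illustrated with a small sketch. This is not the authors' implementation: the function name `make_visual_cloze_tests` and the array layout `(T, H, W, C)` are assumptions chosen for illustration, and the localization and DNN training stages are omitted.

```python
import numpy as np

def make_visual_cloze_tests(stc):
    """Build one visual cloze test per patch of a spatio-temporal cube.

    stc: array of shape (T, H, W, C) holding T temporally adjacent,
         normalized patches (this layout is an assumption of the sketch).
    Returns a list of (context, target, index) tuples, where `context`
    is the sequence with patch `index` erased and `target` is that patch.
    """
    stc = np.asarray(stc)
    tests = []
    for i in range(stc.shape[0]):
        context = np.delete(stc, i, axis=0)  # the remaining T-1 "words"
        target = stc[i]                      # the erased "word" to infer
        tests.append((context, target, i))
    return tests

# Toy STC with 5 patches of size 2x2 and a single channel.
toy_stc = np.arange(5 * 2 * 2 * 1).reshape(5, 2, 2, 1)
toy_tests = make_visual_cloze_tests(toy_stc)
```

In this sketch a model would be trained to predict `target` from `context` for every index, so an STC with T patches contributes T cloze tests.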

Sensing Anomalies like Humans: A Hominine Framework to Detect Abnormal Events from Unlabeled Videos

Aug 04, 2021

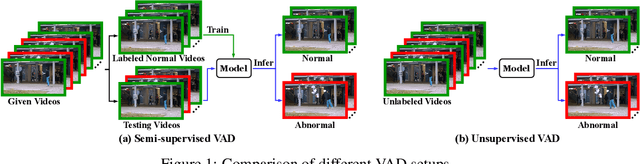

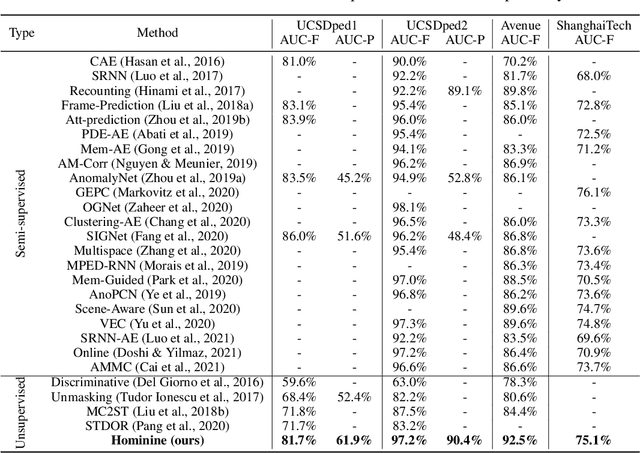

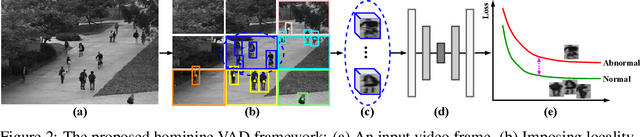

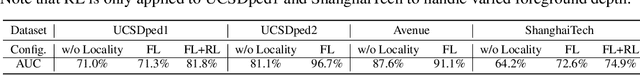

Abstract: Video anomaly detection (VAD) has long been a vital topic in video analysis. As anomalies are often rare, it is typically addressed under a semi-supervised setup, which requires a training set of purely normal videos. To avoid exhausting manual labeling, we are inspired by how humans sense anomalies and propose a hominine framework that enables both unsupervised and end-to-end VAD. The framework is based on two key observations: 1) Human perception is usually local, i.e., it focuses on the local foreground and its context when sensing anomalies. Thus, we propose to impose locality-awareness by localizing foreground with generic knowledge, and a region localization strategy is designed to exploit local context. 2) Frequently occurring events mould humans' definition of normality, which motivates us to devise a surrogate training paradigm. It trains a deep neural network (DNN) to learn a surrogate task with unlabeled videos, and frequently occurring events play a dominant role in "moulding" the DNN. In this way, a training loss gap automatically exposes rarely seen novel events as anomalies. For implementation, we explore various surrogate tasks as well as both classic and emerging DNN models. Extensive evaluations on commonly used VAD benchmarks confirm the framework's applicability to different surrogate tasks and DNN models, and demonstrate its effectiveness: it not only outperforms existing unsupervised solutions by a wide margin (8% to 10% AUROC gain), but also achieves comparable or even superior performance to state-of-the-art semi-supervised counterparts.
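The idea that a loss gap separates rare events from frequent ones can be sketched as follows. This is only an illustration under stated assumptions: the per-event losses are made-up numbers, and the quantile threshold in the hypothetical `flag_by_loss_gap` is a choice of this sketch, not a rule stated in the abstract.

```python
import numpy as np

def flag_by_loss_gap(losses, quantile=0.9):
    """Flag events whose surrogate-task loss exceeds a quantile threshold.

    losses: per-event losses of a DNN trained on unlabeled videos
            (hypothetical values in this sketch).
    quantile: assumed cut-off; frequent events should sit below it.
    """
    losses = np.asarray(losses, dtype=float)
    threshold = np.quantile(losses, quantile)
    return losses > threshold

# Nine frequently seen events with small loss, one rare event with large loss.
toy_losses = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.10, 0.08, 0.12, 2.50]
toy_flags = flag_by_loss_gap(toy_losses)
```

With these toy values only the last event lies above the threshold, which mirrors the intuition that events the network has rarely seen keep a visibly larger loss.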

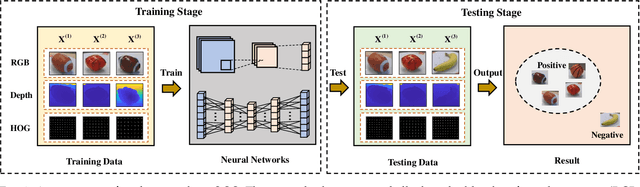

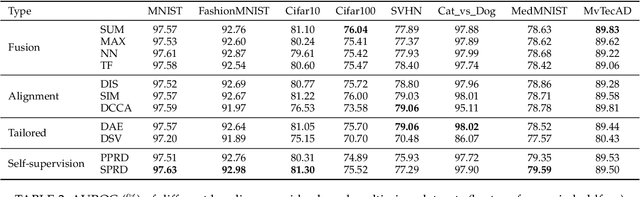

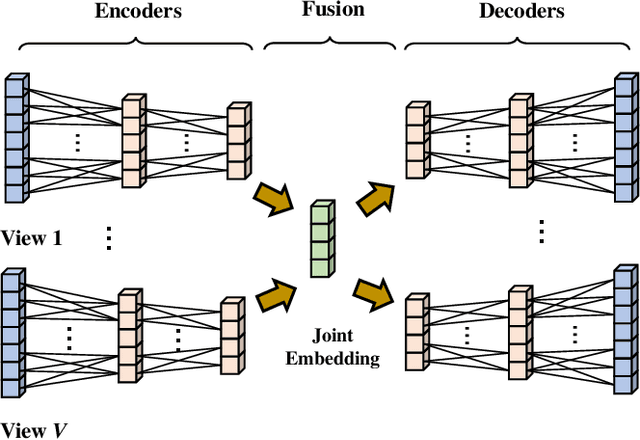

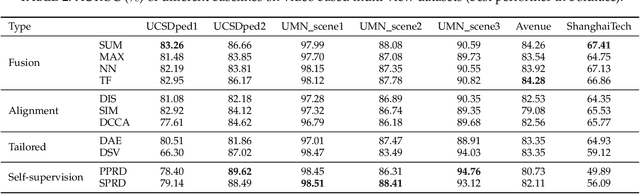

Multi-view Deep One-class Classification: A Systematic Exploration

Apr 27, 2021

Abstract: One-class classification (OCC), which models a single positive class and distinguishes it from the negative class, has been a long-standing topic with pivotal applications in areas such as anomaly detection. As modern society often deals with massive, high-dimensional, complex data generated by multiple sources, it is natural to consider OCC from the perspective of multi-view deep learning. However, this has not been discussed in the literature and remains an unexplored topic. Motivated by this gap, this paper makes four contributions: First, to the best of our knowledge, this is the first work that formally identifies and formulates the multi-view deep OCC problem. Second, we take recent advances in relevant areas into account and systematically devise eleven different baseline solutions for multi-view deep OCC, which lays the foundation for research on multi-view deep OCC. Third, to remedy the problem that limited benchmark datasets are available for multi-view deep OCC, we extensively collect existing public data and process them into more than 30 new multi-view benchmark datasets via multiple means, so as to provide a publicly available evaluation platform for multi-view deep OCC. Finally, by comprehensively evaluating the devised solutions on benchmark datasets, we conduct a thorough analysis of the effectiveness of the designed baselines, and hope to provide other researchers with beneficial guidance and insight into multi-view deep OCC. Our data and code are available at https://github.com/liujiyuan13/MvDOCC-datasets and https://github.com/liujiyuan13/MvDOCC-code respectively to facilitate future research.

Cloze Test Helps: Effective Video Anomaly Detection via Learning to Complete Video Events

Aug 27, 2020

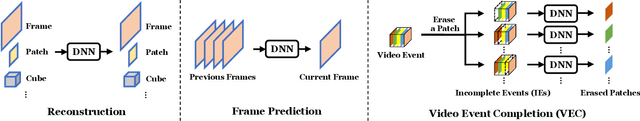

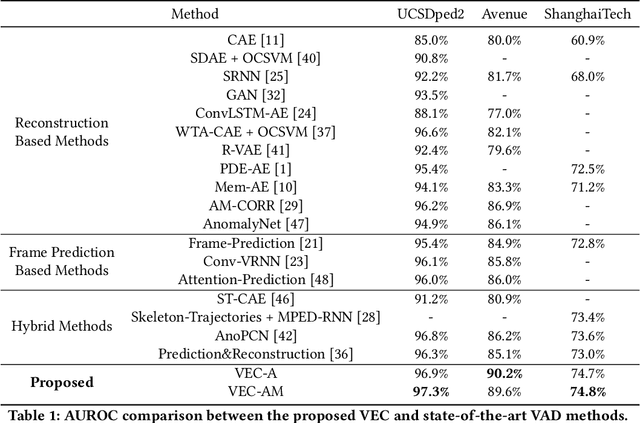

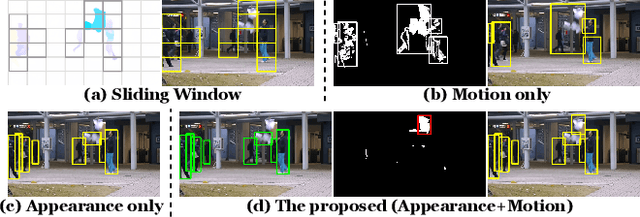

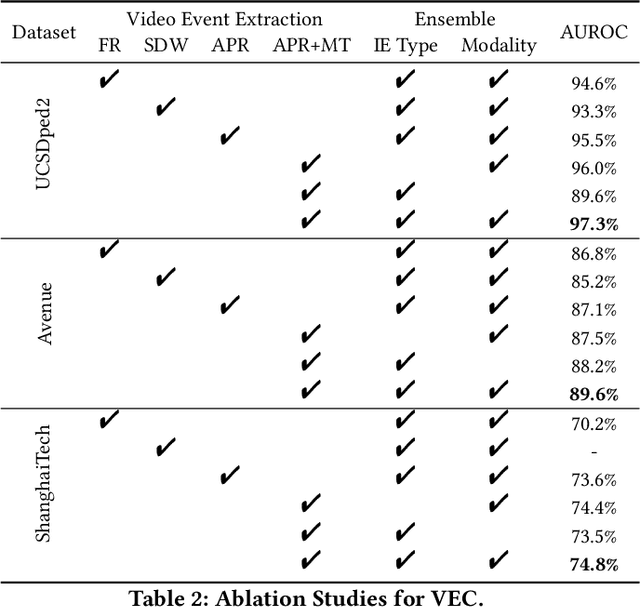

Abstract: As a vital topic in media content interpretation, video anomaly detection (VAD) has made fruitful progress via deep neural networks (DNNs). However, existing methods usually follow a reconstruction or frame prediction routine. They suffer from two gaps: (1) They cannot localize video activities in a manner that is both precise and comprehensive. (2) They lack sufficient ability to utilize high-level semantics and temporal context information. Inspired by the frequently used cloze test in language study, we propose a brand-new VAD solution named Video Event Completion (VEC) to bridge the gaps above: First, we propose a novel pipeline to achieve both precise and comprehensive enclosure of video activities. Appearance and motion are exploited as mutually complementary cues to localize regions of interest (RoIs). A normalized spatio-temporal cube (STC) is built from each RoI as a video event, which lays the foundation of VEC and serves as a basic processing unit. Second, we encourage the DNN to capture high-level semantics by solving a visual cloze test. To build such a visual cloze test, a certain patch of the STC is erased to yield an incomplete event (IE). The DNN learns to restore the original video event from the IE by inferring the missing patch. Third, to incorporate richer motion dynamics, another DNN is trained to infer the erased patches' optical flow. Finally, two ensemble strategies using different types of IE and modalities are proposed to boost VAD performance, so as to fully exploit the temporal context and modality information for VAD. VEC can consistently outperform state-of-the-art methods by a notable margin (typically 1.5%-5% AUROC) on commonly used VAD benchmarks. Our code and results can be verified at github.com/yuguangnudt/VEC_VAD.
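Since the reported margins are stated in AUROC, a short worked example of that metric may help. The sketch below uses the standard pairwise definition (the probability that a randomly chosen anomalous sample scores higher than a randomly chosen normal one, with ties counted as one half); the function name `auroc` and the toy scores are assumptions, and this is not the evaluation code from the paper's repository.

```python
import numpy as np

def auroc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    scores: anomaly scores, higher means more anomalous.
    labels: 1 for anomalous samples, 0 for normal samples.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos = scores[labels]    # scores of anomalous samples
    neg = scores[~labels]   # scores of normal samples
    # Compare every anomalous score with every normal score.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

# Two normal and two anomalous samples; one anomalous score (0.35)
# falls below one normal score (0.4), so 3 of the 4 pairs are ordered correctly.
toy_auroc = auroc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

Here `toy_auroc` evaluates to 0.75, so a gain of a few AUROC points corresponds to correctly ordering a few more percent of anomalous/normal pairs.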
