Gregery T. Buzzard

Boiling flow estimation for aero-optic phase screen generation

Jan 17, 2026

Abstract: Aero-optic effects due to turbulence can reduce the effectiveness of transmitting light waves to a distant target. Methods to compensate for turbulence typically rely on realistic turbulence data, which can be generated by (i) experiment, (ii) high-fidelity CFD, (iii) low-fidelity CFD, and (iv) autoregressive methods. However, each of these methods has significant drawbacks, including monetary and/or computational expense, limited quantity, inaccurate statistics, and overall complexity. In contrast, the boiling flow algorithm is a simple, computationally efficient model that can generate atmospheric phase screen data with only a handful of parameters. However, boiling flow has not been widely used in aero-optic applications, at least in part because some of these parameters, such as the Fried parameter r0, are not clearly defined for aero-optic data. In this paper, we demonstrate a method that uses the boiling flow algorithm to generate arbitrary-length synthetic data matching the statistics of measured aero-optic data. Importantly, we modify the standard boiling flow method to generate anisotropic phase screens. While this model does not fully capture all statistics, it can generate data that match either the temporal power spectrum or the anisotropic 2D structure function, with the ability to trade fidelity to one for fidelity to the other.
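
A minimal sketch of a standard boiling-flow generator may help make the idea concrete. In the Fourier domain, each new screen is a translated copy of the previous screen (frozen flow) mixed with fresh turbulence through an AR(1) weight `alpha`. The anisotropy here is an illustrative assumption only: the frequency axes of a von Kármán-type spectrum are simply rescaled by hypothetical factors `ax` and `ay`. This is not the paper's modified model or its parameter-estimation method, and the screens are not scaled to any r0.

```python
import numpy as np


def anisotropic_psd(n, dx, ax=1.0, ay=1.0, outer_scale=10.0):
    """Von Karman-style PSD on an n x n FFT grid, with the x and y frequency
    axes rescaled by ax and ay (an assumed, illustrative anisotropy model)."""
    f = np.fft.fftfreq(n, d=dx)
    kx, ky = np.meshgrid(f, f, indexing="xy")  # kx varies along axis 1 (x)
    k2 = (kx / ax) ** 2 + (ky / ay) ** 2 + 1.0 / outer_scale ** 2
    psd = k2 ** (-11.0 / 6.0)
    psd[0, 0] = 0.0  # remove the piston (mean) component
    return psd


def boiling_flow(n, steps, dx, vx, vy, dt, alpha, ax=1.0, ay=1.0, seed=0):
    """Generate `steps` phase screens. alpha=1 is pure frozen flow and alpha=0
    gives independent screens. Amplitudes are unnormalized (no r0 scaling)."""
    rng = np.random.default_rng(seed)
    amp = np.sqrt(anisotropic_psd(n, dx, ax, ay))
    f = np.fft.fftfreq(n, d=dx)
    kx, ky = np.meshgrid(f, f, indexing="xy")
    # Fourier phase ramp that translates a screen by (vx*dt, vy*dt).
    shift = np.exp(-2j * np.pi * (kx * vx + ky * vy) * dt)

    def fresh_spectrum():
        # Complex white noise colored by the PSD amplitude.
        noise = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
        return amp * noise

    spectrum = fresh_spectrum()
    screens = []
    for _ in range(steps):
        screens.append(np.fft.ifft2(spectrum).real)
        # AR(1) "boiling" update: translated old spectrum plus new turbulence.
        spectrum = alpha * shift * spectrum + np.sqrt(1.0 - alpha ** 2) * fresh_spectrum()
    return np.stack(screens)
```

Setting `alpha` near 1 gives slowly evolving, mostly translating screens; `alpha = 0` draws an independent screen at every step.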

MONSTR: Model-Oriented Neutron Strain Tomographic Reconstruction

May 28, 2025

Abstract: Residual strain, a tensor quantity, is a critical material property that impacts the overall performance of metal parts. Neutron Bragg edge strain tomography is a technique for imaging residual strain that works by making conventional hyperspectral computed tomography measurements, extracting the average projected strain at each detector pixel, and processing the resulting strain sinogram using a reconstruction algorithm. However, the reconstruction is severely ill-posed because the underlying inverse problem involves inferring a tensor at each voxel from scalar sinogram data. In this paper, we introduce the model-oriented neutron strain tomographic reconstruction (MONSTR) algorithm, which reconstructs the 2D residual strain tensor from neutron Bragg edge strain measurements. MONSTR is based on the multi-agent consensus equilibrium (MACE) framework for tensor tomographic reconstruction. Specifically, we formulate the reconstruction as a consensus solution of a collection of agents representing detector physics, the tomographic reconstruction process, and physics-based constraints from continuum mechanics. Using simulated data, we demonstrate high-quality reconstruction of the strain tensor even when using very few measurements.
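
The consensus-equilibrium idea behind MONSTR can be illustrated with a generic multi-agent consensus equilibrium (MACE) solver. This sketch uses two toy quadratic agents (hypothetical stand-ins for the detector-physics, tomography, and continuum-mechanics agents) and the usual Mann iteration w ← (1 − ρ)w + ρ(2G − I)(2F − I)w. Here F applies each agent's proximal map to its own state and G replaces every state with the average. It is not the MONSTR implementation.

```python
import numpy as np


def quadratic_agent(center, scale, sigma):
    """Proximal map of f(x) = ||x - center||^2 / (2 scale^2) with prox parameter sigma."""
    a, b = 1.0 / scale ** 2, 1.0 / sigma ** 2
    return lambda v: (a * center + b * v) / (a + b)


def mace(agents, x0, rho=0.5, iters=200):
    """Find w with F(w) = G(w) by Mann iteration on (2G - I)(2F - I)."""
    w = np.stack([x0.copy() for _ in agents])  # one state vector per agent

    def F(w):
        # Apply each agent's proximal map to its own state.
        return np.stack([agent(wi) for agent, wi in zip(agents, w)])

    def G(w):
        # Consensus operator: every agent receives the (equal-weight) average.
        return np.broadcast_to(w.mean(axis=0), w.shape)

    for _ in range(iters):
        v = 2 * F(w) - w
        z = 2 * G(v) - v
        w = (1 - rho) * w + rho * z
    return F(w).mean(axis=0)  # at equilibrium all F_i(w_i) agree
```

With equal weights and convex agents, the equilibrium coincides with the minimizer of the sum of the agent costs. For centers [0, 0] and [2, 4] with scales 1 and 2 (and sigma = 1), the solver therefore returns the precision-weighted mean [0.4, 0.8].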

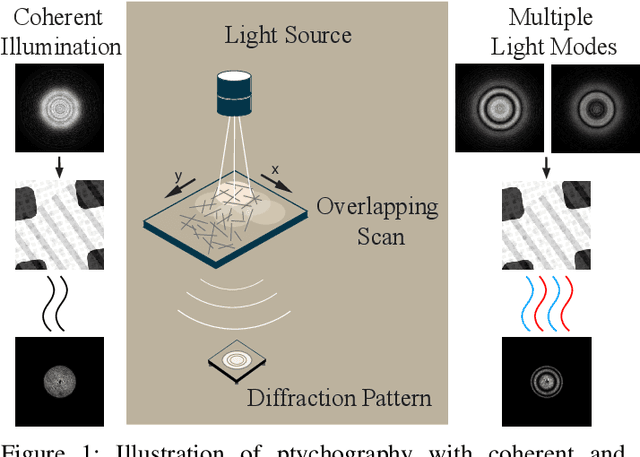

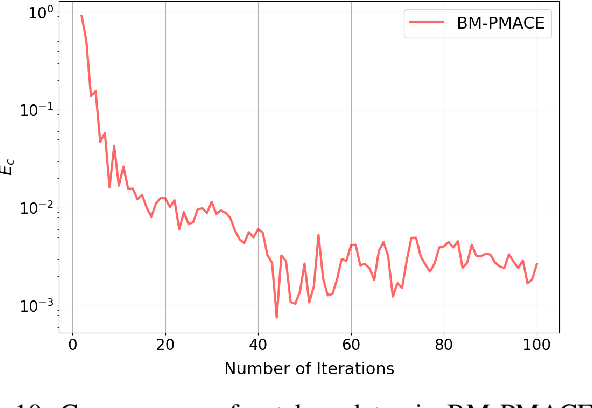

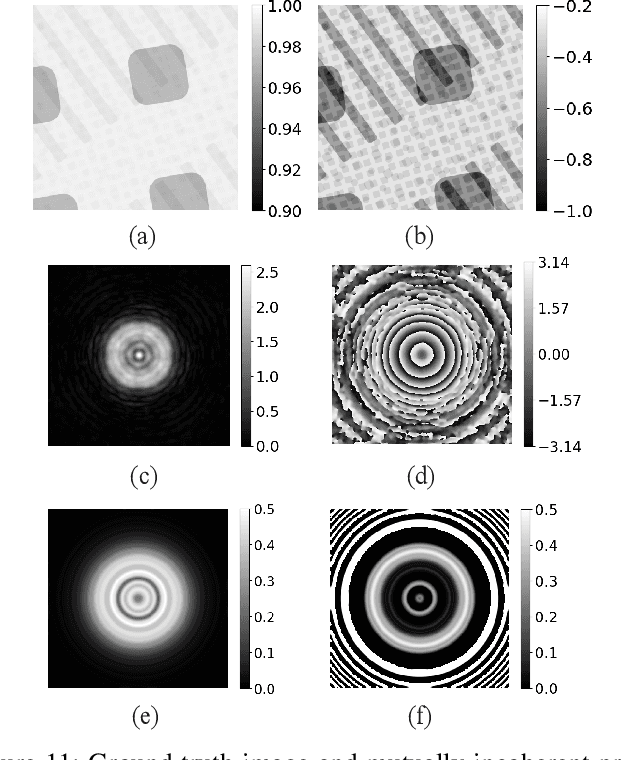

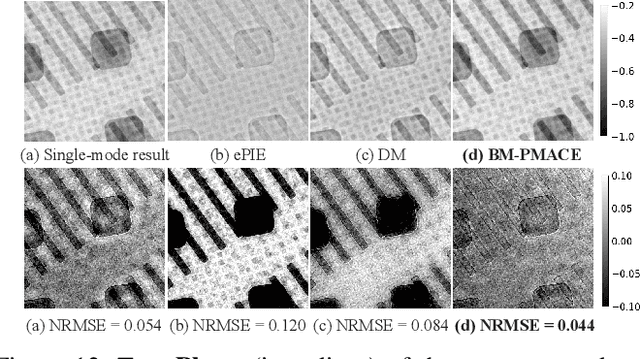

Ptychography using Blind Multi-Mode PMACE

Jan 11, 2025

Abstract: Ptychography is an imaging technique that enables nanometer-scale reconstruction of complex transmittance images by scanning objects with overlapping illumination patterns. However, the illumination function is typically unknown, which complicates reconstruction, especially when using partially coherent light sources. In this paper, we introduce Blind Multi-Mode Projected Multi-Agent Consensus Equilibrium (BM-PMACE) for blind ptychographic reconstruction. We extend the PMACE framework for distributed inverse problems to jointly estimate the complex transmittance image and multiple unknown, partially coherent probe functions. Importantly, our method maintains local probe estimates to exploit complementary information at multiple probe locations. Our method also incorporates a dynamic strategy for integrating additional probe modes. Using both synthetic and measured data, we demonstrate that BM-PMACE outperforms existing approaches in reconstruction quality and convergence rate.
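
A common way to model partially coherent illumination in ptychography is as an incoherent sum of probe modes: each diffraction pattern is the sum over modes k of |FFT(probe_k · object patch)|². The following sketch of that forward model assumes a far-field (Fourier) propagator, integer scan positions, and a hypothetical function name. It shows only the measurement model, not the BM-PMACE reconstruction.

```python
import numpy as np


def multimode_intensity(obj, probes, positions):
    """Far-field intensities for an incoherent sum of probe modes (assumed model).

    obj: complex 2D transmittance image.
    probes: list of complex 2D probe modes, all of the same shape (h, w).
    positions: list of (row, col) top-left corners of each scan position.
    """
    h, w = probes[0].shape
    patterns = []
    for r, c in positions:
        patch = obj[r:r + h, c:c + w]  # object region illuminated at this position
        # Modes add in intensity, not amplitude, because they are mutually incoherent.
        patterns.append(sum(np.abs(np.fft.fft2(p * patch)) ** 2 for p in probes))
    return np.stack(patterns)
```

As a quick sanity check, splitting one probe into two scaled copies whose powers sum to one leaves every intensity pattern unchanged. Distinct mode shapes are what carry the partial-coherence information.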

Fast Hyperspectral Neutron Tomography

Oct 29, 2024

Abstract: Hyperspectral neutron computed tomography is a tomographic imaging technique in which thousands of wavelength-specific neutron radiographs are typically measured for each tomographic view. In conventional hyperspectral reconstruction, the data from each neutron wavelength bin are reconstructed separately, which is extremely time-consuming. These reconstructions also often suffer from poor quality due to low signal-to-noise ratio. Consequently, material decomposition based on these reconstructions tends to yield both inaccurate estimates of the material spectra and inaccurate volumetric material separation. In this paper, we present two novel algorithms for processing hyperspectral neutron data: fast hyperspectral reconstruction and fast material decomposition. Both algorithms rely on a subspace decomposition procedure that transforms hyperspectral views into low-dimensional projection views within an intermediate subspace, where tomographic reconstruction is performed. Subspace decomposition dramatically reduces reconstruction time while also reducing both noise and reconstruction artifacts. We apply our algorithms to both simulated and measured neutron data and demonstrate that they reduce computation and improve the quality of the results relative to conventional methods.
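
The subspace idea can be sketched with a singular value decomposition along the wavelength axis. This sketch assumes the hyperspectral views have already been converted to attenuation, so they are linear in the material content. Under that assumption, the data are projected onto a few spectral basis vectors. Each of the resulting `rank` subspace views can then be reconstructed with any tomographic solver and the volume expanded back to all wavelength bins. The function names are hypothetical, and this is not the paper's exact procedure.

```python
import numpy as np


def subspace_decompose(views, rank):
    """Project hyperspectral views onto a rank-r spectral subspace via SVD.

    views: array (n_views, n_pixels, n_bins) of attenuation projections.
    Returns subspace views (n_views, n_pixels, rank) and a spectral basis (n_bins, rank).
    """
    n_views, n_pixels, n_bins = views.shape
    Y = views.reshape(-1, n_bins)                  # rows: (view, pixel); columns: wavelength
    _, _, Vt = np.linalg.svd(Y, full_matrices=False)
    basis = Vt[:rank].T                            # orthonormal spectral basis vectors
    coeffs = (Y @ basis).reshape(n_views, n_pixels, rank)
    return coeffs, basis


def expand(coeff_array, basis):
    """Map subspace coefficients (..., rank) back to all wavelength bins (..., n_bins)."""
    return coeff_array @ basis.T
```

Because tomographic projection is linear, the saving comes from reconstructing `rank` subspace channels instead of thousands of wavelength bins. Truncating the SVD also discards low-variance components, which are often dominated by noise.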

ResSR: A Residual Approach to Super-Resolving Multispectral Images

Aug 23, 2024

Abstract: Multispectral imaging sensors typically have wavelength-dependent resolution, which reduces the ability to distinguish small features in some spectral bands. Existing super-resolution methods upsample a multispectral image (MSI) to achieve a common resolution across all bands, but they are typically sensor-specific, computationally expensive, and may assume invariant image statistics across multiple length scales. In this paper, we introduce ResSR, an efficient and modular residual-based method for super-resolving the lower-resolution bands of a multispectral image. ResSR uses singular value decomposition (SVD) to identify correlations across spectral bands and then applies a residual correction process that corrects only the high-spatial-frequency components of the upsampled bands. The SVD formulation improves the conditioning and simplifies the super-resolution problem, and the residual method retains accurate low spatial frequencies from the measured data while incorporating high-spatial-frequency detail from the SVD solution. While ResSR is formulated as the solution to an optimization problem, we derive an approximate closed-form solution that is fast and accurate. We formulate ResSR for any number of distinct resolutions, enabling easy application to any MSI. In a series of experiments on simulated and measured Sentinel-2 MSIs, ResSR produces image quality comparable to or better than alternative algorithms. Moreover, it is computationally faster and can run on larger images, making it useful for processing large data sets.
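
The residual step can be illustrated under simple assumptions: block-average downsampling by an integer factor as the sensor model, and nearest-neighbour upsampling. The inputs are a measured low-resolution band and a high-resolution estimate of that band. The estimate could, for example, come from an SVD across bands; `hr_estimate` is a hypothetical input here. The correction keeps the measured low spatial frequencies and adds only the high-frequency residual of the estimate. This is a sketch, not the ResSR closed-form solution.

```python
import numpy as np


def downsample(img, f):
    """Block-average downsampling by integer factor f (assumed sensor model)."""
    h, w = img.shape
    return img.reshape(h // f, f, w // f, f).mean(axis=(1, 3))


def upsample(img, f):
    """Nearest-neighbour upsampling by integer factor f."""
    return np.kron(img, np.ones((f, f)))


def residual_correct(low_res_band, hr_estimate, f):
    """Keep the measured low frequencies; borrow high frequencies from hr_estimate."""
    base = upsample(low_res_band, f)
    # High-frequency residual: what the estimate contains beyond its own low-pass version.
    detail = hr_estimate - upsample(downsample(hr_estimate, f), f)
    return base + detail
```

By construction, downsampling the corrected band reproduces the measured band exactly, so the measured low-frequency content is preserved.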

Pixel-weighted Multi-pose Fusion for Metal Artifact Reduction in X-ray Computed Tomography

Jun 25, 2024

Abstract: X-ray computed tomography (CT) reconstructs the internal morphology of a three-dimensional object from a collection of projection images, most commonly using a single rotation axis. However, for objects containing dense materials such as metal, the use of a single rotation axis may leave some regions of the object obscured by the metal, even though projections from other rotation axes (or poses) might contain complementary information that would better resolve these obscured regions. In this paper, we propose pixel-weighted Multi-pose Fusion to reduce metal artifacts by fusing the information from complementary measurement poses into a single reconstruction. Our method uses Multi-Agent Consensus Equilibrium (MACE), an extension of Plug-and-Play, as a framework for integrating projection data from different poses. A primary novelty of the proposed method is that the outputs of the different MACE agents are fused in a pixel-weighted manner to minimize the effects of metal throughout the reconstruction. Using real CT data on an object with and without metal inserts, we demonstrate that the proposed pixel-weighted Multi-pose Fusion method significantly reduces metal artifacts relative to single-pose reconstructions.
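
Pixel-weighted fusion can be pictured as replacing MACE's plain averaging operator with a per-pixel weighted average of the agents' outputs. In this sketch the weight maps are hypothetical inputs, for instance lower weights where a pose's projections pass through metal. How the paper actually computes the weights is not reproduced here.

```python
import numpy as np


def pixel_weighted_average(states, weights, eps=1e-12):
    """Fuse per-pose images pixel by pixel.

    states: sequence of K images (one per pose/agent), all the same shape.
    weights: sequence of K nonnegative weight maps; they need not sum to one.
    """
    states = np.asarray(states, dtype=float)
    weights = np.asarray(weights, dtype=float)
    # Normalize weights independently at every pixel; eps guards all-zero pixels.
    return (weights * states).sum(axis=0) / (weights.sum(axis=0) + eps)
```

A pixel with zero weight for one pose takes its value entirely from the other poses. Within MACE, the fused image would then be copied back to every agent.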

CLAMP: Majorized Plug-and-Play for Coherent 3D LIDAR Imaging

Jun 19, 2024

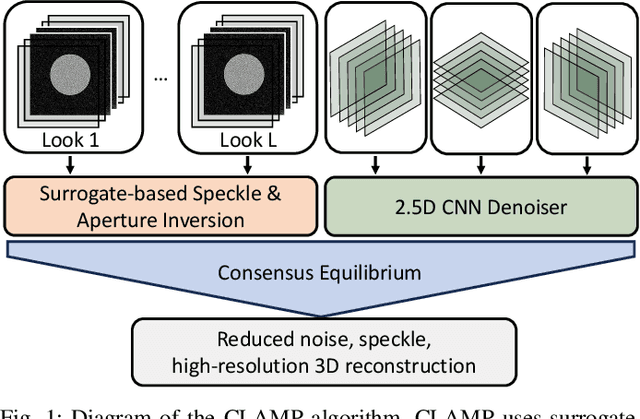

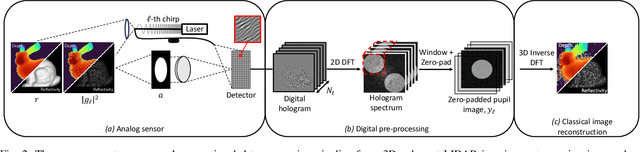

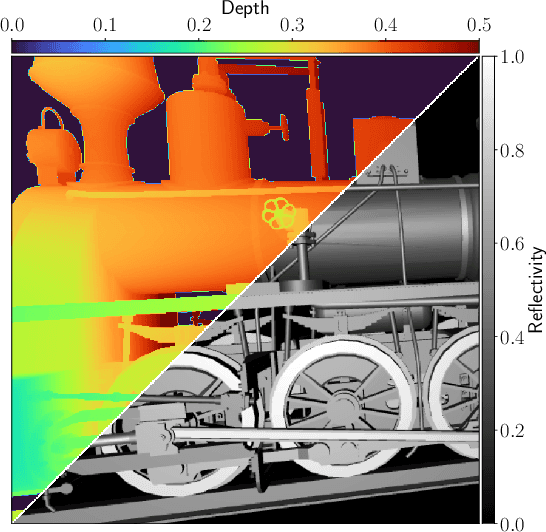

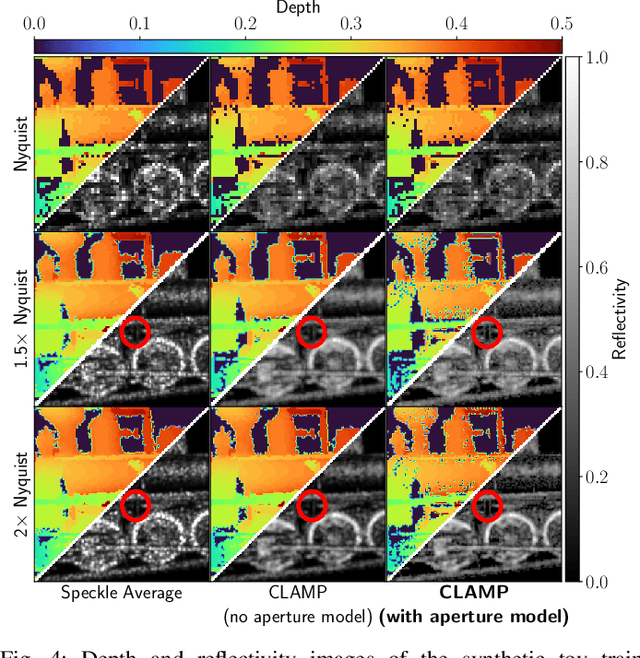

Abstract: Coherent LIDAR uses a chirped laser pulse for 3D imaging of distant targets. However, existing coherent LIDAR image reconstruction methods do not account for the system's aperture, resulting in sub-optimal resolution. Moreover, these methods use majorization-minimization for computational efficiency, but do so without a theoretical treatment of convergence. In this paper, we present Coherent LIDAR Aperture Modeled Plug-and-Play (CLAMP) for multi-look coherent LIDAR image reconstruction. CLAMP uses multi-agent consensus equilibrium (a form of PnP) to combine a neural network denoiser with an accurate physics-based forward model. CLAMP introduces an FFT-based method to account for the effects of the aperture and uses majorization of the forward model for computational efficiency. We also formalize the use of majorization-minimization in consensus optimization problems and prove convergence to the exact consensus equilibrium solution. Finally, we apply CLAMP to synthetic and measured data to demonstrate its effectiveness in producing high-resolution, speckle-free, 3D imagery.
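
Majorization-minimization for a proximal map can be illustrated with a generic linear least-squares forward model, f(x) = ½‖y − Ax‖². The key step is to replace AᵀA by L·I with L ≥ λ_max(AᵀA). This gives a quadratic majorizer whose proximal problem has the closed-form minimizer x_new = (L·x_prev − ∇f(x_prev) + v/σ²)/(L + 1/σ²), so no linear solve is needed. This sketch assumes a real-valued matrix `A`. It is not CLAMP's coherent LIDAR forward model or its FFT-based aperture model.

```python
import numpy as np


def prox_objective(A, y, v, sigma, x):
    """Proximal objective: data fit plus quadratic coupling to the input v."""
    return 0.5 * np.sum((y - A @ x) ** 2) + np.sum((x - v) ** 2) / (2 * sigma ** 2)


def exact_prox(A, y, v, sigma):
    """Exact proximal map, which requires solving a linear system."""
    n = A.shape[1]
    lhs = A.T @ A + np.eye(n) / sigma ** 2
    return np.linalg.solve(lhs, A.T @ y + v / sigma ** 2)


def majorized_prox_step(A, y, v, sigma, x_prev, L):
    """Minimize a quadratic majorizer of ||y - Ax||^2/2 built at x_prev, plus the prox penalty.

    L must satisfy L >= largest eigenvalue of A^T A for the surrogate to majorize.
    """
    grad = A.T @ (A @ x_prev - y)
    return (L * x_prev - grad + v / sigma ** 2) / (L + 1.0 / sigma ** 2)
```

Each step cannot increase the proximal objective, and repeating the step converges to the exact proximal solution. A typical choice is `L = np.linalg.norm(A, 2) ** 2`.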

MACE CT Reconstruction for Modular Material Decomposition from Energy Resolving Photon-Counting Data

Feb 01, 2024

Abstract: X-ray computed tomography (CT) based on photon counting detectors (PCD) extends standard CT by counting detected photons in multiple energy bins. PCD data can be used to increase the contrast-to-noise ratio (CNR), increase spatial resolution, reduce radiation dose, reduce injected contrast dose, and compute a material decomposition using a specified set of basis materials. Current commercial and prototype clinical photon counting CT systems use PCD-CT reconstruction methods that either reconstruct from each spectral bin separately or first create an estimate of a material sinogram using a specified set of basis materials and then reconstruct from these material sinograms. However, existing methods cannot use both the measured spectral information and advanced prior models simultaneously and in a modular fashion to produce a material decomposition. We describe an efficient, modular framework for PCD-based CT reconstruction and material decomposition based on Multi-Agent Consensus Equilibrium (MACE). Our method employs a detector proximal map, or agent, that uses PCD measurements to update an estimate of the pathlength sinogram. We also create a prior agent in the form of a sinogram denoiser that enforces both physical and empirical knowledge about the material-decomposed sinogram. The sinogram reconstruction is computed using the MACE algorithm, which finds an equilibrium solution between the two agents, and the final image is reconstructed from the estimated sinogram. Importantly, the modularity of our method allows the two agents to be designed, implemented, and optimized independently. Our results on simulated data show a substantial (450%) CNR boost versus conventional maximum likelihood reconstruction when applied to a phantom used to evaluate low-contrast detectability.
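
The detector agent relies on a model of the expected photon counts in each energy bin. A standard polychromatic Beer–Lambert model with basis-material decomposition gives λ_b = Σ_E S_b(E) exp(−Σ_m μ_m(E) p_m), where p_m is the pathlength of basis material m along the ray. This sketch evaluates that model and the corresponding Poisson negative log-likelihood, dropping the constant log k! term. The bin sensitivities `bin_spectra` and attenuation curves `mu` are hypothetical inputs, and this is not the paper's detector agent.

```python
import numpy as np


def expected_counts(pathlengths, bin_spectra, mu):
    """Expected counts per energy bin for one detector ray.

    pathlengths: (n_materials,) basis-material pathlengths p_m.
    bin_spectra: (n_bins, n_energies) effective spectrum S_b(E) of each bin.
    mu: (n_materials, n_energies) attenuation curves mu_m(E).
    """
    transmission = np.exp(-pathlengths @ mu)  # (n_energies,) Beer-Lambert attenuation
    return bin_spectra @ transmission         # (n_bins,) sum over energies per bin


def poisson_nll(counts, pathlengths, bin_spectra, mu):
    """Poisson negative log-likelihood of measured counts, up to a constant."""
    lam = expected_counts(pathlengths, bin_spectra, mu)
    return float(np.sum(lam - counts * np.log(lam)))
```

A detector proximal map could, for example, minimize this likelihood plus a quadratic penalty toward the current pathlength-sinogram estimate.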

Texture Matching GAN for CT Image Enhancement

Dec 20, 2023

Abstract: Deep neural networks (DNN) are commonly used to denoise and sharpen X-ray computed tomography (CT) images with the goal of reducing patient X-ray dosage while maintaining reconstruction quality. However, naive application of DNN-based methods can result in image texture that is undesirable in clinical applications. Alternatively, generative adversarial network (GAN) based methods can produce appropriate texture, but naive application of GANs can introduce inaccurate or even unrealistic image detail. In this paper, we propose a texture matching generative adversarial network (TMGAN) that enhances CT images while generating an image texture that can be matched to a target texture. We use parallel generators to separate anatomical features from the generated texture, which allows the GAN to be trained to match the desired texture without directly affecting the underlying CT image. We demonstrate that TMGAN enhances image quality while also producing image texture that is desirable for clinical application.

Generative Plug and Play: Posterior Sampling for Inverse Problems

Jun 12, 2023

Abstract: Over the past decade, Plug-and-Play (PnP) has become a popular method for reconstructing images using a modular framework consisting of a forward model and a prior model. The great strength of PnP is that an image denoiser can be used as a prior model while the forward model can be implemented using more traditional physics-based approaches. However, a limitation of PnP is that it reconstructs only a single deterministic image. In this paper, we introduce Generative Plug-and-Play (GPnP), a generalization of PnP to sample from the posterior distribution. As with PnP, GPnP has a modular framework using a physics-based forward model and an image denoising prior model. However, in GPnP these models are extended to become proximal generators, which sample from associated distributions. GPnP applies these proximal generators in alternation to produce samples from the posterior. We present experimental simulations using the well-known BM3D denoiser. Our results demonstrate that the GPnP method is robust, easy to implement, and produces intuitively reasonable samples from the posterior for sparse interpolation and tomographic reconstruction. Code to accompany this paper is available at https://github.com/gbuzzard/generative-pnp-allerton .
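
A toy Gaussian analogue shows what alternating two proximal generators looks like. Take the forward model y = x + noise (variance s²), the prior x ~ N(0, τ²), and a coupling parameter σ. The forward generator samples x ∝ exp(−(y − x)²/(2s²) − (x − v)²/(2σ²)), and the prior generator samples v ∝ exp(−v²/(2τ²) − (x − v)²/(2σ²)). Alternating them is a Gibbs sampler whose x-samples follow the posterior computed with prior variance τ² + σ², which approaches the true posterior as σ → 0. The fixed σ, the Gaussian prior (in place of a BM3D-based generator), and the function names are assumptions of this sketch, not the GPnP code.

```python
import numpy as np


def forward_generator(v, y, noise_var, sigma, rng):
    """Sample x from exp(-(y - x)^2 / (2 noise_var) - (x - v)^2 / (2 sigma^2))."""
    prec = 1.0 / noise_var + 1.0 / sigma ** 2
    mean = (y / noise_var + v / sigma ** 2) / prec
    return mean + rng.standard_normal() / np.sqrt(prec)


def prior_generator(x, prior_var, sigma, rng):
    """Sample v from exp(-v^2 / (2 prior_var) - (x - v)^2 / (2 sigma^2))."""
    prec = 1.0 / prior_var + 1.0 / sigma ** 2
    mean = (x / sigma ** 2) / prec
    return mean + rng.standard_normal() / np.sqrt(prec)


def alternate(y, noise_var, prior_var, sigma, n_samples, burn_in=100, seed=0):
    """Alternate the two generators and return the x-samples after burn-in."""
    rng = np.random.default_rng(seed)
    v, samples = 0.0, []
    for i in range(burn_in + n_samples):
        x = forward_generator(v, y, noise_var, sigma, rng)
        v = prior_generator(x, prior_var, sigma, rng)
        if i >= burn_in:
            samples.append(x)
    return np.array(samples)
```

For y = 2, s² = τ² = 1, and σ = 0.5, the sample mean should be close to 2 · 1.25/2.25 ≈ 1.11. Smaller σ moves it toward the exact posterior mean of 1, but it also makes consecutive samples more correlated.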