Diyu Yang

Fast Hyperspectral Neutron Tomography

Oct 29, 2024

Abstract: Hyperspectral neutron computed tomography is a tomographic imaging technique in which thousands of wavelength-specific neutron radiographs are typically measured for each tomographic view. In conventional hyperspectral reconstruction, data from each neutron wavelength bin is reconstructed separately, which is extremely time-consuming. These reconstructions often suffer from poor quality due to low signal-to-noise ratio. Consequently, material decomposition based on these reconstructions tends to lead to both inaccurate estimates of the material spectra and inaccurate volumetric material separation. In this paper, we present two novel algorithms for processing hyperspectral neutron data: fast hyperspectral reconstruction and fast material decomposition. Both algorithms rely on a subspace decomposition procedure that transforms hyperspectral views into low-dimensional projection views within an intermediate subspace, where tomographic reconstruction is performed. The use of subspace decomposition dramatically reduces reconstruction time while reducing both noise and reconstruction artifacts. We apply our algorithms to both simulated and measured neutron data and demonstrate that they reduce computation and improve the quality of the results relative to conventional methods.
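As a rough illustration of the subspace idea in this abstract, the sketch below projects noisy hyperspectral measurements onto a low-dimensional basis. It uses a truncated SVD as a stand-in, which is an assumption: the abstract does not say which decomposition the paper uses. The function names, array shapes, and synthetic data are all hypothetical.

```python
import numpy as np

def subspace_project(views, k):
    """Project hyperspectral measurements onto a k-dimensional subspace.

    views: array of shape (n_measurements, n_wavelengths), one row per
           detector pixel and tomographic view (hypothetical layout).
    Returns (coeffs, basis) such that views is approximated by coeffs @ basis.
    """
    # Truncated SVD (an assumed choice, not necessarily the paper's method).
    _, _, vt = np.linalg.svd(views, full_matrices=False)
    basis = vt[:k]              # (k, n_wavelengths), orthonormal rows
    coeffs = views @ basis.T    # (n_measurements, k)
    return coeffs, basis

def demo(seed=0):
    """Build synthetic rank-3 data, add noise, and compare errors."""
    rng = np.random.default_rng(seed)
    n_meas, n_wave, k = 200, 1000, 3
    clean = rng.random((n_meas, k)) @ rng.random((k, n_wave))
    noisy = clean + 0.05 * rng.standard_normal(clean.shape)

    coeffs, basis = subspace_project(noisy, k)
    denoised = coeffs @ basis   # map back to the full spectral dimension

    err_noisy = np.linalg.norm(noisy - clean)
    err_denoised = np.linalg.norm(denoised - clean)
    return coeffs.shape, err_noisy, err_denoised
```

In a pipeline of this kind, tomographic reconstruction would then run on the `k` coefficient channels instead of on every wavelength bin, which is where the reduction in computation would come from.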

Pixel-weighted Multi-pose Fusion for Metal Artifact Reduction in X-ray Computed Tomography

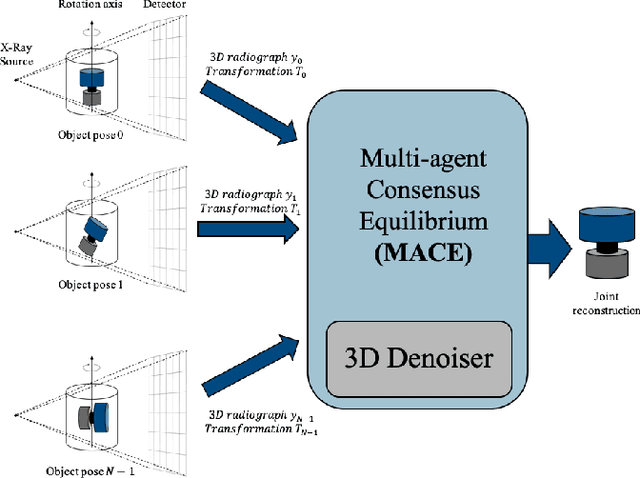

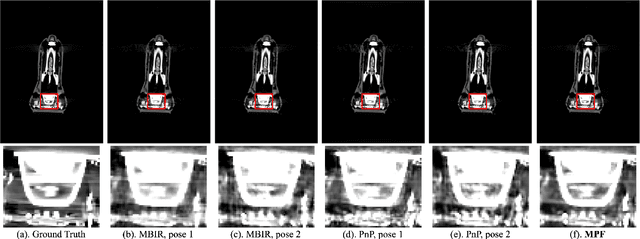

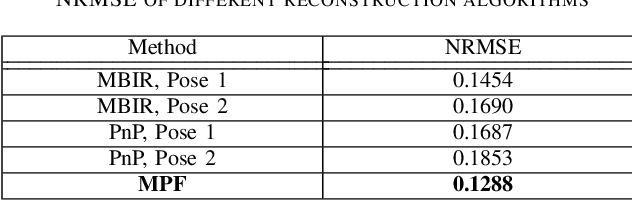

Jun 25, 2024

Abstract: X-ray computed tomography (CT) reconstructs the internal morphology of a three-dimensional object from a collection of projection images, most commonly using a single rotation axis. However, for objects containing dense materials like metal, the use of a single rotation axis may leave some regions of the object obscured by the metal, even though projections from other rotation axes (or poses) might contain complementary information that would better resolve these obscured regions. In this paper, we propose pixel-weighted Multi-pose Fusion to reduce metal artifacts by fusing the information from complementary measurement poses into a single reconstruction. Our method uses Multi-Agent Consensus Equilibrium (MACE), an extension of Plug-and-Play, as a framework for integrating projection data from different poses. A primary novelty of the proposed method is that the outputs of different MACE agents are fused in a pixel-weighted manner to minimize the effects of metal throughout the reconstruction. Using real CT data on an object with and without metal inserts, we demonstrate that the proposed pixel-weighted Multi-pose Fusion method significantly reduces metal artifacts relative to single-pose reconstructions.
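The pixel-weighted fusion step can be pictured with a small sketch. This is only an illustration of per-pixel weighted averaging, assuming each pose yields a reconstruction already registered to a common grid and a weight map that is low where metal corrupts that pose. It is not the MACE algorithm itself, where the fusion would sit inside an iterative equilibrium solver, and the name `fuse_pixelwise` and the data are hypothetical.

```python
def fuse_pixelwise(recons, weights):
    """Fuse per-pose reconstructions with per-pixel weights.

    recons:  list of flattened reconstructions, one per pose.
    weights: list of flattened non-negative weight maps, same shapes.
    Returns the per-pixel weighted average as a list of floats.
    """
    n_pix = len(recons[0])
    fused = []
    for p in range(n_pix):
        total_w = sum(w[p] for w in weights)
        if total_w == 0:
            raise ValueError(f"pixel {p} has zero total weight")
        weighted_sum = sum(w[p] * r[p] for r, w in zip(recons, weights))
        fused.append(weighted_sum / total_w)
    return fused

# Two poses, three pixels. Pose 0 is corrupted at pixel 1 and pose 1 at
# pixel 2, so each is given zero weight there (made-up values).
recons = [[1.0, 9.0, 3.0], [1.0, 2.0, 7.0]]
weights = [[1.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
fused = fuse_pixelwise(recons, weights)
```

Each pixel of the fused result is taken from whichever poses are trusted at that location, which is the intuition behind weighting by pixel instead of applying one global weight per pose.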

Autonomous Polycrystalline Material Decomposition for Hyperspectral Neutron Tomography

Feb 27, 2023

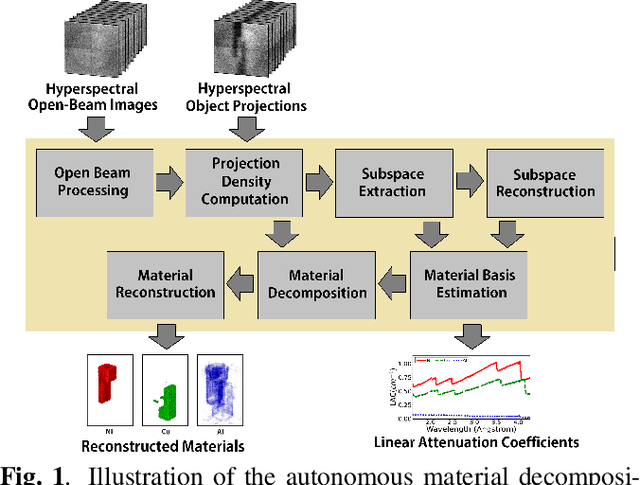

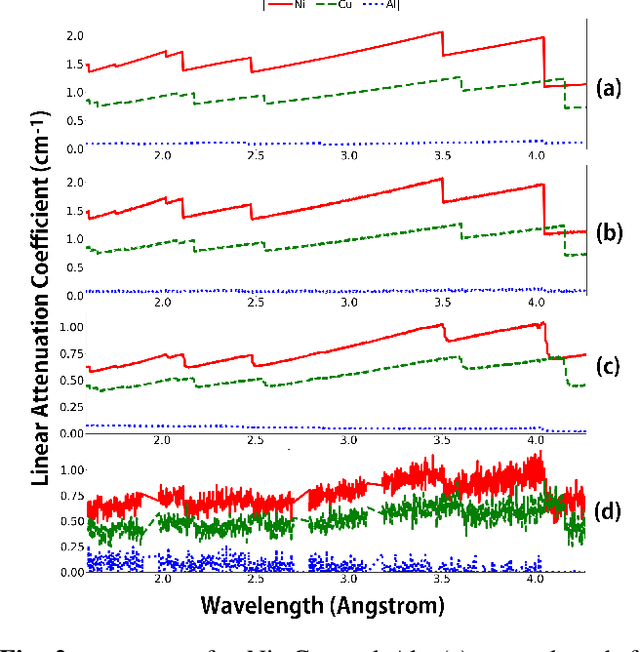

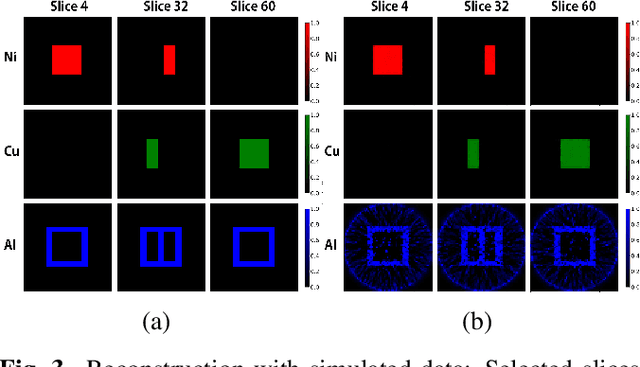

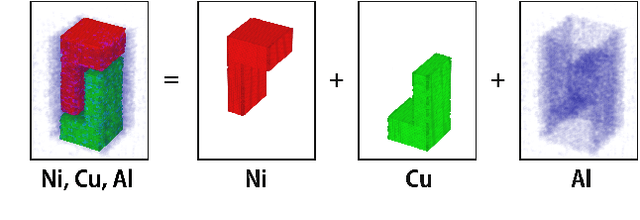

Abstract: Hyperspectral neutron tomography is an effective method for analyzing crystalline material samples with complex compositions in a non-destructive manner. Since the counts in the hyperspectral neutron radiographs directly depend on the neutron cross-sections, materials may exhibit contrasting neutron responses across wavelengths. Therefore, it is possible to extract the unique signatures associated with each material and use them to separate the crystalline phases simultaneously. We introduce an autonomous material decomposition (AMD) algorithm to automatically characterize and localize polycrystalline structures using Bragg edges with contrasting neutron responses from hyperspectral data. The algorithm estimates the linear attenuation coefficient spectra from the measured radiographs and then uses these spectra to perform polycrystalline material decomposition and reconstructs 3D material volumes to localize materials in the spatial domain. Our results demonstrate that the method can accurately estimate both the linear attenuation coefficient spectra and associated reconstructions on both simulated and experimental neutron data.

An Edge Alignment-based Orientation Selection Method for Neutron Tomography

Dec 01, 2022

Abstract: Neutron computed tomography (nCT) is a 3D characterization technique used to image the internal morphology or chemical composition of samples in biology and materials sciences. A typical workflow involves placing the sample in the path of a neutron beam, acquiring projection data at a predefined set of orientations, and processing the resulting data using an analytic reconstruction algorithm. Typical nCT scans require hours to days to complete and are then processed using conventional filtered back-projection (FBP), which performs poorly with sparse views or noisy data. Hence, the main approach to reducing overall acquisition time is an improved sampling strategy combined with advanced reconstruction methods such as model-based iterative reconstruction (MBIR). In this paper, we propose an adaptive orientation selection method in which an MBIR reconstruction on previously-acquired measurements is used to define an objective function on orientations that balances a data-fitting term promoting edge alignment and a regularization term promoting orientation diversity. Using simulated and experimental data, we demonstrate that our method produces high-quality reconstructions using significantly fewer total measurements than the conventional approach.

Multi-Pose Fusion for Sparse-View CT Reconstruction Using Consensus Equilibrium

Sep 15, 2022

Abstract: CT imaging works by reconstructing an object of interest from a collection of projections. Traditional methods such as filtered back-projection (FBP) work on projection images acquired around a fixed rotation axis. However, for some CT problems, it is desirable to perform a joint reconstruction from projection data acquired from multiple rotation axes. In this paper, we present Multi-Pose Fusion, a novel algorithm that performs a joint tomographic reconstruction from CT scans acquired from multiple poses of a single object, where each pose has a distinct rotation axis. Our approach uses multi-agent consensus equilibrium (MACE), an extension of plug-and-play, as a framework for integrating projection data from different poses. We apply our method to simulated data and demonstrate that Multi-Pose Fusion can achieve a better reconstruction result than single-pose reconstruction.

Ensemble Wrapper Subsampling for Deep Modulation Classification

May 10, 2020

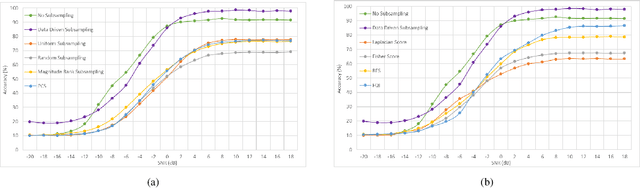

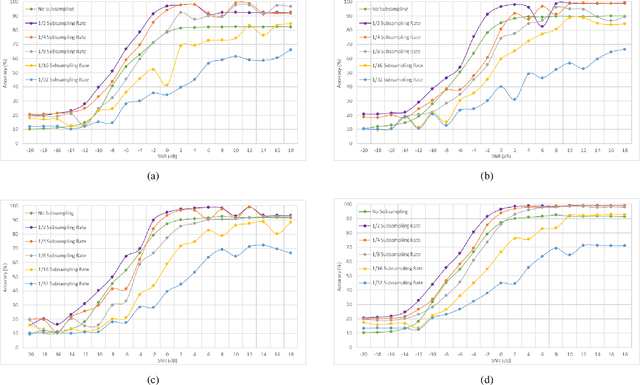

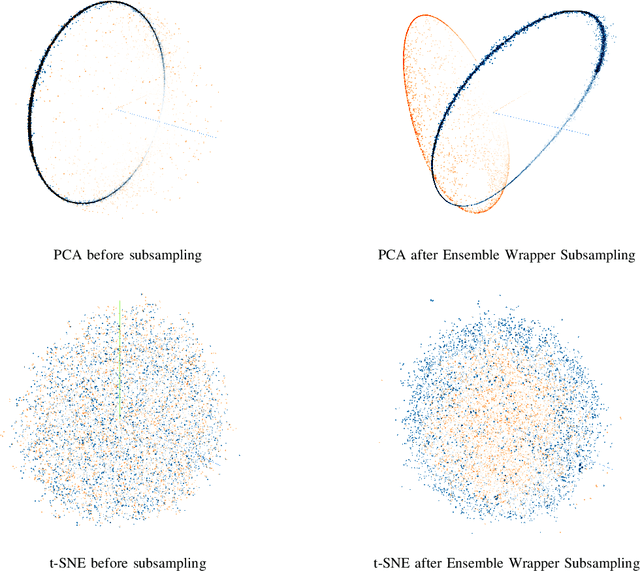

Abstract: Subsampling of received wireless signals is important for relaxing hardware requirements as well as the computational cost of signal processing algorithms that rely on the output samples. We propose a subsampling technique to facilitate the use of deep learning for automatic modulation classification in wireless communication systems. Unlike traditional approaches that rely on pre-designed strategies that are solely based on expert knowledge, the proposed data-driven subsampling strategy employs deep neural network architectures to simulate the effect of removing candidate combinations of samples from each training input vector, in a manner inspired by how wrapper feature selection models work. The subsampled data is then processed by another deep learning classifier that recognizes each of the 10 modulation types considered. We show that the proposed subsampling strategy not only yields a drastic reduction in the classifier training time, but can also improve the classification accuracy to higher levels than those reached before for the considered dataset. An important feature herein is exploiting the transferability property of deep neural networks to avoid retraining the wrapper models and to obtain, through an ensemble of wrappers, performance superior to that achievable with any single wrapper.

Fast Deep Learning for Automatic Modulation Classification

Jan 16, 2019

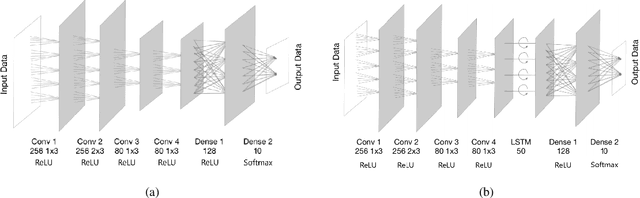

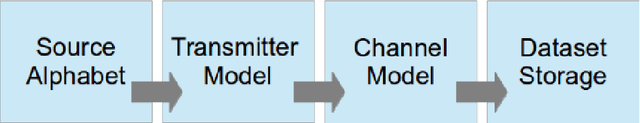

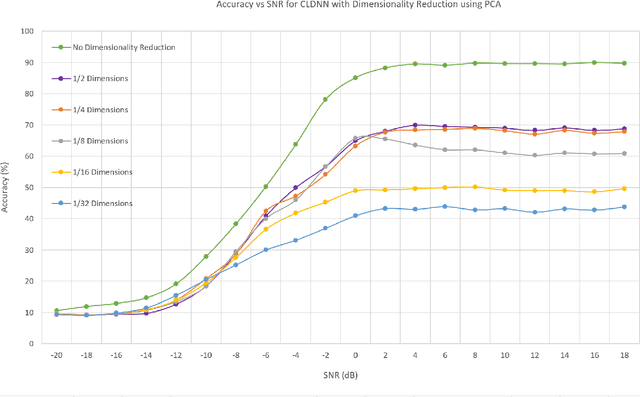

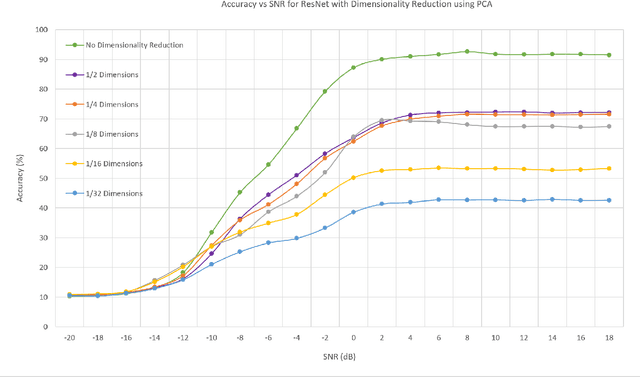

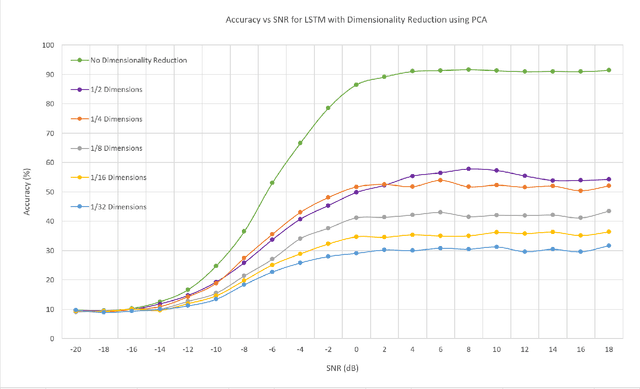

Abstract: In this work, we investigate the feasibility and effectiveness of employing deep learning algorithms for automatic recognition of the modulation type of received wireless communication signals from subsampled data. Recent work considered a GNU Radio-based data set that mimics the imperfections in a real wireless channel and uses 10 different modulation types. A Convolutional Neural Network (CNN) architecture was then developed and shown to achieve performance that exceeds that of expert-based approaches. Here, we continue this line of work and investigate deep neural network architectures that deliver high classification accuracy. We identify three architectures - namely, a Convolutional Long Short-term Deep Neural Network (CLDNN), a Long Short-Term Memory neural network (LSTM), and a deep Residual Network (ResNet) - that lead to typical classification accuracy values around 90% at high SNR. We then study algorithms to reduce the training time by minimizing the size of the training data set, while incurring a minimal loss in classification accuracy. To this end, we demonstrate the performance of Principal Component Analysis in significantly reducing the training time, while maintaining good performance at low SNR. We also investigate subsampling techniques that further reduce the training time, and pave the way for online classification at high SNR. Finally, we identify representative SNR values for training each of the candidate architectures, and consequently, realize drastic reductions of the training time, with negligible loss in classification accuracy.

Deep Neural Network Architectures for Modulation Classification

Jan 05, 2018

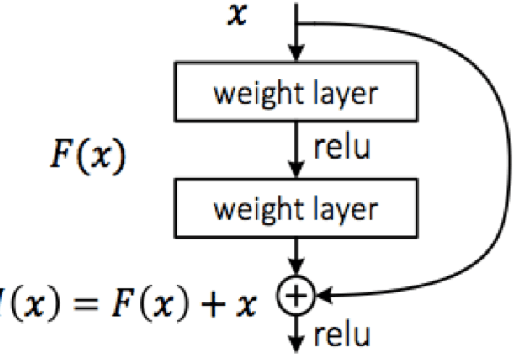

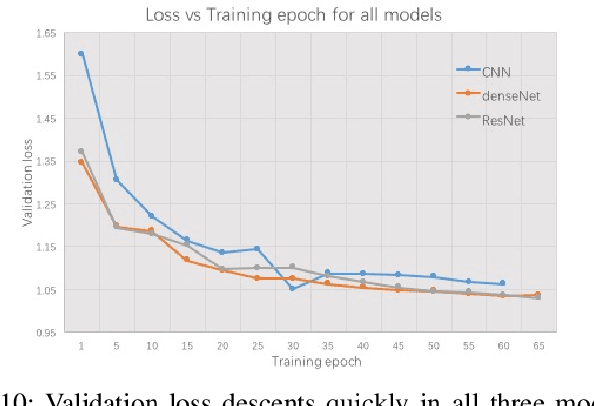

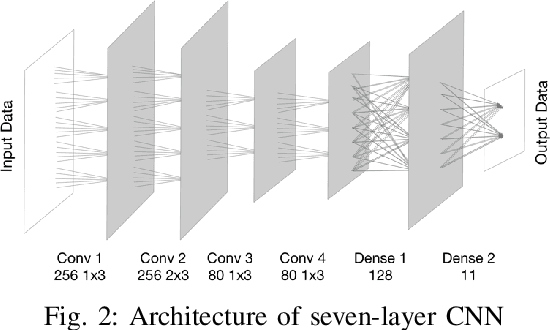

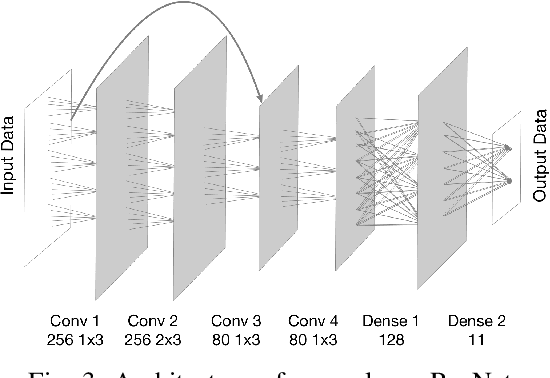

Abstract: In this work, we investigate the value of employing deep learning for the task of wireless signal modulation recognition. Recently, a framework was introduced in [1] by generating a dataset using GNU Radio that mimics the imperfections in a real wireless channel and uses 10 different modulation types. Further, a convolutional neural network (CNN) architecture was developed and shown to deliver performance that exceeds that of expert-based approaches. Here, we follow the framework of [1] and find deep neural network architectures that deliver higher accuracy than the state of the art. We tested the architecture of [1] and found it to achieve an accuracy of approximately 75% in correctly recognizing the modulation type. We first tune the CNN architecture of [1] and find a design with four convolutional layers and two dense layers that gives an accuracy of approximately 83.8% at high SNR. We then develop architectures based on the recently introduced ideas of Residual Networks (ResNet [2]) and Densely Connected Networks (DenseNet [3]) to achieve high SNR accuracies of approximately 83.5% and 86.6%, respectively. Finally, we introduce a Convolutional Long Short-term Deep Neural Network (CLDNN [4]) to achieve an accuracy of approximately 88.5% at high SNR.
