Giovanni Montana

Action-Free Offline-to-Online RL via Discretised State Policies

Jan 31, 2026

Abstract:Most existing offline RL methods presume the availability of action labels within the dataset, but in many practical scenarios, actions may be missing due to privacy, storage, or sensor limitations. We formalise the setting of action-free offline-to-online RL, where agents must learn from datasets consisting solely of $(s,r,s')$ tuples and later leverage this knowledge during online interaction. To address this challenge, we propose learning state policies that recommend desirable next-state transitions rather than actions. Our contributions are twofold. First, we introduce a simple yet novel state discretisation transformation and propose Offline State-Only DecQN, a value-based algorithm designed to pre-train state policies from action-free data. The algorithm integrates the transformation to scale efficiently to high-dimensional problems while avoiding the instability and overfitting associated with continuous state prediction. Second, we propose a novel mechanism for guided online learning that leverages these pre-trained state policies to accelerate the learning of online agents. Together, these components establish a scalable and practical framework for leveraging action-free datasets to accelerate online RL. Empirical results across diverse benchmarks demonstrate that our approach improves convergence speed and asymptotic performance, while analyses reveal that discretisation and regularisation are critical to its effectiveness.
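As a rough illustration of what discretising a state transition can look like, the sketch below labels each state dimension by the direction of its change between $s$ and $s'$. The function name `discretise_transition`, the threshold `eps`, and the three-bin scheme are assumptions made for illustration only; the abstract does not specify the transformation used in the paper.

```python
from typing import List, Sequence


def discretise_transition(
    s: Sequence[float], s_next: Sequence[float], eps: float = 0.05
) -> List[int]:
    """Label each state dimension by the direction of its change.

    Returns -1 (decrease), 0 (roughly unchanged) or +1 (increase) per
    dimension. The three-bin scheme and `eps` are illustrative assumptions.
    """
    if len(s) != len(s_next):
        raise ValueError("s and s_next must have the same length")
    labels = []
    for x, y in zip(s, s_next):
        delta = y - x
        if delta > eps:
            labels.append(1)
        elif delta < -eps:
            labels.append(-1)
        else:
            labels.append(0)
    return labels


# A 3-dimensional transition: first dimension rises, second stays, third falls.
print(discretise_transition([0.0, 1.0, 2.0], [0.5, 1.0, 1.0]))  # [1, 0, -1]
```

Under this assumed scheme, a state policy would predict one discrete label per dimension instead of regressing a continuous next state.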

Partial Action Replacement: Tackling Distribution Shift in Offline MARL

Nov 10, 2025

Abstract:Offline multi-agent reinforcement learning (MARL) is severely hampered by the challenge of evaluating out-of-distribution (OOD) joint actions. Our core finding is that when the behavior policy is factorized - a common scenario where agents act fully or partially independently during data collection - a strategy of partial action replacement (PAR) can significantly mitigate this challenge. PAR updates the actions of a single agent or a subset of agents while the others remain fixed to the behavioral data, reducing distribution shift compared to full joint-action updates. Based on this insight, we develop Soft-Partial Conservative Q-Learning (SPaCQL), which uses PAR to mitigate the OOD issue and dynamically weights different PAR strategies based on the uncertainty of value estimation. We provide a rigorous theoretical foundation for this approach, proving that under factorized behavior policies, the induced distribution shift scales linearly with the number of deviating agents rather than exponentially with the joint-action space. This yields a provably tighter value error bound for this important class of offline MARL problems. Our theoretical results also indicate that SPaCQL adaptively addresses distribution shift using uncertainty-informed weights. Our empirical results demonstrate that SPaCQL enables more effective policy learning and clearly outperforms baseline algorithms when the offline dataset exhibits the independence structure.
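The replacement step described above can be sketched as follows: only the agents in a chosen subset take the learned policy's action, while every other agent keeps the action recorded in the dataset. The function name `partial_action_replacement` and its arguments are hypothetical; this is a minimal illustration of the idea, not the SPaCQL implementation.

```python
from typing import Iterable, List, Sequence, TypeVar

A = TypeVar("A")


def partial_action_replacement(
    dataset_actions: Sequence[A],
    policy_actions: Sequence[A],
    deviating_agents: Iterable[int],
) -> List[A]:
    """Build a joint action where only `deviating_agents` follow the policy.

    All other agents keep their behavioural (dataset) action, so the joint
    action stays close to the data distribution.
    """
    if len(dataset_actions) != len(policy_actions):
        raise ValueError("both joint actions must cover the same agents")
    deviating = set(deviating_agents)
    return [
        policy_actions[i] if i in deviating else dataset_actions[i]
        for i in range(len(dataset_actions))
    ]


# Three agents; only agent 1 deviates from the logged joint action.
print(partial_action_replacement(["a0", "a1", "a2"], ["p0", "p1", "p2"], [1]))
```

Passing every agent index recovers the full joint-action update, and passing an empty subset recovers the logged joint action.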

Uncertainty-Based Smooth Policy Regularisation for Reinforcement Learning with Few Demonstrations

Sep 19, 2025

Abstract:In reinforcement learning with sparse rewards, demonstrations can accelerate learning, but determining when to imitate them remains challenging. We propose Smooth Policy Regularisation from Demonstrations (SPReD), a framework that addresses the fundamental question: when should an agent imitate a demonstration versus follow its own policy? SPReD uses ensemble methods to explicitly model Q-value distributions for both demonstration and policy actions, quantifying uncertainty for comparisons. We develop two complementary uncertainty-aware methods: a probabilistic approach estimating the likelihood of demonstration superiority, and an advantage-based approach scaling imitation by statistical significance. Unlike prevailing methods (e.g. Q-filter) that make binary imitation decisions, SPReD applies continuous, uncertainty-proportional regularisation weights, reducing gradient variance during training. Despite its computational simplicity, SPReD achieves remarkable gains in experiments across eight robotics tasks, outperforming existing approaches by up to a factor of 14 in complex tasks while maintaining robustness to demonstration quality and quantity. Our code is available at https://github.com/YujieZhu7/SPReD.
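One way to picture a continuous, probability-based imitation weight is sketched below. It assumes that the ensemble Q-values for the demonstration action and the policy action are independent and approximately Gaussian; that assumption, and the function name `prob_demo_better`, are illustrative and not taken from the abstract or the linked repository.

```python
import math
from statistics import mean, variance
from typing import Sequence


def prob_demo_better(q_demo: Sequence[float], q_policy: Sequence[float]) -> float:
    """Estimate P(Q_demo > Q_policy) from two ensembles of Q-value estimates.

    Assumes (for illustration) independent Gaussian estimates, so the
    difference is Gaussian with the summed sample variance.
    """
    mu_diff = mean(q_demo) - mean(q_policy)
    var_diff = variance(q_demo) + variance(q_policy)
    if var_diff == 0.0:
        # No ensemble disagreement: fall back to a hard comparison.
        return 1.0 if mu_diff > 0 else (0.5 if mu_diff == 0 else 0.0)
    z = mu_diff / math.sqrt(var_diff)
    # Standard normal CDF evaluated at z.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Identical ensembles give no preference; a clear gap gives a weight near 1.
print(prob_demo_better([1.0, 1.2, 0.8], [1.0, 1.2, 0.8]))
print(prob_demo_better([5.0, 5.1, 4.9], [1.0, 1.1, 0.9]))
```

A weight of this kind varies smoothly between 0 and 1, in contrast to a binary filter that imitates only when one point estimate exceeds the other.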

Efficient Verified Machine Unlearning For Distillation

Mar 28, 2025

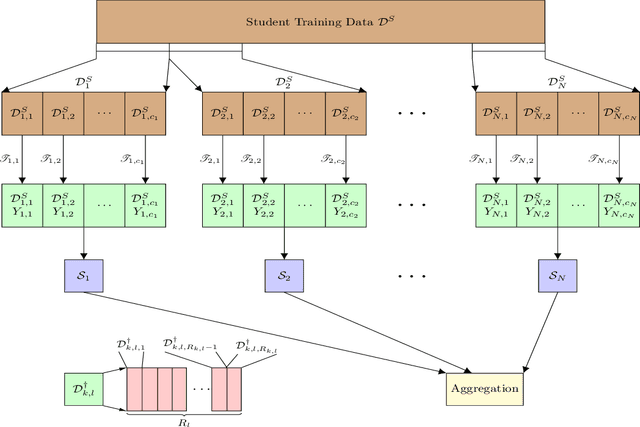

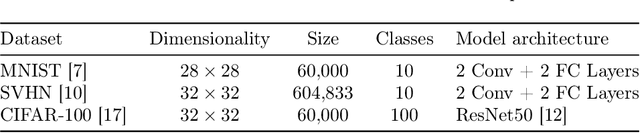

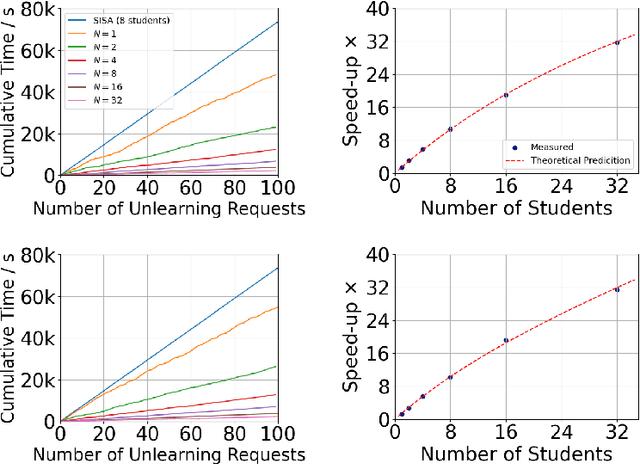

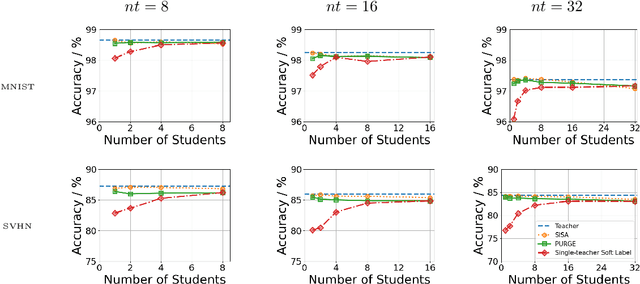

Abstract:Growing data privacy demands, driven by regulations like GDPR and CCPA, require machine unlearning methods capable of swiftly removing the influence of specific training points. Although verified approaches like SISA, using data slicing and checkpointing, achieve efficient unlearning for single models by reverting to intermediate states, these methods struggle in teacher-student knowledge distillation settings. Unlearning in the teacher typically forces costly, complete student retraining due to pervasive information propagation during distillation. Our primary contribution is PURGE (Partitioned Unlearning with Retraining Guarantee for Ensembles), a novel framework integrating verified unlearning with distillation. We introduce constituent mapping and an incremental multi-teacher strategy that partitions the distillation process, confines each teacher constituent's impact to distinct student data subsets, and crucially maintains data isolation. The PURGE framework substantially reduces retraining overhead, requiring only partial student updates when teacher-side unlearning occurs. We provide both theoretical analysis, quantifying significant speed-ups in the unlearning process, and empirical validation on multiple datasets, demonstrating that PURGE achieves these efficiency gains while maintaining student accuracy comparable to standard baselines.

Evaluation-Time Policy Switching for Offline Reinforcement Learning

Mar 15, 2025

Abstract:Offline reinforcement learning (RL) looks at learning how to optimally solve tasks using a fixed dataset of interactions from the environment. Many off-policy algorithms developed for online learning struggle in the offline setting as they tend to over-estimate the value of out-of-distribution actions. Existing offline RL algorithms adapt off-policy algorithms, employing techniques such as constraining the policy or modifying the value function to achieve good performance on individual datasets, but struggle to adapt to different tasks or datasets of different qualities without tuning hyper-parameters. We introduce a policy switching technique that dynamically combines the behaviour of a pure off-policy RL agent, for improving behaviour, and a behavioural cloning (BC) agent, for staying close to the data. We achieve this by using a combination of epistemic uncertainty, quantified by our RL model, and a metric for aleatoric uncertainty extracted from the dataset. We show empirically that our policy switching technique can not only outperform the individual algorithms used in the switching process but also compete with state-of-the-art methods on numerous benchmarks. Our use of epistemic uncertainty for policy switching also allows us to naturally extend our method to the domain of offline-to-online fine-tuning, allowing our model to adapt quickly and safely from online data, either matching or exceeding the performance of current methods that typically require additional modification or hyper-parameter fine-tuning.

Dynamic Pricing in High-Speed Railways Using Multi-Agent Reinforcement Learning

Jan 14, 2025

Abstract:This paper addresses a critical challenge in the high-speed passenger railway industry: designing effective dynamic pricing strategies in the context of competing and cooperating operators. To address this, a multi-agent reinforcement learning (MARL) framework based on a non-zero-sum Markov game is proposed, incorporating random utility models to capture passenger decision making. Unlike prior studies in areas such as energy, airlines, and mobile networks, dynamic pricing for railway systems using deep reinforcement learning has received limited attention. A key contribution of this paper is a parametrisable and versatile reinforcement learning simulator designed to model a variety of railway network configurations and demand patterns while enabling realistic, microscopic modelling of user behaviour, called RailPricing-RL. This environment supports the proposed MARL framework, which models heterogeneous agents competing to maximise individual profits while fostering cooperative behaviour to synchronise connecting services. Experimental results validate the framework, demonstrating how user preferences affect MARL performance and how pricing policies influence passenger choices, utility, and overall system dynamics. This study provides a foundation for advancing dynamic pricing strategies in railway systems, aligning profitability with system-wide efficiency, and supporting future research on optimising pricing policies.
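To make the notion of a random utility model concrete, the sketch below computes choice probabilities under the multinomial logit model, one widely used member of that family. The abstract does not say which random utility model RailPricing-RL uses, so the model choice, the function name `choice_probabilities`, and the example utilities are all assumptions.

```python
import math
from typing import List, Sequence


def choice_probabilities(utilities: Sequence[float]) -> List[float]:
    """Multinomial logit: P(option i) is proportional to exp(utility_i).

    The maximum utility is subtracted before exponentiating, which leaves
    the probabilities unchanged but avoids numerical overflow.
    """
    if not utilities:
        raise ValueError("at least one option is required")
    shift = max(utilities)
    weights = [math.exp(u - shift) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical utilities for three services (e.g. comfort minus price).
probs = choice_probabilities([1.0, 0.5, -0.2])
print([round(p, 3) for p in probs])
```

In a pricing setting of this kind, raising the price of one service lowers its utility and shifts choice probability towards the alternatives.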

Investigating Relational State Abstraction in Collaborative MARL

Dec 19, 2024

Abstract:This paper explores the impact of relational state abstraction on sample efficiency and performance in collaborative Multi-Agent Reinforcement Learning. The proposed abstraction is based on spatial relationships in environments where direct communication between agents is not allowed, leveraging the ubiquity of spatial reasoning in real-world multi-agent scenarios. We introduce MARC (Multi-Agent Relational Critic), a simple yet effective critic architecture incorporating spatial relational inductive biases by transforming the state into a spatial graph and processing it through a relational graph neural network. The performance of MARC is evaluated across six collaborative tasks, including a novel environment with heterogeneous agents. We conduct a comprehensive empirical analysis, comparing MARC against state-of-the-art MARL baselines, demonstrating improvements in both sample efficiency and asymptotic performance, as well as its potential for generalization. Our findings suggest that a minimal integration of spatial relational inductive biases as abstraction can yield substantial benefits without requiring complex designs or task-specific engineering. This work provides insights into the potential of relational state abstraction to address sample efficiency, a key challenge in MARL, offering a promising direction for developing more efficient algorithms in spatially complex environments.

Achieving Collective Welfare in Multi-Agent Reinforcement Learning via Suggestion Sharing

Dec 16, 2024

Abstract:In human society, the conflict between self-interest and collective well-being often obstructs efforts to achieve shared welfare. Related concepts like the Tragedy of the Commons and Social Dilemmas frequently manifest in our daily lives. As artificial agents increasingly serve as autonomous proxies for humans, we propose using multi-agent reinforcement learning (MARL) to address this issue - learning policies to maximise collective returns even when individual agents' interests conflict with the collective one. Traditional MARL solutions involve sharing rewards, values, and policies or designing intrinsic rewards to encourage agents to learn collectively optimal policies. We introduce a novel MARL approach based on Suggestion Sharing (SS), where agents exchange only action suggestions. This method enables effective cooperation without the need to design intrinsic rewards, achieving strong performance while revealing less private information compared to sharing rewards, values, or policies. Our theoretical analysis establishes a bound on the discrepancy between collective and individual objectives, demonstrating how sharing suggestions can align agents' behaviours with the collective objective. Experimental results demonstrate that SS performs competitively with baselines that rely on value or policy sharing or intrinsic rewards.

Learning on One Mode: Addressing Multi-Modality in Offline Reinforcement Learning

Dec 04, 2024

Abstract:Offline reinforcement learning (RL) seeks to learn optimal policies from static datasets without interacting with the environment. A common challenge is handling multi-modal action distributions, where multiple behaviours are represented in the data. Existing methods often assume unimodal behaviour policies, leading to suboptimal performance when this assumption is violated. We propose Weighted Imitation Learning on One Mode (LOM), a novel approach that focuses on learning from a single, promising mode of the behaviour policy. By using a Gaussian mixture model to identify modes and selecting the best mode based on expected returns, LOM avoids the pitfalls of averaging over conflicting actions. Theoretically, we show that LOM improves performance while maintaining simplicity in policy learning. Empirically, LOM outperforms existing methods on standard D4RL benchmarks and demonstrates its effectiveness in complex, multi-modal scenarios.

An Investigation of Offline Reinforcement Learning in Factorisable Action Spaces

Nov 17, 2024

Abstract:Expanding reinforcement learning (RL) to offline domains generates promising prospects, particularly in sectors where data collection poses substantial challenges or risks. Pivotal to the success of transferring RL offline is mitigating overestimation bias in value estimates for state-action pairs absent from data. Whilst numerous approaches have been proposed in recent years, these tend to focus primarily on continuous or small-scale discrete action spaces. Factorised discrete action spaces, on the other hand, have received relatively little attention, despite many real-world problems naturally having factorisable actions. In this work, we undertake a formative investigation into offline reinforcement learning in factorisable action spaces. Using value-decomposition as formulated in DecQN as a foundation, we present the case for a factorised approach and conduct an extensive empirical evaluation of several offline techniques adapted to the factorised setting. In the absence of established benchmarks, we introduce a suite of our own comprising datasets of varying quality and task complexity. Advocating for reproducible research and innovation, we make all datasets available for public use alongside our code base.
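The value decomposition mentioned above can be illustrated with a small sketch. It assumes the mean-of-utilities form usually associated with DecQN, in which the Q-value of a factorised action is the average of one utility per action dimension, so the greedy action can be found independently in each dimension. The function names and the example numbers are hypothetical, and the per-dimension utilities would in practice come from a learned network conditioned on the state.

```python
from typing import List, Sequence


def decomposed_q(utilities: Sequence[Sequence[float]], action: Sequence[int]) -> float:
    """Q-value of a factorised action as the mean of per-dimension utilities.

    `utilities[i][k]` is the utility of choosing sub-action k in dimension i
    (for one fixed state).
    """
    if len(utilities) != len(action):
        raise ValueError("one sub-action is required per action dimension")
    return sum(u[a] for u, a in zip(utilities, action)) / len(utilities)


def greedy_action(utilities: Sequence[Sequence[float]]) -> List[int]:
    """Maximise each dimension separately; this also maximises the mean."""
    return [max(range(len(u)), key=u.__getitem__) for u in utilities]


# Two action dimensions with 2 and 3 sub-actions respectively.
utilities = [[0.1, 0.9], [0.5, 0.2, 0.3]]
best = greedy_action(utilities)
print(best, decomposed_q(utilities, best))
```

The practical appeal of this form is that the number of outputs grows with the sum of the sub-action counts rather than their product.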
