Jeremie Houssineau

Action-Free Offline-to-Online RL via Discretised State Policies

Jan 31, 2026

Abstract:Most existing offline RL methods presume the availability of action labels within the dataset, but in many practical scenarios, actions may be missing due to privacy, storage, or sensor limitations. We formalise the setting of action-free offline-to-online RL, where agents must learn from datasets consisting solely of $(s,r,s')$ tuples and later leverage this knowledge during online interaction. To address this challenge, we propose learning state policies that recommend desirable next-state transitions rather than actions. Our contributions are twofold. First, we introduce a simple yet novel state discretisation transformation and propose Offline State-Only DecQN, a value-based algorithm designed to pre-train state policies from action-free data. Offline State-Only DecQN integrates the transformation to scale efficiently to high-dimensional problems while avoiding the instability and overfitting associated with continuous state prediction. Second, we propose a novel mechanism for guided online learning that leverages these pre-trained state policies to accelerate the learning of online agents. Together, these components establish a scalable and practical framework for leveraging action-free datasets to accelerate online RL. Empirical results across diverse benchmarks demonstrate that our approach improves convergence speed and asymptotic performance, while analyses reveal that discretisation and regularisation are critical to its effectiveness.

Evaluation-Time Policy Switching for Offline Reinforcement Learning

Mar 15, 2025

Abstract:Offline reinforcement learning (RL) looks at learning how to optimally solve tasks using a fixed dataset of interactions from the environment. Many off-policy algorithms developed for online learning struggle in the offline setting as they tend to over-estimate the behaviour of out-of-distribution actions. Existing offline RL algorithms adapt off-policy algorithms, employing techniques such as constraining the policy or modifying the value function to achieve good performance on individual datasets, but struggle to adapt to different tasks or datasets of different qualities without tuning hyper-parameters. We introduce a policy switching technique that dynamically combines the behaviour of a pure off-policy RL agent, for improving behaviour, and a behavioural cloning (BC) agent, for staying close to the data. We achieve this by using a combination of epistemic uncertainty, quantified by our RL model, and a metric for aleatoric uncertainty extracted from the dataset. We show empirically that our policy switching technique can not only outperform the individual algorithms used in the switching process but also compete with state-of-the-art methods on numerous benchmarks. Our use of epistemic uncertainty for policy switching also allows us to naturally extend our method to the domain of offline-to-online fine-tuning, allowing our model to adapt quickly and safely from online data, either matching or exceeding the performance of current methods that typically require additional modification or hyper-parameter fine-tuning.

Investigating Relational State Abstraction in Collaborative MARL

Dec 19, 2024

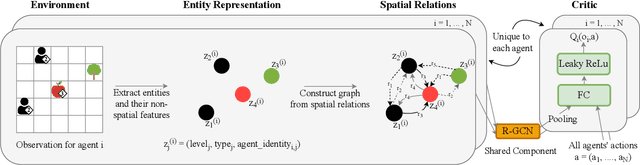

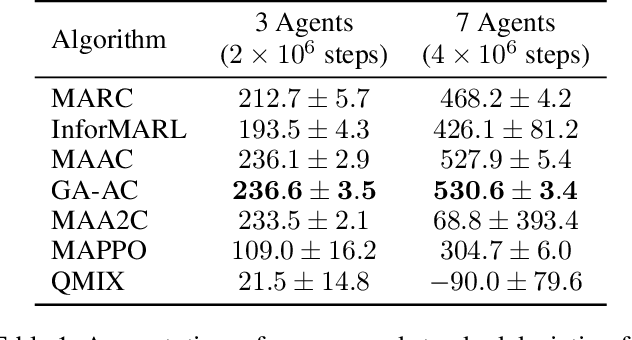

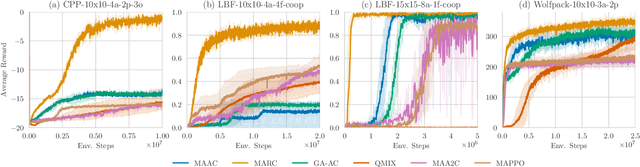

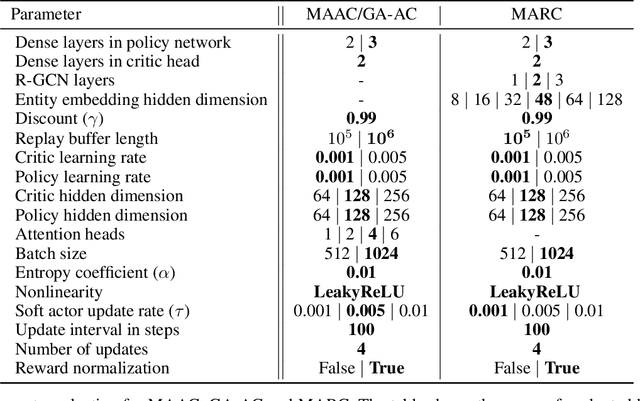

Abstract:This paper explores the impact of relational state abstraction on sample efficiency and performance in collaborative Multi-Agent Reinforcement Learning. The proposed abstraction is based on spatial relationships in environments where direct communication between agents is not allowed, leveraging the ubiquity of spatial reasoning in real-world multi-agent scenarios. We introduce MARC (Multi-Agent Relational Critic), a simple yet effective critic architecture incorporating spatial relational inductive biases by transforming the state into a spatial graph and processing it through a relational graph neural network. The performance of MARC is evaluated across six collaborative tasks, including a novel environment with heterogeneous agents. We conduct a comprehensive empirical analysis, comparing MARC against state-of-the-art MARL baselines, demonstrating improvements in both sample efficiency and asymptotic performance, as well as its potential for generalization. Our findings suggest that a minimal integration of spatial relational inductive biases as abstraction can yield substantial benefits without requiring complex designs or task-specific engineering. This work provides insights into the potential of relational state abstraction to address sample efficiency, a key challenge in MARL, offering a promising direction for developing more efficient algorithms in spatially complex environments.

Improving Active Learning with a Bayesian Representation of Epistemic Uncertainty

Dec 11, 2024

Abstract:A popular strategy for active learning is to specifically target a reduction in epistemic uncertainty, since aleatoric uncertainty is often considered as being intrinsic to the system of interest and therefore not reducible. Yet, distinguishing these two types of uncertainty remains challenging and there is no single strategy that consistently outperforms the others. We propose to use a particular combination of probability and possibility theories, with the aim of using the latter to specifically represent epistemic uncertainty, and we show how this combination leads to new active learning strategies that have desirable properties. In order to demonstrate the efficiency of these strategies in non-trivial settings, we introduce the notion of a possibilistic Gaussian process (GP) and consider GP-based multiclass and binary classification problems, for which the proposed methods display a strong performance for both simulated and real datasets.

Redesigning the ensemble Kalman filter with a dedicated model of epistemic uncertainty

Nov 28, 2024

Abstract:The problem of incorporating information from observations received serially in time is widespread in the field of uncertainty quantification. Within a probabilistic framework, such problems can be addressed using standard filtering techniques. However, in many real-world problems, some (or all) of the uncertainty is epistemic, arising from a lack of knowledge, and is difficult to model probabilistically. This paper introduces a possibilistic ensemble Kalman filter designed for this setting and characterizes some of its properties. Using possibility theory to describe epistemic uncertainty is appealing from a philosophical perspective, and it is easy to justify certain heuristics often employed in standard ensemble Kalman filters as principled approaches to capturing uncertainty within it. The possibilistic approach motivates a robust mechanism for characterizing uncertainty which shows good performance with small sample sizes, and can outperform standard ensemble Kalman filters at a given sample size, even when dealing with genuinely aleatoric uncertainty.

Mitigating Relative Over-Generalization in Multi-Agent Reinforcement Learning

Nov 17, 2024

Abstract:In decentralized multi-agent reinforcement learning, agents learning in isolation can lead to relative over-generalization (RO), where optimal joint actions are undervalued in favor of suboptimal ones. This hinders effective coordination in cooperative tasks, as agents tend to choose actions that are individually rational but collectively suboptimal. To address this issue, we introduce MaxMax Q-Learning (MMQ), which employs an iterative process of sampling and evaluating potential next states, selecting those with maximal Q-values for learning. This approach refines approximations of ideal state transitions, aligning more closely with the optimal joint policy of collaborating agents. We provide theoretical analysis supporting MMQ's potential and present empirical evaluations across various environments susceptible to RO. Our results demonstrate that MMQ frequently outperforms existing baselines, exhibiting enhanced convergence and sample efficiency.

A possibilistic framework for multi-target multi-sensor fusion

Sep 25, 2022

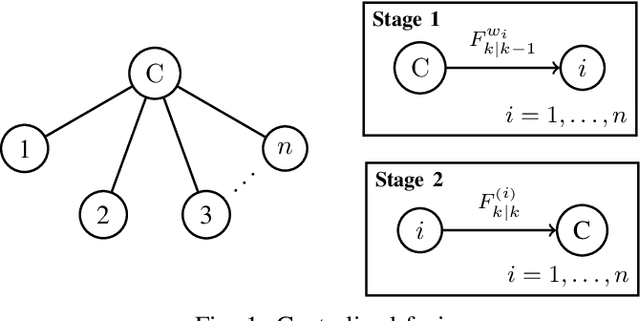

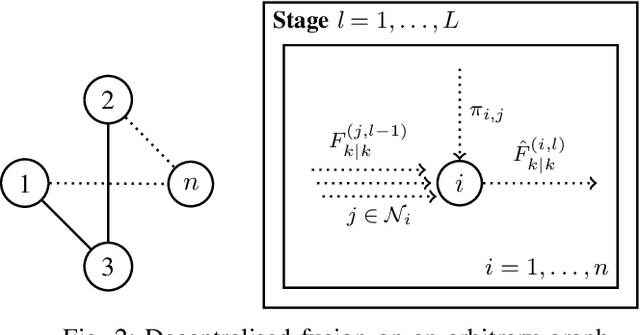

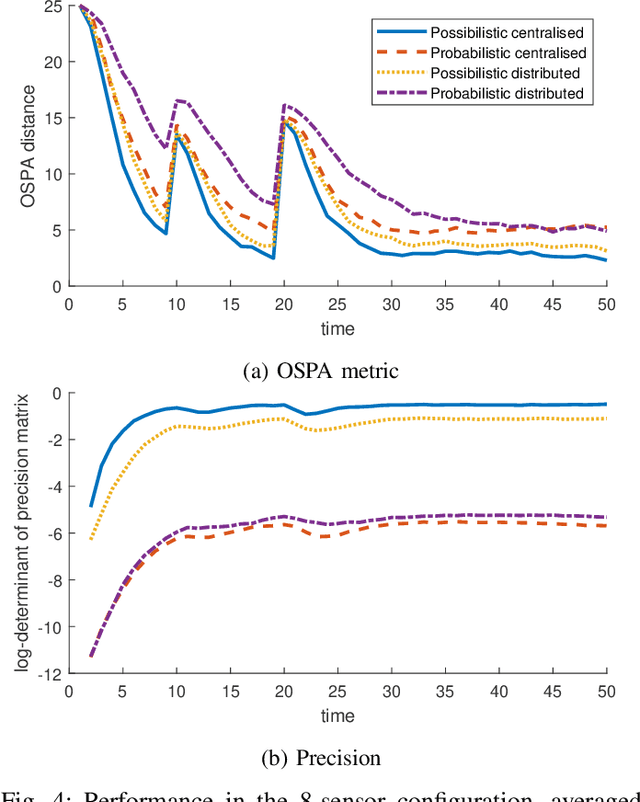

Abstract:Fusing and sharing information from multiple sensors over a network is a challenging task, especially in the context of multi-target tracking. Part of this challenge arises from the absence of a foundational rule for fusing probability distributions, with various approaches stemming from different principles. Yet, when expressing multi-target tracking algorithms within the framework of possibility theory, one specific fusion rule appears to be particularly natural and useful. In this article, this fusion rule is applied to both centralised and decentralised fusion, based on the possibilistic analogue of the probability hypothesis density filter. We then show that the proposed approach outperforms its probabilistic counterpart on simulated data.

A unified approach for multi-object triangulation, tracking and camera calibration

Oct 09, 2014

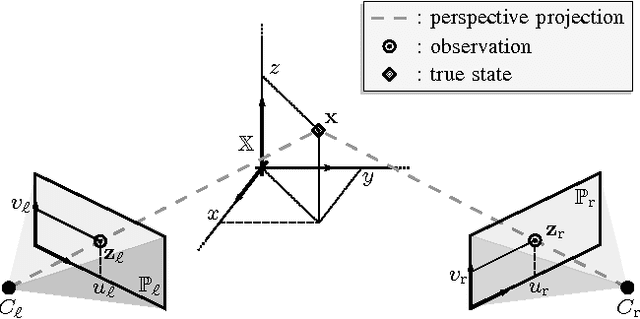

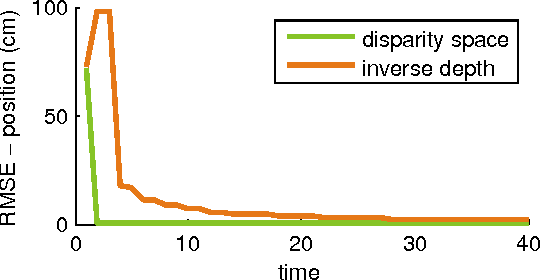

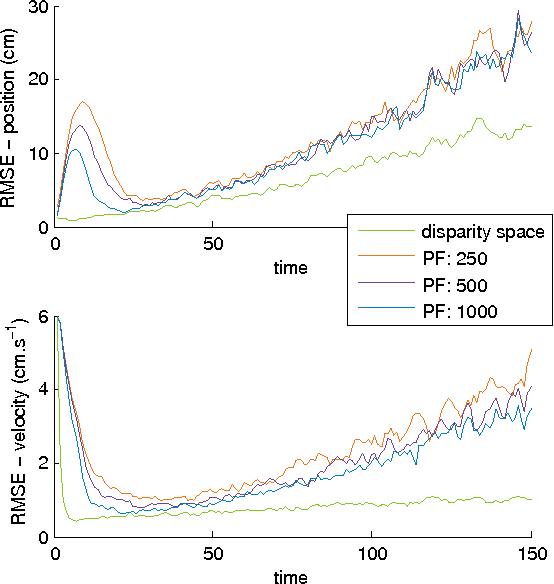

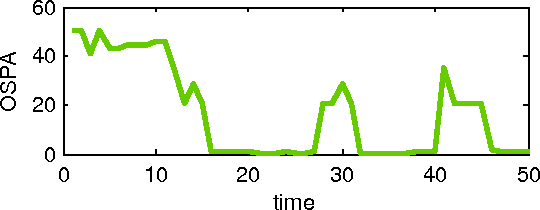

Abstract:Object triangulation, 3-D object tracking, feature correspondence, and camera calibration are key problems for estimation from camera networks. This paper addresses these problems within a unified Bayesian framework for joint multi-object tracking and sensor registration. Given that using standard filtering approaches for state estimation from cameras is problematic, an alternative parametrisation is exploited, called disparity space. The disparity space-based approach for triangulation and object tracking is shown to be more effective than non-linear versions of the Kalman filter and particle filtering for non-rectified cameras. The approach for feature correspondence is based on the Probability Hypothesis Density (PHD) filter, and hence inherits the ability to update without explicit measurement association, to initiate new targets, and to discriminate between target and clutter. The PHD filtering approach then forms the basis of a camera calibration method from static or moving objects. Results are shown on simulated data.
