Daniel Clark

A unified approach for multi-object triangulation, tracking and camera calibration

Oct 09, 2014

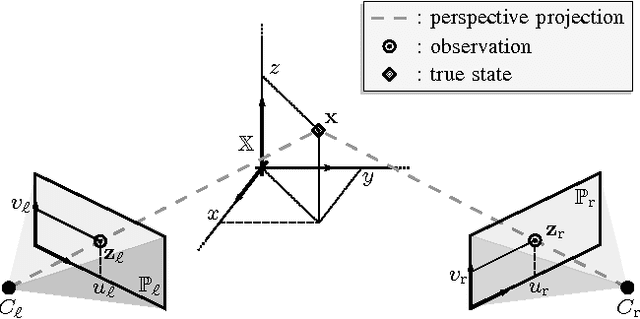

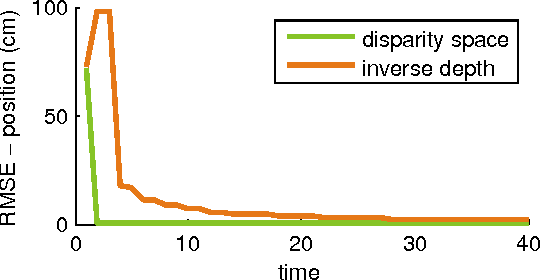

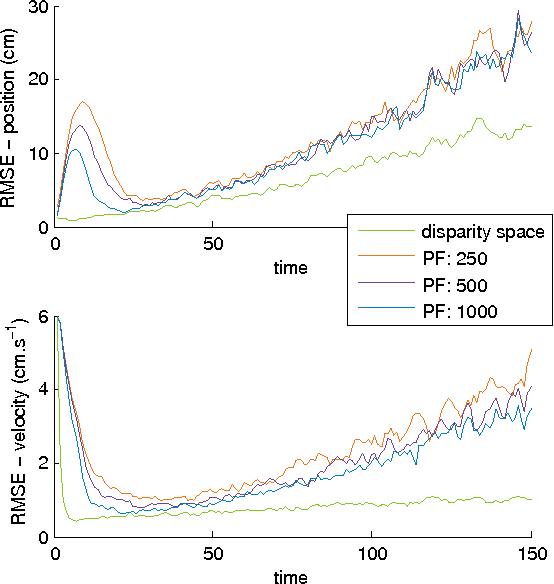

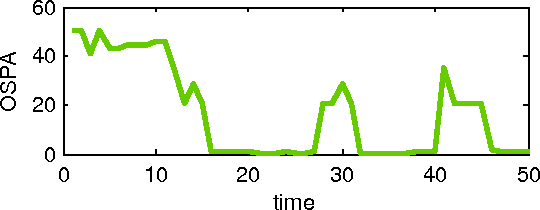

Abstract: Object triangulation, 3-D object tracking, feature correspondence, and camera calibration are key problems for estimation from camera networks. This paper addresses these problems within a unified Bayesian framework for joint multi-object tracking and sensor registration. Because standard filtering approaches are problematic for state estimation from cameras, an alternative parametrisation, called disparity space, is exploited. The disparity space-based approach for triangulation and object tracking is shown to be more effective than non-linear versions of the Kalman filter and particle filtering for non-rectified cameras. The approach for feature correspondence is based on the Probability Hypothesis Density (PHD) filter, and hence inherits the ability to update without explicit measurement association, to initiate new targets, and to discriminate between targets and clutter. The PHD filtering approach then forms the basis of a camera calibration method from static or moving objects. Results are shown on simulated data.
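The abstract does not define disparity space, so the following is only a minimal sketch of the usual textbook definition for an idealised, rectified pinhole stereo pair; the paper's own formulation, which targets non-rectified cameras, may differ. In this simplified setting a 3-D point (X, Y, Z) in the left-camera frame maps to (u, v, d), where d is the horizontal disparity between the two images, and both image measurements are linear in (u, v, d). The function names and the parameters `focal_px` and `baseline_m` are assumptions made for illustration and do not come from the paper.

```python
def to_disparity_space(point, focal_px, baseline_m):
    """Map a 3-D point (X, Y, Z) in the left-camera frame to (u, v, d).

    Assumes an idealised rectified stereo pair with shared focal length
    (in pixels) and a purely horizontal baseline (in metres). Image
    coordinates are taken relative to the principal point.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    u = focal_px * x / z           # horizontal image coordinate
    v = focal_px * y / z           # vertical image coordinate
    d = focal_px * baseline_m / z  # disparity, inversely proportional to depth
    return (u, v, d)


def from_disparity_space(uvd, focal_px, baseline_m):
    """Invert the mapping: recover (X, Y, Z) from (u, v, d)."""
    u, v, d = uvd
    if d <= 0:
        raise ValueError("disparity must be positive")
    z = focal_px * baseline_m / d
    return (u * z / focal_px, v * z / focal_px, z)
```

Under these assumptions, the left image observes (u, v) and the right image observes (u - d, v), so the measurement model is linear in the disparity-space state, while the non-linearity is moved into the conversion back to Cartesian coordinates.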
