Gianmarco Genalti

Data-Dependent Regret Bounds for Constrained MABs

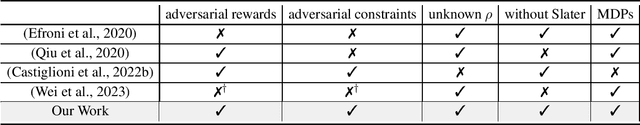

May 26, 2025

Abstract: This paper initiates the study of data-dependent regret bounds in constrained MAB settings. These bounds depend on the sequence of losses that characterize the problem instance. Thus, they can be much smaller than classical $\widetilde{\mathcal{O}}(\sqrt{T})$ regret bounds, while being equivalent to them in the worst case. Despite this, data-dependent regret bounds have been completely overlooked in constrained MAB settings. The goal of this paper is to answer the following question: Can data-dependent regret bounds be derived in the presence of constraints? We answer this question affirmatively in constrained MABs with adversarial losses and stochastic constraints. Specifically, our main focus is on the most challenging and natural settings with hard constraints, where the learner must ensure that the constraints are always satisfied with high probability. We design an algorithm with a regret bound consisting of two data-dependent terms. The first term captures the difficulty of satisfying the constraints, while the second one encodes the complexity of learning independently of the presence of constraints. We also prove a lower bound showing that these two terms are not artifacts of our specific approach and analysis, but rather the fundamental components that inherently characterize the complexities of the problem. Finally, in designing our algorithm, we also derive some novel results in the related (and easier) soft constraints settings, which may be of independent interest.

Catoni-Style Change Point Detection for Regret Minimization in Non-Stationary Heavy-Tailed Bandits

May 26, 2025

Abstract: Regret minimization in stochastic non-stationary bandits has gained popularity over the last decade, as it can model a broad class of real-world problems, from advertising to recommendation systems. Existing literature relies on various assumptions about the reward-generating process, such as Bernoulli or subgaussian rewards. However, in settings such as finance and telecommunications, heavy-tailed distributions naturally arise. In this work, we tackle the heavy-tailed piecewise-stationary bandit problem. Heavy-tailed bandits, introduced by Bubeck et al. (2013), operate on the minimal assumption that the absolute centered moments of maximum order $1+\epsilon$ are finite and uniformly bounded by a constant $v<+\infty$, for some $\epsilon \in (0,1]$. We focus on the most popular non-stationary bandit setting, i.e., the piecewise-stationary setting, in which the means of the reward-generating distributions may change at unknown time steps. We provide a novel Catoni-style change-point detection strategy tailored for heavy-tailed distributions that relies on recent advancements in the theory of sequential estimation and is of independent interest. We introduce Robust-CPD-UCB, which combines this change-point detection strategy with optimistic algorithms for bandits, and we provide its regret upper bound together with an impossibility result on the minimum attainable regret for any policy. Finally, we validate our approach through numerical experiments on synthetic and real-world datasets.

Bridging Rested and Restless Bandits with Graph-Triggering: Rising and Rotting

Sep 09, 2024

Abstract: Rested and Restless Bandits are two well-known bandit settings that are useful to model real-world sequential decision-making problems in which the expected reward of an arm evolves over time due to the actions we perform or due to nature. In this work, we propose Graph-Triggered Bandits (GTBs), a unifying framework to generalize and extend rested and restless bandits. In this setting, the evolution of the arms' expected rewards is governed by a graph defined over the arms. An edge connecting a pair of arms $(i,j)$ represents the fact that a pull of arm $i$ triggers the evolution of arm $j$, and vice versa. Interestingly, rested and restless bandits are both special cases of our model for some suitable (degenerate) graph. As relevant case studies for this setting, we focus on two specific types of monotonic bandits: rising, where the expected reward of an arm grows as the number of triggers increases, and rotting, where the opposite behavior occurs. For these cases, we study the optimal policies. We provide suitable algorithms for all scenarios and discuss their theoretical guarantees, highlighting the complexity of the learning problem concerning instance-dependent terms that encode specific properties of the underlying graph structure.
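
The triggering mechanism described in this abstract can be illustrated with a minimal sketch. It is not the paper's algorithm: the function names `pull` and `rising_reward`, the reward curve, and the choice of an identity graph for the rested case and a complete graph for the restless case are all assumptions made for illustration.

```python
import numpy as np

def pull(adjacency, trigger_counts, arm):
    """Return the trigger counts after pulling `arm`.

    adjacency[i, j] == 1 means that a pull of arm i triggers arm j.
    """
    return trigger_counts + adjacency[arm]

def rising_reward(n_triggers):
    # Hypothetical increasing expected-reward curve (rising case).
    return 1.0 - 1.0 / (1.0 + n_triggers)

n_arms = 3
# Assumed degenerate graphs: self-loops only (rested), complete graph (restless).
rested_graph = np.eye(n_arms, dtype=int)
restless_graph = np.ones((n_arms, n_arms), dtype=int)

counts = np.zeros(n_arms, dtype=int)
rested_counts = pull(rested_graph, counts, arm=0)      # only arm 0 evolves
restless_counts = pull(restless_graph, counts, arm=0)  # every arm evolves
```

Under these assumptions, intermediate graphs interpolate between the two regimes: the sparser the graph, the closer the behavior is to the rested case.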

Towards Fully Adaptive Regret Minimization in Heavy-Tailed Bandits

Oct 04, 2023

Abstract: Heavy-tailed distributions naturally arise in many settings, from finance to telecommunications. While regret minimization under sub-Gaussian or bounded support rewards has been widely studied, learning on heavy-tailed distributions has only gained popularity over the last decade. In the stochastic heavy-tailed bandit problem, an agent learns under the assumption that the distributions have finite moments of maximum order $1+\epsilon$ which are uniformly bounded by a constant $u$, for some $\epsilon \in (0,1]$. To the best of our knowledge, the literature only provides algorithms requiring these two quantities as input. In this paper, we study the stochastic adaptive heavy-tailed bandit, a variation of the standard setting where both $\epsilon$ and $u$ are unknown to the agent. We show that adaptivity comes at a cost, introducing two lower bounds on the regret of any adaptive algorithm, implying a higher regret w.r.t. the standard setting. Finally, we introduce a specific distributional assumption and provide Adaptive Robust UCB, a regret minimization strategy matching the known lower bound for the heavy-tailed MAB problem.
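
To give a feel for why heavy tails call for robust estimation, the sketch below implements median-of-means, a standard robust mean estimator from the heavy-tailed bandit literature. It is not Adaptive Robust UCB and is not taken from the paper; the function name `median_of_means`, the block count, and the Pareto example are assumptions chosen for illustration.

```python
import numpy as np

def median_of_means(samples, n_blocks):
    """Split the samples into equal blocks and return the median of the block means."""
    samples = np.asarray(samples, dtype=float)
    if not 1 <= n_blocks <= samples.size:
        raise ValueError("n_blocks must be between 1 and the number of samples")
    # Drop the trailing samples that do not fill a whole block.
    usable = (samples.size // n_blocks) * n_blocks
    block_means = samples[:usable].reshape(n_blocks, -1).mean(axis=1)
    return float(np.median(block_means))

# Illustrative heavy-tailed data: Pareto II (Lomax) with shape 1.5 has infinite variance.
rng = np.random.default_rng(0)
heavy_samples = rng.pareto(1.5, size=10_000)
robust_estimate = median_of_means(heavy_samples, n_blocks=20)
empirical_mean = float(heavy_samples.mean())
```

A single extreme sample can shift the empirical mean arbitrarily, whereas it affects at most one block mean and therefore has limited influence on the median.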

A Best-of-Both-Worlds Algorithm for Constrained MDPs with Long-Term Constraints

Apr 27, 2023

Abstract: We study online learning in episodic constrained Markov decision processes (CMDPs), where the goal of the learner is to collect as much reward as possible over the episodes, while guaranteeing that some long-term constraints are satisfied during the learning process. Rewards and constraints can be selected either stochastically or adversarially, and the transition function is not known to the learner. While online learning in classical unconstrained MDPs has received considerable attention over the last years, the setting of CMDPs is still largely unexplored. This is surprising, since in real-world applications such as autonomous driving, automated bidding, and recommender systems, there are usually additional constraints and specifications that an agent has to obey during the learning process. In this paper, we provide the first best-of-both-worlds algorithm for CMDPs with long-term constraints. Our algorithm is capable of handling settings in which rewards and constraints are selected either stochastically or adversarially, without requiring any knowledge of the underlying process. Moreover, our algorithm matches state-of-the-art regret and constraint violation bounds for settings in which constraints are selected stochastically, while it is the first to provide guarantees in the case in which they are chosen adversarially.

Autoregressive Bandits

Dec 12, 2022

Abstract: Autoregressive processes naturally arise in a large variety of real-world scenarios, including, e.g., stock markets, sales forecasting, weather prediction, advertising, and pricing. When addressing a sequential decision-making problem in such a context, the temporal dependence between consecutive observations should be properly accounted for in order to converge to the optimal decision policy. In this work, we propose a novel online learning setting, named Autoregressive Bandits (ARBs), in which the observed reward follows an autoregressive process of order $k$, whose parameters depend on the action the agent chooses, within a finite set of $n$ actions. Then, we devise an optimistic regret minimization algorithm, AutoRegressive Upper Confidence Bounds (AR-UCB), that suffers regret of order $\widetilde{\mathcal{O}} \left( \frac{(k+1)^{3/2}\sqrt{nT}}{(1-\Gamma)^2} \right)$, where $T$ is the optimization horizon and $\Gamma < 1$ is an index of the stability of the system. Finally, we present a numerical validation in several synthetic settings and one real-world setting, in comparison with general and specific purpose bandit baselines, showing the advantages of the proposed approach.
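
The reward model described in this abstract can be sketched as a small simulator. This only illustrates an action-dependent AR($k$) reward process and is not AR-UCB; the function name `simulate_arb`, the parameter layout, the Gaussian noise, and the zero initial state are assumptions made for the example.

```python
import numpy as np

def simulate_arb(params, actions, noise_std=0.0, seed=0):
    """Simulate rewards of an action-dependent AR(k) process.

    params[a] = [gamma_0, gamma_1, ..., gamma_k] holds the (assumed) coefficients
    of action a: x_t = gamma_0 + sum_i gamma_i * x_{t-i} + noise.
    """
    params = np.asarray(params, dtype=float)
    k = params.shape[1] - 1
    rng = np.random.default_rng(seed)
    history = np.zeros(k)  # most recent reward first; assumed zero initial state
    rewards = []
    for a in actions:
        gamma = params[a]
        x = gamma[0] + gamma[1:] @ history + rng.normal(0.0, noise_std)
        rewards.append(float(x))
        if k > 0:
            # Shift the window: newest reward in front, oldest dropped.
            history = np.concatenate(([x], history[:-1]))
    return rewards

# Two hypothetical actions of order k = 1, each played twice.
example_params = [[1.0, 0.5], [0.2, 0.9]]
example_rewards = simulate_arb(example_params, [0, 0, 1, 1], noise_std=0.1)
```

Because each reward depends on past rewards, an action chosen now also affects what later actions can earn, which is the temporal dependence the abstract says a learner must account for.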

Dynamic Pricing with Volume Discounts in Online Settings

Nov 17, 2022

Abstract: According to the main international reports, more pervasive industrial and business-process automation, thanks to machine learning and advanced analytic tools, will unlock more than 14 trillion USD worldwide annually by 2030. In the specific case of pricing problems, which constitute the class of problems we investigate in this paper, the estimated unlocked value will be about 0.5 trillion USD per year. In particular, this paper focuses on pricing in e-commerce when the objective function is profit maximization and only transaction data are available. This setting is one of the most common in real-world applications. Our work aims to find a pricing strategy that allows defining optimal prices at different volume thresholds to serve different classes of users. Furthermore, we face the major challenge, common in real-world settings, of dealing with limited available data. We design a two-phase online learning algorithm, namely PVD-B, capable of exploiting the data incrementally in an online fashion. The algorithm first estimates the demand curve and retrieves the optimal average price, and subsequently it offers discounts to differentiate the prices for each volume threshold. We ran a real-world 4-month-long A/B testing experiment in collaboration with an Italian e-commerce company, in which our algorithm PVD-B (the A configuration) was compared with human pricing specialists (the B configuration). At the end of the experiment, our algorithm produced a total turnover of about 300 KEuros, outperforming the B configuration by about 55%. The Italian company we collaborated with has adopted our algorithm for more than 1,200 products since January 2022.
