Gianmarco De Francisci Morales

Do Androids Dream of Unseen Puppeteers? Probing for a Conspiracy Mindset in Large Language Models

Nov 05, 2025

Abstract:In this paper, we investigate whether Large Language Models (LLMs) exhibit conspiratorial tendencies, whether they display sociodemographic biases in this domain, and how easily they can be conditioned into adopting conspiratorial perspectives. Conspiracy beliefs play a central role in the spread of misinformation and in shaping distrust toward institutions, making them a critical testbed for evaluating the social fidelity of LLMs. LLMs are increasingly used as proxies for studying human behavior, yet little is known about whether they reproduce higher-order psychological constructs such as a conspiratorial mindset. To bridge this research gap, we administer validated psychometric surveys measuring conspiracy mindset to multiple models under different prompting and conditioning strategies. Our findings reveal that LLMs show partial agreement with elements of conspiracy belief, and conditioning with socio-demographic attributes produces uneven effects, exposing latent demographic biases. Moreover, targeted prompts can easily shift model responses toward conspiratorial directions, underscoring both the susceptibility of LLMs to manipulation and the potential risks of their deployment in sensitive contexts. These results highlight the importance of critically evaluating the psychological dimensions embedded in LLMs, both to advance computational social science and to inform possible mitigation strategies against harmful uses.

Bias and Identifiability in the Bounded Confidence Model

Jun 13, 2025

Abstract:Opinion dynamics models such as the bounded confidence models (BCMs) describe how a population can reach consensus, fragmentation, or polarization, depending on a few parameters. Connecting such models to real-world data could help in understanding such phenomena and in testing model assumptions. To this end, estimation of model parameters is a key aspect, and maximum likelihood estimation provides a principled way to tackle it. Here, our goal is to outline the properties of statistical estimators of the two key BCM parameters: the confidence bound and the convergence rate. We find that their maximum likelihood estimators present different characteristics: the one for the confidence bound presents a small-sample bias but is consistent, while the estimator of the convergence rate shows a persistent bias. Moreover, the joint parameter estimation is affected by identifiability issues for specific regions of the parameter space, as several local maxima are present in the likelihood function. Our results show how the analysis of the likelihood function is a fruitful approach for better understanding the pitfalls and possibilities of estimating the parameters of opinion dynamics models and, more generally, of agent-based models, and for offering formal guarantees for their calibration.
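To make the two parameters concrete, the following is a minimal simulation sketch. It assumes the pairwise Deffuant-Weisbuch variant of the bounded confidence model, which the abstract does not specify; the function name `simulate_bcm` and all numeric values are illustrative choices, not taken from the paper.

```python
import random


def simulate_bcm(opinions, epsilon, mu, steps, seed=0):
    """Simulate a pairwise bounded confidence model (assumed Deffuant-Weisbuch form).

    epsilon: confidence bound; agents interact only if their opinions
             differ by less than this value.
    mu:      convergence rate; fraction of the gap each agent closes.
    """
    rng = random.Random(seed)
    x = list(opinions)
    for _ in range(steps):
        # Draw two distinct agents uniformly at random.
        i, j = rng.sample(range(len(x)), 2)
        diff = x[j] - x[i]
        if abs(diff) < epsilon:
            # Symmetric update: both agents move toward each other.
            x[i] += mu * diff
            x[j] -= mu * diff
    return x


# Illustrative run: 50 agents with uniform initial opinions in [0, 1).
init_rng = random.Random(42)
initial = [init_rng.random() for _ in range(50)]
final = simulate_bcm(initial, epsilon=0.2, mu=0.3, steps=5000)
```

Under this assumed update rule, the confidence bound decides whether an interaction changes anything at all, while the convergence rate only scales the size of each change, which gives some intuition for why the two parameters could behave differently under estimation.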

Alice and the Caterpillar: A more descriptive null model for assessing data mining results

Jun 11, 2025

Abstract:We introduce novel null models for assessing the results obtained from observed binary transactional and sequence datasets, using statistical hypothesis testing. Our null models maintain more properties of the observed dataset than existing ones. Specifically, they preserve the Bipartite Joint Degree Matrix of the bipartite (multi-)graph corresponding to the dataset, which ensures that the number of caterpillars, i.e., paths of length three, is preserved, in addition to other properties considered by other models. We describe Alice, a suite of Markov chain Monte Carlo algorithms for sampling datasets from our null models, based on a carefully defined set of states and efficient operations to move between them. The results of our experimental evaluation show that Alice mixes fast and scales well, and that our null model finds different significant results than ones previously considered in the literature.
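The link between the Bipartite Joint Degree Matrix (BJDM) and the caterpillar count can be illustrated with a small sketch. It assumes a simple bipartite graph (no repeated edges), whereas the paper also covers multigraphs; the function name and the toy dataset are hypothetical. In a simple bipartite graph, every path of length three has a unique middle edge, so the count is the sum over edges of (left degree - 1) × (right degree - 1), a quantity that depends only on the BJDM.

```python
from collections import Counter


def bjdm_and_caterpillars(edges):
    """Compute the BJDM and the number of length-three paths.

    edges: iterable of (transaction, item) pairs; duplicates are dropped
           because this sketch assumes a simple bipartite graph.
    """
    edges = list(set(edges))
    left_deg = Counter(t for t, _ in edges)
    right_deg = Counter(i for _, i in edges)
    # BJDM entry (a, b): number of edges joining a left node of degree a
    # to a right node of degree b.
    bjdm = Counter((left_deg[t], right_deg[i]) for t, i in edges)
    # Each edge is the middle edge of (a - 1) * (b - 1) paths of length three.
    caterpillars = sum((a - 1) * (b - 1) * n for (a, b), n in bjdm.items())
    return bjdm, caterpillars


# Toy dataset: two transactions sharing item "b".
dataset = [("t1", "a"), ("t1", "b"), ("t2", "b"), ("t2", "c")]
bjdm, n_caterpillars = bjdm_and_caterpillars(dataset)
```

Because the count is computed from the BJDM alone, any two simple bipartite graphs with the same BJDM necessarily have the same number of caterpillars.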

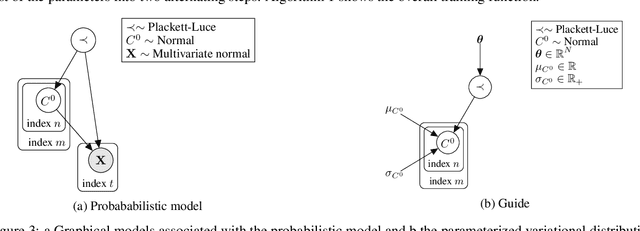

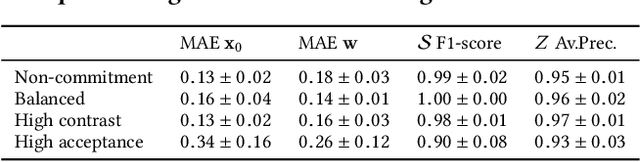

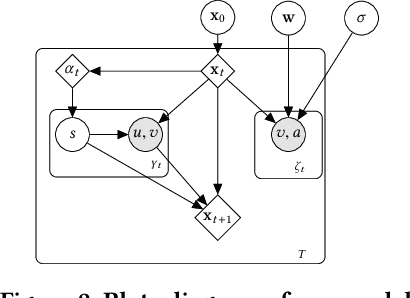

Variational Inference of Parameters in Opinion Dynamics Models

Mar 08, 2024

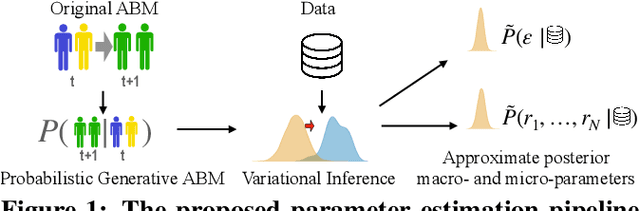

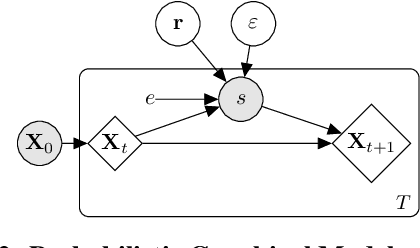

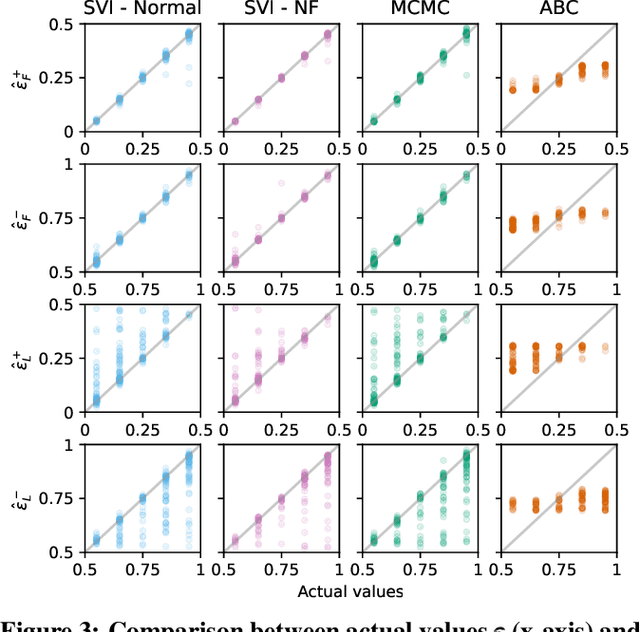

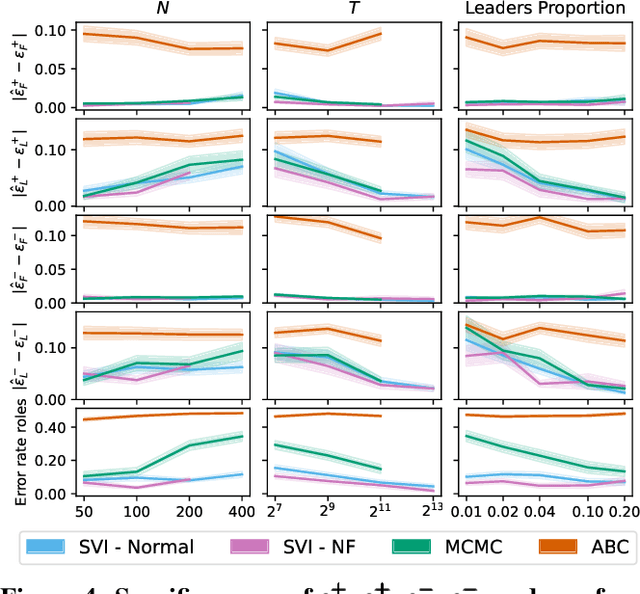

Abstract:Despite the frequent use of agent-based models (ABMs) for studying social phenomena, parameter estimation remains a challenge, often relying on costly simulation-based heuristics. This work uses variational inference to estimate the parameters of an opinion dynamics ABM, by transforming the estimation problem into an optimization task that can be solved directly. Our proposal relies on probabilistic generative ABMs (PGABMs): we start by synthesizing a probabilistic generative model from the ABM rules. Then, we transform the inference process into an optimization problem suitable for automatic differentiation. In particular, we use the Gumbel-Softmax reparameterization for categorical agent attributes and stochastic variational inference for parameter estimation. Furthermore, we explore the trade-offs of using variational distributions with different complexity: normal distributions and normalizing flows. We validate our method on a bounded confidence model with agent roles (leaders and followers). Our approach estimates both macroscopic parameters (bounded confidence intervals and backfire thresholds) and microscopic ones (200 categorical, agent-level roles) more accurately than simulation-based and MCMC methods. Consequently, our technique enables experts to tune and validate their ABMs against real-world observations, thus providing insights into human behavior in social systems via data-driven analysis.
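As a rough illustration of the Gumbel-Softmax reparameterization mentioned above, the sketch below draws a relaxed one-hot sample for a single categorical attribute using only the standard library. It is not the authors' implementation: in practice the same computation would be written in an automatic differentiation framework so that gradients can flow through the sample. The function name, the role probabilities, and the temperature are assumptions made for the example.

```python
import math
import random


def gumbel_softmax_sample(logits, temperature, rng):
    """Return a relaxed one-hot vector for a categorical variable.

    Adds independent Gumbel(0, 1) noise to each logit, then applies a
    temperature-scaled softmax. Lower temperatures give samples that are
    closer to one-hot vectors.
    """
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)  # avoid log(0)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical role probabilities for one agent (values are illustrative).
probs = [0.7, 0.2, 0.1]
logits = [math.log(p) for p in probs]
rng = random.Random(0)
sample = gumbel_softmax_sample(logits, temperature=0.5, rng=rng)
```

The index of the largest entry in each sample follows the original categorical distribution (the Gumbel-max trick), while the softmax makes the sample a smooth function of the logits.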

Extracting the Multiscale Causal Backbone of Brain Dynamics

Oct 31, 2023

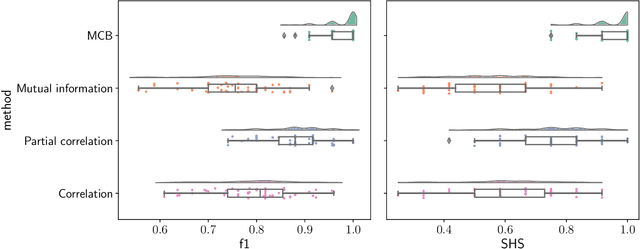

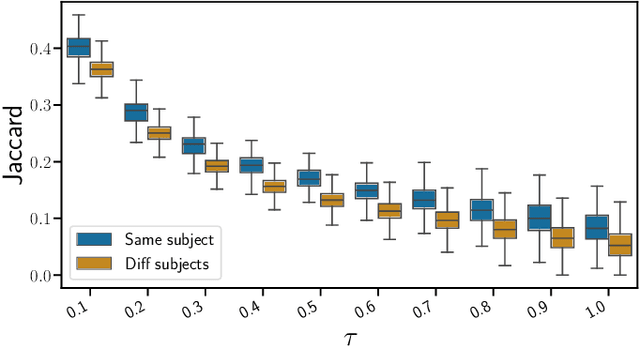

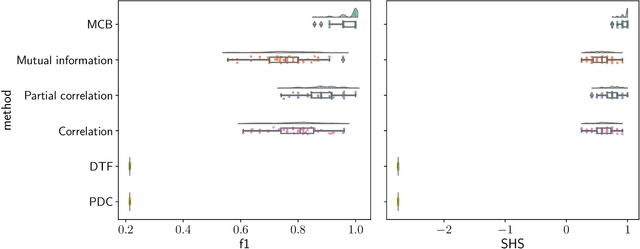

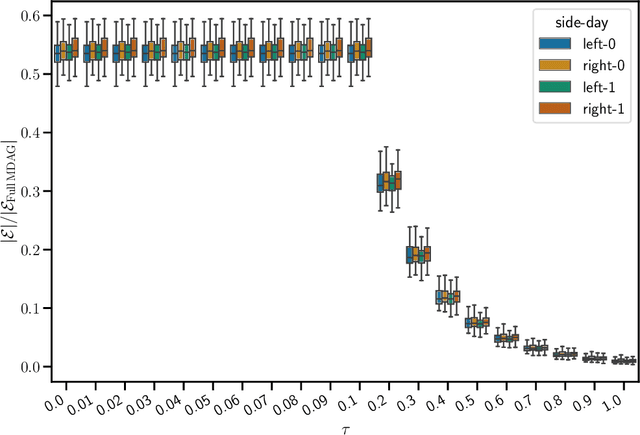

Abstract:The bulk of the research effort on brain connectivity revolves around statistical associations among brain regions, which do not directly relate to the causal mechanisms governing brain dynamics. Here we propose the multiscale causal backbone (MCB) of brain dynamics shared by a set of individuals across multiple temporal scales, and devise a principled methodology to extract it. Our approach leverages recent advances in multiscale causal structure learning and optimizes the trade-off between the model fitting and its complexity. Empirical assessment on synthetic data shows the superiority of our methodology over a baseline based on canonical functional connectivity networks. When applied to resting-state fMRI data, we find sparse MCBs for both the left and right brain hemispheres. Thanks to its multiscale nature, our approach shows that at low-frequency bands, causal dynamics are driven by brain regions associated with high-level cognitive functions; at higher frequencies instead, nodes related to sensory processing play a crucial role. Finally, our analysis of individual multiscale causal structures confirms the existence of a causal fingerprint of brain connectivity, thus supporting from a causal perspective the existing extensive research in brain connectivity fingerprinting.

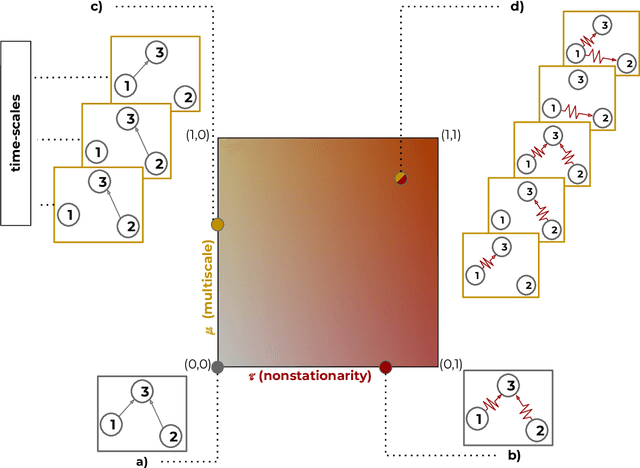

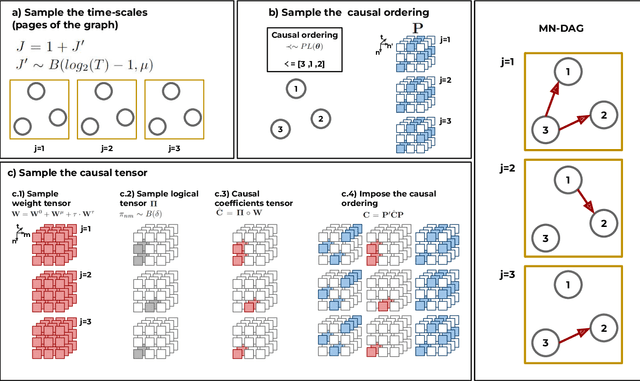

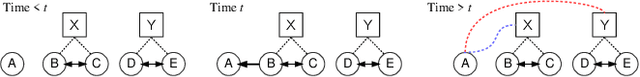

Multiscale Non-stationary Causal Structure Learning from Time Series Data

Aug 31, 2022

Abstract:This paper introduces a new type of causal structure, namely multiscale non-stationary directed acyclic graph (MN-DAG), that generalizes DAGs to the time-frequency domain. Our contribution is twofold. First, by leveraging results from spectral and causality theories, we present a novel probabilistic generative model, which allows sampling an MN-DAG according to user-specified priors concerning the time-dependence and multiscale properties of the causal graph. Second, we devise a Bayesian method for the estimation of MN-DAGs, by means of stochastic variational inference (SVI), called Multiscale Non-Stationary Causal Structure Learner (MN-CASTLE). In addition to direct observations, MN-CASTLE exploits information from the decomposition of the total power spectrum of time series over different time resolutions. In our experiments, we first use the proposed model to generate synthetic data according to a latent MN-DAG, showing that the data generated reproduces well-known features of time series in different domains. Then we compare our learning method MN-CASTLE against baseline models on synthetic data generated with different multiscale and non-stationary settings, confirming the good performance of MN-CASTLE. Finally, we show some insights derived from the application of MN-CASTLE to study the causal structure of 7 global equity markets during the Covid-19 pandemic.

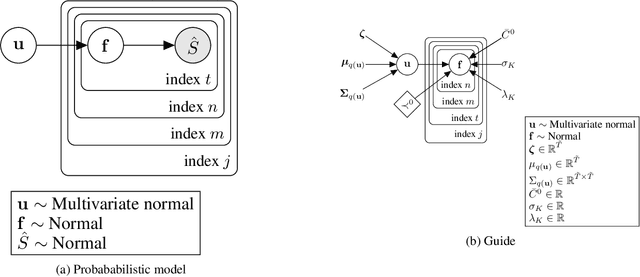

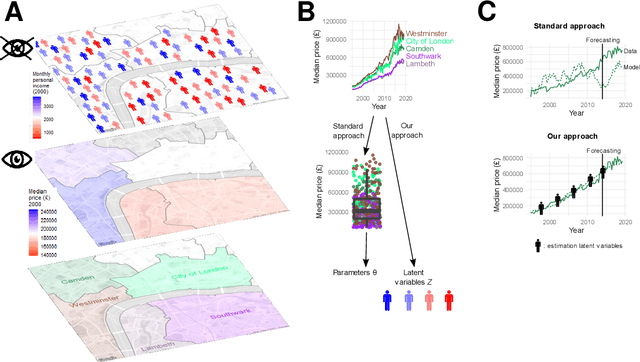

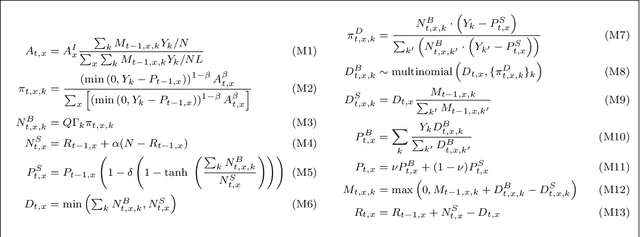

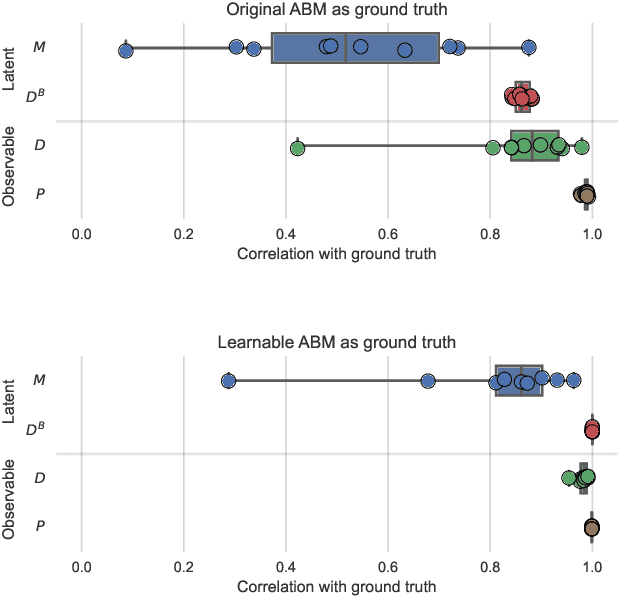

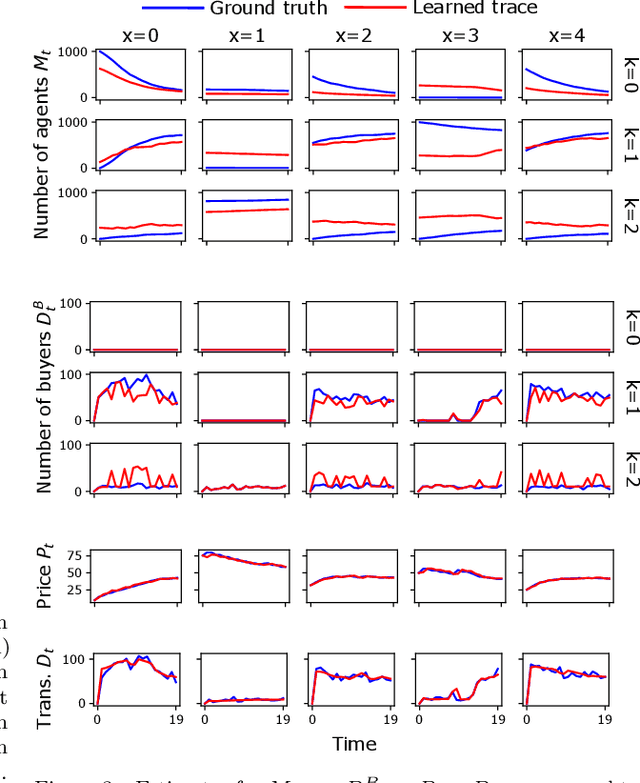

On learning agent-based models from data

May 10, 2022

Abstract:Agent-Based Models (ABMs) are used in several fields to study the evolution of complex systems from micro-level assumptions. However, ABMs typically cannot estimate agent-specific (or "micro") variables: this is a major limitation which prevents ABMs from harnessing micro-level data availability and which greatly limits their predictive power. In this paper, we propose a protocol to learn the latent micro-variables of an ABM from data. The first step of our protocol is to reduce an ABM to a probabilistic model, characterized by a computationally tractable likelihood. This reduction follows two general design principles: balance of stochasticity and data availability, and replacement of unobservable discrete choices with differentiable approximations. Then, our protocol proceeds by maximizing the likelihood of the latent variables via a gradient-based expectation maximization algorithm. We demonstrate our protocol by applying it to an ABM of the housing market, in which agents with different incomes bid higher prices to live in high-income neighborhoods. We demonstrate that the obtained model allows accurate estimates of the latent variables, while preserving the general behavior of the ABM. We also show that our estimates can be used for out-of-sample forecasting. Our protocol can be seen as an alternative to black-box data assimilation methods, one that forces the modeler to lay bare the assumptions of the model, to think about the inferential process, and to spot potential identification problems.

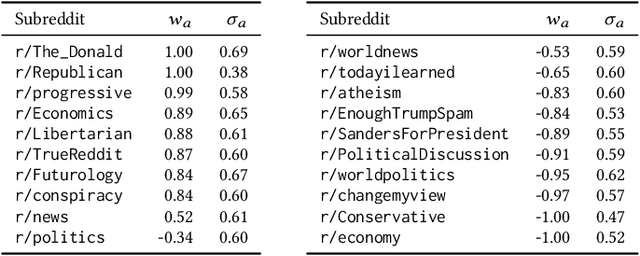

Learning Opinion Dynamics From Social Traces

Jun 02, 2020

Abstract:Opinion dynamics, the research field dealing with how people's opinions form and evolve in a social context, traditionally uses agent-based models to validate the implications of sociological theories. These models encode the causal mechanism that drives the opinion formation process, and have the advantage of being easy to interpret. However, as they do not exploit the availability of data, their predictive power is limited. Moreover, parameter calibration and model selection are manual and difficult tasks. In this work we propose an inference mechanism for fitting a generative, agent-like model of opinion dynamics to real-world social traces. Given a set of observables (e.g., actions and interactions between agents), our model can recover the most-likely latent opinion trajectories that are compatible with the assumptions about the process dynamics. This type of model retains the benefits of agent-based ones (i.e., causal interpretation), while adding the ability to perform model selection and hypothesis testing on real data. We showcase our proposal by translating a classical agent-based model of opinion dynamics into its generative counterpart. We then design an inference algorithm based on online expectation maximization to learn the latent parameters of the model. This algorithm can recover the latent opinion trajectories from traces generated by the classical agent-based model. In addition, it can identify the most likely set of macro parameters used to generate a data trace, thus allowing testing of sociological hypotheses. Finally, we apply our model to real-world data from Reddit to explore the long-standing question about the impact of the backfire effect. Our results suggest a low prominence of the effect in Reddit's political conversation.

* Published at KDD2020

Predicting the Role of Political Trolls in Social Media

Oct 04, 2019

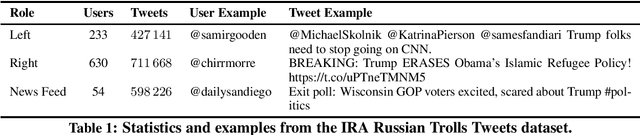

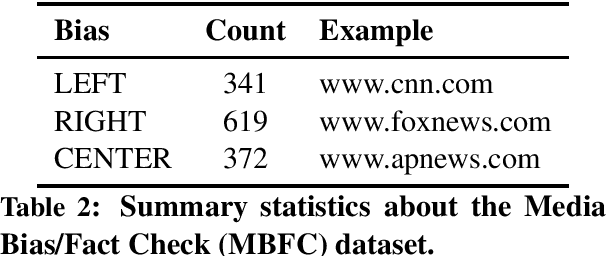

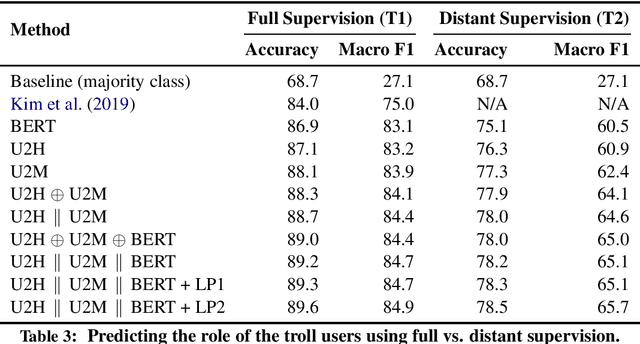

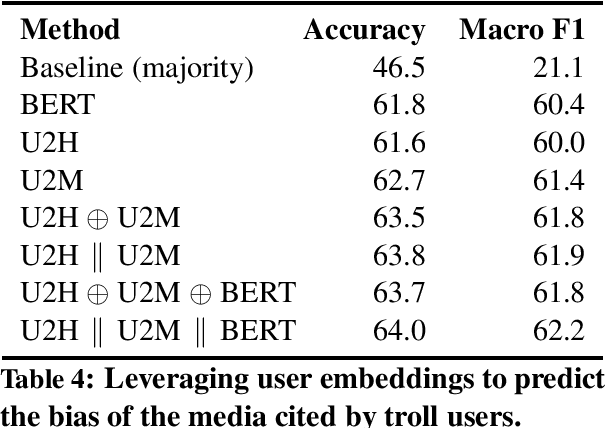

Abstract:We investigate the political roles of "Internet trolls" in social media. Political trolls, such as the ones linked to the Russian Internet Research Agency (IRA), have recently gained enormous attention for their ability to sway public opinion and even influence elections. Analysis of the online traces of trolls has shown different behavioral patterns, which target different slices of the population. However, this analysis is manual and labor-intensive, thus making it impractical as a first-response tool for newly-discovered troll farms. In this paper, we show how to automate this analysis by using machine learning in a realistic setting. In particular, we show how to classify trolls according to their political role (left, news feed, right) by using features extracted from social media, i.e., Twitter, in two scenarios: (i) in a traditional supervised learning scenario, where labels for trolls are available, and (ii) in a distant supervision scenario, where labels for trolls are not available, and we rely on more-commonly-available labels for news outlets mentioned by the trolls. Technically, we leverage the community structure and the text of the messages in the online social network of trolls represented as a graph, from which we extract several types of learned representations, i.e., embeddings, for the trolls. Experiments on the "IRA Russian Troll" dataset show that our methodology improves over the state-of-the-art in the first scenario, while providing a compelling case for the second scenario, which has not been explored in the literature thus far.

Link Prediction via Higher-Order Motif Features

Feb 08, 2019

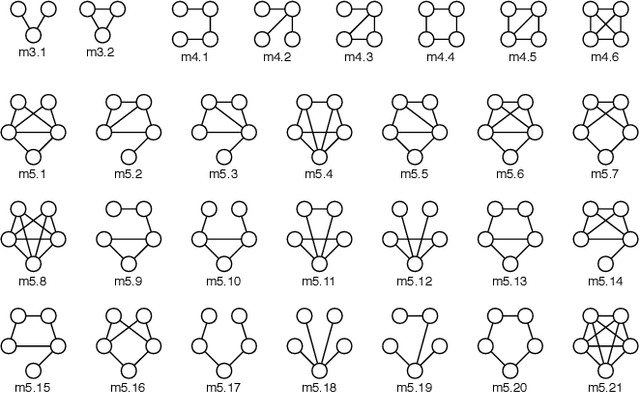

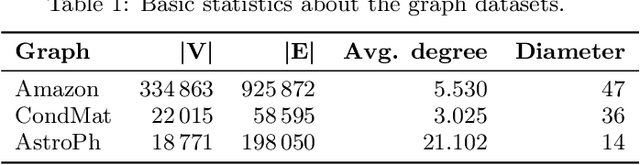

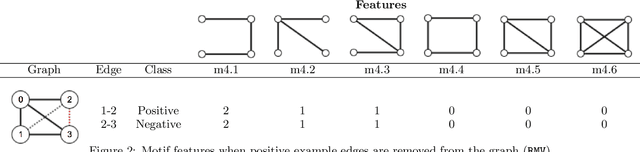

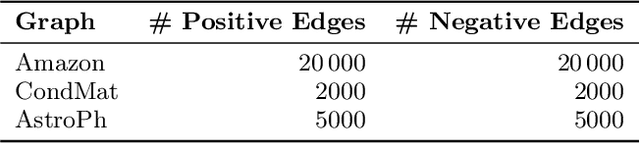

Abstract:Link prediction requires predicting which new links are likely to appear in a graph. Being able to predict unseen links with good accuracy has important applications in several domains such as social media, security, transportation, and recommendation systems. A common approach is to use features based on the common neighbors of an unconnected pair of nodes to predict whether the pair will form a link in the future. In this paper, we present an approach for link prediction that relies on higher-order analysis of the graph topology, well beyond common neighbors. We treat the link prediction problem as a supervised classification problem, and we propose a set of features that depend on the patterns or motifs that a pair of nodes occurs in. By using motifs of sizes 3, 4, and 5, our approach captures a high level of detail about the graph topology within the neighborhood of the pair of nodes, which leads to a higher classification accuracy. In addition to proposing the use of motif-based features, we also propose two optimizations related to constructing the classification dataset from the graph. First, to ensure that positive and negative examples are treated equally when extracting features, we propose adding the negative examples to the graph as an alternative to the common approach of removing the positive ones. Second, we show that it is important to control for the shortest-path distance when sampling pairs of nodes to form negative examples, since the difficulty of prediction varies with the shortest-path distance. We experimentally demonstrate that using off-the-shelf classifiers with a well-constructed classification dataset results in an increase of up to 10 percentage points in accuracy over prior topology-based and feature learning methods.
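For context, the common-neighbors baseline that the abstract contrasts with motif features can be sketched as follows. This illustrates only the baseline, not the paper's motif-based method; the Jaccard coefficient is included as another standard neighborhood feature that the abstract does not mention, and the function names and toy graph are hypothetical.

```python
from collections import defaultdict


def build_adjacency(edges):
    """Build an undirected adjacency map from a list of (u, v) edges."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def neighbor_features(adj, u, v):
    """Neighborhood-overlap features for a candidate pair (u, v)."""
    nu = adj.get(u, set())
    nv = adj.get(v, set())
    common = nu & nv
    union = nu | nv
    return {
        "common_neighbors": len(common),
        "jaccard": len(common) / len(union) if union else 0.0,
    }


# Toy graph: "a" and "d" are not linked but share neighbors "b" and "c".
edges = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d"), ("a", "e")]
adj = build_adjacency(edges)
features = neighbor_features(adj, "a", "d")
```

Features like these would be fed, one row per candidate pair, to an off-the-shelf classifier; the approach described in the abstract replaces or augments them with counts of the motifs of sizes 3, 4, and 5 that the pair takes part in.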
