Jacopo Lenti

Bias and Identifiability in the Bounded Confidence Model

Jun 13, 2025

Abstract: Opinion dynamics models such as bounded confidence models (BCMs) describe how a population can reach consensus, fragmentation, or polarization, depending on a few parameters. Connecting such models to real-world data could help us understand these phenomena and test model assumptions. To this end, estimating the model parameters is a key step, and maximum likelihood estimation provides a principled way to do it. Here, our goal is to outline the properties of statistical estimators of the two key BCM parameters: the confidence bound and the convergence rate. We find that their maximum likelihood estimators have different characteristics: the estimator of the confidence bound has a small-sample bias but is consistent, while the estimator of the convergence rate shows a persistent bias. Moreover, joint parameter estimation is affected by identifiability issues in specific regions of the parameter space, as several local maxima are present in the likelihood function. Our results show that analyzing the likelihood function is a fruitful approach for better understanding the pitfalls and possibilities of estimating the parameters of opinion dynamics models and, more generally, agent-based models, and for offering formal guarantees for their calibration.
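To make the two parameters concrete, here is a minimal sketch of a textbook Deffuant-style bounded confidence model. It is an assumption for illustration, not necessarily the exact model or likelihood studied in the paper. The names `simulate_bcm`, `estimate_epsilon`, `epsilon` (confidence bound), and `mu` (convergence rate) are hypothetical. The estimator shown is the largest distance observed among interacting pairs, which by construction can never exceed the true bound and so tends to underestimate it when few interactions are observed.

```python
import random


def simulate_bcm(n_agents=50, n_steps=2000, epsilon=0.3, mu=0.5, seed=0):
    """Simulate a Deffuant-style BCM (assumed variant, for illustration only)."""
    rng = random.Random(seed)
    opinions = [rng.random() for _ in range(n_agents)]
    interactions = []  # records of (x_i, x_j, interacted?)
    for _ in range(n_steps):
        i, j = rng.sample(range(n_agents), 2)  # pick two distinct agents
        xi, xj = opinions[i], opinions[j]
        close = abs(xi - xj) < epsilon  # the pair interacts only within the bound
        interactions.append((xi, xj, close))
        if close:
            # Both agents move toward each other at rate mu.
            opinions[i] = xi + mu * (xj - xi)
            opinions[j] = xj + mu * (xi - xj)
    return opinions, interactions


def estimate_epsilon(interactions):
    """Largest opinion distance among pairs that were observed to interact."""
    dists = [abs(xi - xj) for xi, xj, close in interactions if close]
    return max(dists) if dists else 0.0


if __name__ == "__main__":
    for steps in (20, 200, 2000):
        _, obs = simulate_bcm(n_steps=steps, epsilon=0.3)
        print(steps, round(estimate_epsilon(obs), 4))
```

Running the loop with more observed steps gives the estimate more chances to approach the true bound from below, which is one simple way to see a small-sample bias that shrinks with data.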

Variational Inference of Parameters in Opinion Dynamics Models

Mar 08, 2024

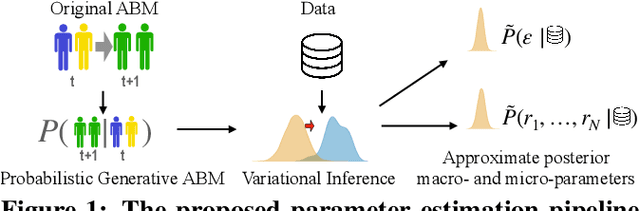

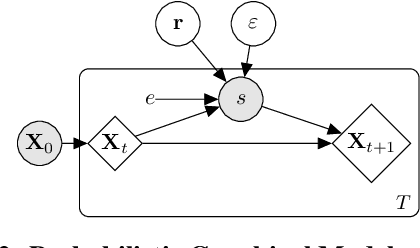

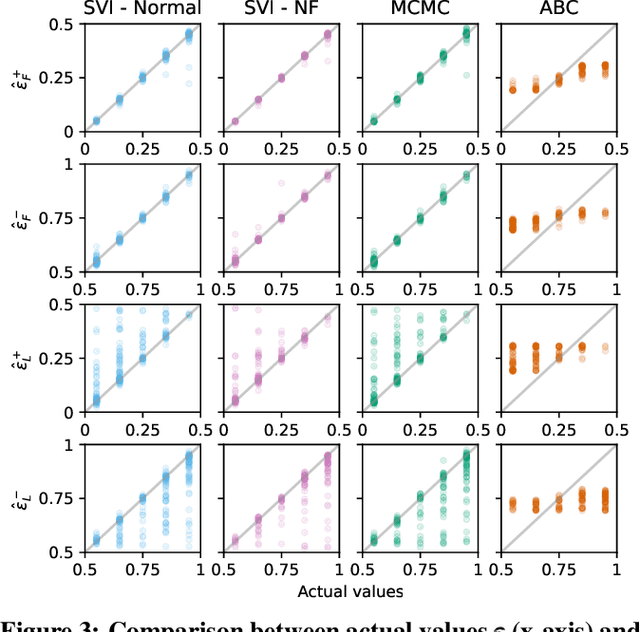

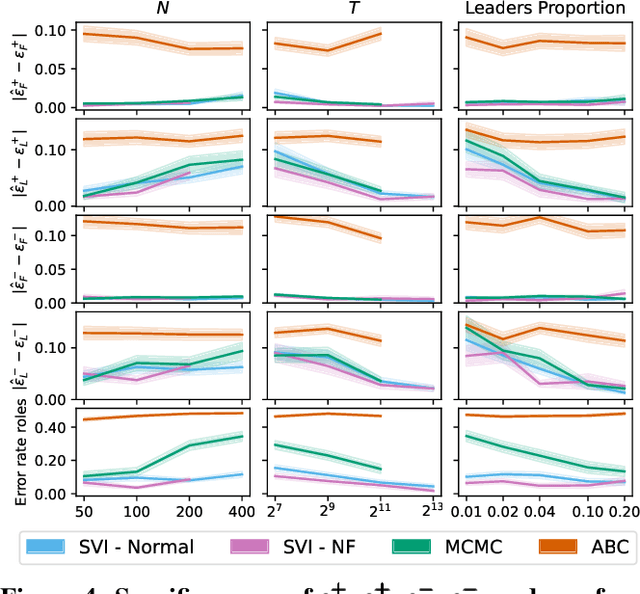

Abstract: Despite the frequent use of agent-based models (ABMs) for studying social phenomena, parameter estimation remains a challenge, often relying on costly simulation-based heuristics. This work uses variational inference to estimate the parameters of an opinion dynamics ABM by transforming the estimation problem into an optimization task that can be solved directly. Our proposal relies on probabilistic generative ABMs (PGABMs): we start by synthesizing a probabilistic generative model from the ABM rules. Then, we transform the inference process into an optimization problem suitable for automatic differentiation. In particular, we use the Gumbel-Softmax reparameterization for categorical agent attributes and stochastic variational inference for parameter estimation. Furthermore, we explore the trade-offs of using variational distributions of different complexity: normal distributions and normalizing flows. We validate our method on a bounded confidence model with agent roles (leaders and followers). Our approach estimates both macroscopic parameters (bounded confidence intervals and backfire thresholds) and microscopic parameters ($200$ categorical, agent-level roles) more accurately than simulation-based and MCMC methods. Consequently, our technique enables experts to tune and validate their ABMs against real-world observations, thus providing insights into human behavior in social systems via data-driven analysis.
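As a rough illustration of the Gumbel-Softmax reparameterization mentioned in the abstract, the sketch below draws a relaxed (continuous) sample over categories in plain Python. It is a generic sketch of the technique, not the paper's implementation: the function name `gumbel_softmax_sample`, the two-category leader/follower logits, and the temperature value are all assumptions. In practice the same computation would be written in an automatic differentiation framework so that gradients can flow through the sample.

```python
import math
import random


def gumbel_softmax_sample(logits, temperature=0.5, rng=random):
    """Draw one relaxed categorical sample via the Gumbel-Softmax trick."""
    # Gumbel(0, 1) noise: g = -log(-log(u)), with u clipped away from 0.
    noise = [-math.log(-math.log(max(rng.random(), 1e-12))) for _ in logits]
    scores = [(l + g) / temperature for l, g in zip(logits, noise)]
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical logits for one agent's role: [leader, follower].
    role_logits = [math.log(0.2), math.log(0.8)]
    print(gumbel_softmax_sample(role_logits, temperature=0.5, rng=rng))
```

Lower temperatures push the sample toward a one-hot vector (closer to a discrete role), while higher temperatures give smoother samples that are easier to optimize but less faithful to the categorical variable.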