Georgios N. Yannakakis

modl.ai

PREFAB: PREFerence-based Affective Modeling for Low-Budget Self-Annotation

Jan 21, 2026

Abstract: Self-annotation is the gold standard for collecting affective state labels in affective computing. Existing methods typically rely on full annotation, requiring users to continuously label affective states across entire sessions. While this process yields fine-grained data, it is time-consuming, cognitively demanding, and prone to fatigue and errors. To address these issues, we present PREFAB, a low-budget retrospective self-annotation method that targets affective inflection regions rather than full annotation. Grounded in the peak-end rule and ordinal representations of emotion, PREFAB employs a preference-learning model to detect relative affective changes, directing annotators to label only selected segments while interpolating the remainder of the stimulus. We further introduce a preview mechanism that provides brief contextual cues to assist annotation. We evaluate PREFAB through a technical performance study and a 25-participant user study. Results show that PREFAB outperforms baselines in modeling affective inflections while mitigating workload (and, conditionally, temporal burden). Importantly, PREFAB improves annotator confidence without degrading annotation quality.
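The two ideas named above, locating affective inflections from relative (ordinal) changes and interpolating the unlabelled remainder of a stimulus, can be illustrated with a minimal sketch. This is not PREFAB's preference-learning model: it assumes a hypothetical per-frame scalar affect trace, flags frames where the direction of change flips, and fills the gaps between annotated frames linearly. The function names `relative_changes`, `inflection_points` and `interpolate` are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List


def relative_changes(trace: List[float], eps: float = 0.0) -> List[int]:
    """Ordinal view of a trace: +1 for a rise, -1 for a fall, 0 for no change beyond eps."""
    changes = []
    for prev, curr in zip(trace, trace[1:]):
        delta = curr - prev
        changes.append(1 if delta > eps else -1 if delta < -eps else 0)
    return changes


def inflection_points(trace: List[float], eps: float = 0.0) -> List[int]:
    """Indices of frames where the direction of change flips (flat steps are skipped)."""
    points, last_direction = [], 0
    for i, direction in enumerate(relative_changes(trace, eps)):
        if direction == 0:
            continue
        if last_direction != 0 and direction != last_direction:
            points.append(i)  # trace[i] is a local peak or trough
        last_direction = direction
    return points


def interpolate(length: int, anchors: Dict[int, float]) -> List[float]:
    """Linearly interpolate between annotated frames (anchors: frame index -> label).
    Values before the first and after the last anchor are held constant."""
    keys = sorted(anchors)
    filled = []
    for t in range(length):
        if t <= keys[0]:
            filled.append(anchors[keys[0]])
        elif t >= keys[-1]:
            filled.append(anchors[keys[-1]])
        else:
            j = bisect_right(keys, t)  # keys[j - 1] <= t < keys[j]
            a, b = keys[j - 1], keys[j]
            w = (t - a) / (b - a)
            filled.append(anchors[a] + w * (anchors[b] - anchors[a]))
    return filled


trace = [0.1, 0.3, 0.6, 0.4, 0.4, 0.2, 0.5]
print(inflection_points(trace))  # [2, 5]
```

In this toy setting an annotator would be asked to label only the frames around the returned indices, and `interpolate` would supply the rest of the trace.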

Emotions as Ambiguity-aware Ordinal Representations

Aug 27, 2025

Abstract: Emotions are inherently ambiguous and dynamic phenomena, yet existing continuous emotion recognition approaches either ignore their ambiguity or treat ambiguity as an independent and static variable over time. Motivated by this gap in the literature, we introduce ambiguity-aware ordinal emotion representations, a novel framework that captures both the ambiguity present in emotion annotation and the inherent temporal dynamics of emotional traces. Specifically, we propose approaches that model emotion ambiguity through its rate of change. We evaluate our framework on two affective corpora, RECOLA and GameVibe, testing our proposed approaches on both bounded (arousal, valence) and unbounded (engagement) continuous traces. Our results demonstrate that ordinal representations outperform conventional ambiguity-aware models on unbounded labels, achieving the highest Concordance Correlation Coefficient (CCC) and Signed Differential Agreement (SDA) scores, highlighting their effectiveness in modeling the traces' dynamics. For bounded traces, ordinal representations excel in SDA, revealing their superior ability to capture relative changes of annotated emotion traces.
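For reference, CCC (one of the two metrics reported above) rewards a predicted trace only when it agrees with the ground truth in correlation, mean and scale. The following is a minimal pure-Python sketch of the standard definition using population (1/N) moments; other implementations may use sample variance, which shifts small-sample values slightly. SDA is not reproduced here.

```python
from statistics import fmean
from typing import Sequence


def ccc(x: Sequence[float], y: Sequence[float]) -> float:
    """Concordance Correlation Coefficient:
        2 * cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y)) ** 2)
    using population (1/N) moments. Undefined (division by zero) when both
    traces are constant and identical."""
    if len(x) != len(y) or not x:
        raise ValueError("traces must be non-empty and of equal length")
    mx, my = fmean(x), fmean(y)
    var_x = fmean((a - mx) ** 2 for a in x)
    var_y = fmean((b - my) ** 2 for b in y)
    cov = fmean((a - mx) * (b - my) for a, b in zip(x, y))
    return 2 * cov / (var_x + var_y + (mx - my) ** 2)


ground_truth = [1.0, 2.0, 3.0]
prediction = [2.0, 3.0, 4.0]  # same shape, offset by +1
print(ccc(ground_truth, ground_truth))  # 1.0
print(round(ccc(ground_truth, prediction), 4))  # 0.5714 (= 4/7)
```

Unlike Pearson correlation, which would score the offset prediction as a perfect 1.0, CCC penalises the constant bias through the squared mean difference in the denominator.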

Evolutionary Level Repair

Jun 24, 2025

Abstract: We address the problem of game level repair, which consists of taking a designed but non-functional game level and making it functional. This might consist of ensuring the completeness of the level, reachability of objects, or other performance characteristics. The repair problem may also be constrained in that it can only make a small number of changes to the level. We investigate search-based solutions to the level repair problem, particularly using evolutionary and quality-diversity algorithms, with good results. This level repair method is applied to levels generated using a machine learning-based procedural content generation (PCGML) method that generates stylistically appropriate but frequently broken levels. This combination of PCGML for generation and search-based methods for repair shows great promise as a hybrid procedural content generation (PCG) method.
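The repair formulation above (make a level functional while changing as little as possible) can be illustrated with a minimal (1+1) evolutionary algorithm on a tile grid. This is an illustration only, not the paper's method, which also uses quality-diversity algorithms. The `#`/`.` tile encoding, the reachability measure (fewest walls any path must cross, computed with a 0-1 BFS), the `change_penalty` that stands in for the small-number-of-changes constraint, and all function names are assumptions.

```python
import random
from collections import deque
from typing import List, Tuple

WALL, FLOOR = "#", "."
Cell = Tuple[int, int]


def walls_on_best_path(level: List[str], start: Cell, goal: Cell) -> int:
    """0-1 BFS: fewest wall tiles a 4-connected path from start to goal must cross.
    Returns 0 when the goal is already reachable."""
    rows, cols = len(level), len(level[0])
    inf = rows * cols + 1
    dist = [[inf] * cols for _ in range(rows)]
    dist[start[0]][start[1]] = 0
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                cost = 1 if level[nr][nc] == WALL else 0
                if dist[r][c] + cost < dist[nr][nc]:
                    dist[nr][nc] = dist[r][c] + cost
                    # Zero-cost moves go to the front so tiles are settled in distance order.
                    if cost == 0:
                        queue.appendleft((nr, nc))
                    else:
                        queue.append((nr, nc))
    return dist[goal[0]][goal[1]]


def changes(a: List[str], b: List[str]) -> int:
    """Number of tiles that differ between two levels of the same size."""
    return sum(x != y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def repair(level: List[str], start: Cell, goal: Cell, change_penalty: float = 0.5,
           generations: int = 2000, seed: int = 0) -> List[str]:
    """(1+1) evolutionary repair: flip one random tile per generation and keep the
    child if it is no worse. Fitness rewards reachability and penalises every edit
    relative to the original level."""
    rng = random.Random(seed)

    def fitness(candidate: List[str]) -> float:
        return (-walls_on_best_path(candidate, start, goal)
                - change_penalty * changes(candidate, level))

    parent, parent_fit = list(level), fitness(level)
    for _ in range(generations):
        r, c = rng.randrange(len(parent)), rng.randrange(len(parent[0]))
        row = parent[r]
        flipped = FLOOR if row[c] == WALL else WALL
        child = parent[:r] + [row[:c] + flipped + row[c + 1:]] + parent[r + 1:]
        child_fit = fitness(child)
        if child_fit >= parent_fit:
            parent, parent_fit = child, child_fit
    return parent


broken = ["..#..",
          "..#..",
          "..#.."]
fixed = repair(broken, start=(0, 0), goal=(0, 4))
print("\n".join(fixed))
```

Because each edit costs `change_penalty` (below 1) while each wall removed from the best path gains 1, the search keeps only edits that bring the level closer to being playable; here it converges to a single opened tile in the wall column.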

The Procedural Content Generation Benchmark: An Open-source Testbed for Generative Challenges in Games

Mar 27, 2025

Abstract: This paper introduces the Procedural Content Generation Benchmark for evaluating generative algorithms on different game content creation tasks. The benchmark comes with 12 game-related problems with multiple variants on each problem. Problems vary from creating levels of different kinds to creating rule sets for simple arcade games. Each problem has its own content representation, control parameters, and evaluation metrics for quality, diversity, and controllability. This benchmark is intended as a first step towards a standardized way of comparing generative algorithms. We use the benchmark to score three baseline algorithms: a random generator, an evolution strategy, and a genetic algorithm. Results show that some problems are easier to solve than others and reveal the impact of the chosen objective on the quality, diversity, and controllability of the generated artifacts.

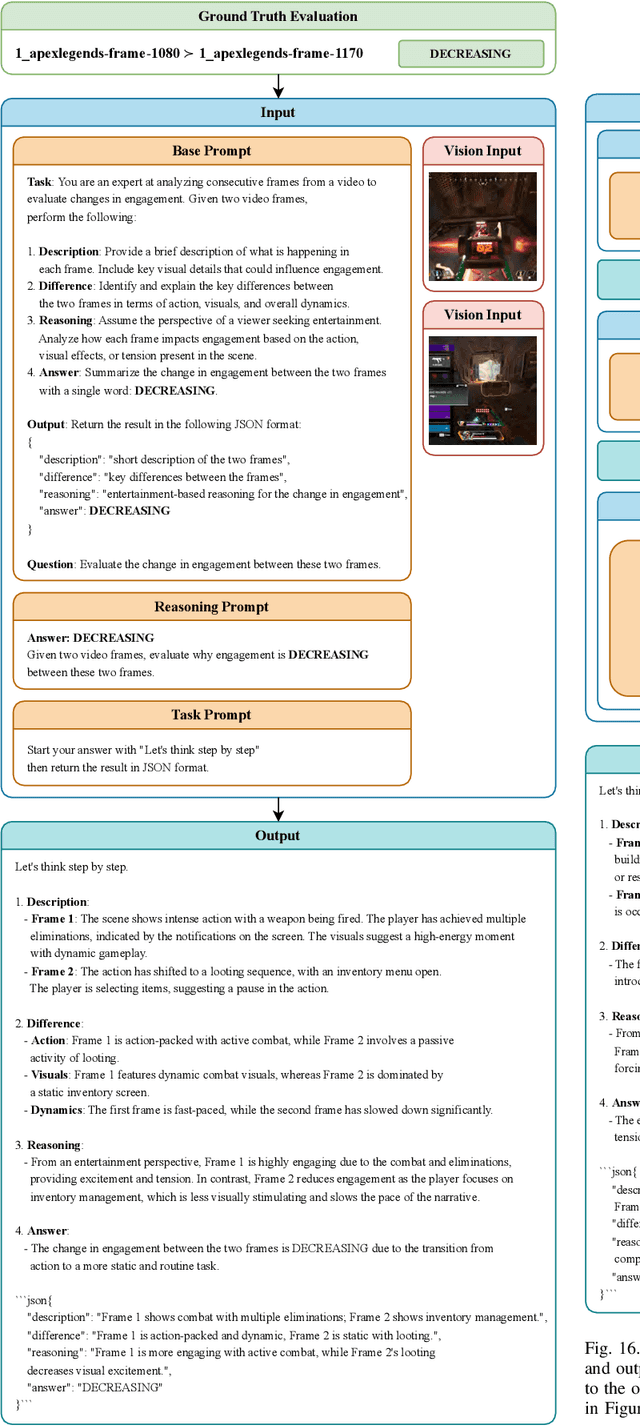

Can Large Language Models Capture Video Game Engagement?

Feb 05, 2025

Abstract: Can out-of-the-box pretrained Large Language Models (LLMs) successfully detect human affect when observing a video? To address this question, we present the first comprehensive evaluation of the capacity of popular LLMs to annotate videos and predict their continuous affect annotations when prompted with a sequence of text and video frames in a multimodal fashion. In particular, we test LLMs' ability to correctly label changes in in-game engagement across 80 minutes of annotated videogame footage from 20 first-person shooter games in the GameVibe corpus. We run over 2,400 experiments to investigate the impact of LLM architecture, model size, input modality, prompting strategy, and ground truth processing method on engagement prediction. Our findings suggest that while LLMs rightfully claim human-like performance across multiple domains, they generally fall behind in capturing continuous experience annotations provided by humans. We examine some of the underlying causes of the relatively poor overall performance, highlight the cases where LLMs exceed expectations, and draw a roadmap for further exploration of automated emotion labelling via LLMs.

Human-like Bots for Tactical Shooters Using Compute-Efficient Sensors

Dec 30, 2024

Abstract: Artificial intelligence (AI) has enabled agents to master complex video games, from first-person shooters like Counter-Strike to real-time strategy games such as StarCraft II and racing games like Gran Turismo. While these achievements are notable, applying these AI methods in commercial video game production remains challenging due to computational constraints. In commercial scenarios, the majority of computational resources are allocated to 3D rendering, leaving limited capacity for AI methods, which often demand high computational power, particularly those relying on pixel-based sensors. Moreover, the gaming industry prioritizes creating human-like behavior in AI agents to enhance player experience, unlike academic models that focus on maximizing game performance. This paper introduces a novel methodology for training neural networks via imitation learning to play a complex, commercial-standard, VALORANT-like 2v2 tactical shooter game, requiring only modest CPU hardware during inference. Our approach leverages an innovative, pixel-free perception architecture using a small set of ray-cast sensors, which capture essential spatial information efficiently. These sensors allow AI to perform competently without the computational overhead of traditional methods. Models are trained to mimic human behavior using supervised learning on human trajectory data, resulting in realistic and engaging AI agents. Human evaluation tests confirm that our AI agents provide human-like gameplay experiences while operating efficiently under computational constraints. This represents a significant advancement in AI model development for tactical shooter games and possibly other genres.
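The pixel-free perception idea above can be illustrated with a minimal 2D sketch: a fan of rays cast from the agent returns normalised distances to the nearest obstacle, giving a compact observation vector instead of an image. The paper's game is 3D and the abstract does not specify its sensor layout, so the circular obstacles and the `n_rays`, `fov` and `max_range` parameters here are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[Point, float]  # (centre, radius)


def ray_circle_distance(origin: Point, angle: float, centre: Point,
                        radius: float) -> Optional[float]:
    """Distance along a unit ray to its first intersection with a circle, or None on a miss.
    Solves |f + t*d|^2 = r^2 for t >= 0, with f = origin - centre and d the unit direction."""
    dx, dy = math.cos(angle), math.sin(angle)
    fx, fy = origin[0] - centre[0], origin[1] - centre[1]
    b = fx * dx + fy * dy
    c = fx * fx + fy * fy - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:  # origin inside the circle, or circle behind the ray: try the far root
        t = -b + root
    return t if t >= 0 else None


def ray_sensors(position: Point, heading: float, obstacles: Sequence[Circle],
                n_rays: int = 8, fov: float = math.pi,
                max_range: float = 10.0) -> List[float]:
    """One reading per ray in [0, 1]: distance to the nearest hit divided by max_range
    (1.0 means nothing within range). Rays are spread evenly across the field of view."""
    readings = []
    for i in range(n_rays):
        if n_rays == 1:
            angle = heading
        else:
            angle = heading - fov / 2 + fov * i / (n_rays - 1)
        nearest = max_range
        for centre, radius in obstacles:
            hit = ray_circle_distance(position, angle, centre, radius)
            if hit is not None and hit < nearest:
                nearest = hit
        readings.append(nearest / max_range)
    return readings


# Three rays (left, ahead, right); only the forward ray hits the obstacle 4 units away.
print(ray_sensors((0.0, 0.0), 0.0, [((5.0, 0.0), 1.0)], n_rays=3))  # [1.0, 0.4, 1.0]
```

The cost of such a sensor grows with the number of rays and obstacles rather than with screen resolution, which is the property the abstract relies on for CPU-only inference.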

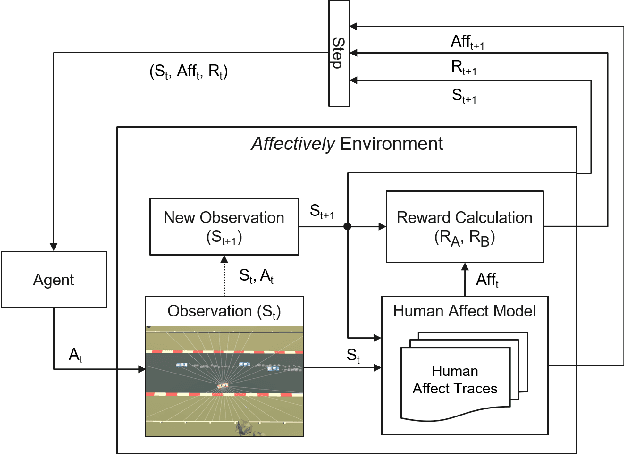

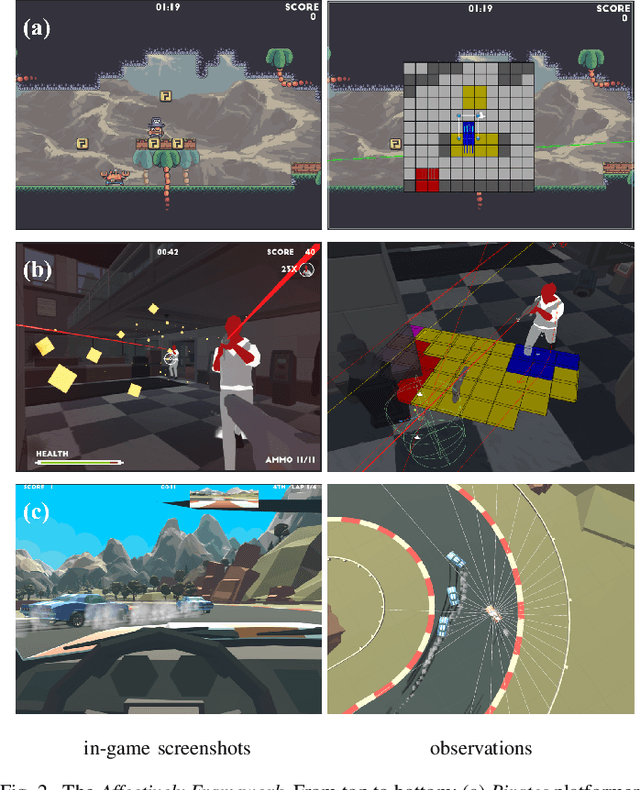

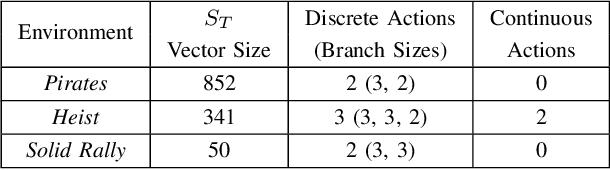

Affectively Framework: Towards Human-like Affect-Based Agents

Jul 25, 2024

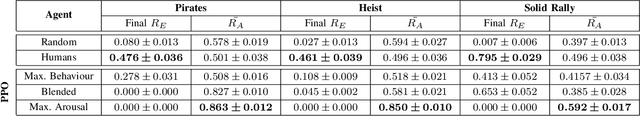

Abstract: Game environments offer a unique opportunity for training virtual agents due to their interactive nature, which provides diverse play traces and affect labels. Despite their potential, no reinforcement learning framework incorporates human affect models as part of its observation space or reward mechanism. To address this, we present the Affectively Framework, a set of OpenAI Gym environments that integrate affect as part of the observation space. This paper introduces the framework and its three game environments and provides baseline experiments to validate its effectiveness and potential.
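To make "affect as part of the observation space" concrete, here is a minimal, hypothetical wrapper in that spirit; it is not the Affectively Framework's API. It follows the Gymnasium (and Gym 0.26+) convention in which `reset` returns `(obs, info)` and `step` returns `(obs, reward, terminated, truncated, info)`, but duck-types the wrapped environment so it runs without the library installed. `affect_model`, `affect_weight` and the toy `CounterEnv` are assumptions for illustration.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple


class AffectObservationWrapper:
    """Hypothetical wrapper: appends a predicted affect value to every observation and,
    if affect_weight > 0, blends that value into the task reward."""

    def __init__(self, env: Any, affect_model: Callable[[List[float]], float],
                 affect_weight: float = 0.0) -> None:
        self.env = env
        self.affect_model = affect_model
        self.affect_weight = affect_weight

    def _augment(self, obs: List[float]) -> Tuple[List[float], float]:
        affect = self.affect_model(obs)
        return list(obs) + [affect], affect

    def reset(self, seed: Optional[int] = None) -> Tuple[List[float], Dict[str, Any]]:
        obs, info = self.env.reset(seed=seed)
        obs, affect = self._augment(obs)
        return obs, {**info, "affect": affect}

    def step(self, action: Any):
        obs, reward, terminated, truncated, info = self.env.step(action)
        obs, affect = self._augment(obs)
        # Convex blend of task reward and predicted affect (weight 0 keeps the task reward).
        reward = (1 - self.affect_weight) * reward + self.affect_weight * affect
        return obs, reward, terminated, truncated, {**info, "affect": affect}


class CounterEnv:
    """Toy environment: the observation is a step counter and the reward is always 1."""

    def reset(self, seed: Optional[int] = None):
        self.t = 0
        return [0.0], {}

    def step(self, action: Any):
        self.t += 1
        return [float(self.t)], 1.0, self.t >= 3, False, {}


env = AffectObservationWrapper(CounterEnv(), affect_model=lambda o: o[0] / 10,
                               affect_weight=0.5)
obs, info = env.reset()
obs, reward, terminated, truncated, info = env.step(action=0)
print(obs, reward)
```

Keeping the affect value both in the observation and in `info` lets a policy condition on it while logging code can read it without parsing the observation vector.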

Dynamic Quality-Diversity Search

Apr 07, 2024

Abstract: Evolutionary search via the quality-diversity (QD) paradigm can discover highly performing solutions in different behavioural niches, showing considerable potential in complex real-world scenarios such as evolutionary robotics. Yet most QD methods only tackle static tasks that are fixed over time, which is rarely the case in the real world. Unlike noisy environments, where the fitness of an individual changes slightly at every evaluation, dynamic environments simulate tasks where external factors at unknown and irregular intervals alter the performance of the individual with a severity that is unknown a priori. Literature on optimisation in dynamic environments is extensive, yet such environments have not been explored in the context of QD search. This paper introduces a novel and generalisable Dynamic QD methodology that aims to keep the archive of past solutions updated in the case of environment changes. We also present a novel characterisation of dynamic environments that can be easily applied to well-known benchmarks, with minor interventions to move them from a static task to a dynamic one. Our Dynamic QD intervention is applied on MAP-Elites and CMA-ME, two powerful QD algorithms, and we test the dynamic variants on different dynamic tasks.
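The notion of keeping a QD archive up to date can be illustrated with a minimal MAP-Elites loop plus one simple update strategy: when the environment changes, re-evaluate every stored elite and re-insert it under its new fitness and descriptor. This is a hedged sketch, not the paper's Dynamic QD method (which also covers CMA-ME and its own characterisation of changes); the Gaussian mutation, the 5×5 grid, the toy fitness functions and the assumption that changes are detected externally are all illustrative.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Solution = List[float]
Archive = Dict[Tuple[int, ...], Tuple[Solution, float]]  # cell -> (elite, fitness)


def cell_of(descriptor: List[float], bins: int) -> Tuple[int, ...]:
    """Map a behaviour descriptor in [0, 1]^k to a grid cell."""
    return tuple(min(int(b * bins), bins - 1) for b in descriptor)


def insert(archive: Archive, x: Solution, fit: float, cell: Tuple[int, ...]) -> None:
    """Keep x only if its cell is empty or x beats the current elite."""
    if cell not in archive or fit > archive[cell][1]:
        archive[cell] = (x, fit)


def map_elites(evaluate: Callable[[Solution], float],
               describe: Callable[[Solution], List[float]], dims: int = 2,
               bins: int = 5, iterations: int = 2000, sigma: float = 0.1,
               seed: int = 0, archive: Optional[Archive] = None) -> Archive:
    rng = random.Random(seed)
    archive = {} if archive is None else archive
    for _ in range(iterations):
        if archive and rng.random() < 0.9:
            parent, _ = rng.choice(list(archive.values()))
            # Gaussian mutation, clipped to the unit hypercube.
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, sigma))) for g in parent]
        else:
            child = [rng.random() for _ in range(dims)]  # random initialisation
        insert(archive, child, evaluate(child), cell_of(describe(child), bins))
    return archive


def reevaluate_archive(archive: Archive, evaluate: Callable[[Solution], float],
                       describe: Callable[[Solution], List[float]],
                       bins: int = 5) -> Archive:
    """After an environment change, stored fitness values may be stale: re-score every
    elite and rebuild the archive, resolving cell collisions in favour of the fitter one."""
    refreshed: Archive = {}
    for x, _ in archive.values():
        insert(refreshed, x, evaluate(x), cell_of(describe(x), bins))
    return refreshed


def describe(x: Solution) -> List[float]:
    return x  # the 2D solution doubles as its behaviour descriptor


def before(x: Solution) -> float:
    return -sum((g - 0.5) ** 2 for g in x)  # optimum at the centre


def after(x: Solution) -> float:
    return -sum((g - 0.2) ** 2 for g in x)  # the environment change moves the optimum


archive = map_elites(before, describe)
archive = reevaluate_archive(archive, after, describe)  # change detected: refresh elites
archive = map_elites(after, describe, archive=archive, seed=1)  # continue in the new task
print(len(archive), "cells filled")
```

Without the refresh step, elites would keep fitness values computed under the old task, and better solutions for the new task could be wrongly rejected when compared against those stale scores.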

MAP-Elites with Transverse Assessment for Multimodal Problems in Creative Domains

Mar 11, 2024

Abstract: Recent advances in language-based generative models have paved the way for the orchestration of multiple generators of different artefact types (text, image, audio, etc.) into one system. Presently, many open-source pre-trained models combine text with other modalities, thus enabling shared vector embeddings to be compared across different generators. Within this context, we propose a novel approach to handle multimodal creative tasks using Quality Diversity evolution. Our contribution is a variation of the MAP-Elites algorithm, MAP-Elites with Transverse Assessment (MEliTA), which is tailored for multimodal creative tasks and leverages deep learned models that assess coherence across modalities. MEliTA decouples the artefacts' modalities and promotes cross-pollination between elites. As a test bed for this algorithm, we generate text descriptions and cover images for a hypothetical video game and assign each artefact a unique modality-specific behavioural characteristic. Results indicate that MEliTA can improve text-to-image mappings within the solution space, compared to a baseline MAP-Elites algorithm that strictly treats each image-text pair as one solution. Our approach represents a significant step forward in multimodal bottom-up orchestration and lays the groundwork for more complex systems coordinating multimodal creative agents in the future.

Large Language Models and Games: A Survey and Roadmap

Feb 28, 2024

Abstract: Recent years have seen an explosive increase in research on large language models (LLMs), and accompanying public engagement on the topic. While starting as a niche area within natural language processing, LLMs have shown remarkable potential across a broad range of applications and domains, including games. This paper surveys the current state of the art across the various applications of LLMs in and for games, and identifies the different roles LLMs can take within a game. Importantly, we discuss underexplored areas and promising directions for future uses of LLMs in games and we reconcile the potential and limitations of LLMs within the games domain. As the first comprehensive survey and roadmap at the intersection of LLMs and games, we are hopeful that this paper will serve as the basis for groundbreaking research and innovation in this exciting new field.
