Gang Wei

MIPI 2024 Challenge on Nighttime Flare Removal: Methods and Results

Apr 30, 2024

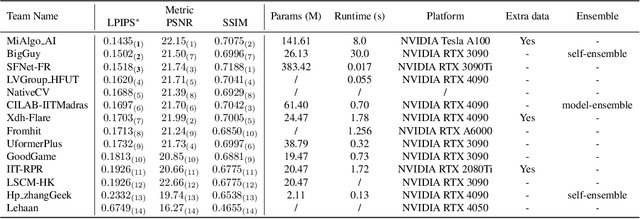

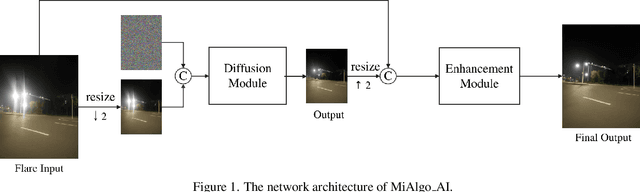

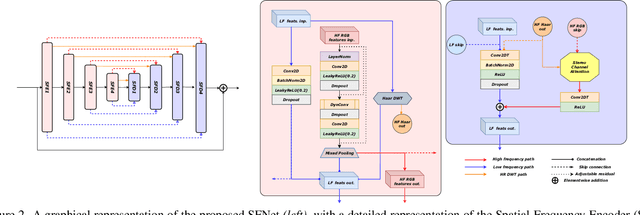

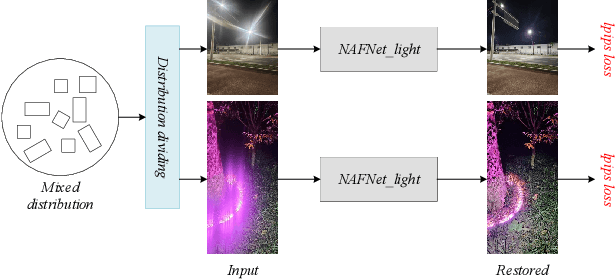

Abstract: The increasing demand for computational photography and imaging on mobile platforms has led to the widespread development and integration of advanced image sensors with novel algorithms in camera systems. However, the scarcity of high-quality data for research and the limited opportunities for in-depth exchange between industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). Building on the achievements of the previous MIPI Workshops held at ECCV 2022 and CVPR 2023, we introduce our third MIPI challenge, which includes three tracks focusing on novel image sensors and imaging algorithms. In this paper, we summarize and review the Nighttime Flare Removal track of MIPI 2024. In total, 170 participants registered, and 14 teams submitted results in the final testing phase. The solutions developed in this challenge achieved state-of-the-art performance on Nighttime Flare Removal. More details of this challenge and the link to the dataset can be found at https://mipi-challenge.org/MIPI2024/.

DAVOS: Semi-Supervised Video Object Segmentation via Adversarial Domain Adaptation

May 24, 2021

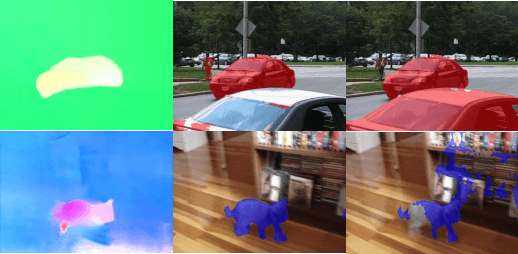

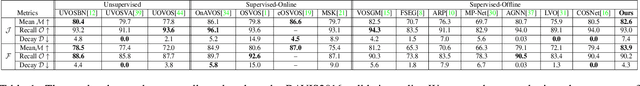

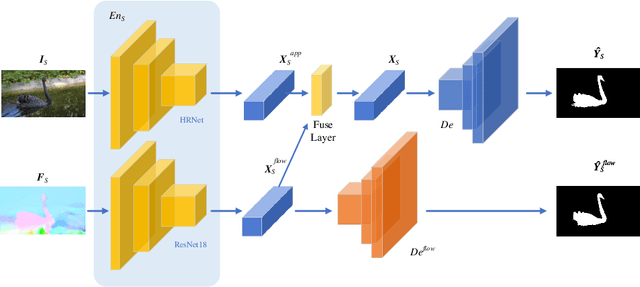

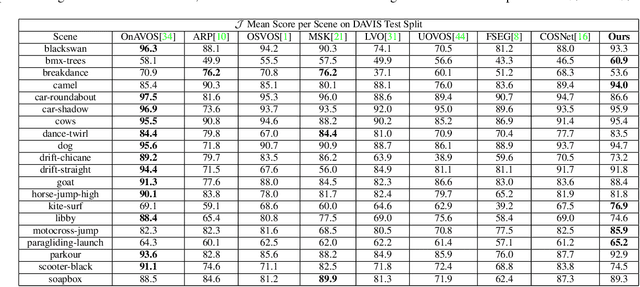

Abstract: Domain shift has always been one of the primary issues in video object segmentation (VOS): models degrade when tested on unfamiliar datasets. Recently, many online methods have emerged to narrow the performance gap between training data (source domain) and test data (target domain) by fine-tuning on annotations of test data, which are usually scarce. In this paper, we propose a novel method to tackle domain shift by first introducing adversarial domain adaptation to the VOS task, with supervised training on the source domain and unsupervised training on the target domain. By fusing appearance and motion features with a convolution layer, and by adding supervision to the motion branch, our model achieves state-of-the-art performance on DAVIS2016 with an 82.6% mean IoU score after supervised training. Meanwhile, our adversarial domain adaptation strategy significantly improves the performance of the trained model when applied to FBMS59 and Youtube-Object, without exploiting extra annotations.
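For readers unfamiliar with the metric quoted above, the following is a minimal sketch of a mean IoU computation over binary masks. It is not the authors' evaluation code: the function names `iou` and `mean_iou`, the toy masks, and the convention that two empty masks score 1.0 are all assumptions made for illustration, and the exact averaging protocol of the benchmark is not stated in the abstract.

```python
def iou(pred, target):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Assumed convention: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mean_iou(preds, targets):
    """Average the per-frame IoU over paired predictions and ground-truth masks."""
    scores = [iou(p, t) for p, t in zip(preds, targets)]
    return sum(scores) / len(scores)


# Two toy 2x3 frames (hypothetical data, not taken from any benchmark).
preds = [[[1, 1, 0], [0, 0, 0]], [[1, 0, 0], [1, 0, 0]]]
targets = [[[1, 0, 0], [0, 0, 0]], [[1, 0, 0], [1, 0, 0]]]
print(mean_iou(preds, targets))  # frame IoUs are 0.5 and 1.0, so this prints 0.75
```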

A Light-Weight Object Detection Framework with FPA Module for Optical Remote Sensing Imagery

Sep 07, 2020

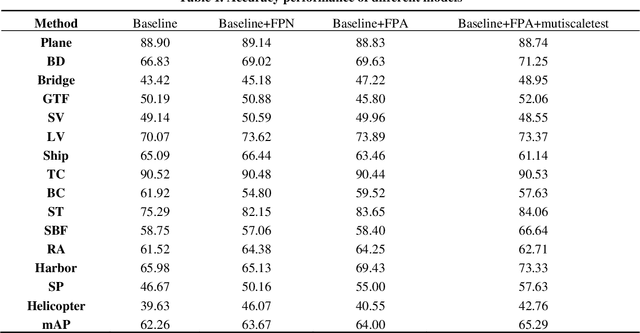

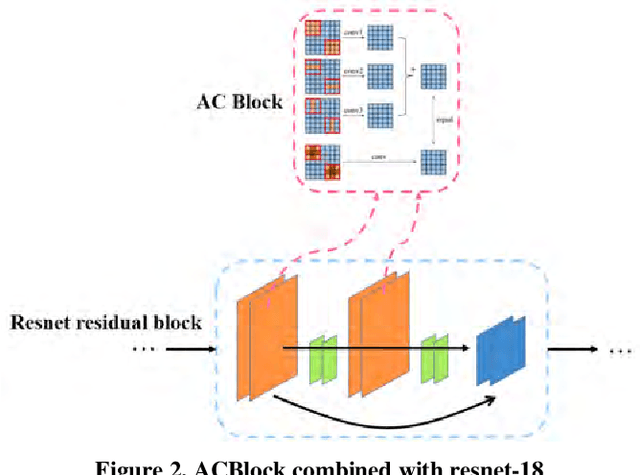

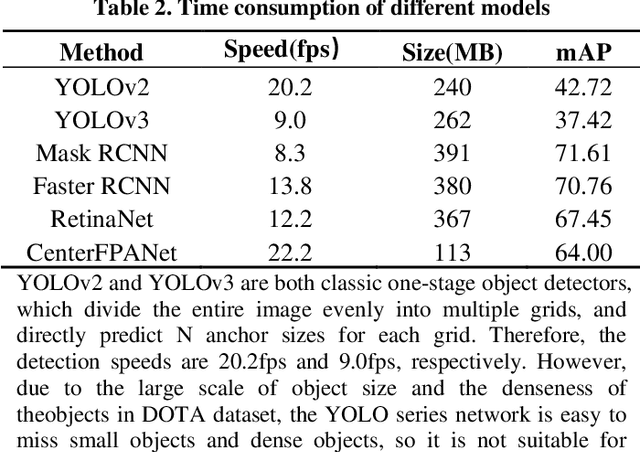

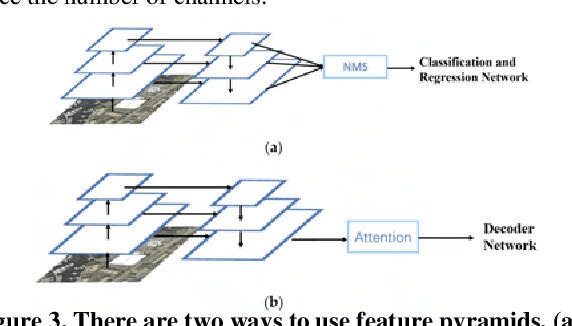

Abstract: With the development of remote sensing technology, acquiring remote sensing images has become increasingly easy, which provides sufficient data for the task of detecting remote sensing objects. However, detecting objects quickly and accurately in large numbers of complex optical remote sensing images remains a challenging and widely studied problem. In this paper, we propose an efficient anchor-free object detector, CenterFPANet. To pursue speed, we use a lightweight backbone and introduce the asymmetric convolution block. To improve accuracy, we design the FPA module, which links the feature maps of different levels and introduces an attention mechanism to dynamically adjust the weight of each level of feature maps. This addresses the detection difficulty caused by the large size range of remote sensing objects and can improve the accuracy of remote sensing object detection without reducing the detection speed. On the DOTA dataset, CenterFPANet reaches an mAP of 64.00% at 22.2 FPS, which is close to the accuracy of the anchor-based methods currently in use while being much faster. Compared with Faster RCNN, its mAP is 6.76% lower, but it is 60.87% faster. All in all, CenterFPANet achieves a balance between speed and accuracy in large-scale optical remote sensing object detection.
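The idea of dynamically weighting feature levels can be illustrated with a small sketch. This is not the FPA module itself, whose details the abstract does not give: it assumes the feature maps have already been brought to a common size, uses a softmax over one score per level as the weighting, and takes those scores as plain inputs, whereas an attention module would predict them from the features. The names `softmax` and `fuse_levels` are hypothetical.

```python
import math


def softmax(scores):
    """Turn arbitrary per-level scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_levels(level_maps, level_scores):
    """Weighted sum of same-sized 2D feature maps, one weight per level."""
    weights = softmax(level_scores)
    rows, cols = len(level_maps[0]), len(level_maps[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for weight, fmap in zip(weights, level_maps):
        for r in range(rows):
            for c in range(cols):
                fused[r][c] += weight * fmap[r][c]
    return weights, fused


# Three toy 2x2 "levels" with constant values (hypothetical data).
maps = [[[1.0, 1.0], [1.0, 1.0]],
        [[2.0, 2.0], [2.0, 2.0]],
        [[3.0, 3.0], [3.0, 3.0]]]

# Equal scores give equal weights, so the fused map is the plain average.
weights, fused = fuse_levels(maps, [0.0, 0.0, 0.0])
print(weights, fused)
```

Raising the score of one level shifts the fused map toward that level, which is the behavior a learned weighting would rely on when objects of very different sizes favor different resolutions.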
