Frank Hölzle

Enhancing Privacy: The Utility of Stand-Alone Synthetic CT and MRI for Tumor and Bone Segmentation

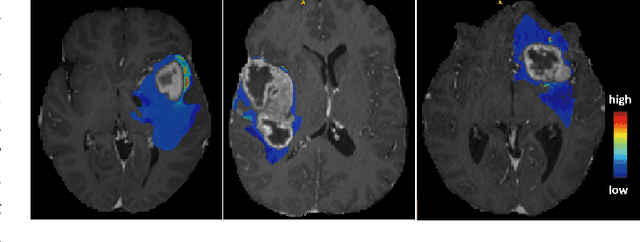

Jun 13, 2025

Abstract: AI requires extensive datasets, while medical data is subject to strict data protection. Anonymization is essential, but poses a challenge for some regions, such as the head, because identifying structures overlap with regions of clinical interest. Synthetic data offers a potential solution, but studies often lack rigorous evaluation of realism and utility. Therefore, we investigate to what extent synthetic data can replace real data in segmentation tasks. We employed head and neck cancer CT scans and brain glioma MRI scans from two large datasets. Synthetic data were generated using generative adversarial networks and diffusion models. We evaluated the quality of the synthetic data using MAE, MS-SSIM, radiomics, and a Visual Turing Test (VTT) performed by 5 radiologists, and their usefulness in segmentation tasks using the Dice similarity coefficient (DSC). Radiomics indicate high fidelity of synthetic MRIs, but the generative models fall short in producing highly realistic CT tissue, with correlation coefficients of 0.8784 and 0.5461 for MRI and CT tumors, respectively. DSC results indicate limited utility of synthetic data: tumor segmentation achieved DSC=0.064 on CT and 0.834 on MRI, while bone segmentation achieved a mean DSC=0.841. A relation between DSC and correlation is observed, but it is limited by the complexity of the task. VTT results show synthetic CTs' utility, but with limited educational applications. Synthetic data can be used independently for the segmentation task, although its utility is limited by the complexity of the structures to segment. Advancing generative models to better tolerate heterogeneous inputs and learn subtle details is essential for enhancing their realism and expanding their application potential.
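For readers unfamiliar with the segmentation metric reported above, the following is a minimal sketch of the Dice similarity coefficient on binary masks. The function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration; the abstract does not describe the authors' implementation.

```python
import numpy as np


def dice_coefficient(pred, target):
    """Dice similarity coefficient between two binary masks.

    DSC = 2 * |A ∩ B| / (|A| + |B|), ranging from 0 (no overlap)
    to 1 (identical masks). Illustrative helper, not the authors' code.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(2.0 * intersection / total)


# Toy 2x2 example: one overlapping pixel, three foreground pixels in total.
predicted = [[1, 1], [0, 0]]
reference = [[1, 0], [0, 0]]
score = dice_coefficient(predicted, reference)
```

Under this definition, a tumor DSC of 0.064 means the predicted and reference masks share almost no voxels, whereas 0.834 indicates substantial overlap.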

Cyto R-CNN and CytoNuke Dataset: Towards reliable whole-cell segmentation in bright-field histological images

Feb 04, 2024

Abstract: Background: Cell segmentation in bright-field histological slides is a crucial topic in medical image analysis. Having access to accurate segmentation allows researchers to examine the relationship between cellular morphology and clinical observations. Unfortunately, most segmentation methods known today are limited to nuclei and cannot segment the cytoplasm. Material & Methods: We present a new network architecture, Cyto R-CNN, that is able to accurately segment whole cells (with both the nucleus and the cytoplasm) in bright-field images. We also present a new dataset, CytoNuke, consisting of several thousand manual annotations of head and neck squamous cell carcinoma cells. Utilizing this dataset, we compared the performance of Cyto R-CNN to other popular cell segmentation algorithms, including QuPath's built-in algorithm, StarDist and Cellpose. To evaluate segmentation performance, we calculated AP50 and AP75 and measured 17 morphological and staining-related features for all detected cells. We compared these measurements to the gold standard of manual segmentation using the Kolmogorov-Smirnov test. Results: Cyto R-CNN achieved an AP50 of 58.65% and an AP75 of 11.56% in whole-cell segmentation, outperforming all other methods (QuPath $19.46/0.91\%$; StarDist $45.33/2.32\%$; Cellpose $31.85/5.61\%$). Cell features derived from Cyto R-CNN showed the best agreement with the gold standard ($\bar{D} = 0.15$), outperforming QuPath ($\bar{D} = 0.22$), StarDist ($\bar{D} = 0.25$) and Cellpose ($\bar{D} = 0.23$). Conclusion: Our newly proposed Cyto R-CNN architecture outperforms current algorithms in whole-cell segmentation while providing more reliable cell measurements than any other model. This could improve digital pathology workflows, potentially leading to improved diagnosis. Moreover, our published dataset can be used to develop further models in the future.
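The feature comparison above can be sketched as follows. This is an illustrative reading only: it assumes that $\bar{D}$ is the two-sample Kolmogorov-Smirnov statistic averaged over the measured features, which the abstract does not state explicitly. The function names and the feature names in the toy data are hypothetical.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic D.

    D is the largest absolute difference between the empirical CDFs
    of the two samples; 0 means identical distributions, 1 means
    completely separated samples.
    """
    a = sorted(sample_a)
    b = sorted(sample_b)
    d = 0.0
    # The maximum difference is always attained at an observed value.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def mean_ks(features_model, features_reference):
    """Average D over all features (assumed meaning of D-bar)."""
    values = [
        ks_statistic(features_model[name], features_reference[name])
        for name in features_reference
    ]
    return sum(values) / len(values)


# Toy data with hypothetical feature names, not taken from the paper.
model = {"area": [1.0, 2.0], "circularity": [0.5, 0.7, 0.9]}
manual = {"area": [3.0, 4.0], "circularity": [0.5, 0.7, 0.9]}
d_bar = mean_ks(model, manual)
```

A lower averaged statistic means the feature distributions measured on the model's segmentations are closer to those measured on the manual annotations.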

MedShapeNet -- A Large-Scale Dataset of 3D Medical Shapes for Computer Vision

Sep 12, 2023

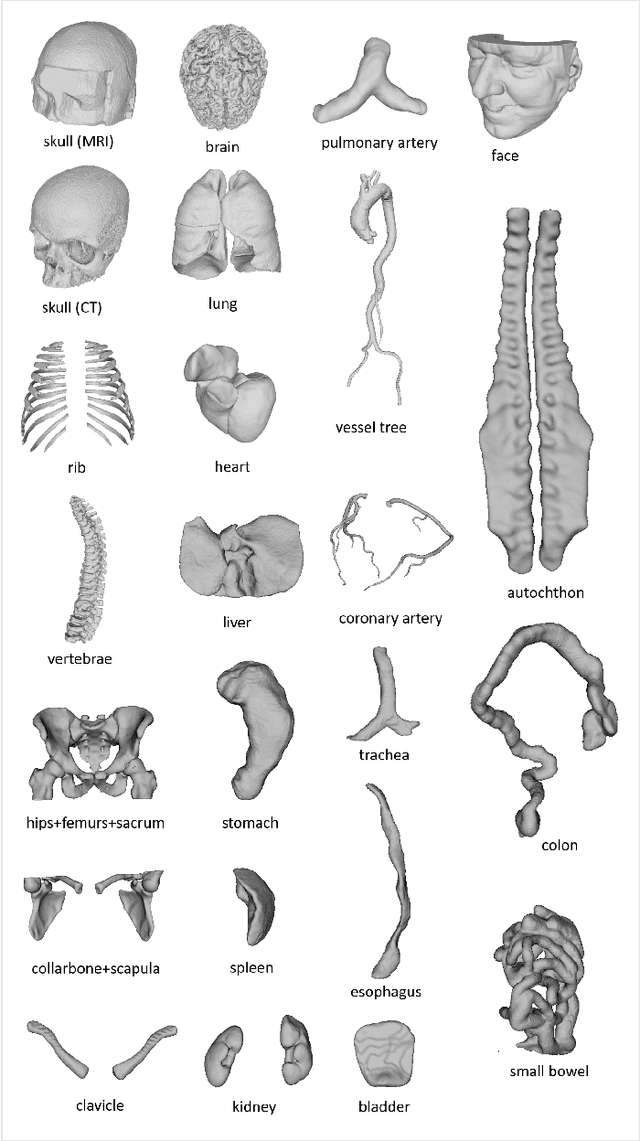

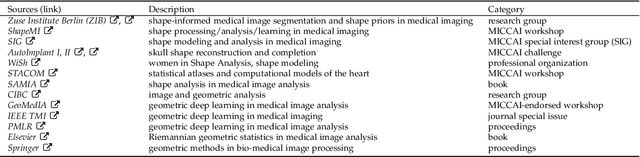

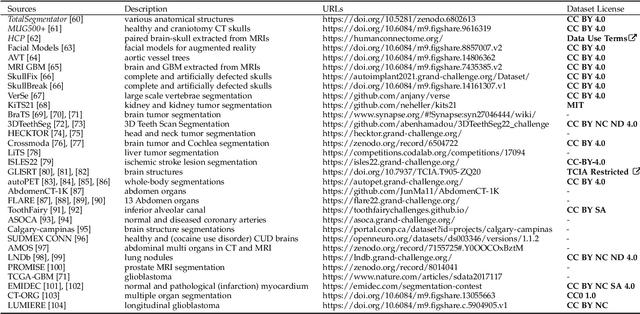

Abstract: We present MedShapeNet, a large collection of anatomical shapes (e.g., bones, organs, vessels) and 3D surgical instrument models. Prior to the deep learning era, the broad application of statistical shape models (SSMs) in medical image analysis was evidence that shapes were commonly used to describe medical data. Nowadays, however, state-of-the-art (SOTA) deep learning algorithms in medical imaging are predominantly voxel-based. In computer vision, by contrast, shapes (including voxel occupancy grids, meshes, point clouds and implicit surface models) are the preferred data representations in 3D, as seen from the numerous shape-related publications in premier vision conferences, such as the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), as well as the increasing popularity of ShapeNet (about 51,300 models) and Princeton ModelNet (127,915 models) in computer vision research. MedShapeNet is created as an alternative to these commonly used shape benchmarks to facilitate the translation of data-driven vision algorithms to medical applications, and it extends the opportunities to adapt SOTA vision algorithms to solve critical medical problems. In addition, the majority of the medical shapes in MedShapeNet are modeled directly on the imaging data of real patients, and therefore it complements existing shape benchmarks, which consist of computer-aided design (CAD) models. MedShapeNet currently includes more than 100,000 medical shapes and provides annotations in the form of paired data. It is therefore also a freely available repository of 3D models for extended reality (virtual reality - VR, augmented reality - AR, mixed reality - MR) and medical 3D printing. This white paper describes in detail the motivations behind MedShapeNet, the shape acquisition procedures, the use cases, as well as the usage of the online shape search portal: https://medshapenet.ikim.nrw/
