Fernando Garcia

Data-driven emotional body language generation for social robotics

May 02, 2022

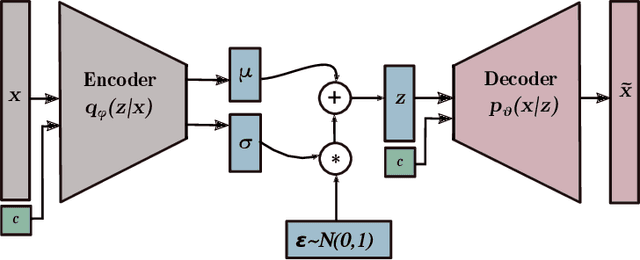

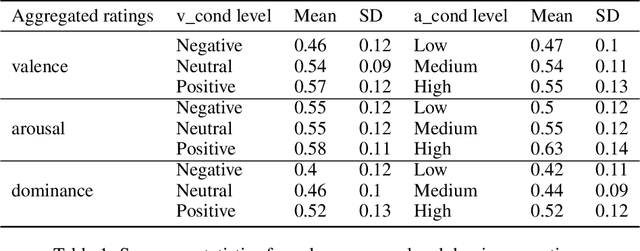

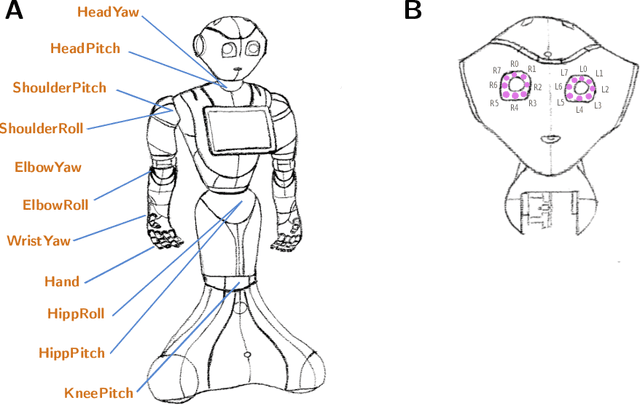

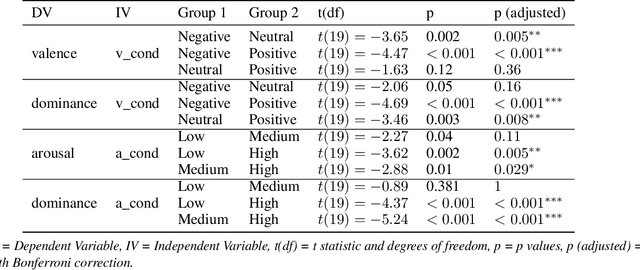

Abstract: In social robotics, endowing humanoid robots with the ability to generate bodily expressions of affect can improve human-robot interaction and collaboration, since humans attribute, and perhaps subconsciously anticipate, such traces to perceive an agent as engaging, trustworthy, and socially present. Robotic emotional body language needs to be believable, nuanced, and relevant to the context. We implemented a deep learning data-driven framework that learns from a few hand-designed robotic bodily expressions and can generate numerous new ones of similar believability and lifelikeness. The framework uses a Conditional Variational Autoencoder (CVAE) and a sampling approach based on the geometric properties of the model's latent space to condition the generative process on targeted levels of valence and arousal. The evaluation study found that the anthropomorphism and animacy of the generated expressions are not perceived differently from those of the hand-designed ones, and that the emotional conditioning was adequately differentiable between most levels, except for the neutral-positive valence pair and the low-medium arousal pair. Furthermore, an exploratory analysis of the results reveals a possible impact of the conditioning on the perceived dominance of the robot, as well as on the participants' attention.
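
As a rough illustration of how a CVAE decoder can be conditioned on emotion, the sketch below appends one-hot codes for the target valence and arousal levels to a latent vector drawn from the standard normal prior and decodes the result. It is a minimal sketch under stated assumptions, not the authors' framework: the three-level label sets, the toy single-layer decoder `decode` with random weights, and the output size are all hypothetical, and plain prior sampling stands in for the paper's geometry-based latent sampling.

```python
import numpy as np

# Hypothetical condition levels: the abstract names neutral/positive valence
# and low/medium arousal; "negative" and "high" are assumed here.
VALENCE_LEVELS = ["negative", "neutral", "positive"]
AROUSAL_LEVELS = ["low", "medium", "high"]


def one_hot(index, size):
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec


def condition_vector(valence, arousal):
    """Concatenate one-hot codes for the target valence and arousal levels."""
    return np.concatenate([
        one_hot(VALENCE_LEVELS.index(valence), len(VALENCE_LEVELS)),
        one_hot(AROUSAL_LEVELS.index(arousal), len(AROUSAL_LEVELS)),
    ])


def decode(z, c, weights, bias):
    """Hypothetical toy decoder: a single tanh layer applied to [z, c]."""
    return np.tanh(weights @ np.concatenate([z, c]) + bias)


def generate(valence, arousal, n_samples, latent_dim, weights, bias, rng):
    """Sample z ~ N(0, I) and decode every sample under the same condition."""
    c = condition_vector(valence, arousal)
    return np.stack([
        decode(rng.standard_normal(latent_dim), c, weights, bias)
        for _ in range(n_samples)
    ])


# Usage with random (untrained) weights and a hypothetical 60-value output.
rng = np.random.default_rng(0)
latent_dim, output_dim = 8, 60
W = rng.standard_normal((output_dim, latent_dim + 6)) * 0.1
b = np.zeros(output_dim)
motions = generate("positive", "high", 5, latent_dim, W, b, rng)
print(motions.shape)  # (5, 60)
```

Because the condition vector is fixed while `z` varies, every generated sample targets the same valence and arousal levels; in a trained model the decoder would be a learned network and each output row would be reshaped into joint trajectories.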

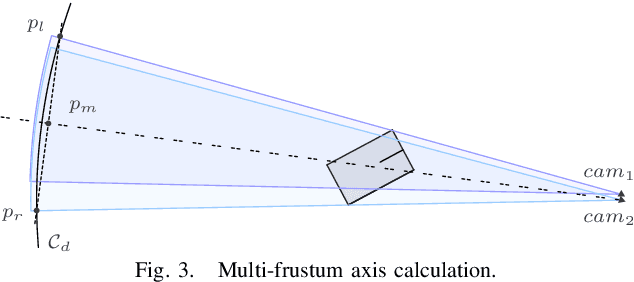

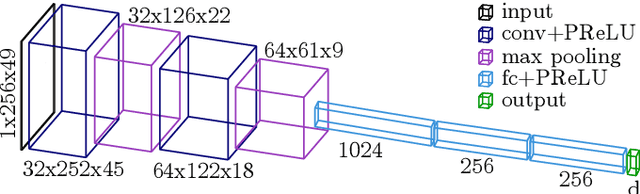

siaNMS: Non-Maximum Suppression with Siamese Networks for Multi-Camera 3D Object Detection

Feb 19, 2020

Abstract: The rapid development of embedded hardware in autonomous vehicles broadens their computational capabilities, making it possible to mount more complete sensor setups able to handle driving scenarios of higher complexity. As a result, new challenges, such as multiple detections of the same object, have to be addressed. In this work, a siamese network is integrated into the pipeline of a well-known 3D object detection approach to suppress duplicate proposals coming from different cameras via re-identification. Additionally, the resulting associations are exploited to enhance the 3D box regression of each object by aggregating the corresponding LiDAR frustums. The experimental evaluation on the nuScenes dataset shows that the proposed method outperforms traditional non-maximum suppression (NMS) approaches.
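
The idea of replacing overlap-based suppression with re-identification can be sketched as a greedy NMS in which a lower-scoring proposal from a different camera is suppressed when its appearance embedding is close enough to that of a kept proposal. This is a hypothetical sketch, not the paper's pipeline: the embeddings stand in for the output of a siamese branch, and the cosine-similarity test, the `sim_threshold` value, and the function name `reid_nms` are assumptions. The returned groups mirror the abstract's use of associations, since each kept proposal lists the duplicates whose LiDAR frustums could then be aggregated.

```python
import numpy as np


def cosine_similarity(a, b):
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def reid_nms(scores, embeddings, camera_ids, sim_threshold=0.8):
    """Hypothetical greedy NMS driven by appearance similarity.

    Proposals are visited in descending score order. A kept proposal absorbs
    every not-yet-processed proposal from a *different* camera whose
    embedding has cosine similarity >= sim_threshold. Returns a dict mapping
    each kept index to the indices it absorbed (itself first).
    """
    order = np.argsort(-np.asarray(scores))
    suppressed = set()
    groups = {}
    for i in order:
        i = int(i)
        if i in suppressed:
            continue
        groups[i] = [i]
        for j in order:
            j = int(j)
            if j == i or j in suppressed or j in groups:
                continue
            if camera_ids[j] == camera_ids[i]:
                continue  # same-camera duplicates are left to standard NMS
            if cosine_similarity(embeddings[i], embeddings[j]) >= sim_threshold:
                suppressed.add(j)
                groups[i].append(j)
    return groups


# Usage: proposals 0 and 1 look alike but come from different cameras.
scores = [0.9, 0.8, 0.7]
embeddings = np.array([[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]])
camera_ids = [0, 1, 1]
print(reid_nms(scores, embeddings, camera_ids))  # {0: [0, 1], 2: [2]}
```

Skipping same-camera pairs reflects the abstract's focus on duplicates coming from different cameras; within one camera, a conventional IoU-based NMS would still apply.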

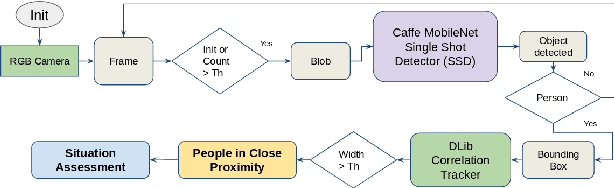

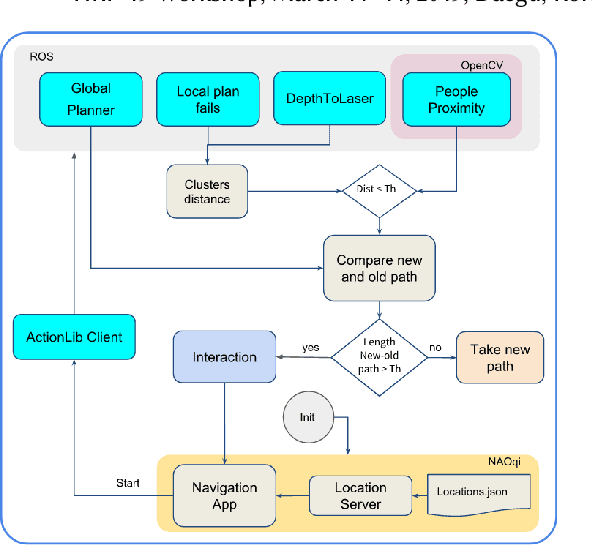

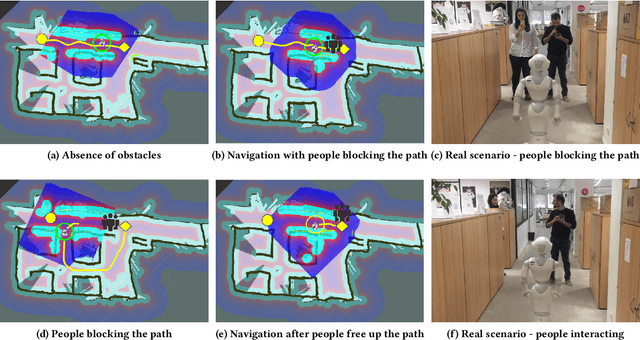

Enabling Socially Competent navigation through incorporating HRI

Apr 19, 2019

Abstract: Over the last years, social robots have been deployed in public environments, making evident the need for human-aware navigation capabilities. In this regard, the robotics community has made efforts to include proxemics or social conventions within navigation approaches. Nevertheless, few works have tackled the problem of labelling a human who blocks the robot's motion trajectory as an interactive agent. Current state-of-the-art navigation planners will either propose an alternative path or freeze the motion until the path is free. We present the first prototype of a framework designed to enhance the social competency of robots while navigating in indoor environments. The implementation uses open-source navigation and object detection software: specifically, the Robot Operating System (ROS) navigation stack for navigation, and OpenCV with Caffe deep learning models and the MobileNet Single Shot Detector (SSD) for object detection.

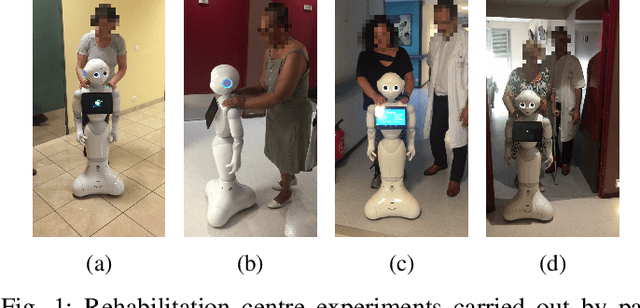

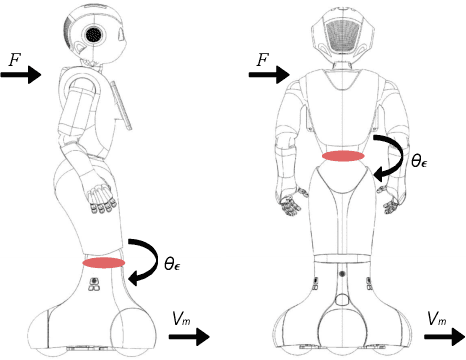

Wait for me! Towards socially assistive walk companions

Apr 18, 2019

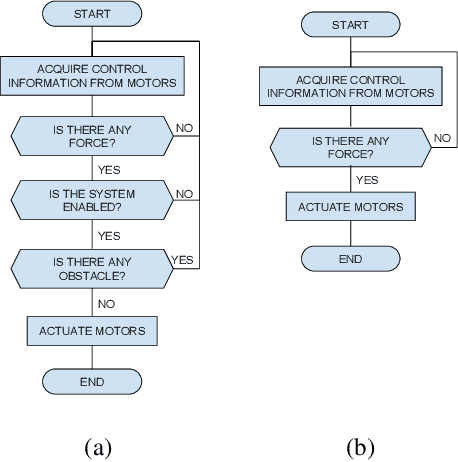

Abstract: The aim of the present study is to design a humanoid robot guide that serves as a walking trainer for elderly and rehabilitation patients. The system is based on the humanoid robot Pepper, with a compliance approach that matches the robot's pace to the user's motion intention. This feasibility study is backed up by an experimental evaluation conducted in a rehabilitation centre. We hypothesize that the Pepper robot, used as an assistive partner, can also benefit elderly users by motivating them to perform physical activity.

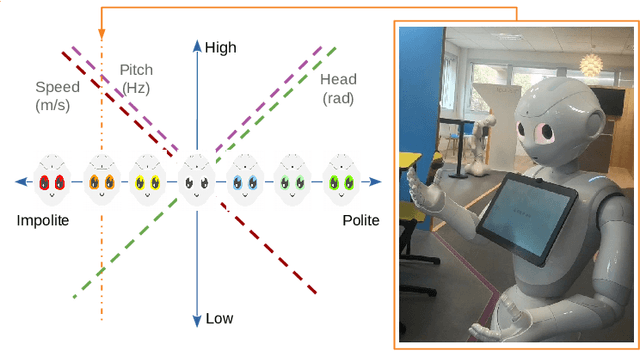

Towards Dialogue-based Navigation with Multivariate Adaptation driven by Intention and Politeness for Social Robots

Nov 14, 2018

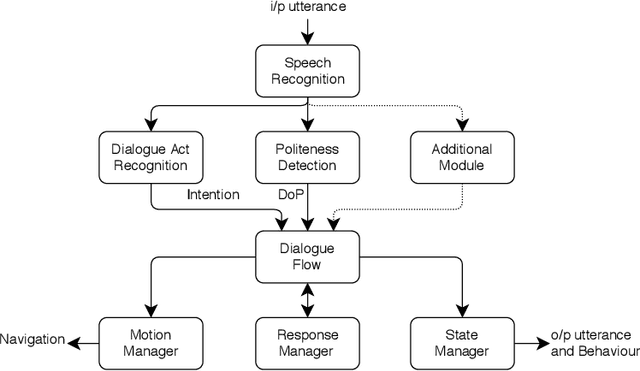

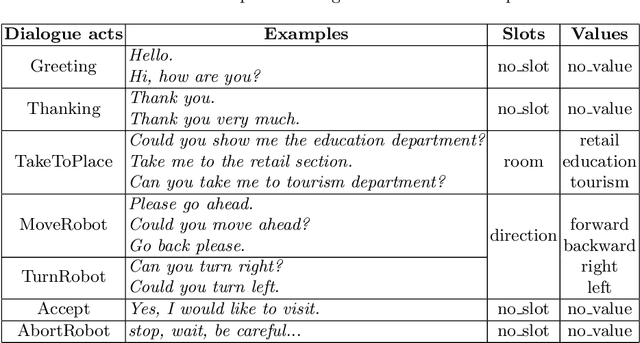

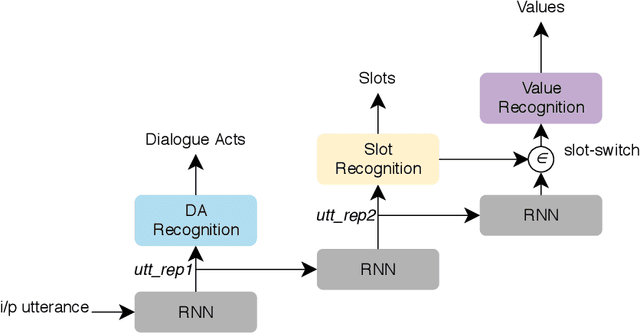

Abstract: Service robots need to show appropriate social behaviour in order to be deployed in social environments such as healthcare, education, and retail. Some of the main capabilities that robots should have are navigation and conversational skills. An impatient person, for example, might want the robot to navigate faster, and vice versa. Linguistic features that indicate politeness can provide social cues about whether a person is patient or impatient. The novelty of this paper is the dynamic incorporation of politeness into robotic dialogue systems for navigation: understanding the politeness in users' speech can be used to modulate the robot's behaviour and responses. We therefore developed a dialogue system for navigating an indoor environment that produces different robot behaviours and responses based on the user's intention and degree of politeness. We deploy and test our system on the Pepper robot, which adapts to changes in the user's politeness.

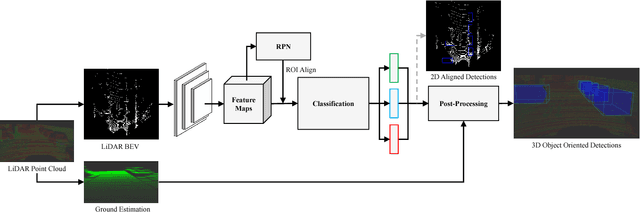

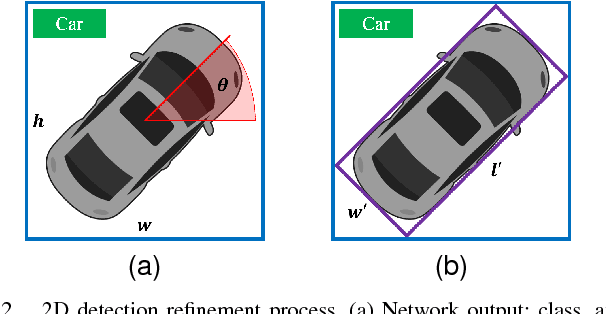

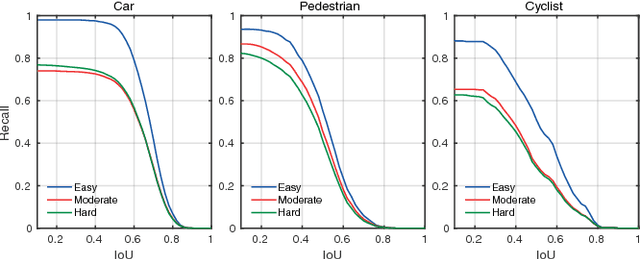

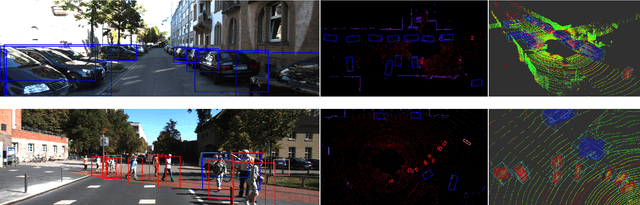

BirdNet: a 3D Object Detection Framework from LiDAR information

May 03, 2018

Abstract: Understanding driving situations regardless of the conditions of the traffic scene is a cornerstone on the path towards autonomous vehicles; however, although common sensor setups already include complementary devices such as LiDAR or radar, most research on perception systems has traditionally focused on computer vision. We present a LiDAR-based 3D object detection pipeline consisting of three stages. First, laser information is projected into a novel cell encoding for bird's eye view projection. Then, both the object's location on the ground plane and its heading are estimated through a convolutional neural network originally designed for image processing. Finally, 3D oriented detections are computed in a post-processing phase. Experiments on the KITTI dataset show that the proposed framework achieves state-of-the-art results among comparable methods. Further tests with different LiDAR sensors in real scenarios assess the multi-device capabilities of the approach.
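
A bird's eye view cell encoding of the kind described in the first stage can be sketched with NumPy: points are binned into a ground-plane grid and each cell stores a few statistics. The channel choice here (maximum height, mean intensity, and point density clipped by a hypothetical `max_points`), the grid extents, and the 0.1 m cell size are assumptions for illustration, not necessarily the encoding used by BirdNet.

```python
import numpy as np


def bev_encode(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
               cell=0.1, max_points=16):
    """Encode an (N, 4) array of [x, y, z, intensity] LiDAR points as a
    (3, nx, ny) bird's eye view grid. Assumed channels per cell:
    0: maximum height, 1: mean intensity, 2: point count / max_points
    clipped to 1. Empty cells are filled with zeros."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))

    # Keep only the points that fall inside the grid extents.
    x, y, z, r = points.T
    inside = ((x >= x_range[0]) & (x < x_range[1]) &
              (y >= y_range[0]) & (y < y_range[1]))
    x, y, z, r = x[inside], y[inside], z[inside], r[inside]

    # Integer cell indices, clipped to guard against float round-off.
    ix = np.minimum(((x - x_range[0]) / cell).astype(int), nx - 1)
    iy = np.minimum(((y - y_range[0]) / cell).astype(int), ny - 1)
    flat = ix * ny + iy

    # Per-cell statistics accumulated over the flattened grid.
    count = np.bincount(flat, minlength=nx * ny)
    intensity_sum = np.bincount(flat, weights=r, minlength=nx * ny)
    max_height = np.full(nx * ny, -np.inf)
    np.maximum.at(max_height, flat, z)

    occupied = count > 0
    height = np.where(occupied, max_height, 0.0)
    intensity = np.zeros(nx * ny)
    intensity[occupied] = intensity_sum[occupied] / count[occupied]
    density = np.minimum(count / max_points, 1.0)

    return np.stack([height, intensity, density]).reshape(3, nx, ny)


# Usage: two points share a cell, one lands elsewhere, one is out of range.
pts = np.array([[0.05, -19.95, 1.0, 0.5],
                [0.05, -19.95, 2.0, 0.7],
                [10.05, 0.05, -1.0, 0.2],
                [50.0, 0.0, 0.0, 1.0]])
grid = bev_encode(pts)
print(grid.shape)  # (3, 400, 400)
```

The resulting array has the layout of a 3-channel image, which is what allows a convolutional network originally designed for image processing to consume it in the second stage.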