Chandrakant Bothe

Conversational Analysis of Daily Dialog Data using Polite Emotional Dialogue Acts

May 11, 2022

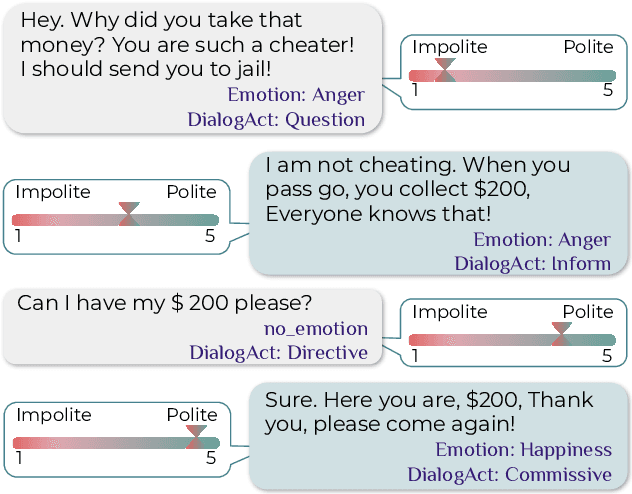

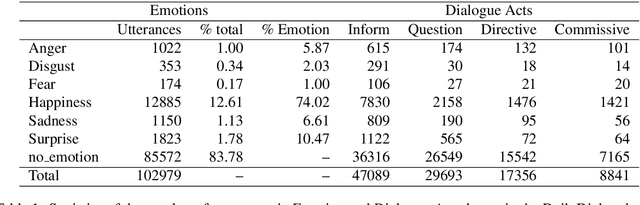

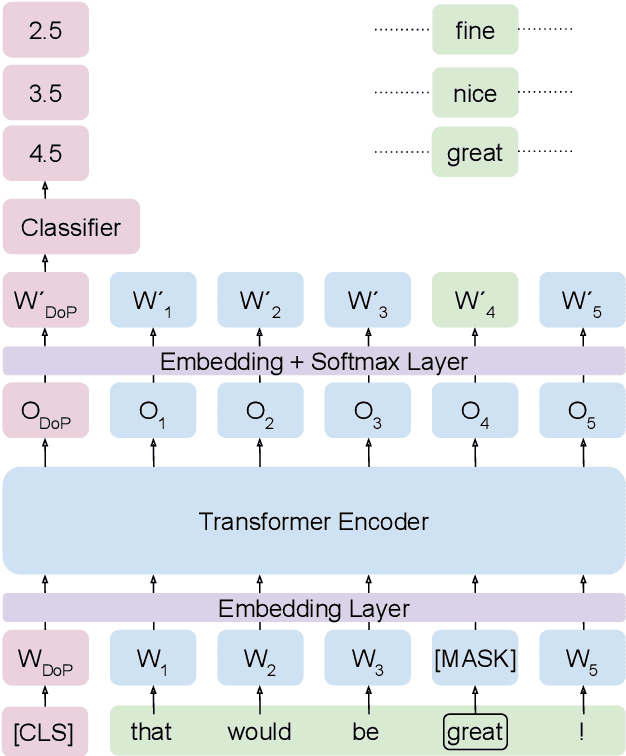

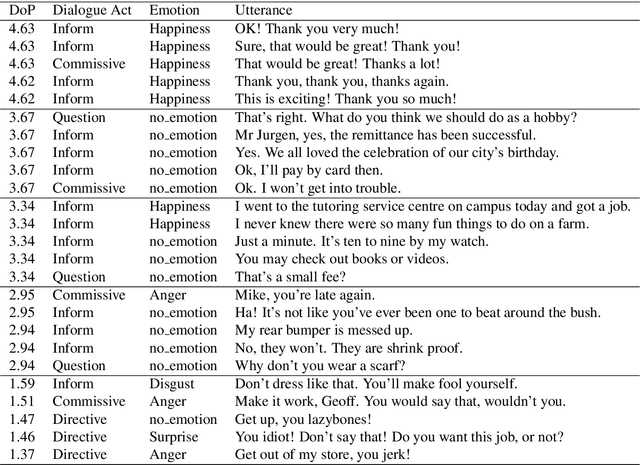

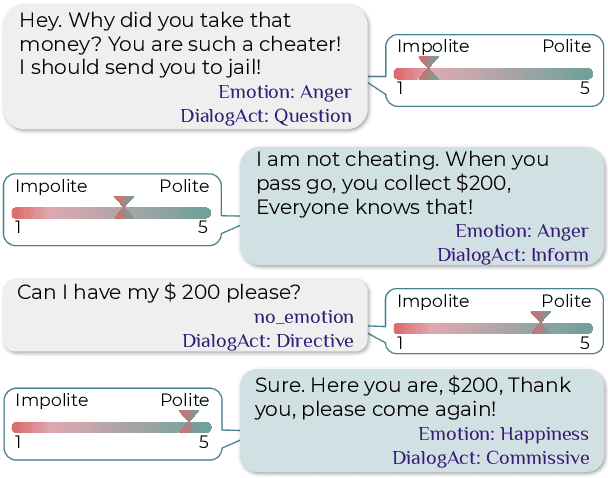

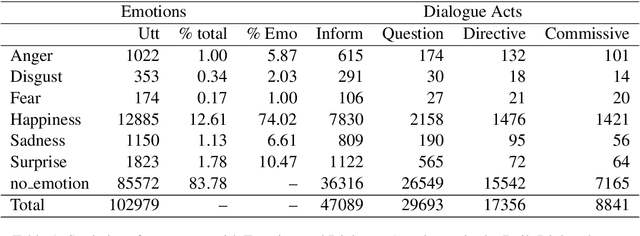

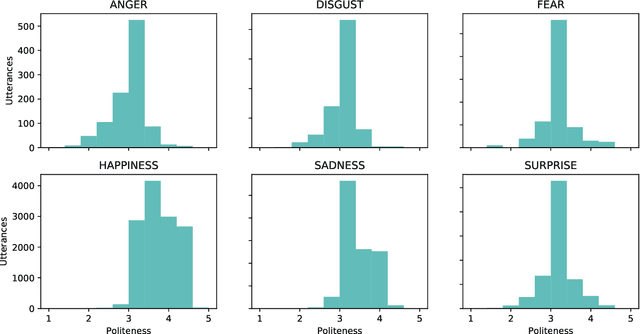

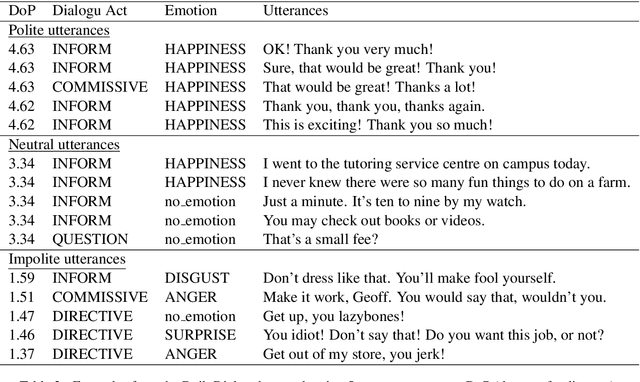

Abstract: Many socio-linguistic cues are used in conversational analysis, such as emotion, sentiment, and dialogue acts. One of the fundamental cues is politeness, which linguistically possesses properties such as social manners useful in conversational analysis. This article presents findings of polite emotional dialogue act associations, where we can correlate the relationships between the socio-linguistic cues. We confirm our hypothesis that the utterances with the emotion classes Anger and Disgust are more likely to be impolite. At the same time, Happiness and Sadness are more likely to be polite. A less expected phenomenon occurs with the dialogue acts Inform and Commissive, which contain more polite utterances than Question and Directive. Finally, we conclude with future work that builds on these findings to extend the learning of social behaviours using politeness.

Polite Emotional Dialogue Acts for Conversational Analysis in Daily Dialog Data

Dec 28, 2021

Abstract: Many socio-linguistic cues are used in conversational analysis, such as emotion, sentiment, and dialogue acts. One of the fundamental social cues is politeness, which linguistically possesses properties useful in conversational analysis. This short article presents brief findings on polite emotional dialogue acts, where we can correlate the relational bonds between these socio-linguistic cues. We found that utterances with the emotion classes Anger and Disgust are more likely to be impolite, while those with Happiness and Sadness are more likely to be polite. A similar phenomenon occurs with dialogue acts: Inform and Commissive contain more polite utterances than Question and Directive. Finally, we conclude with future work based on these findings.

Enriching Existing Conversational Emotion Datasets with Dialogue Acts using Neural Annotators

Dec 05, 2019

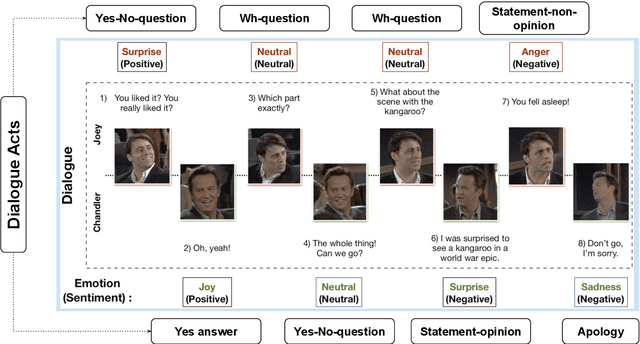

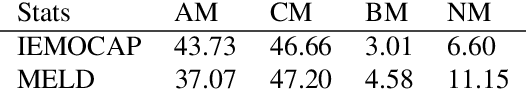

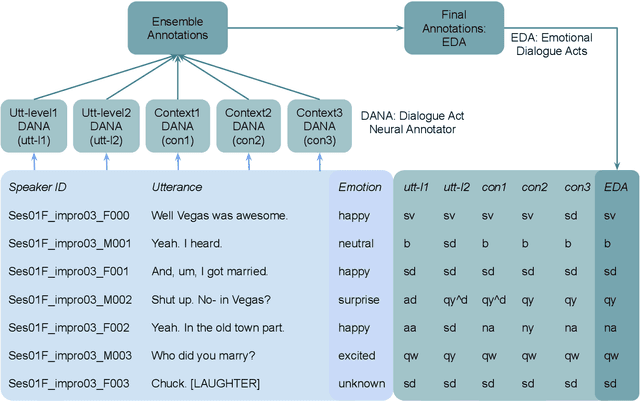

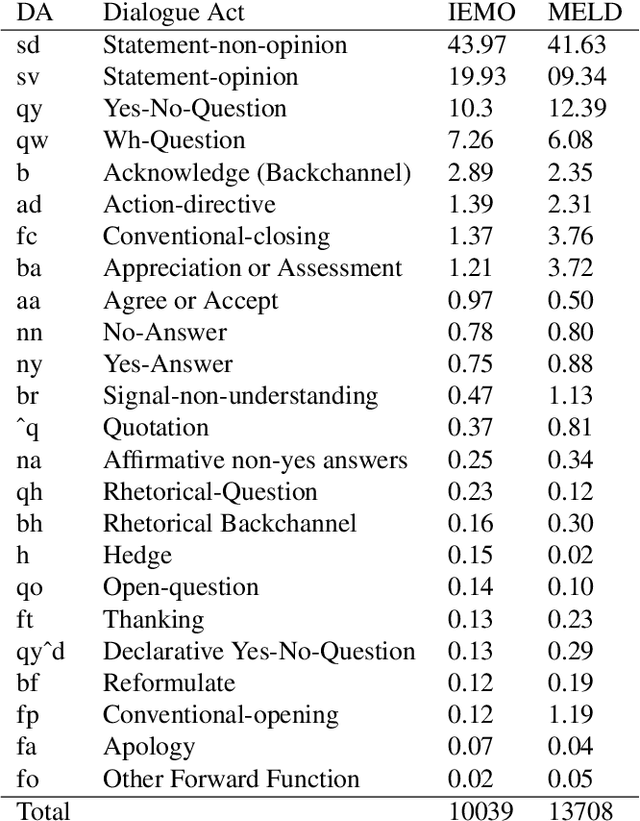

Abstract: The recognition of emotion and dialogue acts enriches conversational analysis and helps to build natural dialogue systems. Emotion helps us understand feelings, and dialogue acts reflect the intentions and performative functions in the utterances. However, most of the textual and multi-modal conversational emotion datasets contain only emotion labels but not dialogue acts. To address this problem, we propose to use a pool of various recurrent neural models trained on a dialogue act corpus, with or without context. These neural models annotate the emotion corpus with dialogue act labels, and an ensemble annotator extracts the final dialogue act label. We annotated two popular multi-modal emotion datasets: IEMOCAP and MELD. We analysed the co-occurrence of emotion and dialogue act labels and discovered specific relations. For example, Accept/Agree dialogue acts often occur with the Joy emotion, Apology with Sadness, and Thanking with Joy. We make the Emotional Dialogue Act (EDA) corpus publicly available to the research community for further study and analysis.
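The abstract does not say how the ensemble annotator combines the labels proposed by the individual models. As a minimal sketch, assuming a plain majority vote (an illustrative stand-in, not necessarily the authors' rule), the idea could look as follows. The function name `ensemble_label`, the `fallback` parameter, and the example labels are hypothetical.

```python
from collections import Counter


def ensemble_label(predictions, fallback=None):
    """Return the majority label among the annotators' predictions.

    Assumption: simple majority voting. If there are no predictions or
    the two most frequent labels are tied, return `fallback` instead.
    """
    counts = Counter(predictions).most_common()
    if not counts:
        return fallback
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return fallback  # no clear majority among the annotators
    return counts[0][0]


# Hypothetical labels proposed by three annotator models for one utterance.
print(ensemble_label(["sd", "sd", "qy"]))
```

Returning a fallback on ties keeps ambiguous utterances visible instead of silently picking one label.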

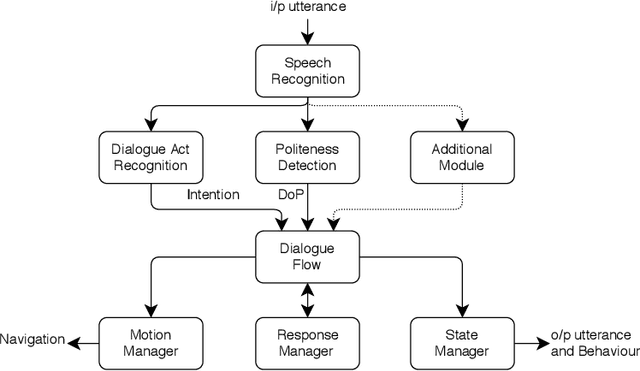

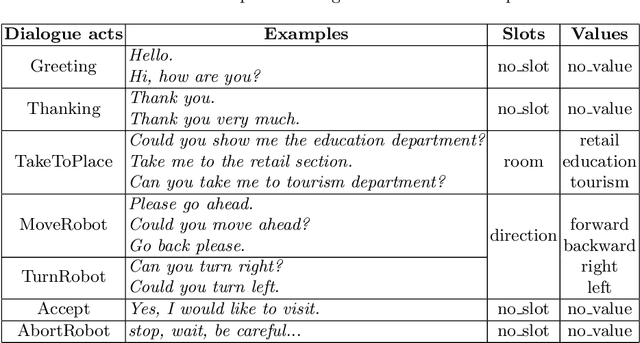

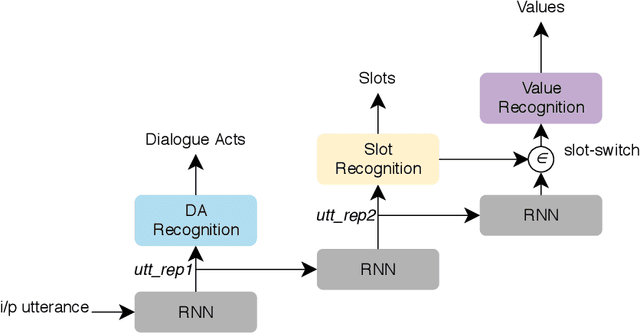

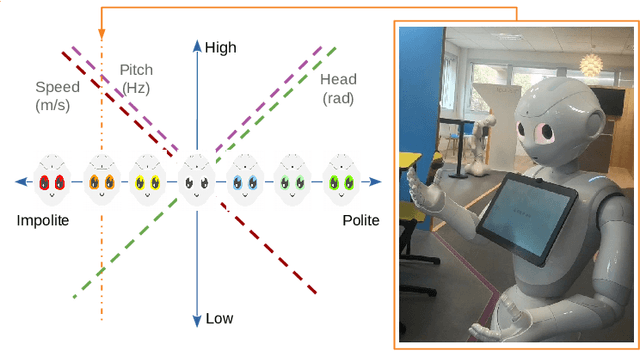

Towards Dialogue-based Navigation with Multivariate Adaptation driven by Intention and Politeness for Social Robots

Nov 14, 2018

Abstract: Service robots need to show appropriate social behaviour in order to be deployed in social environments such as healthcare, education, retail, etc. Some of the main capabilities that robots should have are navigation and conversational skills. If the person is impatient, the person might want a robot to navigate faster and vice versa. Linguistic features that indicate politeness can provide social cues about a person's patient or impatient behaviour. The novelty presented in this paper is to dynamically incorporate politeness in robotic dialogue systems for navigation. Understanding the politeness in users' speech can be used to modulate the robot's behaviour and responses. Therefore, we developed a dialogue system to navigate in an indoor environment, which produces different robot behaviours and responses based on the user's intention and degree of politeness. We deploy and test our system with the Pepper robot, which adapts to changes in the user's politeness.

Discourse-Wizard: Discovering Deep Discourse Structure in your Conversation with RNNs

Jun 29, 2018

Abstract: Spoken language understanding is one of the key factors in a dialogue system, and the context of a conversation plays an important role in understanding the current utterance. In this work, we demonstrate the importance of context within the dialogue for neural network models through a live online web demo. We developed two different neural models: a model that does not use context and a context-based model. The no-context model classifies dialogue acts at the utterance level, whereas the context-based model takes some preceding utterances into account. We make these trained neural models available as a live demo called Discourse-Wizard using a modular server architecture. The live demo provides an easy-to-use interface for conversational analysis and for discovering deep discourse structures in a conversation.

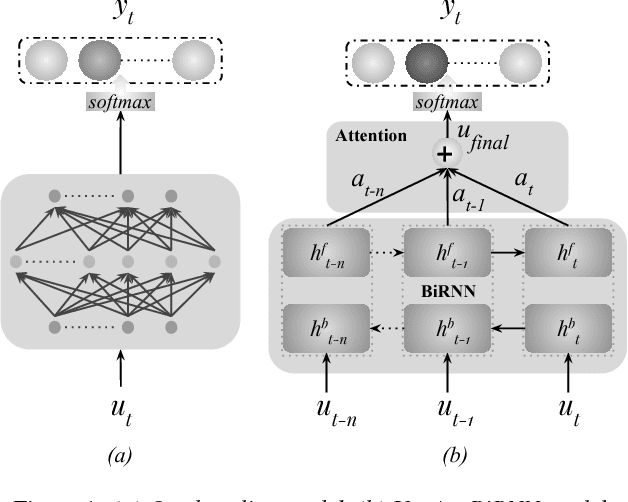

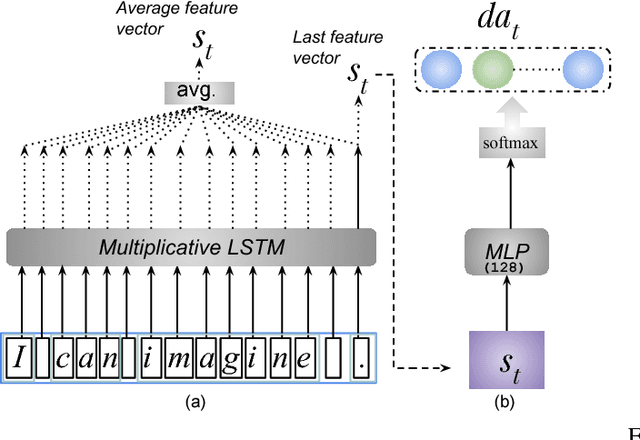

Conversational Analysis using Utterance-level Attention-based Bidirectional Recurrent Neural Networks

Jun 20, 2018

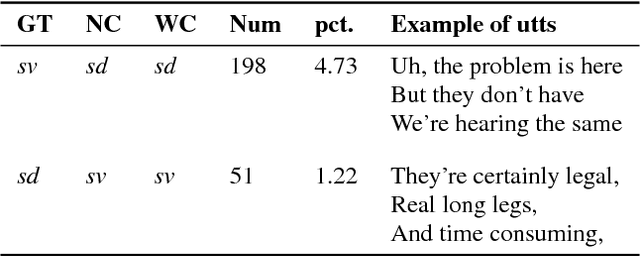

Abstract: Recent approaches for dialogue act recognition have shown that context from preceding utterances is important to classify the subsequent one. It was shown that the performance improves rapidly when the context is taken into account. We propose an utterance-level attention-based bidirectional recurrent neural network (Utt-Att-BiRNN) model to analyze the importance of preceding utterances to classify the current one. In our setup, the BiRNN is given the input set of current and preceding utterances. Our model outperforms previous models that use only preceding utterances as context on the corpus used. Another contribution of the article is to discover the amount of information in each utterance to classify the subsequent one and to show that context-based learning not only improves the performance but also achieves higher confidence in the classification. We use character- and word-level features to represent the utterances. The results are presented for character and word feature representations and as an ensemble model of both representations. We found that when classifying short utterances, the closest preceding utterances contribute to a higher degree.

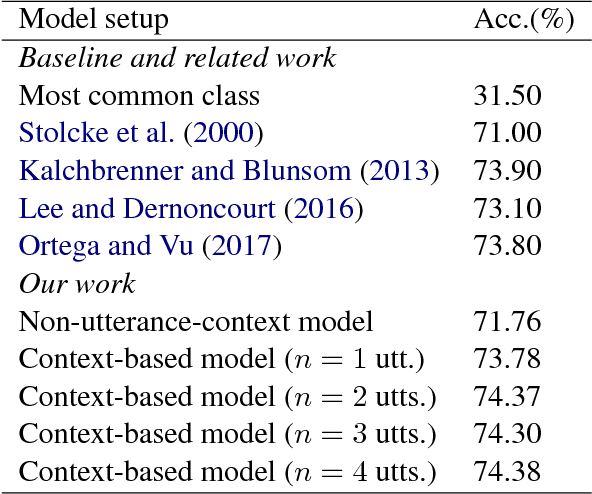

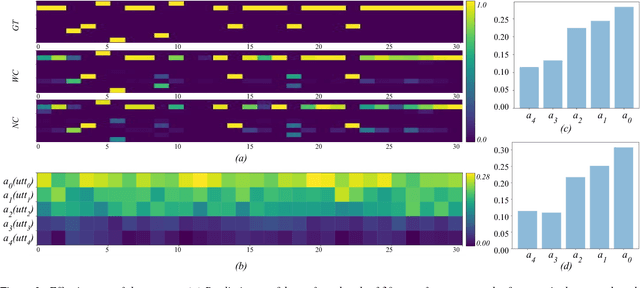

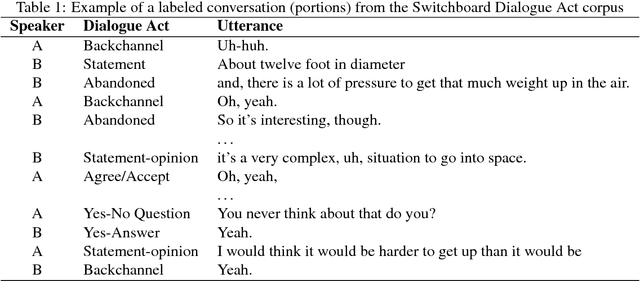

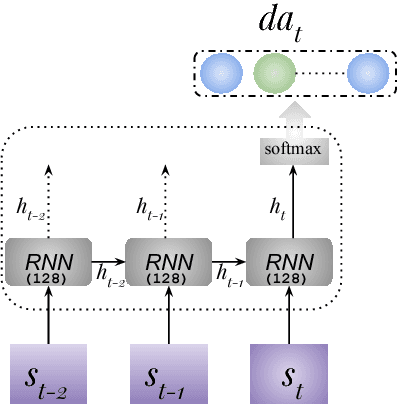

A Context-based Approach for Dialogue Act Recognition using Simple Recurrent Neural Networks

May 16, 2018

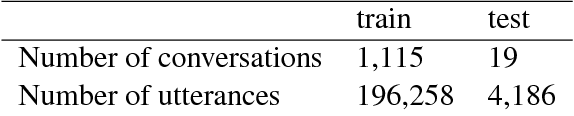

Abstract: Dialogue act recognition is an important part of natural language understanding. We investigate the way dialogue act corpora are annotated and the learning approaches used so far. We find that the dialogue act is context-sensitive within the conversation for most of the classes. Nevertheless, previous models of dialogue act classification work at the utterance level and only very few consider context. We propose a novel context-based learning method to classify dialogue acts using a character-level language model utterance representation, and we observe a significant improvement. We evaluate this method on the Switchboard Dialogue Act corpus, and our results show that the consideration of the preceding utterances as a context of the current utterance improves dialogue act detection.

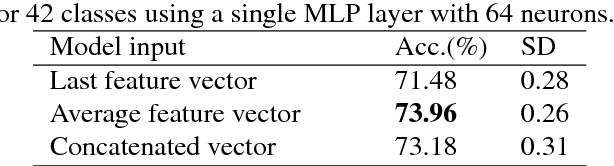

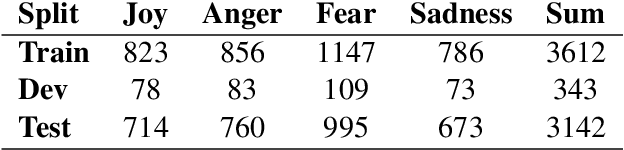

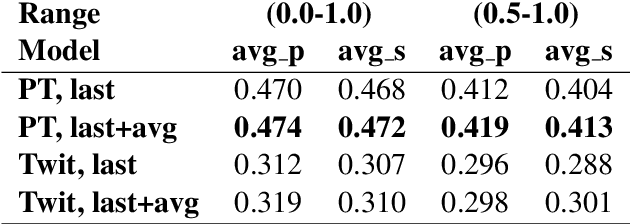

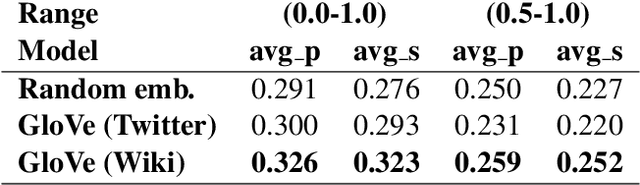

GradAscent at EmoInt-2017: Character- and Word-Level Recurrent Neural Network Models for Tweet Emotion Intensity Detection

Mar 30, 2018

Abstract: The WASSA 2017 EmoInt shared task has the goal of predicting emotion intensity values of tweet messages. Given the text of a tweet and its emotion category (anger, joy, fear, and sadness), the participants were asked to build a system that assigns emotion intensity values. Emotion intensity estimation is a challenging problem given the short length of the tweets, the noisy structure of the text and the lack of annotated data. To solve this problem, we developed an ensemble of two neural models, processing input on the character- and word-level, with a lexicon-driven system. The correlation scores across all four emotions are averaged to determine the bottom-line competition metric, and our system ranks fourth in the full intensity range and third in the 0.5-1 range of intensity among 23 systems at the time of writing (June 2017).
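The bottom-line metric described above, a correlation score averaged over the emotion categories, can be sketched as follows. This is an illustration only: the abstract does not name the correlation coefficient, so Pearson correlation is assumed here, and the function names and the toy scores are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (norm_x * norm_y)


def average_correlation(gold, pred):
    """Average the per-emotion correlations (assumed: Pearson).

    `gold` and `pred` map each emotion name to a list of intensities.
    """
    scores = [pearson(gold[emotion], pred[emotion]) for emotion in gold]
    return sum(scores) / len(scores)


# Toy intensities for two emotions; the values are made up.
gold = {"anger": [0.1, 0.5, 0.9], "joy": [0.2, 0.4, 0.6]}
pred = {"anger": [0.2, 0.4, 0.8], "joy": [0.3, 0.5, 0.4]}
print(average_correlation(gold, pred))
```

Averaging per-emotion scores gives each emotion equal weight regardless of how many tweets it has.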
