Francisco Miguel Moreno

Autonomous Driving: Framework for Pedestrian Intention Estimation in a Real World Scenario

Jun 04, 2020

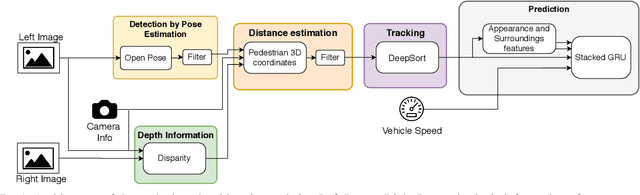

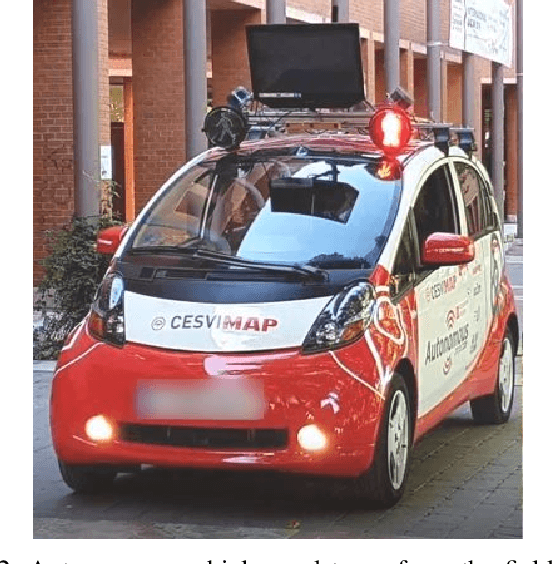

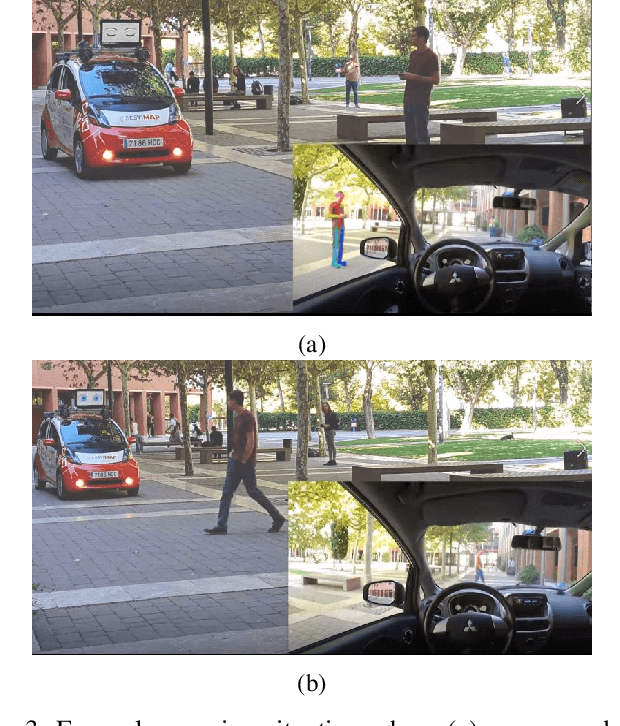

Abstract: Rapid advancements in driver-assistance technology will lead to the integration of fully autonomous vehicles on our roads that will interact with other road users. Because driverless vehicles make interaction through eye contact impossible, we describe a framework for estimating the crossing intentions of pedestrians in order to reduce the uncertainty that this lack of eye contact between road users creates. The framework was deployed in a real vehicle and tested in three experimental cases that showed a variety of communication messages to pedestrians in a shared space scenario. Results from the field tests showed the feasibility of the presented approach.

BirdNet: a 3D Object Detection Framework from LiDAR information

May 03, 2018

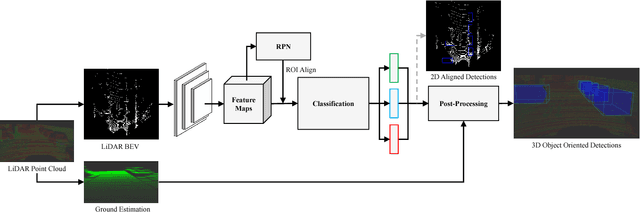

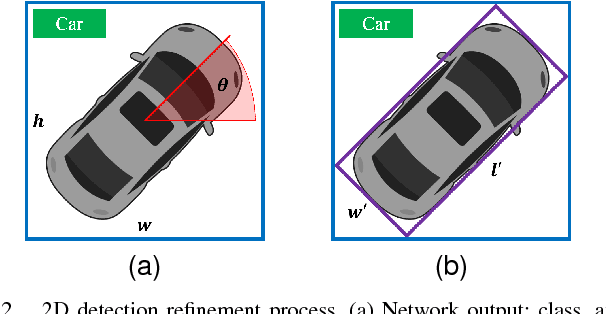

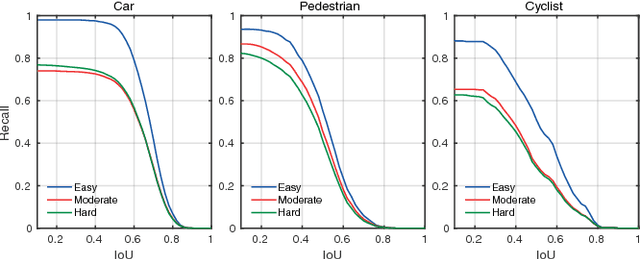

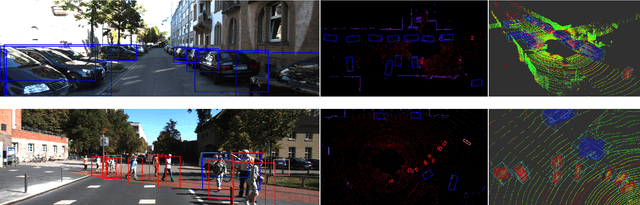

Abstract: Understanding driving situations regardless of the conditions of the traffic scene is a cornerstone on the path towards autonomous vehicles; however, although common sensor setups already include complementary devices such as LiDAR or radar, most of the research on perception systems has traditionally focused on computer vision. We present a LiDAR-based 3D object detection pipeline entailing three stages. First, laser information is projected into a novel cell encoding for bird's eye view projection. Next, both the object's location on the plane and its heading are estimated through a convolutional neural network originally designed for image processing. Finally, 3D oriented detections are computed in a post-processing phase. Experiments on the KITTI dataset show that the proposed framework achieves state-of-the-art results among comparable methods. Further tests with different LiDAR sensors in real scenarios assess the multi-device capabilities of the approach.
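
The first stage of the pipeline, projecting laser points into a bird's eye view grid, can be illustrated with a minimal sketch. The abstract does not specify the cell encoding, so everything here is an assumption made for illustration: the function name `bev_encode`, the grid extents, the cell size, and the three per-cell features (maximum height, mean intensity, point count). It is not the authors' implementation.

```python
import math


def bev_encode(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Project (x, y, z, intensity) LiDAR points onto a ground-plane grid.

    Returns a dict mapping (row, col) -> (max_height, mean_intensity, count).
    The per-cell features, ranges, and cell size are illustrative assumptions,
    not the encoding described in the paper.
    """
    acc = {}
    for x, y, z, intensity in points:
        # Discard points that fall outside the region covered by the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        # Discretize the ground-plane coordinates into cell indices.
        row = int((x - x_range[0]) // cell)
        col = int((y - y_range[0]) // cell)
        max_z, sum_i, n = acc.get((row, col), (-math.inf, 0.0, 0))
        acc[(row, col)] = (max(max_z, z), sum_i + intensity, n + 1)
    # Convert the accumulated intensity sums into per-cell means.
    return {k: (mz, si / n, n) for k, (mz, si, n) in acc.items()}


# Two points share a cell; the third lies outside the grid and is dropped.
grid = bev_encode([(1.2, 0.1, 0.5, 0.2), (1.3, 0.2, 1.5, 0.4), (100.0, 0.0, 0.0, 0.0)])
```

A grid like this can be rasterized into a multi-channel image, which is what allows a convolutional network designed for image processing to be applied to LiDAR data.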
