Arturo de la Escalera

Joint object detection and re-identification for 3D obstacle multi-camera systems

Oct 09, 2023

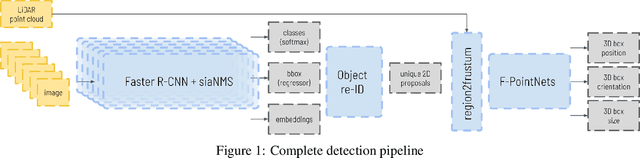

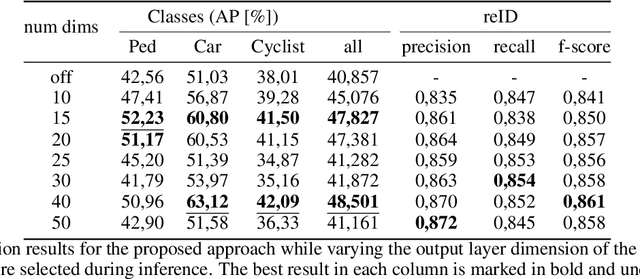

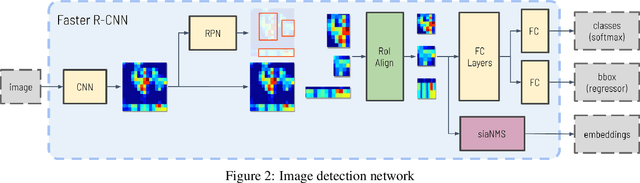

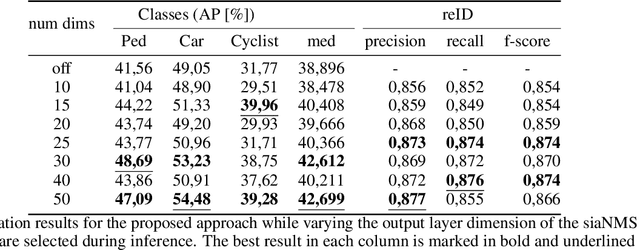

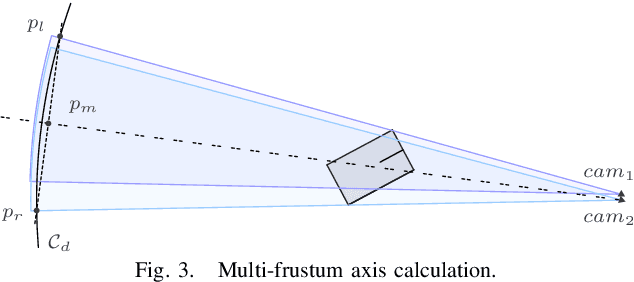

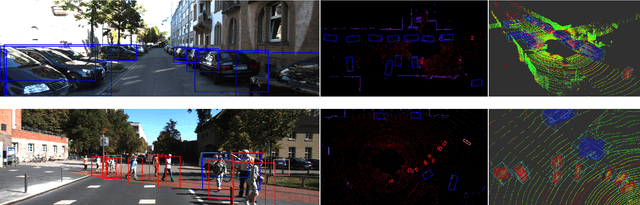

Abstract: In recent years, the field of autonomous driving has witnessed remarkable advancements, driven by the integration of a multitude of sensors, including cameras and LiDAR systems, in different prototypes. However, with the proliferation of sensor data comes the pressing need for more sophisticated information processing techniques. This research paper introduces a novel modification to an object detection network that uses camera and LiDAR information, incorporating an additional branch designed for the task of re-identifying objects across adjacent cameras within the same vehicle while improving the quality of the baseline 3D object detection outcomes. The proposed methodology employs a two-step detection pipeline: first, an object detection network is applied; then, a 3D box estimator operates on the filtered point cloud generated from the network's detections. Extensive experimental evaluations in both the 2D and 3D domains validate the effectiveness of the proposed approach, and the results show that this method outperforms traditional Non-Maximum Suppression (NMS) techniques, with an improvement of more than 5% in the car category in the overlapping areas.

Automated Defect Recognition of Castings defects using Neural Networks

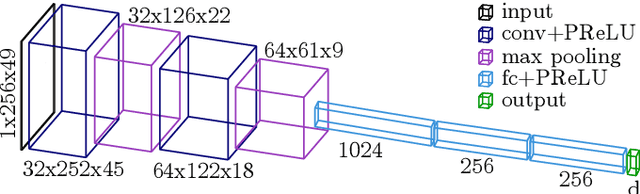

Sep 06, 2022

Abstract: Industrial X-ray analysis is common in the aerospace, automotive and nuclear industries, where the structural integrity of some parts needs to be guaranteed. However, the interpretation of radiographic images is sometimes difficult and may lead two experts to disagree on defect classification. The Automated Defect Recognition (ADR) system presented herein will reduce the analysis time and will also help reduce the subjective interpretation of the defects while increasing the reliability of the human inspector. Our Convolutional Neural Network (CNN) model achieves 94.2% accuracy (mAP@IoU=50%), which is considered similar to expected human performance, when applied to an automotive aluminium castings dataset (GDXray), exceeding the current state of the art for this dataset. In an industrial environment, its inference time is less than 400 ms per DICOM image, so it can be installed in production facilities with no impact on delivery time. In addition, an ablation study of the main hyper-parameters was conducted to optimise model accuracy from the initial baseline result of 75% mAP up to 94.2% mAP.

* This preprint has not undergone peer review (when applicable) or any post-submission improvements or corrections. The Version of Record of this article is published in Journal of Nondestructive Evaluation, and is available online at https://doi.org/10.1007/s10921-021-00842-1

Self-awareness in intelligent vehicles: Feature based dynamic Bayesian models for abnormality detection

Oct 29, 2020

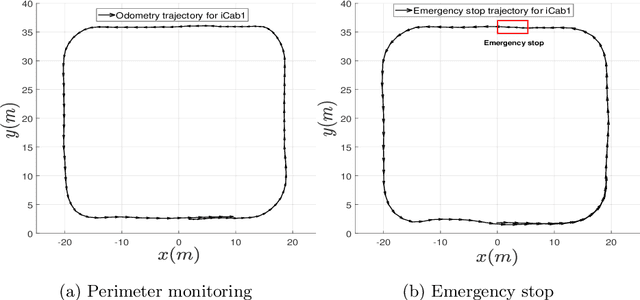

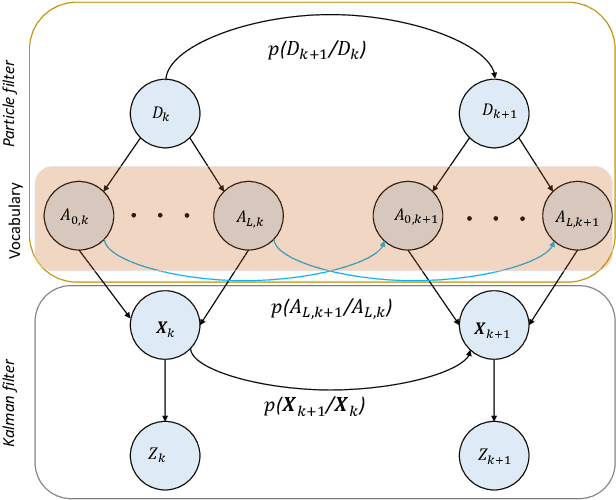

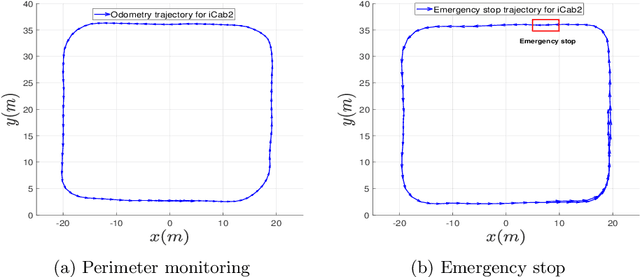

Abstract: The evolution of Intelligent Transportation Systems in recent times necessitates the development of self-awareness in agents. Before the intensive use of Machine Learning, the detection of abnormalities was manually programmed by checking every variable and creating huge nested conditions that are very difficult to track. This paper aims to introduce a novel method to develop self-awareness in autonomous vehicles that mainly focuses on detecting abnormal situations around the considered agents. Multi-sensory time-series data from the vehicles are used to develop the data-driven Dynamic Bayesian Network (DBN) models used for future state prediction and the detection of dynamic abnormalities. Moreover, an initial-level collective awareness model that can perform joint anomaly detection in co-operative tasks is proposed. The GNG algorithm learns the DBN models' discrete node variables; probabilistic transition links connect the node variables. A Markov Jump Particle Filter (MJPF) is applied to predict future states and detect when the vehicle is potentially misbehaving, using learned DBNs as filter parameters. In this paper, datasets from real experiments of autonomous vehicles performing various tasks are used to learn and test a set of switching DBN models.

Self-awareness in Intelligent Vehicles: Experience Based Abnormality Detection

Oct 28, 2020

Abstract: The evolution of Intelligent Transportation Systems in recent times necessitates the development of self-awareness in self-driving agents. This paper aims to introduce a novel method to detect abnormalities based on internal cross-correlation parameters of the vehicle. Before the implementation of Machine Learning, the detection of abnormalities was manually programmed by checking every variable and creating huge nested conditions that are very difficult to track. Nowadays, it is possible to train a Dynamic Bayesian Network (DBN) model to automatically evaluate and detect when the vehicle is potentially misbehaving. In this paper, different scenarios have been set up in order to train and test a switching DBN for a Perimeter Monitoring Task, using semantic segmentation for the DBN model and the Hellinger distance metric for abnormality measurements.
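For readers unfamiliar with the Hellinger distance mentioned above, the following is a minimal sketch of its standard definition for two discrete probability distributions. It is not taken from the paper: the function name `hellinger_distance` and the list-based inputs are assumptions made for illustration, and the paper may apply the metric to continuous or filtered distributions instead.

```python
import math


def hellinger_distance(p, q):
    """Hellinger distance between two discrete distributions.

    p and q are sequences of non-negative probabilities of equal length,
    each assumed to sum to 1. The result lies in [0, 1]: 0 for identical
    distributions, 1 for distributions with disjoint support.
    (Hypothetical helper, not the paper's implementation.)
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    # Sum of squared differences between the square roots of the probabilities.
    squared_sum = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared_sum) / math.sqrt(2)


if __name__ == "__main__":
    predicted = [0.7, 0.2, 0.1]   # e.g. a predicted state distribution
    observed = [0.1, 0.2, 0.7]    # e.g. an observed state distribution
    print(hellinger_distance(predicted, observed))
```

A value close to 0 would indicate that prediction and observation agree, while a value approaching 1 would flag a potential abnormality under this kind of measure.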

siaNMS: Non-Maximum Suppression with Siamese Networks for Multi-Camera 3D Object Detection

Feb 19, 2020

Abstract: The rapid development of embedded hardware in autonomous vehicles broadens their computational capabilities, making it possible to mount more complete sensor setups able to handle driving scenarios of higher complexity. As a result, new challenges such as multiple detections of the same object have to be addressed. In this work, a siamese network is integrated into the pipeline of a well-known 3D object detection approach to suppress duplicate proposals coming from different cameras via re-identification. Additionally, associations are exploited to enhance the 3D box regression of the object by aggregating their corresponding LiDAR frustums. The experimental evaluation on the nuScenes dataset shows that the proposed method outperforms traditional NMS approaches.
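As context for the comparison against traditional NMS, the sketch below shows the classic greedy, IoU-based Non-Maximum Suppression on 2D axis-aligned boxes. This is the generic baseline idea only, not the siamese re-identification method of the paper; the function names `iou` and `greedy_nms`, the `(x1, y1, x2, y2)` box format, and the 0.5 threshold are assumptions chosen for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept by greedy NMS, highest score first.

    A box is dropped when its IoU with an already kept, higher-scoring
    box exceeds iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for idx in order:
        if all(iou(boxes[idx], boxes[k]) <= iou_threshold for k in kept):
            kept.append(idx)
    return kept


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))
```

Because this baseline relies purely on geometric overlap, it cannot tell whether two overlapping detections from different cameras are the same object, which is the gap that an appearance-based association is meant to address.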

BirdNet: a 3D Object Detection Framework from LiDAR information

May 03, 2018

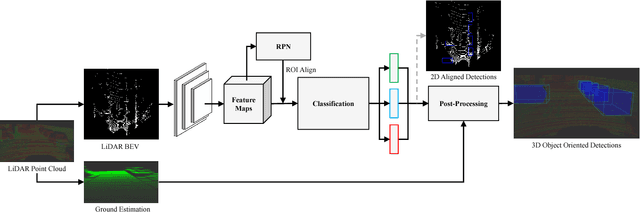

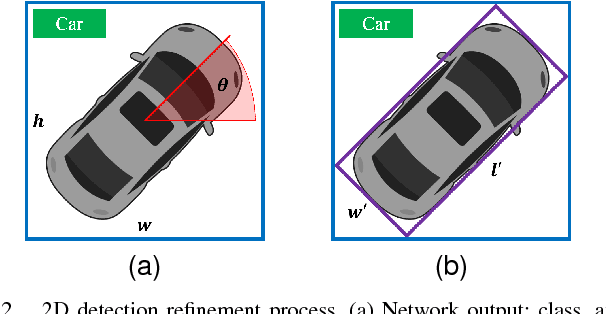

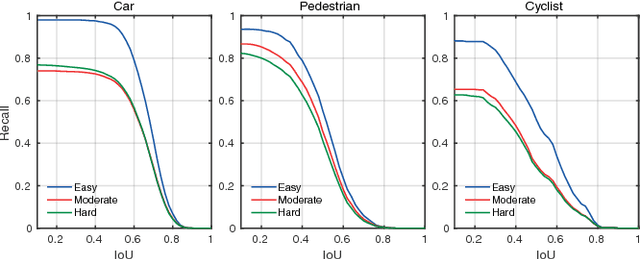

Abstract: Understanding driving situations regardless of the conditions of the traffic scene is a cornerstone on the path towards autonomous vehicles; however, although common sensor setups already include complementary devices such as LiDAR or radar, most of the research on perception systems has traditionally focused on computer vision. We present a LiDAR-based 3D object detection pipeline entailing three stages. First, laser information is projected into a novel cell encoding for bird's eye view projection. Then, both the object location on the plane and its heading are estimated through a convolutional neural network originally designed for image processing. Finally, 3D oriented detections are computed in a post-processing phase. Experiments on the KITTI dataset show that the proposed framework achieves state-of-the-art results among comparable methods. Further tests with different LiDAR sensors in real scenarios assess the multi-device capabilities of the approach.
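To make the first stage more concrete, here is a minimal sketch of a generic bird's eye view projection: 3D points are binned into a 2D ground-plane grid, and each cell stores the maximum point height. This is only an illustration of the general idea; it does not reproduce the novel cell encoding proposed in the paper, and the function name `bev_max_height_grid`, the axis ranges, and the cell size are all assumptions.

```python
def bev_max_height_grid(points, x_range, y_range, cell_size):
    """Project (x, y, z) points onto a 2D grid storing max height per cell.

    points: iterable of (x, y, z) tuples in metres.
    x_range, y_range: (min, max) extents of the grid; max is exclusive.
    cell_size: side length of a square cell in metres.
    Cells that receive no points are left as None.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    n_rows = int(round((x_max - x_min) / cell_size))
    n_cols = int(round((y_max - y_min) / cell_size))
    grid = [[None] * n_cols for _ in range(n_rows)]
    for x, y, z in points:
        # Discard points that fall outside the region of interest.
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue
        # Clamp indices to guard against floating-point edge cases.
        row = min(int((x - x_min) / cell_size), n_rows - 1)
        col = min(int((y - y_min) / cell_size), n_cols - 1)
        if grid[row][col] is None or z > grid[row][col]:
            grid[row][col] = z
    return grid


if __name__ == "__main__":
    cloud = [(0.5, 0.5, 1.0), (0.6, 0.4, 2.0), (1.5, 0.5, 0.3), (5.0, 5.0, 1.0)]
    for row in bev_max_height_grid(cloud, (0.0, 2.0), (0.0, 2.0), 1.0):
        print(row)
```

A grid of this kind can be treated as an image, which is what allows a convolutional network designed for image processing to be reused on LiDAR data.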
