David Martin

Self-awareness in intelligent vehicles: Feature based dynamic Bayesian models for abnormality detection

Oct 29, 2020

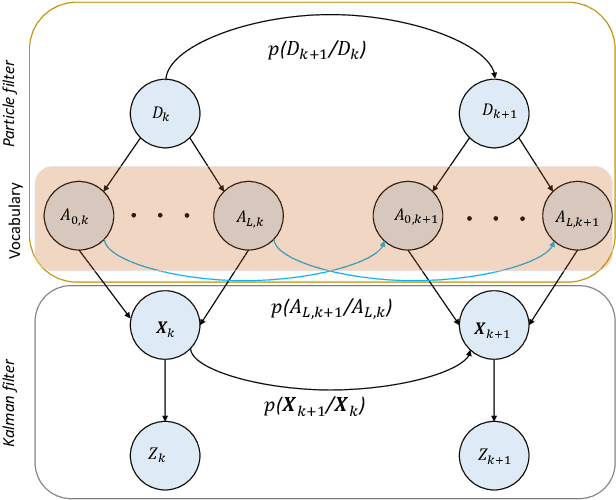

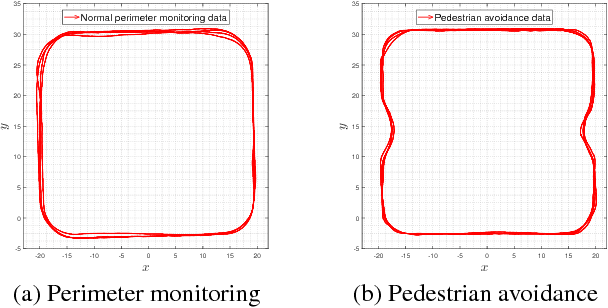

Abstract: The evolution of Intelligent Transportation Systems in recent times necessitates the development of self-awareness in agents. Before the intensive use of Machine Learning, the detection of abnormalities was manually programmed by checking every variable and creating huge nested conditions that are very difficult to track. This paper introduces a novel method for developing self-awareness in autonomous vehicles that focuses mainly on detecting abnormal situations around the considered agents. Multi-sensory time-series data from the vehicles are used to develop data-driven Dynamic Bayesian Network (DBN) models for future state prediction and the detection of dynamic abnormalities. Moreover, an initial-level collective awareness model that can perform joint anomaly detection in co-operative tasks is proposed. The Growing Neural Gas (GNG) algorithm learns the DBN models' discrete node variables; probabilistic transition links connect the node variables. A Markov Jump Particle Filter (MJPF), with the learned DBNs as filter parameters, is applied to predict future states and detect when the vehicle is potentially misbehaving. In this paper, datasets from real experiments of autonomous vehicles performing various tasks are used to learn and test a set of switching DBN models.
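The "probabilistic transition links" between discrete node variables can be illustrated with a minimal sketch. It assumes the clustering step has already mapped each time step to an integer node label; the function names `estimate_transitions` and `transition_surprise` are hypothetical and do not come from the paper, and the paper's MJPF is considerably richer than this count-based estimate.

```python
import math

def estimate_transitions(labels, n_states):
    """Row-normalized transition frequencies between consecutive node labels.

    `labels` is an assumed sequence of integer node indices in [0, n_states).
    """
    counts = [[0] * n_states for _ in range(n_states)]
    for prev, nxt in zip(labels, labels[1:]):
        counts[prev][nxt] += 1

    matrix = []
    for row in counts:
        total = sum(row)
        if total:
            matrix.append([c / total for c in row])
        else:
            # Nodes never left during training get a uniform row,
            # so every row is still a valid probability distribution.
            matrix.append([1.0 / n_states] * n_states)
    return matrix

def transition_surprise(matrix, prev, nxt, eps=1e-9):
    """Negative log-probability of an observed transition.

    Larger values mean the transition was rare or unseen in training.
    """
    return -math.log(max(matrix[prev][nxt], eps))

# Toy training sequence of node labels (illustrative data only).
train = [0, 0, 1, 1, 2, 0, 0, 1]
T = estimate_transitions(train, n_states=3)
seen = transition_surprise(T, 0, 1)    # transition observed in training
unseen = transition_surprise(T, 0, 2)  # transition never observed
```

In this toy setting an unseen transition scores far higher than a seen one, which is the kind of signal a discrete-level abnormality indicator could threshold.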

Self-awareness in Intelligent Vehicles: Experience Based Abnormality Detection

Oct 28, 2020

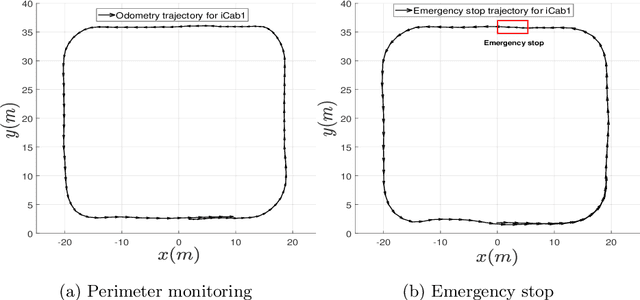

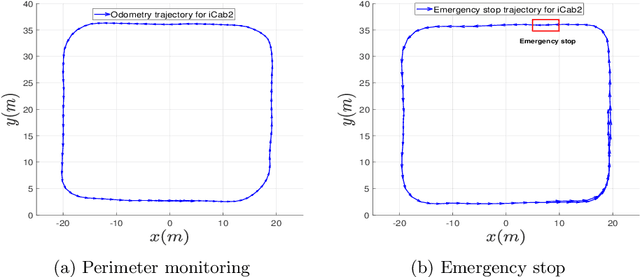

Abstract: The evolution of Intelligent Transportation Systems in recent times necessitates the development of self-awareness in self-driving agents. This paper introduces a novel method to detect abnormalities based on internal cross-correlation parameters of the vehicle. Before the adoption of Machine Learning, the detection of abnormalities was manually programmed by checking every variable and creating huge nested conditions that are very difficult to track. Nowadays, it is possible to train a Dynamic Bayesian Network (DBN) model to automatically evaluate and detect when the vehicle is potentially misbehaving. In this paper, different scenarios are set up to train and test a switching DBN for a Perimeter Monitoring Task, using semantic segmentation for the DBN model and the Hellinger distance metric for abnormality measurements.
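For reference, the Hellinger distance between two discrete probability distributions $p$ and $q$ is $H(p, q) = \frac{1}{\sqrt{2}} \sqrt{\sum_i (\sqrt{p_i} - \sqrt{q_i})^2}$, which lies in $[0, 1]$. The sketch below is a generic implementation of that formula; the abstract does not state which distributions are compared, so treating the inputs as a predicted and an observed distribution over discrete states is an assumption made here for illustration.

```python
import math

def hellinger(p, q):
    """Hellinger distance between two discrete distributions of equal length.

    Returns 0.0 for identical distributions and 1.0 for distributions
    with disjoint support. Inputs are assumed to be non-negative and
    to sum to 1; this is not checked.
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    squared = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared / 2.0)

# Illustrative values only: a prediction compared against two observations.
predicted = [0.7, 0.2, 0.1]
close_obs = [0.6, 0.3, 0.1]
far_obs = [0.0, 0.1, 0.9]

d_close = hellinger(predicted, close_obs)
d_far = hellinger(predicted, far_obs)
```

A larger distance between prediction and observation would indicate a stronger abnormality signal; any threshold on it is application-specific.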

Wikidata on MARS

Aug 14, 2020

Abstract: Multi-attributed relational structures (MARSs) have been proposed as a formal data model for generalized property graphs, along with multi-attributed rule-based predicate logic (MARPL) as a useful rule-based logic in which to write inference rules over property graphs. Wikidata can be modelled in an extended MARS that adds the (imprecise) datatypes of Wikidata. The rules of inference for the Wikidata ontology can be modelled as a MARPL ontology, with extensions to handle the Wikidata datatypes and functions over these datatypes. Because many Wikidata qualifiers should participate in most inference rules in Wikidata, a method of implicitly handling qualifier values on a per-qualifier basis is needed to make this modelling useful. The meaning of Wikidata is then the extended MARS that is the closure of running these rules on the Wikidata data model. Wikidata constraints can be modelled as multi-attributed predicate logic (MAPL) formulae, again extended with datatypes, that are evaluated over this extended MARS. The result models Wikidata in a way that fixes several of its major problems.

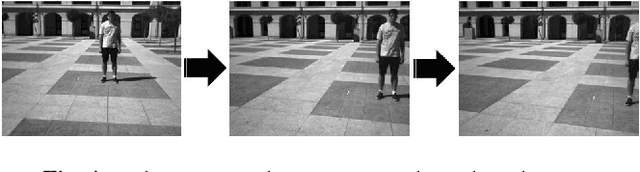

Anomaly Detection in Video Data Based on Probabilistic Latent Space Models

Mar 17, 2020

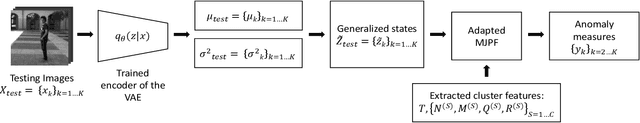

Abstract: This paper proposes a method for detecting anomalies in video data. A Variational Autoencoder (VAE) is used to reduce the dimensionality of video frames, generating latent space information that is comparable to low-dimensional sensory data (e.g., positioning, steering angle) and making it feasible to develop a consistent multi-modal architecture for autonomous vehicles. An Adapted Markov Jump Particle Filter defined by discrete and continuous inference levels is employed to predict the following frames and detect anomalies in new video sequences. Our method is evaluated on different video scenarios where a semi-autonomous vehicle performs a set of tasks in a closed environment.

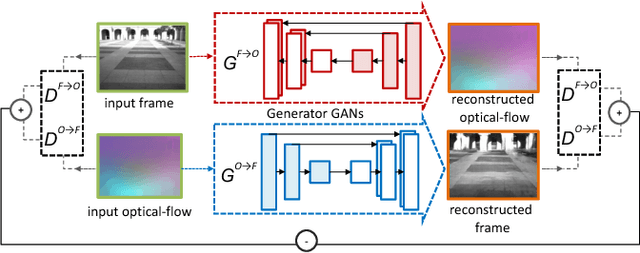

Hierarchy of GANs for learning embodied self-awareness model

Jun 08, 2018

Abstract: In recent years, several architectures have been proposed to learn complex self-awareness models for embodied agents. In this paper, dynamic incremental self-awareness (SA) models are proposed that allow an agent's experiences to be modeled in a hierarchical fashion, starting from simpler situations and moving to more structured ones. Each situation is learned from subsets of private agent perception data as a model capable of predicting normal behaviors and detecting abnormalities. Hierarchical SA models have already been proposed using low-dimensional sensory inputs. In this work, a hierarchical model is introduced by means of cross-modal Generative Adversarial Networks (GANs) processing high-dimensional visual data. Different levels of the GANs are detected in a self-supervised manner using the GAN discriminators' decision boundaries. Real experiments on semi-autonomous ground vehicles are presented.

Learning Multi-Modal Self-Awareness Models for Autonomous Vehicles from Human Driving

Jun 07, 2018

Abstract: This paper presents a novel approach for learning self-awareness models for autonomous vehicles. The proposed technique is based on the availability of synchronized multi-sensor dynamic data related to different maneuvering tasks performed by a human operator. It is shown that different machine learning approaches can be used to first learn single-modality models using coupled Dynamic Bayesian Networks; such models are then correlated at the event level to discover contextual multi-modal concepts. In the presented case, visual perception and localization are used as modalities. Cross-correlations among modalities in time are discovered from data and are described as probabilistic links connecting shared and private multi-modal DBNs at the event (discrete) level. Results are presented on experiments performed on an autonomous vehicle, highlighting the potential of the proposed approach to allow anomaly detection and autonomous decision making based on learned self-awareness models.

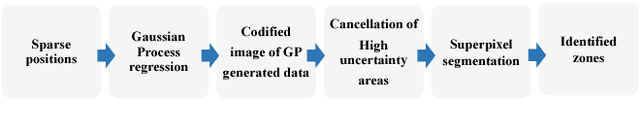

A Multi-perspective Approach To Anomaly Detection For Self-aware Embodied Agents

Mar 17, 2018

Abstract: This paper focuses on multi-sensor anomaly detection for moving cognitive agents using both external and private first-person visual observations. Both observation types are used to characterize agents' motion in a given environment. The proposed method generates locally uniform motion models by dividing a Gaussian process that approximates agents' displacements in the scene and provides a Shared Level (SL) self-awareness based on Environment Centered (EC) models. Such models are then used to train, in a semi-unsupervised way, a set of Generative Adversarial Networks (GANs) that produce an estimate of the external and internal parameters of moving agents. The results obtained demonstrate the feasibility of using multi-perspective data for predicting and analyzing trajectory information.
