Irene Cortés

Joint object detection and re-identification for 3D obstacle multi-camera systems

Oct 09, 2023

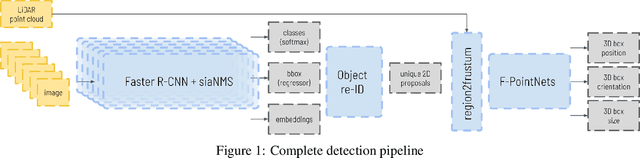

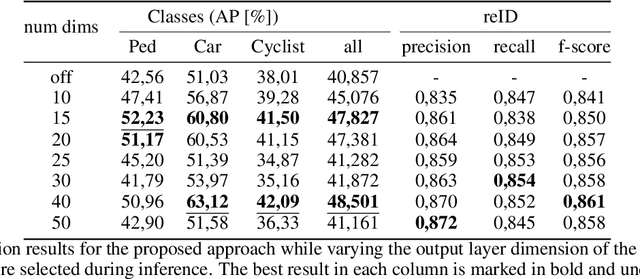

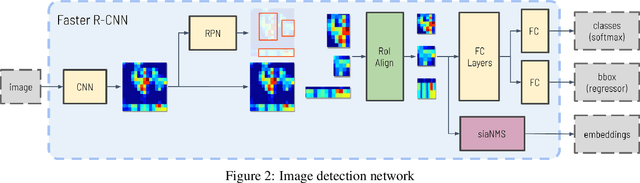

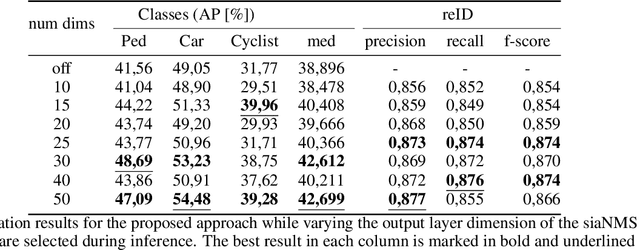

Abstract:In recent years, the field of autonomous driving has witnessed remarkable advancements, driven by the integration of a multitude of sensors, including cameras and LiDAR systems, in different prototypes. However, with the proliferation of sensor data comes the pressing need for more sophisticated information processing techniques. This research paper introduces a novel modification to an object detection network that uses camera and lidar information, incorporating an additional branch designed for the task of re-identifying objects across adjacent cameras within the same vehicle while elevating the quality of the baseline 3D object detection outcomes. The proposed methodology employs a two-step detection pipeline: initially, an object detection network is employed, followed by a 3D box estimator that operates on the filtered point cloud generated from the network's detections. Extensive experimental evaluations encompassing both 2D and 3D domains validate the effectiveness of the proposed approach and the results underscore the superiority of this method over traditional Non-Maximum Suppression (NMS) techniques, with an improvement of more than 5\% in the car category in the overlapping areas.

Towards Autonomous Driving: a Multi-Modal 360$^{\circ}$ Perception Proposal

Aug 21, 2020

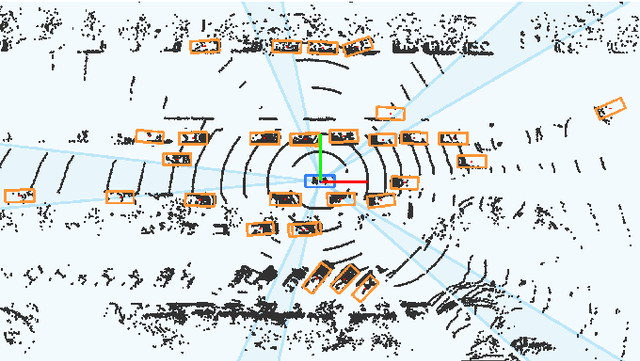

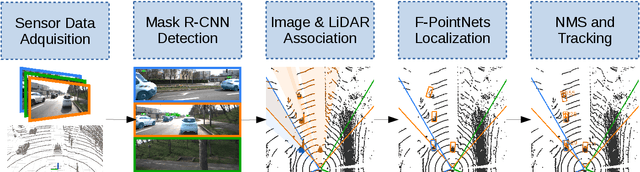

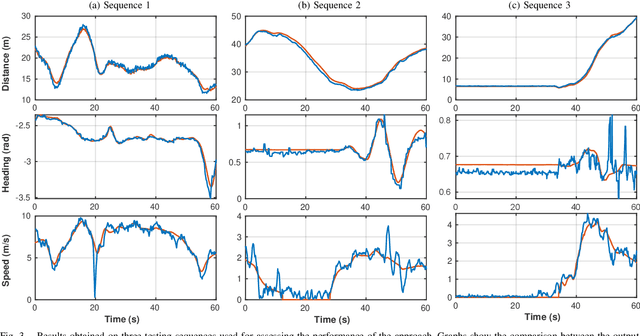

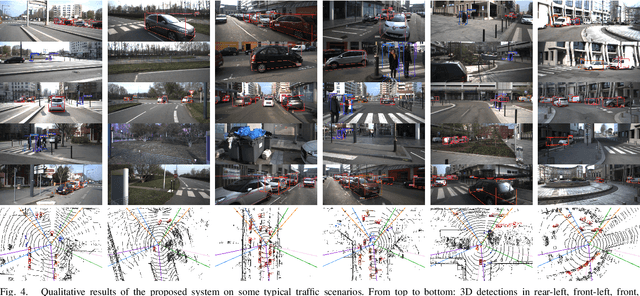

Abstract:In this paper, a multi-modal 360$^{\circ}$ framework for 3D object detection and tracking for autonomous vehicles is presented. The process is divided into four main stages. First, images are fed into a CNN network to obtain instance segmentation of the surrounding road participants. Second, LiDAR-to-image association is performed for the estimated mask proposals. Then, the isolated points of every object are processed by a PointNet ensemble to compute their corresponding 3D bounding boxes and poses. Lastly, a tracking stage based on Unscented Kalman Filter is used to track the agents along time. The solution, based on a novel sensor fusion configuration, provides accurate and reliable road environment detection. A wide variety of tests of the system, deployed in an autonomous vehicle, have successfully assessed the suitability of the proposed perception stack in a real autonomous driving application.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge