Alejandro Barrera

Cycle and Semantic Consistent Adversarial Domain Adaptation for Reducing Simulation-to-Real Domain Shift in LiDAR Bird's Eye View

Apr 22, 2021

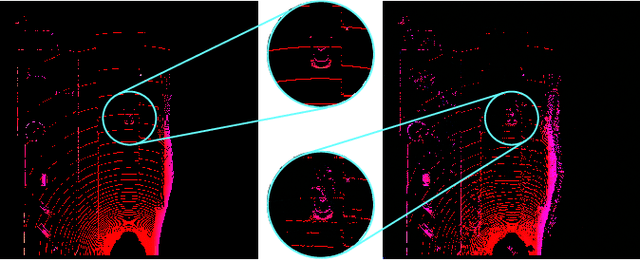

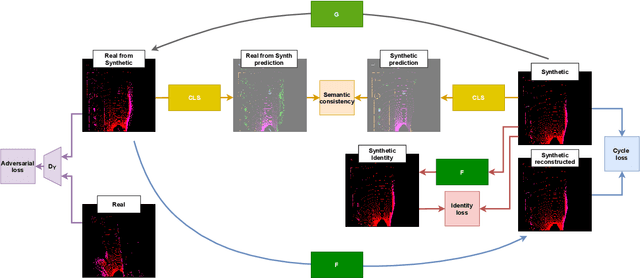

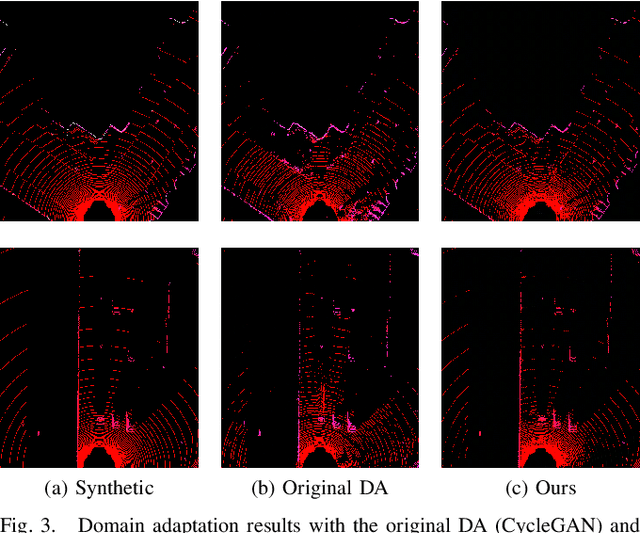

Abstract: The performance of object detection methods based on LiDAR information is heavily impacted by the availability of training data, which is usually limited to certain laser devices. As a result, the use of synthetic data is becoming popular when training neural network models, as both sensor specifications and driving scenarios can be generated ad hoc. However, bridging the gap between virtual and real environments is still an open challenge, as current simulators cannot completely mimic real LiDAR operation. To tackle this issue, domain adaptation strategies are usually applied; they obtain remarkable results on vehicle detection when applied to range view (RV) and bird's eye view (BEV) projections but fail for smaller road agents. In this paper, we present a BEV domain adaptation method based on CycleGAN that uses prior semantic classification to preserve the information of small objects of interest during the domain adaptation process. The quality of the generated BEVs has been evaluated using a state-of-the-art 3D object detection framework on the KITTI 3D Object Detection Benchmark. The obtained results show the advantages of the proposed method over the existing alternatives.
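
For context, the standard cycle-consistency term from the original CycleGAN formulation can be written as below. The symbols are assumptions introduced here for illustration: $G$ maps synthetic BEVs to the real domain, $F$ maps real BEVs back to the synthetic domain, and $x$, $y$ are samples from the synthetic and real distributions. The abstract does not give the semantic-consistency term used in this paper, so it is not reproduced.

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{syn}}}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\text{real}}}\left[\lVert G(F(y)) - y \rVert_1\right]
$$

Minimizing this term encourages a translated BEV to map back to its source, which constrains the generators but does not by itself guarantee that small objects survive the translation.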

Towards Autonomous Driving: a Multi-Modal 360$^{\circ}$ Perception Proposal

Aug 21, 2020

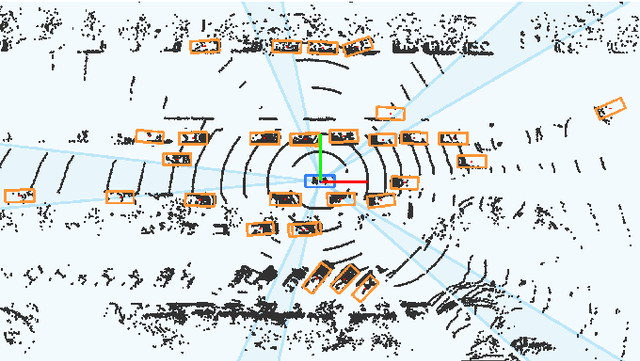

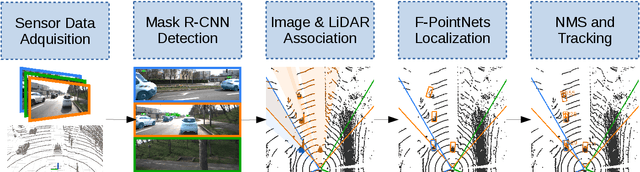

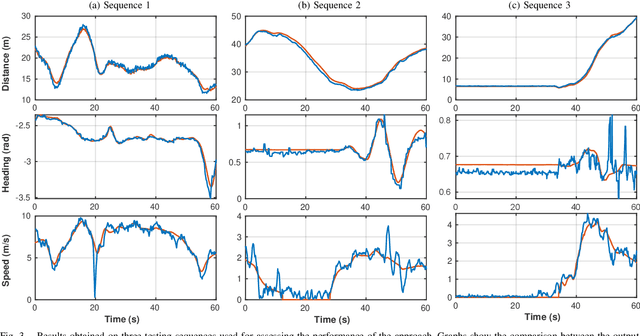

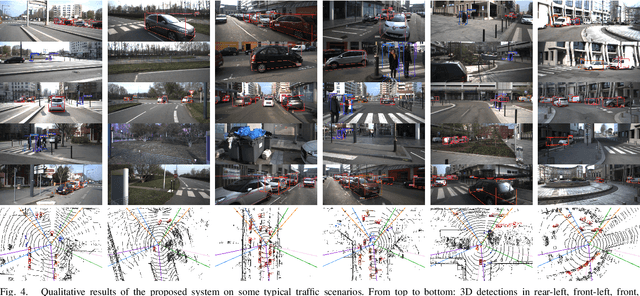

Abstract: In this paper, a multi-modal 360$^{\circ}$ framework for 3D object detection and tracking for autonomous vehicles is presented. The process is divided into four main stages. First, images are fed into a CNN to obtain instance segmentation of the surrounding road participants. Second, LiDAR-to-image association is performed for the estimated mask proposals. Then, the isolated points of every object are processed by a PointNet ensemble to compute their corresponding 3D bounding boxes and poses. Lastly, a tracking stage based on an Unscented Kalman Filter is used to track the agents over time. The solution, based on a novel sensor fusion configuration, provides accurate and reliable road environment detection. A wide variety of tests of the system, deployed in an autonomous vehicle, have successfully assessed the suitability of the proposed perception stack in a real autonomous driving application.

BirdNet+: End-to-End 3D Object Detection in LiDAR Bird's Eye View

Mar 09, 2020

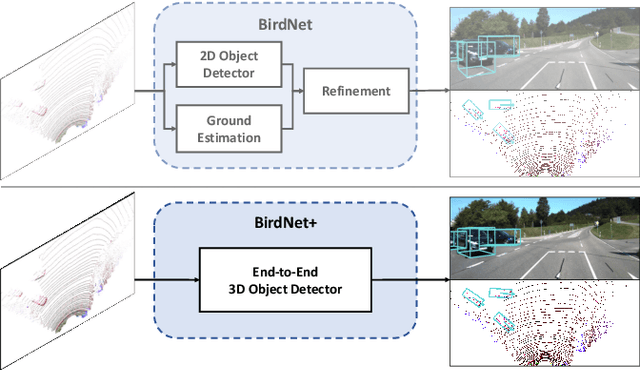

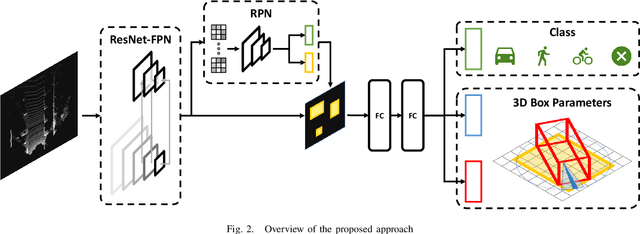

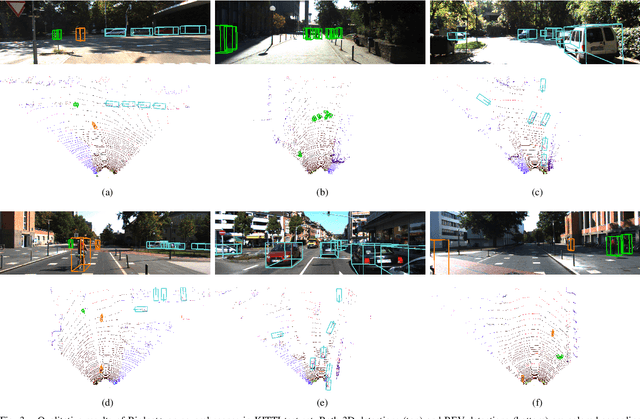

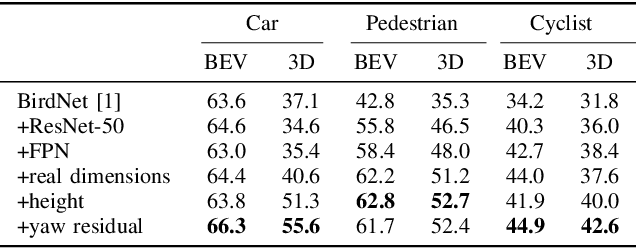

Abstract: On-board 3D object detection in autonomous vehicles often relies on geometry information captured by LiDAR devices. Although image features are typically preferred for detection, numerous approaches take only spatial data as input. Exploiting this information in inference usually involves the use of compact representations such as the Bird's Eye View (BEV) projection, which entails a loss of information and thus hinders the joint inference of all the parameters of the objects' 3D boxes. In this paper, we present a fully end-to-end 3D object detection framework that can infer oriented 3D boxes solely from BEV images by using a two-stage object detector and ad hoc regression branches, eliminating the need for a post-processing stage. The method outperforms its predecessor (BirdNet) by a large margin and obtains state-of-the-art results on the KITTI 3D Object Detection Benchmark for all the categories in evaluation.
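
To make the BEV projection mentioned above concrete, here is a minimal sketch of one common way to rasterize LiDAR points into a top-down grid. It is not the encoding used by BirdNet+, which the abstract does not specify; the function name `bev_height_map`, the ranges, and the cell size are all hypothetical choices for illustration.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, sensor frame (assumed)


def bev_height_map(
    points: Sequence[Point],
    x_range: Tuple[float, float] = (0.0, 4.0),   # forward extent (assumed)
    y_range: Tuple[float, float] = (-2.0, 2.0),  # lateral extent (assumed)
    cell: float = 1.0,                           # cell size in metres (assumed)
) -> List[List[Optional[float]]]:
    """Rasterize points into a top-down grid keeping the max height per cell."""
    rows = int((x_range[1] - x_range[0]) / cell)
    cols = int((y_range[1] - y_range[0]) / cell)
    grid: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]

    for x, y, z in points:
        # Discard points that fall outside the region covered by the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        # Clamp indices to guard against floating-point rounding at the edge.
        r = min(int((x - x_range[0]) / cell), rows - 1)
        c = min(int((y - y_range[0]) / cell), cols - 1)
        # All points in a cell collapse to a single value: the tallest one.
        if grid[r][c] is None or z > grid[r][c]:
            grid[r][c] = z
    return grid


if __name__ == "__main__":
    cloud = [(0.5, -1.5, 0.2), (0.7, -1.2, 1.4), (3.9, 1.9, 0.5), (10.0, 0.0, 1.0)]
    for row in bev_height_map(cloud):
        print(row)
```

In this sketch the first two points land in the same cell and only the taller one is kept, a small instance of the information loss the abstract refers to: once the points are collapsed, the vertical structure inside a cell can no longer be recovered from the image alone.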

Lane Detection and Classification using Cascaded CNNs

Jul 17, 2019

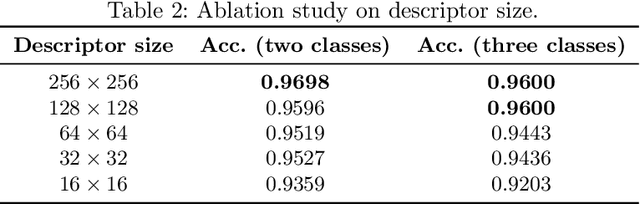

Abstract: Lane detection is extremely important for autonomous vehicles. For this reason, many approaches use lane boundary information to locate the vehicle inside the street, or to integrate GPS-based localization. As in many other computer-vision tasks, convolutional neural networks (CNNs) represent the state-of-the-art technology to identify lane boundaries. However, the position of the lane boundaries w.r.t. the vehicle may not suffice for reliable positioning, since path planning and localization may also require information about lane types. In this work, we present an end-to-end system for lane boundary identification, clustering and classification, based on two cascaded neural networks, that runs in real time. To build the system, 14,336 lane boundary instances of the TuSimple dataset for lane detection have been labelled using 8 different classes. Our dataset and the code for inference are available online.
