Nikolas Hemion

Data-driven emotional body language generation for social robotics

May 02, 2022

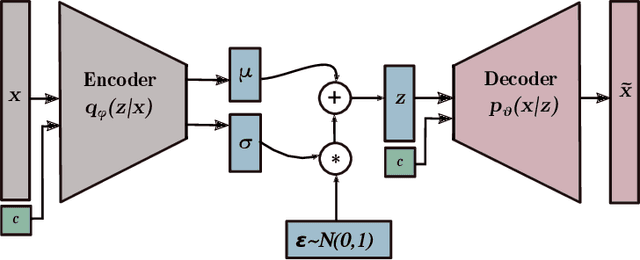

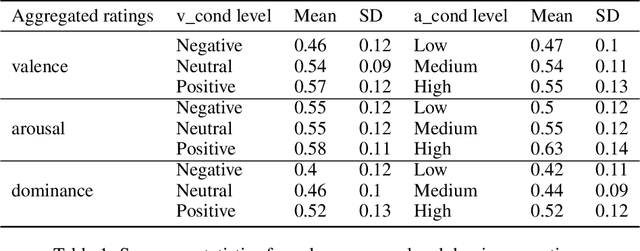

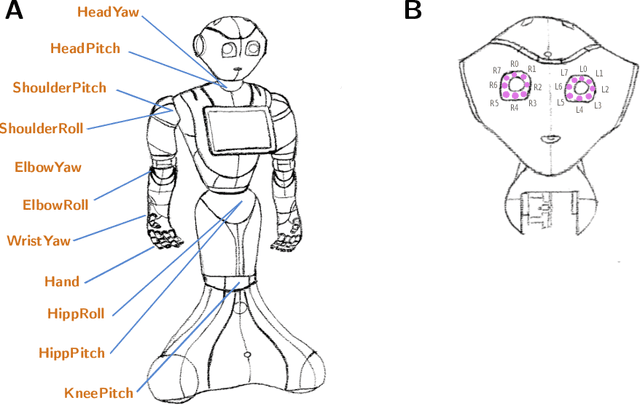

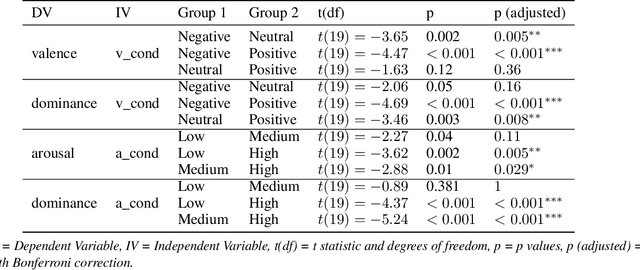

Abstract: In social robotics, endowing humanoid robots with the ability to generate bodily expressions of affect can improve human-robot interaction and collaboration, since humans attribute, and perhaps subconsciously anticipate, such traces to perceive an agent as engaging, trustworthy, and socially present. Robotic emotional body language needs to be believable, nuanced and relevant to the context. We implemented a deep learning data-driven framework that learns from a few hand-designed robotic bodily expressions and can generate numerous new ones of similar believability and lifelikeness. The framework uses the Conditional Variational Autoencoder model and a sampling approach based on the geometric properties of the model's latent space to condition the generative process on targeted levels of valence and arousal. The evaluation study found that the anthropomorphism and animacy of the generated expressions are not perceived differently from the hand-designed ones, and the emotional conditioning was adequately differentiable between most levels except the pairs of neutral-positive valence and low-medium arousal. Furthermore, an exploratory analysis of the results reveals a possible impact of the conditioning on the perceived dominance of the robot, as well as on the participants' attention.

Grounding Perception: A Developmental Approach to Sensorimotor Contingencies

Oct 03, 2018

Abstract: Sensorimotor contingency theory offers a promising account of the nature of perception, a topic rarely addressed in the robotics community. We propose a developmental framework to address the problem of the autonomous acquisition of sensorimotor contingencies by a naive robot. While exploring the world, the robot internally encodes contingencies as predictive models that capture the structure they imply in its sensorimotor experience. Three preliminary applications are presented to illustrate our approach to the acquisition of perceptive abilities: discovering the environment, discovering objects, and discovering a visual field.

Grounding object perception in a naive agent's sensorimotor experience

Sep 26, 2016

Abstract: Artificial object perception usually relies on a priori defined models and feature extraction algorithms. We study how the concept of object can be grounded in the sensorimotor experience of a naive agent. Without any knowledge about itself or the world it is immersed in, the agent explores its sensorimotor space and identifies objects as consistent networks of sensorimotor transitions, independent from their context. A fundamental drive for prediction is assumed to explain the emergence of such networks from a developmental standpoint. An algorithm is proposed and tested to illustrate the approach.
