Feiyang Liu

Towards Pixel-Level Prediction for Gaze Following: Benchmark and Approach

Nov 30, 2024

Abstract:Following the gaze of other people and analyzing the target they are looking at can help us understand what they are thinking and doing, and predict the actions that may follow. Existing methods for gaze following struggle to perform well in natural scenes with diverse objects, and they focus on gaze points rather than objects, making it difficult to deliver clear semantics and an accurate scope of the targets. To address this shortcoming, we propose a novel gaze target prediction solution named GazeSeg, which fully utilizes the spatial visual field of the person as guiding information and leads to a progressive coarse-to-fine gaze target segmentation and recognition process. Specifically, a prompt-based visual foundation model serves as the encoder, working in conjunction with three distinct decoding modules (FoV perception, heatmap generation, and segmentation) to form the framework for gaze target prediction. Then, with the head bounding box serving as an initial prompt, GazeSeg obtains the FoV map, heatmap, and segmentation map progressively, leading to a unified framework for multiple tasks (e.g. direction estimation, gaze target segmentation, and recognition). To facilitate this research, we construct and release a new dataset, comprising 72k images with pixel-level annotations and 270 categories of gaze targets, built upon the GazeFollow dataset. The quantitative evaluation shows that our approach achieves a Dice score of 0.325 in gaze target segmentation and 71.7% top-5 recognition. Meanwhile, our approach also outperforms previous state-of-the-art methods, achieving 0.953 in AUC on the gaze-following task. The dataset and code will be released.
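For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how such an overlap measure is commonly computed for two binary masks. The function name `dice_coefficient` and the convention for two empty masks are assumptions made for illustration; the abstract does not describe the authors' evaluation code.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |P & T| / (|P| + |T|) for two binary masks given as nested lists."""
    pred = [bool(v) for row in pred_mask for v in row]
    true = [bool(v) for row in true_mask for v in row]
    if len(pred) != len(true):
        raise ValueError("masks must have the same number of pixels")
    # Count pixels that are foreground in both masks.
    intersection = sum(p and t for p, t in zip(pred, true))
    total = sum(pred) + sum(true)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical 2x2 masks: the prediction covers two pixels, the ground truth one.
predicted = [[1, 1], [0, 0]]
ground_truth = [[1, 0], [0, 0]]
print(dice_coefficient(predicted, ground_truth))
```

In this toy case one pixel overlaps out of three foreground pixels in total, so the score is 2/3.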

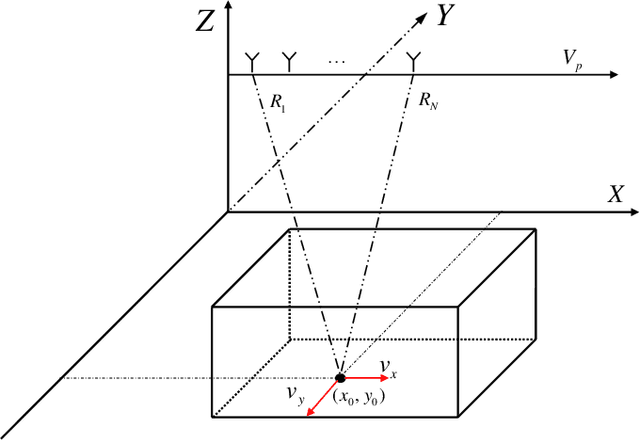

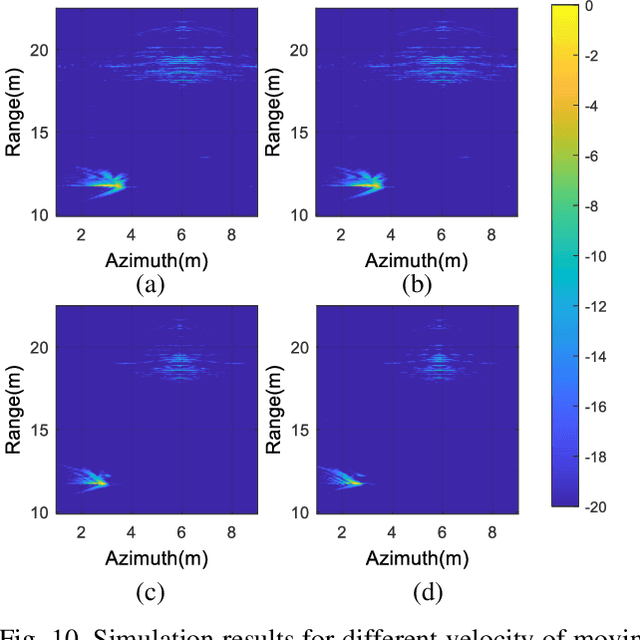

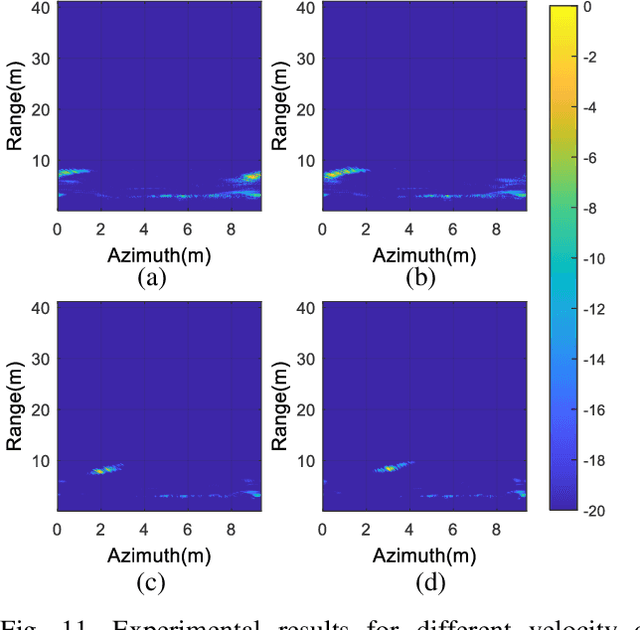

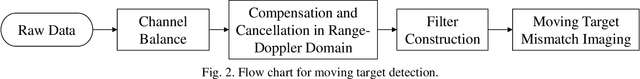

Moving Target Detection Method Based on Range-Doppler Domain Compensation and Cancellation for UAV-Mounted Radar

Jul 04, 2024

Abstract:Combining an unmanned aerial vehicle (UAV) with through-the-wall radar enables moving target detection in complex building scenes. However, the clutter generated by obstacles and static objects is typically strong and non-stationary, which severely degrades moving target detection. To address this issue, this paper proposes a moving target detection method based on range-Doppler domain compensation and cancellation for UAV-mounted dual-channel radar. In the proposed method, phase compensation is performed between the two channels in the range-Doppler domain, and cancellation is then applied to achieve coarse clutter suppression. Next, a filter is constructed from the cancellation result and the raw echoes and is used to further suppress stationary clutter. Finally, mismatch imaging is used to focus the moving target for detection. Both simulation and UAV-based experimental results are analyzed to verify the efficacy and practicability of the proposed method.
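The compensate-then-cancel idea can be illustrated with a toy sketch. It assumes, purely for illustration, that the Doppler spectrum of a single range bin is already available for both channels and that the static clutter in the second channel differs from the first only by a known per-bin phase. The function `compensate_and_cancel` and all numeric values are hypothetical; this is not the paper's algorithm, and it omits the subsequent filtering and mismatch imaging steps.

```python
import cmath


def compensate_and_cancel(ch1, ch2, clutter_phase):
    """Remove the assumed inter-channel clutter phase from ch2, then subtract ch1."""
    return [
        s2 * cmath.exp(-1j * theta) - s1
        for s1, s2, theta in zip(ch1, ch2, clutter_phase)
    ]


# Hypothetical Doppler spectrum of one range bin (four Doppler bins).
clutter = [5 + 2j, 3 - 1j, 4 + 0j, 2 + 2j]
clutter_phase = [0.0, 0.3, 0.6, 0.9]  # assumed known per-bin phase offset (radians)

# A weak moving target in bin 2 carries an extra phase between the channels.
target_bin, target_amp, target_phase = 2, 0.5 + 0j, 1.0

ch1 = list(clutter)
ch2 = [c * cmath.exp(1j * th) for c, th in zip(clutter, clutter_phase)]
ch1[target_bin] += target_amp
ch2[target_bin] += target_amp * cmath.exp(1j * (clutter_phase[target_bin] + target_phase))

residual = compensate_and_cancel(ch1, ch2, clutter_phase)
for k, r in enumerate(residual):
    print(k, round(abs(r), 6))
```

Under these assumptions the clutter-only bins cancel to numerical zero, while the target bin keeps a residual of magnitude |A| * |exp(j*phi) - 1|, where A is the target amplitude and phi its extra inter-channel phase.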

Integrated Sensing and Communication for Network-Assisted Full-Duplex Cell-Free Distributed Massive MIMO Systems

Nov 13, 2023

Abstract:In this paper, we combine the network-assisted full-duplex (NAFD) technology and distributed radar sensing to implement integrated sensing and communication (ISAC). The ISAC system features both uplink and downlink remote radio units (RRUs) equipped with communication and sensing capabilities. We evaluate the communication and sensing performance of the system using the sum communication rate and the Cramér-Rao lower bound (CRLB), respectively. We compare the performance of the proposed scheme with that of other ISAC schemes, and the results show that the proposed scheme provides more stable sensing and better communication performance. Furthermore, we propose two power allocation algorithms to jointly optimize the communication and sensing performance. One algorithm is based on the deep Q-network (DQN), and the other is based on the non-dominated sorting genetic algorithm II (NSGA-II). The proposed algorithms provide more feasible solutions and achieve better system performance than the equal power allocation algorithm.
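Because the abstract trades off sum rate (to be maximized) against CRLB (to be minimized), a short sketch of Pareto dominance may help. It shows only the selection of the first non-dominated front, which is one building block of NSGA-II, not the full algorithm and not the authors' implementation. The helper names and the candidate values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in one.

    Both tuples are treated as objectives to be minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def first_front(points):
    """Indices of the points that no other point dominates."""
    return [
        i
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


# Hypothetical (sum rate, CRLB) pairs for four candidate power allocations.
candidates = [(10.0, 0.5), (12.0, 0.8), (9.0, 0.9), (11.0, 0.6)]

# Negate the rate so that both objectives are minimized.
objectives = [(-rate, crlb) for rate, crlb in candidates]
print(first_front(objectives))
```

Here the third candidate is dominated by the first (lower rate and higher CRLB), so only candidates 0, 1, and 3 remain as trade-off solutions.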

Passive Integrated Sensing and Communication Scheme based on RF Fingerprint Information Extraction for Cell-Free RAN

Nov 10, 2023

Abstract:This paper investigates how to achieve integrated sensing and communication (ISAC) based on a cell-free radio access network (CF-RAN) architecture with a minimum footprint of communication resources. We propose a new passive sensing scheme. The scheme is based on the radio frequency (RF) fingerprint learning of the RF radio unit (RRU) to build an RF fingerprint library of RRUs. The source RRU is identified by comparing the RF fingerprints carried by the signal at the receiver side. The receiver extracts the channel parameters from the signal and estimates the channel environment, thus locating the reflectors in the environment. The proposed scheme can effectively solve the problem of interference between signals in the same time-frequency domain but in different spatial domains when multiple RRUs jointly serve users in CF-RAN architecture. Simulation results show that the proposed passive ISAC scheme can effectively detect reflector location information in the environment without degrading the communication performance.

Data Augmentation for Human Behavior Analysis in Multi-Person Conversations

Aug 03, 2023

Abstract:In this paper, we present the solution of our team HFUT-VUT for the MultiMediate Grand Challenge 2023 at ACM Multimedia 2023. The solution covers three sub-challenges: bodily behavior recognition, eye contact detection, and next speaker prediction. We select Swin Transformer as the baseline and exploit data augmentation strategies to address these three tasks. Specifically, we crop the raw video to remove the noise from other parts. At the same time, we utilize data augmentation to improve the generalization of the model. As a result, our solution achieves the best results on the corresponding test sets: a mean average precision of 0.6262 for bodily behavior recognition and an accuracy of 0.7771 for eye contact detection. In addition, our approach achieves a comparable unweighted average recall of 0.5281 for next speaker prediction.
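Unweighted average recall, the metric quoted for next speaker prediction, is the plain mean of per-class recall, so every class counts equally regardless of how often it occurs. The sketch below uses hypothetical labels and a hypothetical function name; it is not the challenge's official scoring script.

```python
def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    recalls = []
    for cls in sorted(set(y_true)):
        # Number of samples whose true label is cls.
        support = sum(1 for t in y_true if t == cls)
        # Number of those samples that were predicted correctly.
        hits = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        recalls.append(hits / support)
    return sum(recalls) / len(recalls)


# Hypothetical labels: class 0 is three times as frequent as class 1.
true_labels = [0, 0, 0, 1]
predicted_labels = [0, 0, 1, 1]
print(unweighted_average_recall(true_labels, predicted_labels))
```

In this toy case the recall is 2/3 for class 0 and 1 for class 1, giving an unweighted average of 5/6, whereas plain accuracy would be 3/4.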
