Feilong Yan

AADS: Augmented Autonomous Driving Simulation using Data-driven Algorithms

Jan 23, 2019

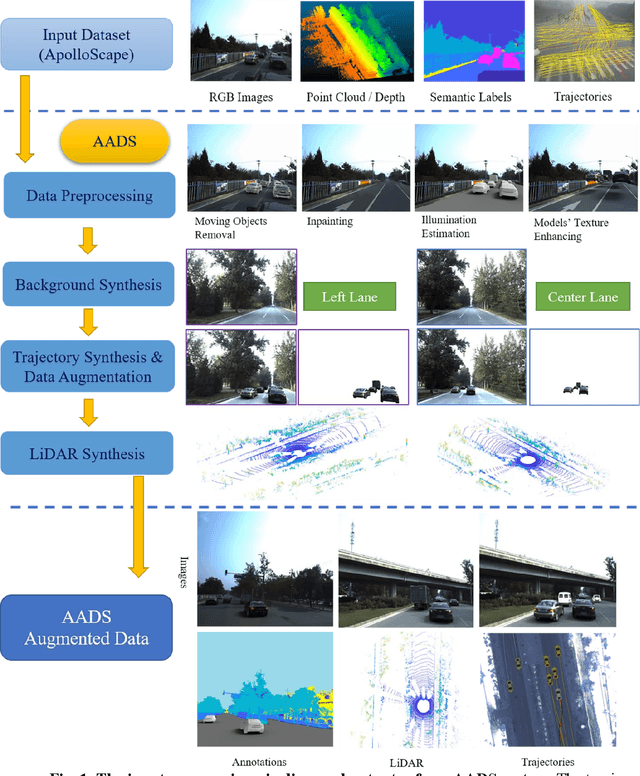

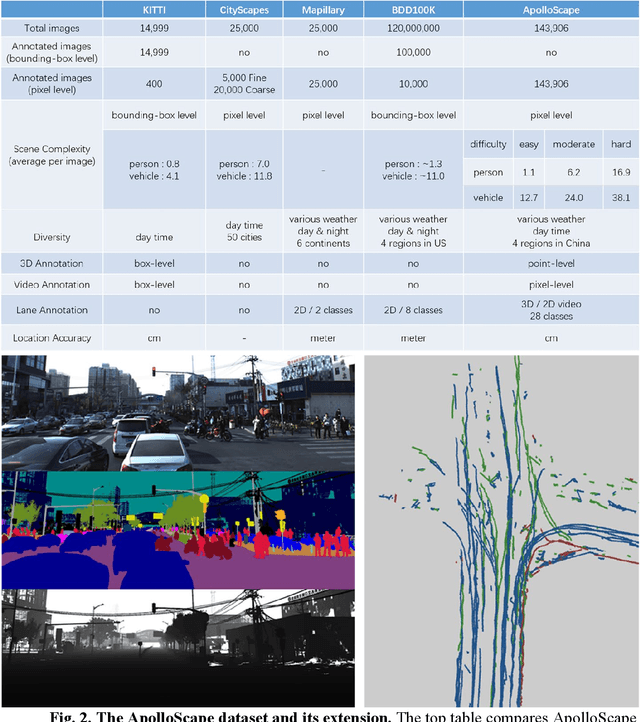

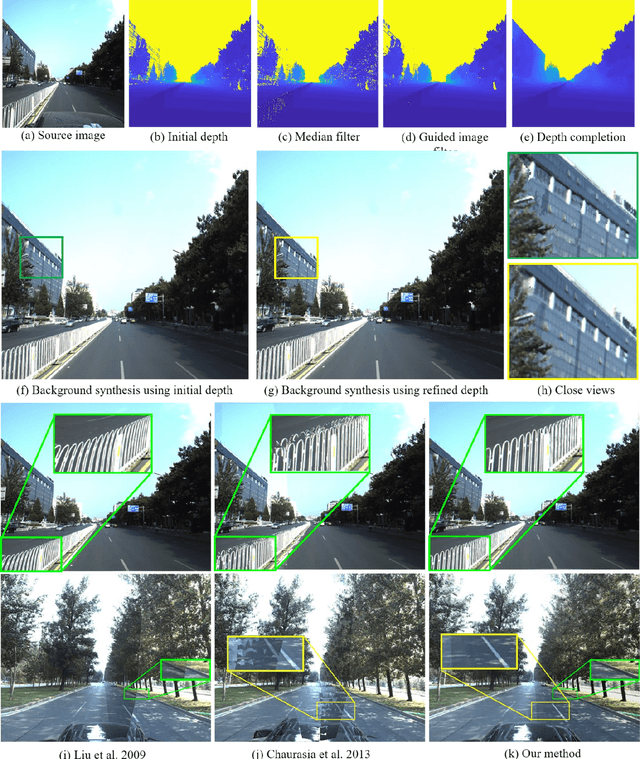

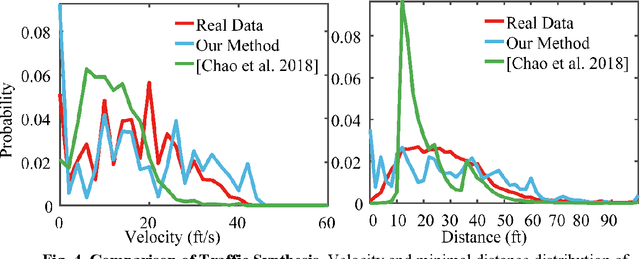

Abstract: Simulation systems have become an essential component in the development and validation of autonomous driving technologies. The prevailing state-of-the-art approach for simulation is to use game engines or high-fidelity computer graphics (CG) models to create driving scenarios. However, creating CG models and vehicle movements (i.e., the assets for simulation) remains a manual task that can be costly and time-consuming. In addition, the fidelity of CG images still lacks the richness and authenticity of real-world images, and using these images for training leads to degraded performance. In this paper we present a novel approach to address these issues: Augmented Autonomous Driving Simulation (AADS). Our formulation augments real-world pictures with a simulated traffic flow to create photo-realistic simulation images and renderings. More specifically, we use LiDAR and cameras to scan street scenes. From the acquired trajectory data, we generate highly plausible traffic flows for cars and pedestrians and compose them into the background. The composite images can be re-synthesized with different viewpoints and sensor models. The resulting images are photo-realistic, fully annotated, and ready for end-to-end training and testing of autonomous driving systems from perception to planning. We explain our system design and validate our algorithms with a number of autonomous driving tasks, from detection to segmentation and prediction. Compared to traditional approaches, our method offers unmatched scalability and realism. Scalability is particularly important for AD simulation, and we believe the complexity and diversity of the real world cannot be realistically captured in a virtual environment. Our augmented approach combines the flexibility of a virtual environment (e.g., vehicle movements) with the richness of the real world to allow effective simulation of anywhere on earth.
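The idea of composing simulated traffic into a real background image, while keeping annotations for free, can be illustrated with a minimal sketch. This is not the AADS pipeline itself: it assumes the foreground has already been rendered at the background's resolution with a per-pixel alpha matte, and the function name `composite` and its parameters are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def composite(
    background: Image,
    foreground: Image,
    alpha: List[List[float]],
    label_id: int,
) -> Tuple[Image, List[List[int]]]:
    """Alpha-blend a rendered foreground over a real background.

    Returns the blended image and a label mask in which pixels mostly
    covered by the foreground carry `label_id` and all others carry 0.
    All three inputs are assumed to share the same height and width.
    """
    blended: Image = []
    labels: List[List[int]] = []
    for bg_row, fg_row, a_row in zip(background, foreground, alpha):
        out_row: List[Pixel] = []
        label_row: List[int] = []
        for bg, fg, a in zip(bg_row, fg_row, a_row):
            # Standard "over" operator applied per colour channel.
            out_row.append(
                tuple(round(a * f + (1.0 - a) * b) for f, b in zip(fg, bg))
            )
            # The annotation comes directly from the matte; no manual labeling.
            label_row.append(label_id if a > 0.5 else 0)
        blended.append(out_row)
        labels.append(label_row)
    return blended, labels
```

Because the inserted object is synthetic, its mask is known exactly, which is what makes the composite "fully annotated" in this simplified setting.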

Simulating LIDAR Point Cloud for Autonomous Driving using Real-world Scenes and Traffic Flows

Nov 17, 2018

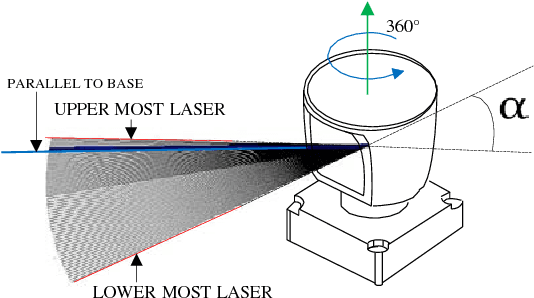

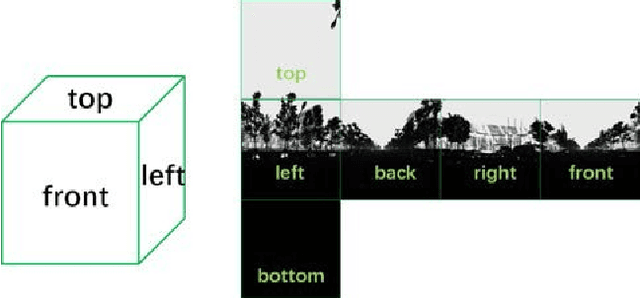

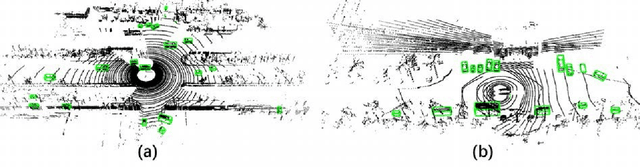

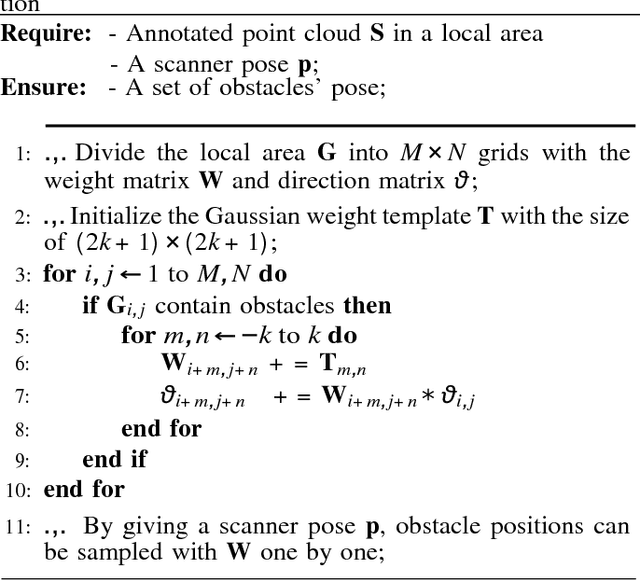

Abstract: We present a LIDAR simulation framework that can automatically generate 3D point clouds based on LIDAR type and placement. The point cloud, annotated with ground truth semantic labels, is to be used as training data to improve environmental perception capabilities for autonomous driving vehicles. Different from previous simulators, we generate the point cloud based on real environments and real traffic flow. More specifically, we employ a mobile LIDAR scanner with cameras to capture real-world scenes. The input to our simulation framework includes a dense 3D point cloud and registered color images. Moving objects (such as cars, pedestrians, and bicyclists) are automatically identified and recorded. These objects are then removed from the input point cloud to restore a static background (i.e., the environment without movable objects). We can then insert synthetic models of various obstacles, such as vehicles and pedestrians, into the static background to create various traffic scenes. A novel LIDAR renderer takes the composite scene and generates new realistic LIDAR points that are already annotated at point level for synthetic objects. Experimental results show that our system narrows the performance gap between simulated and real data to 1% to 6% in different applications, and that for model fine-tuning, adding only 10% to 20% extra real data is enough to outperform the original model trained on the full real dataset.
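The background-restoration and insertion steps described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the paper's method: moving objects are assumed to be given as axis-aligned bounding boxes, the synthetic object is already a point set, and the LIDAR rendering (ray-casting) step is omitted entirely. The names `remove_moving_objects` and `insert_object` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[Point, Point]  # (min corner, max corner), axis-aligned


def inside(point: Point, box: Box) -> bool:
    """Return True if the point lies within the axis-aligned box."""
    lo, hi = box
    return all(l <= c <= h for c, l, h in zip(point, lo, hi))


def remove_moving_objects(points: List[Point], boxes: List[Box]) -> List[Point]:
    """Drop every point that falls inside a detected moving-object box,
    leaving an approximation of the static background."""
    return [p for p in points if not any(inside(p, b) for b in boxes)]


def insert_object(
    background: List[Point],
    object_points: List[Point],
    offset: Point,
    label: int,
) -> List[Tuple[Point, int]]:
    """Place a synthetic object into the static background.

    Background points get label 0; the translated object points get
    `label`, so the result is annotated at point level.
    """
    scene = [(p, 0) for p in background]
    dx, dy, dz = offset
    for x, y, z in object_points:
        scene.append(((x + dx, y + dy, z + dz), label))
    return scene
```

A usage example: `remove_moving_objects(scan, detected_boxes)` yields the static scene, and `insert_object(static, car_points, (2.0, 3.0, 0.0), 1)` returns labeled points ready for a downstream renderer or training loader.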
