Fanny Yang

OneStory: Coherent Multi-Shot Video Generation with Adaptive Memory

Dec 08, 2025

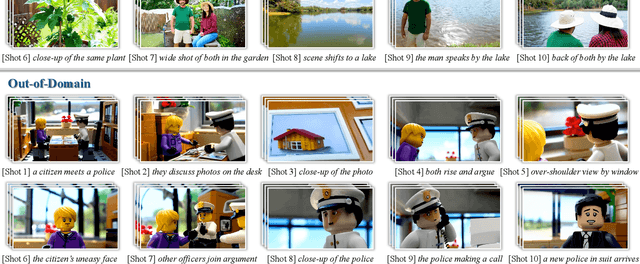

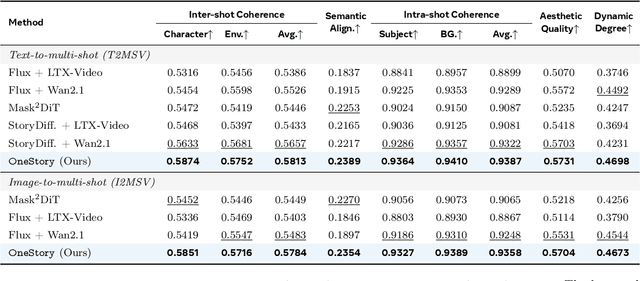

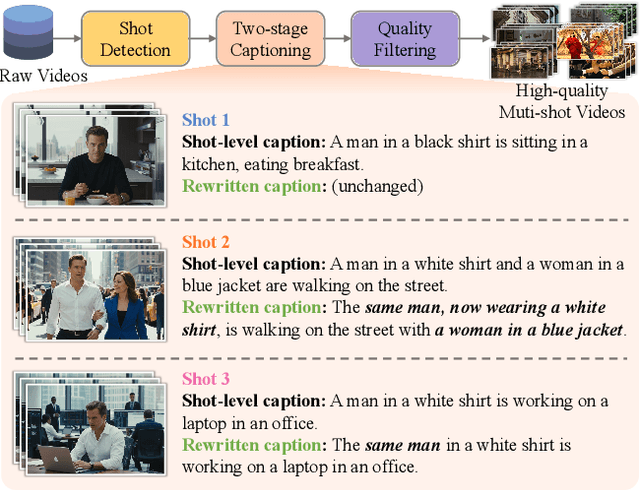

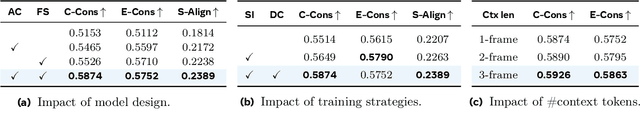

Abstract: Storytelling in real-world videos often unfolds through multiple shots -- discontinuous yet semantically connected clips that together convey a coherent narrative. However, existing multi-shot video generation (MSV) methods struggle to effectively model long-range cross-shot context, as they rely on limited temporal windows or single keyframe conditioning, leading to degraded performance under complex narratives. In this work, we propose OneStory, enabling global yet compact cross-shot context modeling for consistent and scalable narrative generation. OneStory reformulates MSV as a next-shot generation task, enabling autoregressive shot synthesis while leveraging pretrained image-to-video (I2V) models for strong visual conditioning. We introduce two key modules: a Frame Selection module that constructs a semantically-relevant global memory based on informative frames from prior shots, and an Adaptive Conditioner that performs importance-guided patchification to generate compact context for direct conditioning. We further curate a high-quality multi-shot dataset with referential captions to mirror real-world storytelling patterns, and design effective training strategies under the next-shot paradigm. Finetuned from a pretrained I2V model on our curated 60K dataset, OneStory achieves state-of-the-art narrative coherence across diverse and complex scenes in both text- and image-conditioned settings, enabling controllable and immersive long-form video storytelling.

On the sample complexity of semi-supervised multi-objective learning

Aug 23, 2025

Abstract: In multi-objective learning (MOL), several possibly competing prediction tasks must be solved jointly by a single model. Achieving good trade-offs may require a model class $\mathcal{G}$ with larger capacity than what is necessary for solving the individual tasks. This, in turn, increases the statistical cost, as reflected in known MOL bounds that depend on the complexity of $\mathcal{G}$. We show that this cost is unavoidable for some losses, even in an idealized semi-supervised setting, where the learner has access to the Bayes-optimal solutions for the individual tasks as well as the marginal distributions over the covariates. On the other hand, for objectives defined with Bregman losses, we prove that the complexity of $\mathcal{G}$ may come into play only in terms of unlabeled data. Concretely, we establish sample complexity upper bounds, showing precisely when and how unlabeled data can significantly alleviate the need for labeled data. These rates are achieved by a simple, semi-supervised algorithm via pseudo-labeling.

ROC-n-reroll: How verifier imperfection affects test-time scaling

Jul 16, 2025

Abstract: Test-time scaling aims to improve language model performance by leveraging additional compute during inference. While many works have empirically studied techniques like Best-of-N (BoN) and rejection sampling that make use of a verifier to enable test-time scaling, there is little theoretical understanding of how verifier imperfection affects performance. In this work, we address this gap. Specifically, we prove how instance-level accuracy of these methods is precisely characterized by the geometry of the verifier's ROC curve. Interestingly, while scaling is determined by the local geometry of the ROC curve for rejection sampling, it depends on global properties of the ROC curve for BoN. As a consequence, when the ROC curve is unknown, it is impossible to extrapolate the performance of rejection sampling based on the low-compute regime. Furthermore, while rejection sampling outperforms BoN for fixed compute, in the infinite-compute limit both methods converge to the same level of accuracy, determined by the slope of the ROC curve near the origin. Our theoretical results are confirmed by experiments on GSM8K using different versions of Llama and Qwen to generate and verify solutions.
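
The two test-time scaling schemes named in this abstract can be illustrated with a toy simulation. The sketch below is not the paper's setup: it assumes each candidate answer is independently correct with probability `p_correct`, and that the verifier emits a unit-variance Gaussian score whose mean is shifted by `separation` for correct answers. All function names are hypothetical. For rejection sampling at a fixed threshold, Bayes' rule gives the accuracy of an accepted sample directly from the operating point (TPR, FPR) on the verifier's ROC curve.

```python
import random


def best_of_n_accuracy(p_correct, n, separation, trials, seed=0):
    """Monte Carlo estimate of Best-of-N accuracy under the toy score model."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        best_score, best_is_correct = float("-inf"), False
        for _ in range(n):
            is_correct = rng.random() < p_correct
            # Assumed verifier: Gaussian score, shifted up for correct answers.
            score = rng.gauss(separation if is_correct else 0.0, 1.0)
            if score > best_score:
                best_score, best_is_correct = score, is_correct
        hits += best_is_correct
    return hits / trials


def rejection_sampling_accuracy(p_correct, tpr, fpr):
    """Probability that an accepted sample is correct, by Bayes' rule."""
    accepted_correct = p_correct * tpr
    accepted_wrong = (1.0 - p_correct) * fpr
    return accepted_correct / (accepted_correct + accepted_wrong)


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(n, best_of_n_accuracy(0.3, n, separation=2.0, trials=20000))
    print(rejection_sampling_accuracy(0.3, tpr=0.8, fpr=0.2))
```

The rejection-sampling expression can be rewritten as 1 / (1 + ((1 - p) / p) * FPR / TPR), so in this toy model it depends on the verifier only through the ratio TPR/FPR at the chosen threshold, which is the slope of the line from the origin to that point on the ROC curve.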

Doubly robust identification of treatment effects from multiple environments

Mar 18, 2025

Abstract: Practical and ethical constraints often require the use of observational data for causal inference, particularly in medicine and social sciences. Yet, observational datasets are prone to confounding, potentially compromising the validity of causal conclusions. While it is possible to correct for biases if the underlying causal graph is known, this is rarely a feasible ask in practical scenarios. A common strategy is to adjust for all available covariates, yet this approach can yield biased treatment effect estimates, especially when post-treatment or unobserved variables are present. We propose RAMEN, an algorithm that produces unbiased treatment effect estimates by leveraging the heterogeneity of multiple data sources without the need to know or learn the underlying causal graph. Notably, RAMEN achieves doubly robust identification: it can identify the treatment effect whenever the causal parents of the treatment or those of the outcome are observed, and the node whose parents are observed satisfies an invariance assumption. Empirical evaluations on synthetic and real-world datasets show that our approach outperforms existing methods.

Learning Pareto manifolds in high dimensions: How can regularization help?

Mar 11, 2025

Abstract: Simultaneously addressing multiple objectives is becoming increasingly important in modern machine learning. At the same time, data is often high-dimensional and costly to label. For a single objective such as prediction risk, conventional regularization techniques are known to improve generalization when the data exhibits low-dimensional structure like sparsity. However, it is largely unexplored how to leverage this structure in the context of multi-objective learning (MOL) with multiple competing objectives. In this work, we discuss how the application of vanilla regularization approaches can fail, and propose a two-stage MOL framework that can successfully leverage low-dimensional structure. We demonstrate its effectiveness experimentally for multi-distribution learning and fairness-risk trade-offs.

Efficient Randomized Experiments Using Foundation Models

Feb 06, 2025

Abstract: Randomized experiments are the preferred approach for evaluating the effects of interventions, but they are costly and often yield estimates with substantial uncertainty. On the other hand, in silico experiments leveraging foundation models offer a cost-effective alternative that can potentially attain higher statistical precision. However, the benefits of in silico experiments come with a significant risk: statistical inferences are not valid if the models fail to accurately predict experimental responses to interventions. In this paper, we propose a novel approach that integrates the predictions from multiple foundation models with experimental data while preserving valid statistical inference. Our estimator is consistent and asymptotically normal, with asymptotic variance no larger than the standard estimator based on experimental data alone. Importantly, these statistical properties hold even when model predictions are arbitrarily biased. Empirical results across several randomized experiments show that our estimator offers substantial precision gains, equivalent to a reduction of up to 20% in the sample size needed to match the same precision as the standard estimator based on experimental data alone.
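
One simple way to see why biased predictions need not invalidate inference in a randomized experiment is a prediction-adjusted difference in means. The sketch below is an illustration under stated assumptions, not the estimator proposed in the paper: `predictions` is a hypothetical list of model outputs computed from pre-treatment information only, so randomization gives them the same distribution in both arms and any bias they carry cancels in the difference. Function and variable names are invented for this example.

```python
def adjusted_difference_in_means(outcomes, treated, predictions):
    """Difference in means of residuals (outcome minus model prediction)."""
    treated_resid = [y - f for y, t, f in zip(outcomes, treated, predictions) if t]
    control_resid = [y - f for y, t, f in zip(outcomes, treated, predictions) if not t]
    return (sum(treated_resid) / len(treated_resid)
            - sum(control_resid) / len(control_resid))


if __name__ == "__main__":
    baseline = [1.0, 4.0, 2.0, 7.0, 3.0, 5.0]   # untreated outcome of each unit
    treated = [True, False, True, False, True, False]
    effect = 2.0                                 # true effect in this toy data
    outcomes = [b + effect * t for b, t in zip(baseline, treated)]

    # Predictions that track the baseline but carry a large constant bias.
    biased_predictions = [b + 10.0 for b in baseline]
    print(adjusted_difference_in_means(outcomes, treated, biased_predictions))

    # All-zero predictions reduce to the plain difference in means.
    print(adjusted_difference_in_means(outcomes, treated, [0.0] * 6))
```

In this toy data the plain difference in means misses the true effect because the two arms happen to have different baselines, while the adjusted estimate recovers it despite the constant bias in the predictions. This unit-weight adjustment does not by itself guarantee a variance reduction; the variance guarantee stated in the abstract concerns the authors' own estimator.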

Achievable distributional robustness when the robust risk is only partially identified

Feb 04, 2025

Abstract: In safety-critical applications, machine learning models should generalize well under worst-case distribution shifts, that is, have a small robust risk. Invariance-based algorithms can provably take advantage of structural assumptions on the shifts when the training distributions are heterogeneous enough to identify the robust risk. However, in practice, such identifiability conditions are rarely satisfied -- a scenario so far underexplored in the theoretical literature. In this paper, we aim to fill the gap and propose to study the more general setting when the robust risk is only partially identifiable. In particular, we introduce the worst-case robust risk as a new measure of robustness that is always well-defined regardless of identifiability. Its minimum corresponds to an algorithm-independent (population) minimax quantity that measures the best achievable robustness under partial identifiability. While these concepts can be defined more broadly, in this paper we introduce and derive them explicitly for a linear model for concreteness of the presentation. First, we show that existing robustness methods are provably suboptimal in the partially identifiable case. We then evaluate these methods and the minimizer of the (empirical) worst-case robust risk on real-world gene expression data and find a similar trend: the test error of existing robustness methods grows increasingly suboptimal as the fraction of data from unseen environments increases, whereas accounting for partial identifiability allows for better generalization.

Copyright-Protected Language Generation via Adaptive Model Fusion

Dec 09, 2024

Abstract: The risk of language models reproducing copyrighted material from their training data has led to the development of various protective measures. Among these, inference-time strategies that impose constraints via post-processing have shown promise in addressing the complexities of copyright regulation. However, they often incur prohibitive computational costs or suffer from performance trade-offs. To overcome these limitations, we introduce Copyright-Protecting Model Fusion (CP-Fuse), a novel approach that combines models trained on disjoint sets of copyrighted material during inference. In particular, CP-Fuse adaptively aggregates the model outputs to minimize the reproduction of copyrighted content, adhering to a crucial balancing property that prevents the regurgitation of memorized data. Through extensive experiments, we show that CP-Fuse significantly reduces the reproduction of protected material without compromising the quality of text and code generation. Moreover, its post-hoc nature allows seamless integration with other protective measures, further enhancing copyright safeguards. Lastly, we show that CP-Fuse is robust against common techniques for extracting training data.

Atmospheric Transport Modeling of CO$_2$ with Neural Networks

Aug 20, 2024

Abstract: Accurately describing the distribution of CO$_2$ in the atmosphere with atmospheric tracer transport models is essential for greenhouse gas monitoring and verification support systems to aid implementation of international climate agreements. Large deep neural networks are poised to revolutionize weather prediction, which requires 3D modeling of the atmosphere. While similar in this regard, atmospheric transport modeling is subject to new challenges. Both stable predictions over longer time horizons and mass conservation throughout need to be achieved, while IO plays a larger role compared to computational costs. In this study we explore four different deep neural networks (UNet, GraphCast, Spherical Fourier Neural Operator and SwinTransformer), which have proven to be state of the art in weather prediction, to assess their usefulness for atmospheric tracer transport modeling. For this, we assemble the CarbonBench dataset, a systematic benchmark tailored for machine learning emulators of Eulerian atmospheric transport. Through architectural adjustments, we decouple the performance of our emulators from the distribution shift caused by a steady rise in atmospheric CO$_2$. More specifically, we center CO$_2$ input fields to zero mean and then use an explicit flux scheme and a mass fixer to ensure mass balance. This design enables stable and mass-conserving transport for over 6 months with all four neural network architectures. In our study, the SwinTransformer displays particularly strong emulation skill (90-day $R^2 > 0.99$), with physically plausible emulation even for forward runs of multiple years. This work paves the way towards high-resolution forward and inverse modeling of inert trace gases with neural networks.
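
The centering and mass-fixing steps mentioned in this abstract can be sketched on a tiny one-dimensional grid. The abstract does not specify how these steps are implemented, so the code below makes its own assumptions: the centering subtracts an air-mass-weighted mean, and the mass fixer is a generic global multiplicative rescaling applied to the full (uncentered) field. The function names and the three-cell grid are hypothetical, and the explicit flux scheme is not modeled.

```python
def center_field(mixing_ratio, air_mass):
    """Subtract the air-mass-weighted mean so the field has zero weighted mean."""
    mean = sum(c * m for c, m in zip(mixing_ratio, air_mass)) / sum(air_mass)
    return [c - mean for c in mixing_ratio], mean


def tracer_mass(mixing_ratio, air_mass):
    """Total tracer mass: mixing ratio times air mass, summed over grid cells."""
    return sum(c * m for c, m in zip(mixing_ratio, air_mass))


def fix_mass(predicted, air_mass, target_mass):
    """Rescale the predicted field so its total tracer mass equals target_mass."""
    scale = target_mass / tracer_mass(predicted, air_mass)
    return [c * scale for c in predicted]


if __name__ == "__main__":
    air_mass = [1.0, 2.0, 1.0]          # assumed air mass per grid cell
    field = [400.0, 410.0, 420.0]       # assumed CO2 mixing ratios
    centered, mean = center_field(field, air_mass)

    # Stand-in for a network output that has spuriously gained mass.
    drifted = [c + mean + 0.5 for c in centered]
    fixed = fix_mass(drifted, air_mass, tracer_mass(field, air_mass))
    print(mean, tracer_mass(field, air_mass), tracer_mass(fixed, air_mass))
```

Centering removes the slowly rising background level from the network input, and the fixer restores the total mass after each step so that small per-step errors cannot accumulate into a drift over long rollouts.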

Strong Copyright Protection for Language Models via Adaptive Model Fusion

Jul 29, 2024

Abstract: The risk of language models unintentionally reproducing copyrighted material from their training data has led to the development of various protective measures. In this paper, we propose model fusion as an effective solution to safeguard against copyright infringement. In particular, we introduce Copyright-Protecting Fusion (CP-Fuse), an algorithm that adaptively combines language models to minimize the reproduction of protected materials. CP-Fuse is inspired by the recently proposed Near-Access Free (NAF) framework and additionally incorporates a desirable balancing property that we demonstrate prevents the reproduction of memorized training data. Our results show that CP-Fuse significantly reduces the memorization of copyrighted content while maintaining high-quality text and code generation. Furthermore, we demonstrate how CP-Fuse can be integrated with other techniques for enhanced protection.
