Erman Ayday

Privacy-Preserving AI-Enabled Decentralized Learning and Employment Records System

Jan 06, 2026

Abstract:Learning and Employment Record (LER) systems are emerging as critical infrastructure for securely compiling and sharing educational and work achievements. Existing blockchain-based platforms leverage verifiable credentials but typically lack automated skill-credential generation and the ability to incorporate unstructured evidence of learning. In this paper, a privacy-preserving, AI-enabled decentralized LER system is proposed to address these gaps. Digitally signed transcripts from educational institutions are accepted, and verifiable self-issued skill credentials are derived inside a trusted execution environment (TEE) by a natural language processing pipeline that analyzes formal records (e.g., transcripts, syllabi) and informal artifacts. All verification and job-skill matching are performed inside the enclave with selective disclosure, so raw credentials and private keys remain enclave-confined. Job matching relies solely on attested skill vectors and is invariant to non-skill resume fields, thereby reducing opportunities for screening bias. The NLP component was evaluated on sample learner data; the mapping follows the validated Syllabus-to-O*NET methodology, and a stability test across repeated runs observed <5% variance in top-ranked skills. Formal security statements and proof sketches are provided showing that derived credentials are unforgeable and that sensitive information remains confidential. The proposed system thus supports secure education and employment credentialing, robust transcript verification, and automated, privacy-preserving skill extraction within a decentralized framework.
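
The abstract does not state how skill vectors are compared, so the following is only a minimal sketch of skill-only matching, assuming cosine similarity over sparse skill-weight dictionaries. The function names `cosine_match` and `rank_jobs` and the example skill labels are hypothetical. The point it illustrates is the invariance property: the scorer accepts nothing but skill vectors, so non-skill resume fields cannot influence the ranking.

```python
import math


def cosine_match(candidate_skills, job_skills):
    """Cosine similarity between two sparse skill vectors (dict: skill -> weight).

    Assumption: cosine similarity is used purely for illustration; the
    abstract does not name the matching function.
    """
    shared = set(candidate_skills) & set(job_skills)
    dot = sum(candidate_skills[s] * job_skills[s] for s in shared)
    norm_c = math.sqrt(sum(w * w for w in candidate_skills.values()))
    norm_j = math.sqrt(sum(w * w for w in job_skills.values()))
    if norm_c == 0.0 or norm_j == 0.0:
        return 0.0
    return dot / (norm_c * norm_j)


def rank_jobs(candidate_skills, jobs):
    """Return job ids sorted from best to worst match.

    `jobs` maps a job id to its required skill vector. Only skill vectors
    enter the computation, so name, age, or address can never change the order.
    """
    scores = {job_id: cosine_match(candidate_skills, req) for job_id, req in jobs.items()}
    return sorted(scores, key=lambda job_id: scores[job_id], reverse=True)
```

For example, `rank_jobs({"python": 0.9, "sql": 0.4}, {"analyst": {"sql": 1.0}, "developer": {"python": 1.0}})` places `"developer"` first, because the candidate's strongest attested skill aligns with that posting.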

Comparing Reconstruction Attacks on Pretrained Versus Full Fine-tuned Large Language Model Embeddings on Homo Sapiens Splice Sites Genomic Data

Nov 09, 2025

Abstract:This study investigates embedding reconstruction attacks in large language models (LLMs) applied to genomic sequences, with a specific focus on how fine-tuning affects vulnerability to these attacks. Building upon Pan et al.'s seminal work demonstrating that embeddings from pretrained language models can leak sensitive information, we conduct a comprehensive analysis using the HS3D genomic dataset to determine whether task-specific optimization strengthens or weakens privacy protections. Our research extends Pan et al.'s work in three significant dimensions. First, we apply their reconstruction attack pipeline to pretrained and fine-tuned model embeddings, addressing a critical gap in their methodology that did not specify embedding types. Second, we implement specialized tokenization mechanisms tailored specifically for DNA sequences, enhancing the model's ability to process genomic data, as these models are pretrained on natural language and not DNA. Third, we perform a detailed comparative analysis examining position-specific, nucleotide-type, and privacy changes between pretrained and fine-tuned embeddings. We assess embedding vulnerabilities across different types and dimensions, providing deeper insights into how task adaptation shifts privacy risks throughout genomic sequences. Our findings show a clear distinction in reconstruction vulnerability between pretrained and fine-tuned embeddings. Notably, fine-tuning strengthens resistance to reconstruction attacks in multiple architectures, namely XLNet (+19.8%), GPT-2 (+9.8%), and BERT (+7.8%), pointing to task-specific optimization as a potential privacy enhancement mechanism. These results highlight the need for advanced protective mechanisms for language models processing sensitive genomic data, while highlighting fine-tuning as a potential privacy-enhancing technique worth further exploration.

ZKPROV: A Zero-Knowledge Approach to Dataset Provenance for Large Language Models

Jun 26, 2025

Abstract:As the deployment of large language models (LLMs) grows in sensitive domains, ensuring the integrity of their computational provenance becomes a critical challenge, particularly in regulated sectors such as healthcare, where strict requirements apply to dataset usage. We introduce ZKPROV, a novel cryptographic framework that enables zero-knowledge proofs of LLM provenance. It allows users to verify that a model is trained on a reliable dataset without revealing sensitive information about it or its parameters. Unlike prior approaches that focus on complete verification of the training process (incurring significant computational cost) or depend on trusted execution environments, ZKPROV offers a distinct balance. Our method cryptographically binds a trained model to its authorized training dataset(s) through zero-knowledge proofs while avoiding proof of every training step. By leveraging dataset-signed metadata and compact model parameter commitments, ZKPROV provides sound and privacy-preserving assurances that the result of the LLM is derived from a model trained on the claimed authorized and relevant dataset. Experimental results demonstrate the efficiency and scalability of ZKPROV in generating this proof and verifying it, achieving a practical solution for real-world deployments. We also provide formal security guarantees, proving that our approach preserves dataset confidentiality while ensuring trustworthy dataset provenance.

Empowering Digital Agriculture: A Privacy-Preserving Framework for Data Sharing and Collaborative Research

Jun 25, 2025

Abstract:Data-driven agriculture, which integrates technology and data into agricultural practices, has the potential to improve crop yield, disease resilience, and long-term soil health. However, privacy concerns, such as adverse pricing, discrimination, and resource manipulation, deter farmers from sharing data, as it can be used against them. To address this barrier, we propose a privacy-preserving framework that enables secure data sharing and collaboration for research and development while mitigating privacy risks. The framework combines dimensionality reduction techniques (like Principal Component Analysis (PCA)) and differential privacy by introducing Laplacian noise to protect sensitive information. The proposed framework allows researchers to identify potential collaborators for a target farmer and train personalized machine learning models either on the data of identified collaborators via federated learning or directly on the aggregated privacy-protected data. It also allows farmers to identify potential collaborators based on similarities. We have validated this on real-life datasets, demonstrating robust privacy protection against adversarial attacks and utility performance comparable to a centralized system. We demonstrate how this framework can facilitate collaboration among farmers and help researchers pursue broader research objectives. The adoption of the framework can empower researchers and policymakers to leverage agricultural data responsibly, paving the way for transformative advances in data-driven agriculture. By addressing critical privacy challenges, this work supports secure data integration, fostering innovation and sustainability in agricultural systems.
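
As a rough illustration of combining PCA with Laplace noise, the sketch below projects each record onto the leading principal direction, clips the score, and perturbs it. Everything beyond "PCA plus Laplace noise" is an assumption made for the example: the single retained component, the clipping bound `clip`, the per-record sensitivity of `2 * clip`, and the function names. The principal direction here is computed from the raw data without noise, which a full differentially private pipeline would also need to account for.

```python
import math
import random


def top_component(rows, iters=200):
    """Column means and leading principal direction, found by power iteration."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_projection(rows, epsilon, clip=1.0, rng=None):
    """Project each record onto the top component, clip, and add Laplace noise.

    Assumption: each clipped score lies in [-clip, clip], so the sensitivity
    of a single released score is 2 * clip and the noise scale is 2 * clip / epsilon.
    """
    rng = rng or random.Random()
    means, v = top_component(rows)
    scale = 2.0 * clip / epsilon
    noisy = []
    for r in rows:
        score = sum((r[j] - means[j]) * v[j] for j in range(len(v)))
        score = max(-clip, min(clip, score))
        noisy.append(score + laplace_noise(scale, rng))
    return noisy
```

A smaller `epsilon` gives a larger noise scale and therefore stronger protection at the cost of utility; the value that balances the two depends on the dataset and is not specified here.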

The Feasibility of Topic-Based Watermarking on Academic Peer Reviews

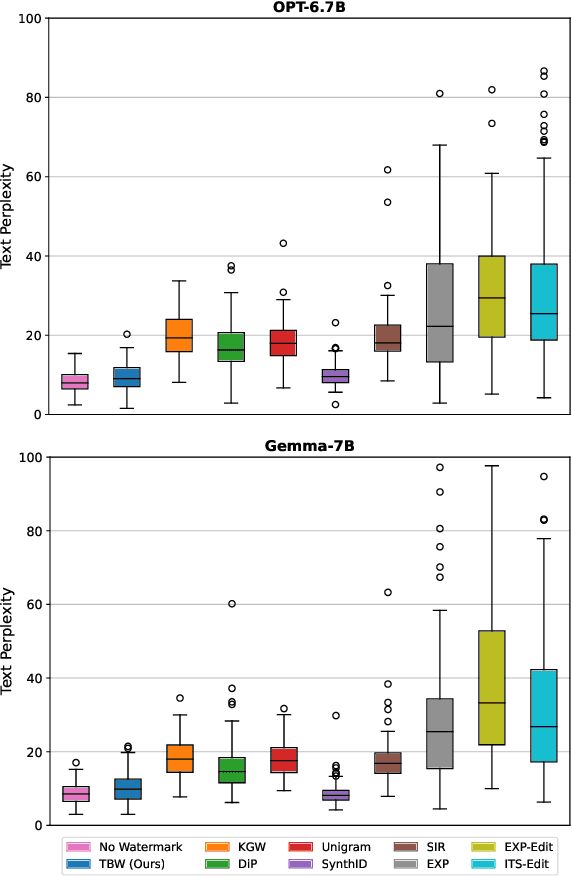

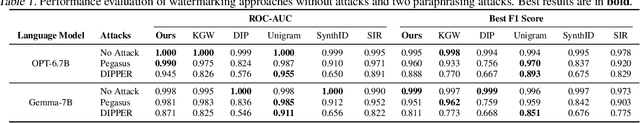

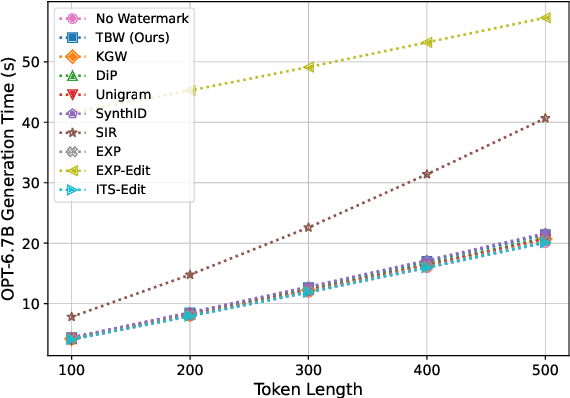

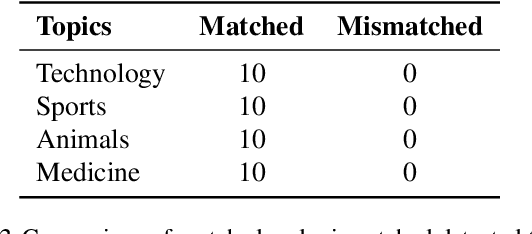

May 27, 2025

Abstract:Large language models (LLMs) are increasingly integrated into academic workflows, with many conferences and journals permitting their use for tasks such as language refinement and literature summarization. However, their use in peer review remains prohibited due to concerns around confidentiality breaches, hallucinated content, and inconsistent evaluations. As LLM-generated text becomes increasingly indistinguishable from human writing, there is a growing need for reliable attribution mechanisms to preserve the integrity of the review process. In this work, we evaluate topic-based watermarking (TBW), a lightweight, semantic-aware technique designed to embed detectable signals into LLM-generated text. We conduct a comprehensive assessment across multiple LLM configurations, including base, few-shot, and fine-tuned variants, using authentic peer review data from academic conferences. Our results show that TBW maintains review quality relative to non-watermarked outputs, while demonstrating strong robustness to paraphrasing-based evasion. These findings highlight the viability of TBW as a minimally intrusive and practical solution for enforcing LLM usage policies in peer review.

Privacy-Preserving Model and Preprocessing Verification for Machine Learning

Jan 14, 2025

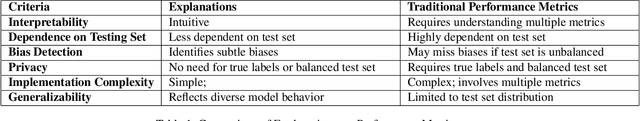

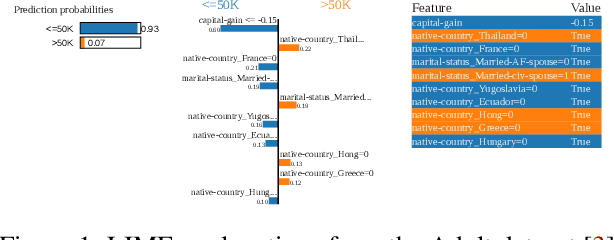

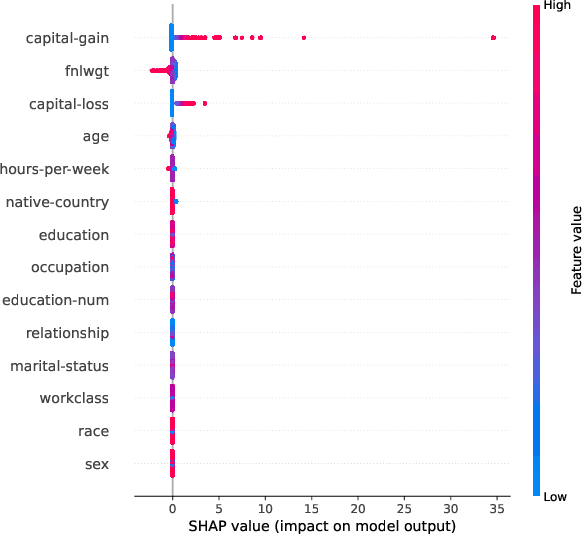

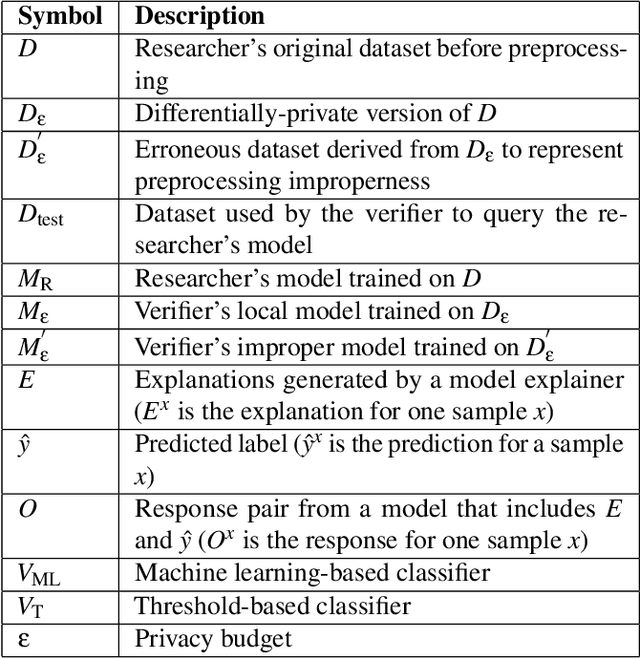

Abstract:This paper presents a framework for privacy-preserving verification of machine learning models, focusing on models trained on sensitive data. Integrating Local Differential Privacy (LDP) with model explanations from LIME and SHAP, our framework enables robust verification without compromising individual privacy. It addresses two key tasks: binary classification, to verify if a target model was trained correctly by applying the appropriate preprocessing steps, and multi-class classification, to identify specific preprocessing errors. Evaluations on three real-world datasets (Diabetes, Adult, and Student Record) demonstrate that while the ML-based approach is particularly effective in binary tasks, the threshold-based method performs comparably in multi-class tasks. Results indicate that although verification accuracy varies across datasets and noise levels, the framework provides effective detection of preprocessing errors, strong privacy guarantees, and practical applicability for safeguarding sensitive data.

Privacy-Preserving Data Linkage Across Private and Public Datasets for Collaborative Agriculture Research

Sep 09, 2024

Abstract:Digital agriculture leverages technology to enhance crop yield, disease resilience, and soil health, playing a critical role in agricultural research. However, it raises privacy concerns such as adverse pricing, price discrimination, higher insurance costs, and manipulation of resources, deterring farm operators from sharing data due to potential misuse. This study introduces a privacy-preserving framework that addresses these risks while allowing secure data sharing for digital agriculture. Our framework enables comprehensive data analysis while protecting privacy. It allows stakeholders to harness research-driven policies that link public and private datasets. The proposed algorithm achieves this by: (1) identifying similar farmers based on private datasets, (2) providing aggregate information like time and location, (3) determining trends in price and product availability, and (4) correlating trends with public policy data, such as food insecurity statistics. We validate the framework with real-world Farmer's Market datasets, demonstrating its efficacy through machine learning models trained on linked privacy-preserved data. The results support policymakers and researchers in addressing food insecurity and pricing issues. This work significantly contributes to digital agriculture by providing a secure method for integrating and analyzing data, driving advancements in agricultural technology and development.

Privacy-Preserving Optimal Parameter Selection for Collaborative Clustering

Jun 08, 2024

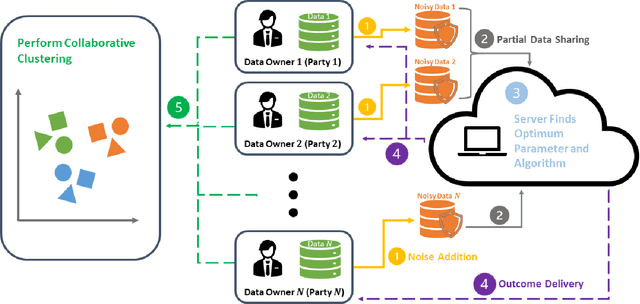

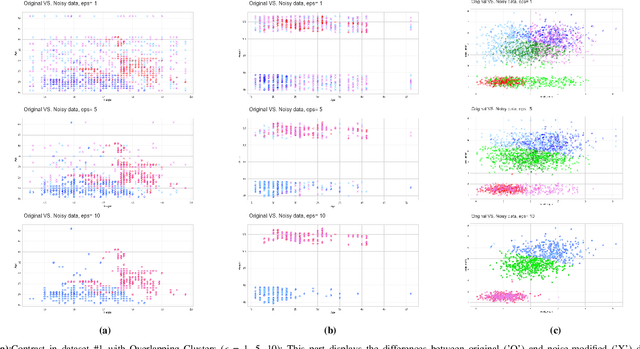

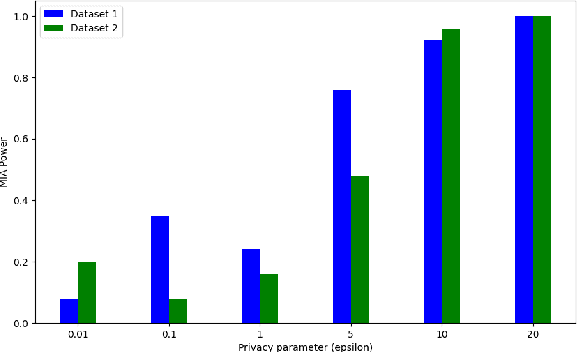

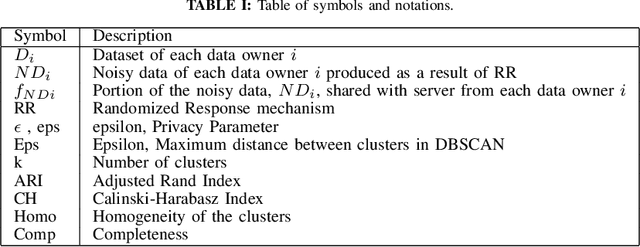

Abstract:This study investigates the optimal selection of parameters for collaborative clustering while ensuring data privacy. We focus on key clustering algorithms within a collaborative framework, where multiple data owners combine their data. A semi-trusted server assists in recommending the most suitable clustering algorithm and its parameters. Our findings indicate that the privacy parameter ($\epsilon$) minimally impacts the server's recommendations, but an increase in $\epsilon$ raises the risk of membership inference attacks, where sensitive information might be inferred. To mitigate these risks, we implement differential privacy techniques, particularly the Randomized Response mechanism, to add noise and protect data privacy. Our approach demonstrates that high-quality clustering can be achieved while maintaining data confidentiality, as evidenced by metrics such as the Adjusted Rand Index and Silhouette Score. This study contributes to privacy-aware data sharing, optimal algorithm and parameter selection, and effective communication between data owners and the server.
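
For readers unfamiliar with the Randomized Response mechanism mentioned above, here is a minimal sketch of its standard binary form. How the paper applies it to clustering inputs is not described in the abstract, so the bit-level setting and the names `randomize_bit` and `estimate_proportion` are assumptions for illustration only. Each bit is reported truthfully with probability $e^{\epsilon} / (1 + e^{\epsilon})$ and flipped otherwise, and the aggregate proportion is recovered with the usual debiasing step.

```python
import math
import random


def keep_probability(epsilon):
    """Probability of reporting the true bit under epsilon-local differential privacy."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_bit(bit, epsilon, rng):
    """Report the true bit with probability p, otherwise report its flip."""
    return bit if rng.random() < keep_probability(epsilon) else 1 - bit


def estimate_proportion(observed_fraction, epsilon):
    """Unbiased estimate of the true fraction of 1s from the noisy fraction.

    If pi is the true fraction, the expected noisy fraction is
    p * pi + (1 - p) * (1 - pi); solving for pi gives the formula below.
    """
    p = keep_probability(epsilon)
    return (observed_fraction - (1.0 - p)) / (2.0 * p - 1.0)
```

A larger $\epsilon$ pushes the keep probability toward 1, so reports carry less noise; this is consistent with the abstract's observation that increasing $\epsilon$ raises the risk of membership inference.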

Topic-based Watermarks for LLM-Generated Text

Apr 02, 2024

Abstract:Recent advancements in large language models (LLMs) have resulted in indistinguishable text outputs comparable to human-generated text. Watermarking algorithms are potential tools that offer a way to differentiate between LLM- and human-generated text by embedding detectable signatures within LLM-generated output. However, current watermarking schemes lack robustness against known attacks. In addition, they are impractical considering an LLM generates tens of thousands of text outputs per day and the watermarking algorithm needs to memorize each output it generates for the detection to work. In this work, focusing on the limitations of current watermarking schemes, we propose the concept of a "topic-based watermarking algorithm" for LLMs. The proposed algorithm determines how to generate tokens for the watermarked LLM output based on extracted topics of an input prompt or the output of a non-watermarked LLM. Inspired by previous work, we propose using a pair of lists (that are generated based on the specified extracted topic(s)) that specify certain tokens to be included or excluded while generating the watermarked output of the LLM. Using the proposed watermarking algorithm, we show the practicality of a watermark detection algorithm. Furthermore, we discuss a wide range of attacks that can emerge against watermarking algorithms for LLMs and the benefit of the proposed watermarking scheme for the feasibility of modeling a potential attacker considering its benefit vs. loss.
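
The pair-of-lists idea can be illustrated with a toy sketch. This is not the authors' algorithm: the hash-based split of tokens into an "include" list per topic, the greedy token choice, and the z-score detector are all simplifying assumptions, and the names `in_include_list`, `choose_token`, and `detection_z_score` are hypothetical. What it shows is why detection needs only the topic and the text, not a stored copy of every generated output.

```python
import hashlib
import math


def in_include_list(token, topic, fraction=0.5):
    """Deterministically assign a token to the topic's include list.

    Assumption: lists are derived by hashing (topic, token); roughly
    `fraction` of any vocabulary lands on the include list.
    """
    digest = hashlib.sha256(f"{topic}:{token}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < fraction


def choose_token(candidates, topic):
    """Pick the most preferred candidate that is on the include list.

    `candidates` is ordered by model preference; fall back to the top
    candidate when none of them is on the include list.
    """
    for token in candidates:
        if in_include_list(token, topic):
            return token
    return candidates[0]


def detection_z_score(tokens, topic, fraction=0.5):
    """Standardized excess of include-list tokens over the unwatermarked expectation."""
    n = len(tokens)
    if n == 0:
        raise ValueError("cannot score an empty token sequence")
    hits = sum(in_include_list(t, topic, fraction) for t in tokens)
    return (hits - fraction * n) / math.sqrt(n * fraction * (1.0 - fraction))
```

Text generated with `choose_token` contains far more include-list tokens than chance would produce, so its z-score is large, while ordinary text scores near zero. A detector would flag text whose score exceeds a chosen threshold.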

Protecting Sensitive Data through Federated Co-Training

Oct 09, 2023

Abstract:In many critical applications, sensitive data is inherently distributed. Federated learning trains a model collaboratively by aggregating the parameters of locally trained models. This avoids exposing sensitive local data. It is possible, though, to infer sensitive data from the shared model parameters. At the same time, many types of machine learning models do not lend themselves to parameter aggregation, such as decision trees or rule ensembles. It has been observed that in many applications, in particular healthcare, large unlabeled datasets are publicly available. They can be used to exchange information between clients by distributed distillation, i.e., co-regularizing local training via the discrepancy between the soft predictions of each local client on the unlabeled dataset. This, however, still discloses private information and restricts the types of models to those trainable via gradient-based methods. We propose to go one step further and use a form of federated co-training, where local hard labels on the public unlabeled datasets are shared and aggregated into a consensus label. This consensus label can be used for local training by any supervised machine learning model. We show that this federated co-training approach achieves a model quality comparable to both federated learning and distributed distillation on a set of benchmark datasets and real-world medical datasets. It improves privacy over both approaches, protecting against common membership inference attacks to the highest degree. Furthermore, we show that federated co-training can collaboratively train interpretable models, such as decision trees and rule ensembles, achieving a model quality comparable to centralized training.
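
The aggregation step described above can be sketched in a few lines. The abstract only says hard labels are "aggregated into a consensus label", so the majority vote and the smallest-label tie-break used here are assumptions, as is the function name `consensus_labels`. Each client sends one hard label per public unlabeled example, and the server returns one consensus label per example.

```python
from collections import Counter


def consensus_labels(client_labels):
    """Majority vote over clients' hard labels on a shared public dataset.

    `client_labels[k][i]` is client k's predicted label for public example i.
    Assumption: ties are broken in favor of the smallest label value.
    """
    n_points = len(client_labels[0])
    if any(len(labels) != n_points for labels in client_labels):
        raise ValueError("every client must label the same public examples")
    consensus = []
    for i in range(n_points):
        votes = Counter(labels[i] for labels in client_labels)
        top = max(votes.values())
        consensus.append(min(label for label, count in votes.items() if count == top))
    return consensus
```

Each client can then add the public examples with their consensus labels to its local training set and retrain any supervised model, including decision trees or rule ensembles, since only labels rather than parameters or gradients are exchanged.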
