Emad Boctor

OpenPros: A Large-Scale Dataset for Limited View Prostate Ultrasound Computed Tomography

May 18, 2025

Abstract: Prostate cancer is one of the most common and lethal cancers among men, making its early detection critically important. Although ultrasound imaging is more accessible and cost-effective than MRI, traditional transrectal ultrasound methods suffer from low sensitivity, especially in detecting anteriorly located tumors. Ultrasound computed tomography (USCT) provides quantitative tissue characterization, but its clinical implementation faces significant challenges, particularly under the anatomically constrained, limited-angle acquisition conditions specific to prostate imaging. To address these unmet needs, we introduce OpenPros, the first large-scale benchmark dataset explicitly developed for limited-view prostate USCT. Our dataset includes over 280,000 paired samples of realistic 2D speed-of-sound (SOS) phantoms and corresponding ultrasound full-waveform data, generated from anatomically accurate 3D digital prostate models that are derived from real clinical MRI/CT scans and ex vivo ultrasound measurements and annotated by medical experts. Simulations are conducted under clinically realistic configurations using advanced finite-difference time-domain and Runge-Kutta acoustic wave solvers, both provided as open-source components. Through comprehensive baseline experiments, we demonstrate that state-of-the-art deep learning methods surpass traditional physics-based approaches in both inference efficiency and reconstruction accuracy. Nevertheless, current deep learning models still fall short of delivering clinically acceptable high-resolution images with sufficient accuracy. By publicly releasing OpenPros, we aim to encourage the development of advanced machine learning algorithms capable of bridging this performance gap and producing clinically usable, high-resolution, and highly accurate prostate ultrasound images. The dataset is publicly accessible at https://open-pros.github.io/.
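To give a feel for how full-waveform data can be generated from a 2D SOS map, here is a minimal sketch of a finite-difference time-domain scheme. It assumes the constant-density scalar wave equation p_tt = c^2 (p_xx + p_zz), a single Ricker point source, and pressure-release (p = 0) reflecting edges with no absorbing layer. The names `simulate_2d` and `ricker` are hypothetical, and this is not the OpenPros solver. The released solvers may use different formulations, such as staggered grids, absorbing boundaries, or the Runge-Kutta time stepping mentioned above.

```python
import numpy as np


def ricker(t, f0):
    """Ricker wavelet with peak frequency f0 (Hz), delayed by 1/f0 seconds."""
    arg = (np.pi * f0 * (t - 1.0 / f0)) ** 2
    return (1.0 - 2.0 * arg) * np.exp(-arg)


def simulate_2d(sos, dx, dt, nt, src, receivers, f0):
    """Explicit leapfrog FDTD for p_tt = c^2 (p_xx + p_zz) (assumed model).

    sos: (nz, nx) speed-of-sound map in m/s on a square grid of spacing dx (m)
    src: (iz, ix) grid index of a point source driven by a Ricker wavelet
    receivers: list of (iz, ix) grid indices where pressure is recorded
    Returns an (nt, len(receivers)) array of pressure traces.
    """
    # Stability (CFL) limit of the 5-point Laplacian scheme in 2D
    cfl = sos.max() * dt / dx
    if cfl > 1.0 / np.sqrt(2.0):
        raise ValueError(f"CFL number {cfl:.3f} exceeds 1/sqrt(2)")
    nz, nx = sos.shape
    p_prev = np.zeros((nz, nx))
    p_curr = np.zeros((nz, nx))
    coef = (sos * dt / dx) ** 2  # per-pixel (c dt / dx)^2
    wavelet = ricker(np.arange(nt) * dt, f0)
    rz = np.array([r[0] for r in receivers])
    rx = np.array([r[1] for r in receivers])
    traces = np.zeros((nt, len(receivers)))
    for n in range(nt):
        # 5-point Laplacian (scaled by dx^2) on interior points only
        lap = np.zeros_like(p_curr)
        lap[1:-1, 1:-1] = (p_curr[2:, 1:-1] + p_curr[:-2, 1:-1]
                           + p_curr[1:-1, 2:] + p_curr[1:-1, :-2]
                           - 4.0 * p_curr[1:-1, 1:-1])
        # Second-order central difference in time
        p_next = 2.0 * p_curr - p_prev + coef * lap
        p_next[src] += wavelet[n] * dt ** 2  # additive point source
        # Pressure-release (p = 0) edges: reflecting, not absorbing
        p_next[0, :] = 0.0
        p_next[-1, :] = 0.0
        p_next[:, 0] = 0.0
        p_next[:, -1] = 0.0
        p_prev, p_curr = p_curr, p_next
        traces[n] = p_curr[rz, rx]
    return traces
```

For example, `simulate_2d(np.full((241, 241), 1500.0), 1e-4, 0.5e-4 / 1500.0, 300, (120, 120), [(120, 160), (120, 200)], 5e5)` models a homogeneous 1500 m/s medium at a CFL number of 0.5, and the farther receiver records a weaker, later arrival. A limited-view geometry would correspond to restricting the source and receiver positions to the angular sector that the anatomy allows.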

Feature-aggregated spatiotemporal spine surface estimation for wearable patch ultrasound volumetric imaging

Nov 11, 2022

Abstract: Clear identification of bone structures is crucial for ultrasound-guided lumbar interventions, but it can be challenging due to the complex, self-shadowing shapes of the vertebral anatomy and the extensive background speckle noise from the surrounding soft tissue. We therefore propose a patch-like wearable ultrasound solution that captures the reflective bone surfaces from multiple imaging angles and creates 3D bone representations for interventional guidance. In this work, we present our method for estimating vertebral bone surfaces with a spatiotemporal U-Net architecture that learns from the B-mode image and aggregated feature maps of hand-crafted filters. The method is evaluated on spine phantom image data collected by our proposed miniaturized wearable "patch" ultrasound device, and the results show a significant improvement over the baseline method, with promising accuracy. Equipped with this surface estimation framework, our wearable ultrasound system can potentially provide intuitive and accurate interventional guidance for clinicians in an augmented reality setting.
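The abstract does not specify which hand-crafted filters are aggregated. The hypothetical sketch below therefore uses two common bone-surface cues: a bright reflector followed by a dark acoustic shadow, and an intensity drop along depth. It stacks these responses with each B-mode frame into a (T, C, H, W) tensor that a spatiotemporal network such as a U-Net could take as input. The function names and the choice of filters are assumptions for illustration, not the authors' pipeline.

```python
import numpy as np


def shadow_feature(img):
    """1 - mean intensity of the pixels below each pixel in the same column.

    Hypothetical cue: a bone surface is followed by an acoustic shadow, so
    pixels on it have a dark region beneath them and score close to 1.
    img: (H, W) B-mode image in [0, 1], row 0 = shallowest depth.
    """
    img = np.asarray(img, dtype=float)
    h = img.shape[0]
    rev_cum = np.cumsum(img[::-1], axis=0)[::-1]  # sum of rows r..H-1
    below_sum = rev_cum - img                      # sum of rows r+1..H-1
    n_below = (h - 1 - np.arange(h))[:, None]      # pixel count below row r
    # The deepest row has nothing below it; its mean is left at 0
    mean_below = np.divide(below_sum, n_below,
                           out=np.zeros_like(img), where=n_below > 0)
    return 1.0 - mean_below


def depth_gradient(img):
    """Positive where intensity drops with depth (bright-over-dark edges)."""
    img = np.asarray(img, dtype=float)
    g = np.zeros_like(img)
    g[:-1] = img[:-1] - img[1:]
    return np.clip(g, 0.0, None)


def aggregate_features(frames):
    """Stack each B-mode frame with hypothetical hand-crafted filter maps.

    frames: (T, H, W) sequence of B-mode images in [0, 1].
    Returns (T, 4, H, W) channels: [B-mode, shadow, depth gradient,
    intensity * shadow].
    """
    frames = np.asarray(frames, dtype=float)
    out = []
    for img in frames:
        shadow = shadow_feature(img)
        out.append(np.stack([img, shadow, depth_gradient(img), img * shadow]))
    return np.stack(out)
```

The intensity-times-shadow channel is a simple heuristic that peaks at bright pixels with dark tissue beneath them. Keeping the raw B-mode frame as one channel lets a learned network decide how much to trust each hand-crafted cue.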

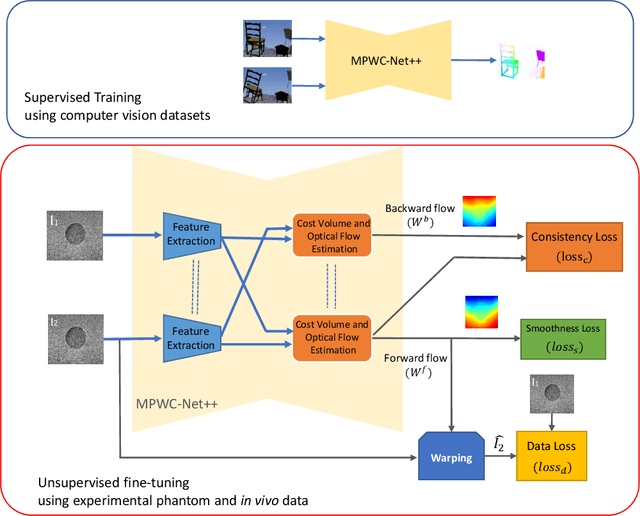

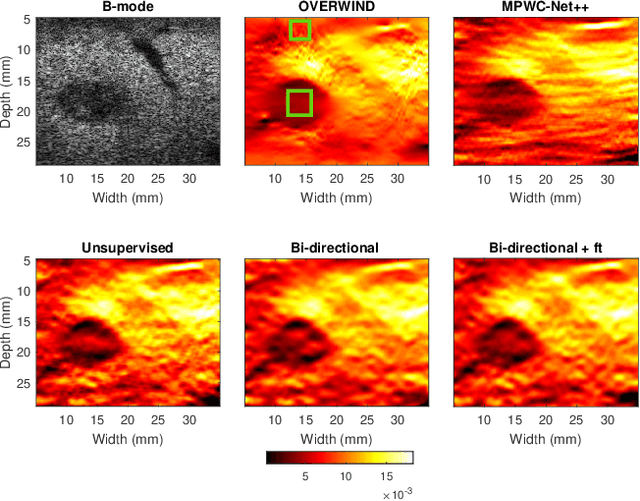

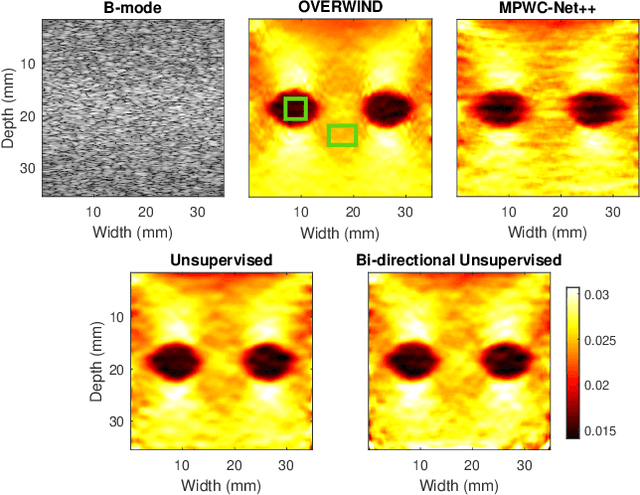

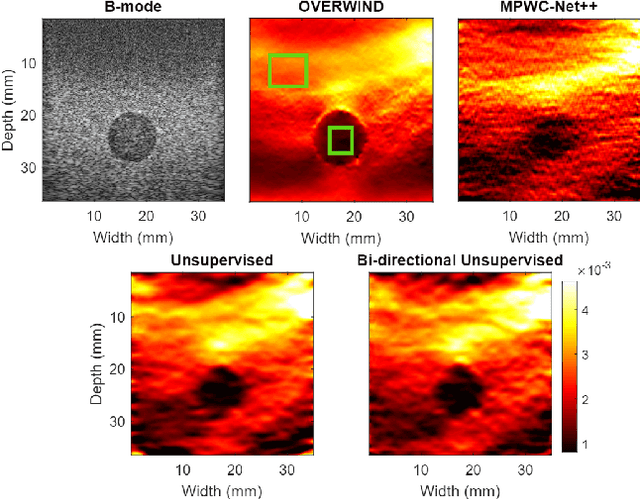

Bi-Directional Semi-Supervised Training of Convolutional Neural Networks for Ultrasound Elastography Displacement Estimation

Jan 31, 2022

Abstract: The performance of ultrasound elastography (USE) heavily depends on the accuracy of displacement estimation. Recently, Convolutional Neural Networks (CNNs) have shown promising performance in optical flow estimation and have been adopted for USE displacement estimation. Networks trained on computer vision images are not optimized for USE displacement estimation, since there is a large gap between computer vision images and high-frequency Radio Frequency (RF) ultrasound data. Many researchers have tried to adapt optical flow CNNs to USE by applying transfer learning. However, the ground truth displacement in real ultrasound data is unknown, and simulated data exhibits a domain shift compared to real data and is also computationally expensive to generate. To resolve this issue, semi-supervised methods have been proposed in which networks pre-trained on computer vision images are fine-tuned using real ultrasound data. In this paper, we employ a semi-supervised method that exploits the first- and second-order derivatives of the displacement field for regularization. We also modify the network structure to estimate both forward and backward displacements, and propose using the consistency between the forward and backward strains as an additional regularizer to further enhance performance. We validate our method on several experimental phantom and in vivo datasets. We also show that the network fine-tuned by our proposed method on experimental phantom data performs on in vivo data comparably to the network fine-tuned on in vivo data. Our results also show that the proposed method outperforms current deep learning methods and is comparable to computationally expensive optimization-based algorithms.
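The regularized objective described in this abstract can be sketched as a loss function. This is a simplified NumPy sketch under several assumptions:

- displacement is axial only;
- the data term is an L1 difference between one RF frame and the other frame warped by the estimated displacement;
- derivatives are finite differences with L1 penalties;
- the backward strain is approximately the negative of the forward strain, compared on the same grid without warping, which holds only for small strains.

The weights `w1`, `w2`, `wc` and all function names are hypothetical, and the paper's exact norms and weighting may differ. During fine-tuning, these terms would be computed in an autodiff framework so that gradients reach the network.

```python
import numpy as np


def warp_axial(img, disp):
    """Sample img at (z + disp[z, x], x) by linear interpolation along z."""
    h, w = img.shape
    z = np.clip(np.arange(h, dtype=float)[:, None] + disp, 0.0, h - 1.0)
    z0 = np.floor(z).astype(int)
    z1 = np.minimum(z0 + 1, h - 1)
    frac = z - z0
    cols = np.arange(w)[None, :]
    return (1.0 - frac) * img[z0, cols] + frac * img[z1, cols]


def smoothness(d):
    """L1 penalties on first- and second-order finite differences of d."""
    first = np.abs(np.diff(d, axis=0)).mean() + np.abs(np.diff(d, axis=1)).mean()
    second = (np.abs(np.diff(d, n=2, axis=0)).mean()
              + np.abs(np.diff(d, n=2, axis=1)).mean())
    return first, second


def strain_consistency(d_fwd, d_bwd):
    """L1 penalty on forward + backward axial strain (assumed ~0, small strain)."""
    eps_f = np.diff(d_fwd, axis=0)  # axial strain = d(displacement)/dz
    eps_b = np.diff(d_bwd, axis=0)
    return np.abs(eps_f + eps_b).mean()


def semi_supervised_loss(pre, post, d_fwd, d_bwd, w1=0.1, w2=0.1, wc=0.1):
    """Hypothetical total loss; weights w1, w2, wc are illustrative only."""
    # Assumed convention: pre(z) ~ post(z + d_fwd) and post(z) ~ pre(z + d_bwd)
    data = (np.abs(pre - warp_axial(post, d_fwd)).mean()
            + np.abs(post - warp_axial(pre, d_bwd)).mean())
    f_fwd, s_fwd = smoothness(d_fwd)
    f_bwd, s_bwd = smoothness(d_bwd)
    return (data + w1 * (f_fwd + f_bwd) + w2 * (s_fwd + s_bwd)
            + wc * strain_consistency(d_fwd, d_bwd))
```

The second-order term is zero for a uniform strain (a linear displacement ramp along depth), so it penalizes only curvature in the displacement field. The strain-consistency term ties the two network outputs together without requiring any ground-truth displacement.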
