Edwin Goh

ShadowNav: Autonomous Global Localization for Lunar Navigation in Darkness

May 06, 2024

Abstract: The ability to determine the pose of a rover in an inertial frame autonomously is a crucial capability necessary for the next generation of surface rover missions on other planetary bodies. Currently, most on-going rover missions utilize ground-in-the-loop interventions to manually correct for drift in the pose estimate and this human supervision bottlenecks the distance over which rovers can operate autonomously and carry out scientific measurements. In this paper, we present ShadowNav, an autonomous approach for global localization on the Moon with an emphasis on driving in darkness and at nighttime. Our approach uses the leading edge of Lunar craters as landmarks and a particle filtering approach is used to associate detected craters with known ones on an offboard map. We discuss the key design decisions in developing the ShadowNav framework for use with a Lunar rover concept equipped with a stereo camera and an external illumination source. Finally, we demonstrate the efficacy of our proposed approach in both a Lunar simulation environment and on data collected during a field test at Cinder Lakes, Arizona.
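The particle-filter idea mentioned in the abstract can be illustrated with a minimal measurement-update sketch. This is not the ShadowNav implementation: the function name `update_weights`, the 2D position-only particles, the Gaussian error model, and the assumption that the detected crater has already been matched to a map crater are all hypothetical simplifications.

```python
import math

def update_weights(particles, weights, observed_offset, crater_xy, sigma=1.0):
    """Reweight 2D position particles from one crater detection.

    particles       -- list of (x, y) rover position hypotheses in the map frame
    weights         -- current particle weights (same length as particles)
    observed_offset -- (dx, dy) of the detected crater edge relative to the rover
    crater_xy       -- (x, y) of the matched crater in the map (assumed known)
    sigma           -- assumed standard deviation of the detection error
    """
    updated = []
    for (px, py), w in zip(particles, weights):
        # Mismatch between where this particle predicts the crater and where it was seen
        ex = crater_xy[0] - px - observed_offset[0]
        ey = crater_xy[1] - py - observed_offset[1]
        likelihood = math.exp(-(ex * ex + ey * ey) / (2.0 * sigma ** 2))
        updated.append(w * likelihood)
    total = sum(updated)
    # Normalize so the weights again sum to one
    return [w / total for w in updated]
```

Particles whose predicted crater position agrees with the detection gain weight; a full filter would also propagate particles with odometry and resample them, which this sketch omits.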

Improving Contrastive Learning on Visually Homogeneous Mars Rover Images

Oct 17, 2022

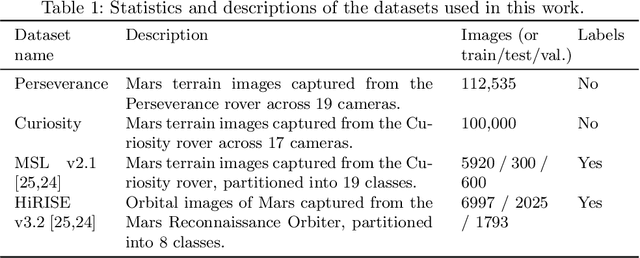

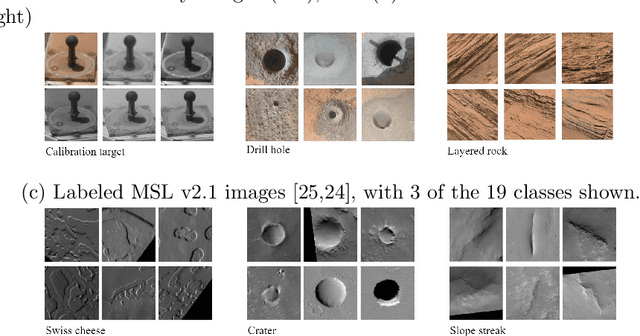

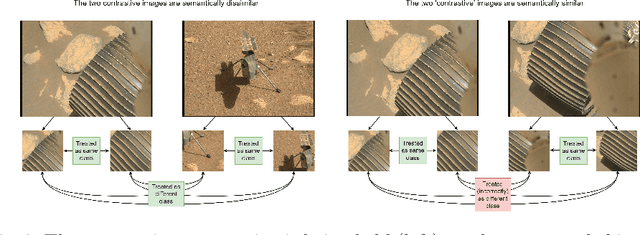

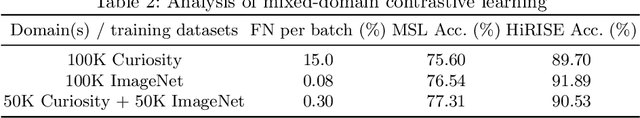

Abstract: Contrastive learning has recently demonstrated superior performance to supervised learning, despite requiring no training labels. We explore how contrastive learning can be applied to hundreds of thousands of unlabeled Mars terrain images, collected from the Mars rovers Curiosity and Perseverance, and from the Mars Reconnaissance Orbiter. Such methods are appealing since the vast majority of Mars images are unlabeled, as manual annotation is labor intensive and requires extensive domain knowledge. Contrastive learning, however, assumes that any given pair of distinct images contains distinct semantic content. This is an issue for Mars image datasets, as any two Mars images are far more likely to be semantically similar due to the lack of visual diversity on the planet's surface. Assuming that pairs of images are in visual contrast when they are in fact not results in pairs that are falsely considered as negatives, impacting training performance. In this study, we propose two approaches to resolve this: 1) an unsupervised deep clustering step on the Mars datasets, which identifies clusters of images containing similar semantic content and corrects false negative errors during training, and 2) a simple approach which mixes data from different domains to increase the visual diversity of the total training dataset. Both approaches reduce the rate of false negative pairs, thus minimizing the rate at which the model is incorrectly penalized during contrastive training. These modified approaches remain fully unsupervised end-to-end. To evaluate their performance, we add a single linear layer trained to generate class predictions based on these contrastively-learned features and demonstrate increased performance compared to supervised models, observing an improvement in classification accuracy of 3.06% using only 10% of the labeled data.
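To make the false-negative problem concrete, the following is a minimal sketch of an InfoNCE-style contrastive loss in which samples sharing a cluster label with the anchor are dropped from the negatives. The abstract does not specify how the clustering step corrects false negatives, so this masking rule, the function name, and the `clusters` pseudo-labels are assumptions for illustration only.

```python
import math

def info_nce_with_cluster_mask(sim, positives, clusters, temperature=0.1):
    """Mean InfoNCE loss with same-cluster samples removed from the negatives.

    sim       -- square list of lists of pairwise similarities
    positives -- positives[i] is the index of the augmented view paired with i
    clusters  -- clusters[i] is a hypothetical pseudo-label from a clustering step
    """
    n = len(sim)
    total = 0.0
    for i in range(n):
        pos = positives[i]
        denom = 0.0
        for j in range(n):
            if j == i:
                continue
            # Keep the true positive; skip likely false negatives (same cluster as anchor)
            if j != pos and clusters[j] == clusters[i]:
                continue
            denom += math.exp(sim[i][j] / temperature)
        total += -math.log(math.exp(sim[i][pos] / temperature) / denom)
    return total / n
```

Giving every sample its own cluster label recovers the unmasked loss. When visually similar images share a label, they no longer push the anchor's loss up.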

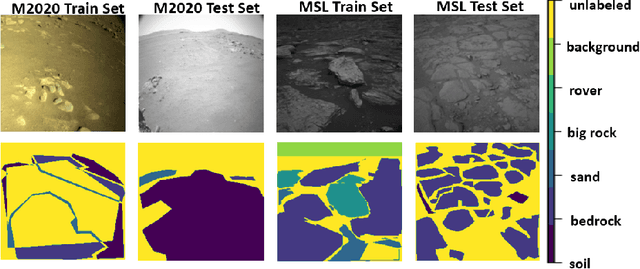

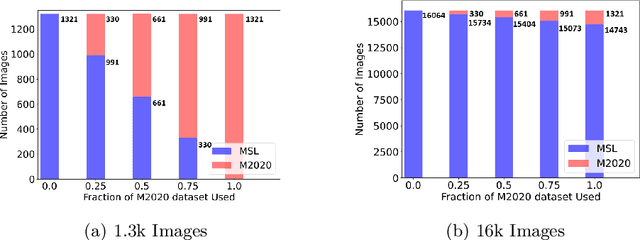

Mixed-domain Training Improves Multi-Mission Terrain Segmentation

Sep 27, 2022

Abstract: Planetary rover missions must utilize machine learning-based perception to continue extra-terrestrial exploration with little to no human presence. Martian terrain segmentation has been critical for rover navigation and hazard avoidance to perform further exploratory tasks, e.g. soil sample collection and searching for organic compounds. Current Martian terrain segmentation models require a large amount of labeled data to achieve acceptable performance, and also require retraining for deployment across different domains, i.e. different rover missions, or different tasks, i.e. geological identification and navigation. This research proposes a semi-supervised learning approach that leverages unsupervised contrastive pretraining of a backbone for multi-mission semantic segmentation of Martian surfaces. This model will expand upon the current Martian segmentation capabilities by being able to deploy across different Martian rover missions for terrain navigation, by utilizing a mixed-domain training set that ensures feature diversity. Evaluation results using average pixel accuracy show that a semi-supervised mixed-domain approach improves accuracy compared to single-domain training and supervised training, reaching an accuracy of 97% for the Mars Science Laboratory's Curiosity Rover and 79.6% for the Mars 2020 Perseverance Rover. Further, applying different weighting methods to the loss functions improved the model's correct predictions for minority or rare classes by over 30%, as measured by recall, compared to standard cross-entropy loss. These results can inform future multi-mission and multi-task semantic segmentation for rover missions in a data-efficient manner.
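The effect of loss weighting on rare classes can be sketched with a class-weighted cross-entropy. The abstract does not say which weighting methods were used; inverse-frequency weighting is assumed here as one common choice, and both function names are hypothetical.

```python
import math
from collections import Counter

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by total / (num_classes * count); absent classes get 0."""
    counts = Counter(labels)
    total = len(labels)
    return [total / (num_classes * counts[c]) if counts[c] else 0.0
            for c in range(num_classes)]

def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class), normalized by the sum of weights."""
    num = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den
```

With uniform weights this reduces to standard cross-entropy. With inverse-frequency weights, errors on a rare class contribute more to the loss, which is the mechanism by which weighting can raise minority-class recall.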

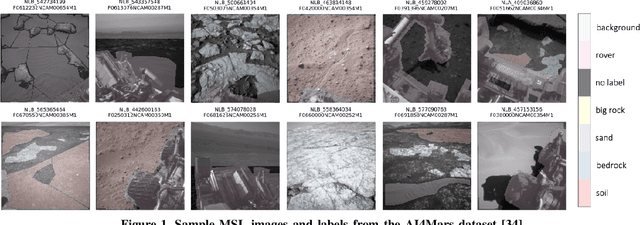

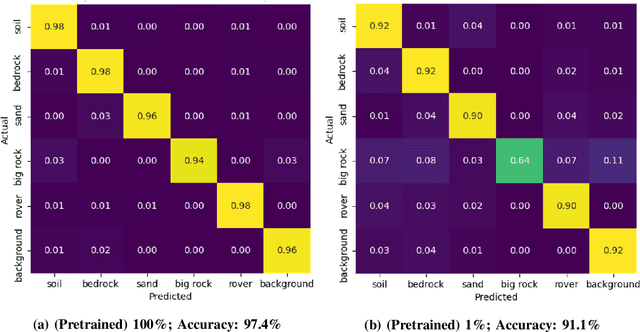

Mars Terrain Segmentation with Less Labels

Feb 01, 2022

Abstract: Planetary rover systems need to perform terrain segmentation to identify drivable areas as well as identify specific types of soil for sample collection. The latest Martian terrain segmentation methods rely on supervised learning, which is very data hungry and difficult to train where only a small number of labeled samples are available. Moreover, the semantic classes are defined differently for different applications (e.g., rover traversal vs. geological) and as a result the network has to be trained from scratch each time, which is an inefficient use of resources. This research proposes a semi-supervised learning framework for Mars terrain segmentation where a deep segmentation network trained in an unsupervised manner on unlabeled images is transferred to the task of terrain segmentation trained on few labeled images. The network incorporates a backbone module which is trained using a contrastive loss function and an output atrous convolution module which is trained using a pixel-wise cross-entropy loss function. Evaluation results using the metric of segmentation accuracy show that the proposed method with contrastive pretraining outperforms plain supervised learning by 2%-10%. Moreover, the proposed model is able to achieve a segmentation accuracy of 91.1% using only 161 training images (1% of the original dataset) compared to 81.9% with plain supervised learning.

Scheduling the NASA Deep Space Network with Deep Reinforcement Learning

Feb 09, 2021

Abstract: With three complexes spread evenly across the Earth, NASA's Deep Space Network (DSN) is the primary means of communications as well as a significant scientific instrument for dozens of active missions around the world. A rapidly rising number of spacecraft and increasingly complex scientific instruments with higher bandwidth requirements have resulted in demand that exceeds the network's capacity across its 12 antennae. The existing DSN scheduling process operates on a rolling weekly basis and is time-consuming; for a given week, generation of the final baseline schedule of spacecraft tracking passes takes roughly 5 months from the initial requirements submission deadline, with several weeks of peer-to-peer negotiations in between. This paper proposes a deep reinforcement learning (RL) approach to generate candidate DSN schedules from mission requests and spacecraft ephemeris data with demonstrated capability to address real-world operational constraints. A deep RL agent is developed that takes mission requests for a given week as input, and interacts with a DSN scheduling environment to allocate tracks such that its reward signal is maximized. A comparison is made between an agent trained using Proximal Policy Optimization and its random, untrained counterpart. The results represent a proof-of-concept that, given a well-shaped reward signal, a deep RL agent can learn the complex heuristics used by experts to schedule the DSN. A trained agent can potentially be used to generate candidate schedules to bootstrap the scheduling process and thus reduce the turnaround cycle for DSN scheduling.
