Douglas McLelland

Low-power Ship Detection in Satellite Images Using Neuromorphic Hardware

Jun 17, 2024

Abstract: Transmitting Earth observation image data from satellites to ground stations incurs significant costs in terms of power and bandwidth. For maritime ship detection, on-board data processing can identify ships and reduce the amount of data sent to the ground. However, most images captured on board contain only bodies of water or land, with the Airbus Ship Detection dataset showing only 22.1% of images containing ships. We designed a low-power, two-stage system to optimize performance instead of relying on a single complex model. The first stage is a lightweight binary classifier that acts as a gating mechanism to detect the presence of ships. This stage runs on BrainChip's Akida 1.0, which leverages activation sparsity to minimize dynamic power consumption. The second stage employs a YOLOv5 object detection model to identify the location and size of ships. This approach achieves a mean Average Precision (mAP) of 76.9%, which increases to 79.3% when evaluated solely on images containing ships, by reducing false positives. Additionally, we calculated that evaluating the full validation set on an NVIDIA Jetson Nano device requires 111.4 kJ of energy. Our two-stage system reduces this energy consumption to 27.3 kJ, which is less than a fourth, demonstrating the efficiency of a heterogeneous computing system.
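The gating idea described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: `two_stage_detect`, `has_ship`, `detect_ships`, and the `Box` format are hypothetical names introduced here, and the classifier and detector are passed in as plain callables rather than real Akida or YOLOv5 models. The only figures taken from the abstract are the two energy values.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical bounding-box format (x, y, width, height); an assumption for this sketch.
Box = Tuple[float, float, float, float]


def two_stage_detect(
    images: Sequence[object],
    has_ship: Callable[[object], bool],
    detect_ships: Callable[[object], List[Box]],
) -> Tuple[List[List[Box]], int]:
    """Run the expensive detector only on images that the cheap gate flags.

    Returns one list of boxes per image and the number of detector calls made.
    """
    results: List[List[Box]] = []
    detector_calls = 0
    for image in images:
        if has_ship(image):
            # Stage 2: object detection, reached only when the gate fires.
            results.append(detect_ships(image))
            detector_calls += 1
        else:
            # Gate says "no ship": skip the detector entirely.
            results.append([])
    return results, detector_calls


# Energy figures reported in the abstract (kJ, full validation set).
JETSON_NANO_KJ = 111.4
TWO_STAGE_KJ = 27.3

# Fraction of the reported Jetson Nano energy used by the two-stage system.
energy_ratio = TWO_STAGE_KJ / JETSON_NANO_KJ  # roughly 0.245, i.e. under one fourth
```

Because the detector is skipped whenever the gate reports no ship, the number of detector calls scales with the share of images flagged by the classifier rather than with the total number of images, which is consistent with the reported energy ratio of roughly 0.245.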

Event-Based Eye Tracking. AIS 2024 Challenge Survey

Apr 17, 2024

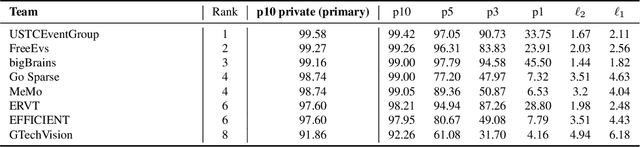

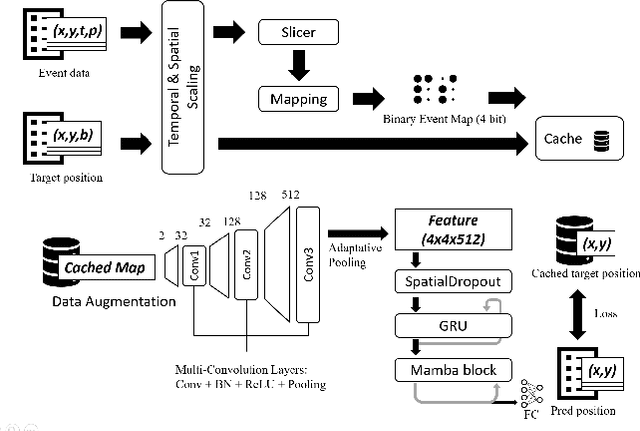

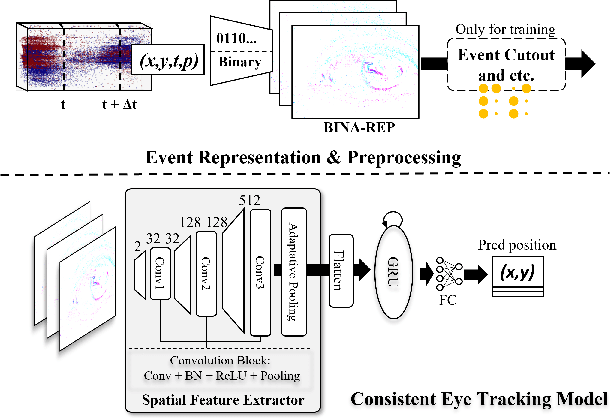

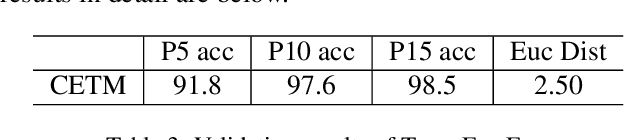

Abstract: This survey reviews the AIS 2024 Event-Based Eye Tracking (EET) Challenge. The challenge task is to process eye movements recorded with event cameras and predict the pupil center of the eye. The challenge emphasizes efficient eye tracking with event cameras, aiming for a good trade-off between task accuracy and efficiency. During the challenge period, 38 participants registered for the Kaggle competition, and 8 teams submitted a challenge factsheet. The novel and diverse methods from the submitted factsheets are reviewed and analyzed in this survey to advance future event-based eye tracking research.

A Lightweight Spatiotemporal Network for Online Eye Tracking with Event Camera

Apr 13, 2024

Abstract: Event-based data are commonly encountered in edge computing environments where efficiency and low latency are critical. To interface with such data and leverage their rich temporal features, we propose a causal spatiotemporal convolutional network. This solution targets efficient implementation on edge-appropriate hardware with limited resources in three ways: 1) it deliberately uses a simple architecture and set of operations (convolutions, ReLU activations); 2) it can be configured to perform online inference efficiently via buffering of layer outputs; 3) it can achieve more than 90% activation sparsity through regularization during training, enabling very significant efficiency gains on event-based processors. In addition, we propose a general affine augmentation strategy acting directly on the events, which alleviates the problem of dataset scarcity for event-based systems. We apply our model to the AIS 2024 event-based eye tracking challenge, reaching a score of 0.9916 p10 accuracy on the Kaggle private test set.
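The second point, online inference via buffering, can be illustrated with a deliberately simplified sketch. It is not the authors' network: it reduces the idea to a single scalar causal temporal convolution, and `causal_conv_offline` and `BufferedCausalConv` are hypothetical names introduced here. The point it shows is that a causal layer only needs its last `k` inputs, so keeping them in a fixed-size buffer yields the same outputs one step at a time as processing the whole sequence at once.

```python
from collections import deque
from typing import List, Sequence


def causal_conv_offline(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Causal 1D temporal convolution over a full sequence.

    The input is zero-padded on the left only, so output t depends
    solely on inputs at times t-k+1 .. t (no look-ahead).
    """
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(x)
    return [
        sum(w * v for w, v in zip(kernel, padded[t:t + k]))
        for t in range(len(x))
    ]


class BufferedCausalConv:
    """Online version: keeps the last k inputs in a FIFO buffer."""

    def __init__(self, kernel: Sequence[float]) -> None:
        self.kernel = list(kernel)
        # Start with zeros, matching the left padding of the offline version.
        self.buffer = deque([0.0] * len(self.kernel), maxlen=len(self.kernel))

    def step(self, value: float) -> float:
        # Appending to a full deque drops the oldest sample automatically.
        self.buffer.append(value)
        return sum(w * v for w, v in zip(self.kernel, self.buffer))


# Usage: feed samples one at a time and compare with the offline result.
signal = [1.0, 2.0, 3.0, 4.0, 5.0]
weights = [0.5, -1.0, 2.0]
layer = BufferedCausalConv(weights)
online = [layer.step(v) for v in signal]
offline = causal_conv_offline(signal, weights)
```

In this sketch each new sample costs one kernel-sized dot product, and earlier inputs are never reprocessed. A real spatiotemporal layer would buffer feature maps instead of scalars, but the bookkeeping is the same.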
