Don Towsley

Learning Best Paths in Quantum Networks

Jun 14, 2025

Abstract:Quantum networks (QNs) transmit delicate quantum information across noisy quantum channels. Crucial applications, like quantum key distribution (QKD) and distributed quantum computation (DQC), rely on efficient quantum information transmission. Learning the best path between a pair of end nodes in a QN is key to enhancing such applications. This paper addresses learning the best path in a QN in the online learning setting. We explore two types of feedback: "link-level" and "path-level". Link-level feedback pertains to QNs with advanced quantum switches that enable link-level benchmarking. Path-level feedback, on the other hand, is associated with basic quantum switches that permit only path-level benchmarking. We introduce two online learning algorithms, BeQuP-Link and BeQuP-Path, to identify the best path using link-level and path-level feedback, respectively. To learn the best path, BeQuP-Link benchmarks the critical links dynamically, while BeQuP-Path relies on a subroutine, transferring path-level observations to estimate link-level parameters in a batch manner. We analyze the quantum resource complexity of these algorithms and demonstrate that both can efficiently and, with high probability, determine the best path. Finally, we perform NetSquid-based simulations and validate that both algorithms accurately and efficiently identify the best path.

Quickest Change Detection with Confusing Change

May 01, 2024

Abstract:In the problem of quickest change detection (QCD), a change occurs at some unknown time in the distribution of a sequence of independent observations. This work studies a QCD problem where the change is either a bad change, which we aim to detect, or a confusing change, which is not of our interest. Our objective is to detect a bad change as quickly as possible while avoiding raising a false alarm for pre-change or a confusing change. We identify a specific set of pre-change, bad change, and confusing change distributions that pose challenges beyond the capabilities of standard Cumulative Sum (CuSum) procedures. Proposing novel CuSum-based detection procedures, S-CuSum and J-CuSum, leveraging two CuSum statistics, we offer solutions applicable across all kinds of pre-change, bad change, and confusing change distributions. For both S-CuSum and J-CuSum, we provide analytical performance guarantees and validate them by numerical results. Furthermore, both procedures are computationally efficient as they only require simple recursive updates.
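The "simple recursive updates" mentioned above can be illustrated with the classical single-statistic CuSum recursion. The sketch below is not S-CuSum or J-CuSum; it assumes a Gaussian mean shift from `mu0` to `mu1` with unit variance, and the function name, parameters, and threshold value are hypothetical choices made for illustration.

```python
def cusum_alarm_time(observations, mu0, mu1, threshold):
    """Return the 1-based index of the first CuSum alarm, or None.

    Assumes unit-variance Gaussian observations whose mean shifts
    from mu0 (pre-change) to mu1 (post-change). Illustrative only.
    """
    stat = 0.0
    for n, x in enumerate(observations, start=1):
        # Log-likelihood ratio of N(mu1, 1) against N(mu0, 1) at x.
        llr = (mu1 - mu0) * x - (mu1 ** 2 - mu0 ** 2) / 2.0
        # Recursive update: accumulate evidence, reset at zero.
        stat = max(0.0, stat + llr)
        if stat >= threshold:
            return n
    return None


# Five pre-change samples at 0.0, then post-change samples at 1.0.
samples = [0.0] * 5 + [1.0] * 10
print(cusum_alarm_time(samples, mu0=0.0, mu1=1.0, threshold=2.0))
```

Each step costs constant time and memory, since only the running statistic is kept.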

Cooperative Multi-agent Bandits: Distributed Algorithms with Optimal Individual Regret and Constant Communication Costs

Aug 08, 2023

Abstract:Recently, there has been extensive study of cooperative multi-agent multi-armed bandits where a set of distributed agents cooperatively play the same multi-armed bandit game. The goal is to develop bandit algorithms with the optimal group and individual regrets and low communication between agents. The prior work tackled this problem using two paradigms: leader-follower and fully distributed algorithms. Prior algorithms in both paradigms achieve the optimal group regret. The leader-follower algorithms achieve constant communication costs but fail to achieve optimal individual regrets. The state-of-the-art fully distributed algorithms achieve optimal individual regrets but fail to achieve constant communication costs. This paper presents a simple yet effective communication policy and integrates it into a learning algorithm for cooperative bandits. Our algorithm achieves the best of both paradigms: optimal individual regret and constant communication costs.

On Collaboration in Distributed Parameter Estimation with Resource Constraints

Jul 12, 2023

Abstract:We study sensor/agent data collection and collaboration policies for parameter estimation, accounting for resource constraints and correlation between observations collected by distinct sensors/agents. Specifically, we consider a group of sensors/agents, each of which samples from different variables of a multivariate Gaussian distribution and has different estimation objectives, and we formulate a sensor/agent's data collection and collaboration policy design problem as a Fisher information maximization (or Cramer-Rao bound minimization) problem. When the knowledge of correlation between variables is available, we analytically identify two particular scenarios: (1) where the knowledge of the correlation between samples cannot be leveraged for collaborative estimation purposes and (2) where the optimal data collection policy involves investing scarce resources to collaboratively sample and transfer information that is not of immediate interest and whose statistics are already known, with the sole goal of increasing the confidence in the estimate of the parameter of interest. When the knowledge of certain correlations is unavailable but collaboration may still be worthwhile, we propose novel ways to apply multi-armed bandit algorithms to learn the optimal data collection and collaboration policy in our distributed parameter estimation problem and demonstrate through simulations that the proposed algorithms, DOUBLE-F, DOUBLE-Z, UCB-F, and UCB-Z, are effective.

On-Demand Communication for Asynchronous Multi-Agent Bandits

Feb 15, 2023

Abstract:This paper studies a cooperative multi-agent multi-armed stochastic bandit problem where agents operate asynchronously -- agent pull times and rates are unknown, irregular, and heterogeneous -- and face the same instance of a K-armed bandit problem. Agents can share reward information to speed up the learning process at additional communication costs. We propose ODC, an on-demand communication protocol that tailors the communication of each pair of agents based on their empirical pull times. ODC is efficient when the pull times of agents are highly heterogeneous, and its communication complexity depends on the empirical pull times of agents. ODC is a generic protocol that can be integrated into most cooperative bandit algorithms without degrading their performance. We then incorporate ODC into the natural extensions of UCB and AAE algorithms and propose two communication-efficient cooperative algorithms. Our analysis shows that both algorithms are near-optimal in regret.
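For readers unfamiliar with the UCB family referred to above, a minimal sketch of the textbook UCB1 arm-selection rule follows. It is not the ODC protocol or the paper's cooperative extension; the function names are hypothetical, and the assumption that `means` and `pulls` already pool whatever reward information agents have shared is made here only for illustration.

```python
import math


def ucb_index(mean, pulls, t):
    """Textbook UCB1 index: empirical mean plus an exploration bonus."""
    if pulls == 0:
        return math.inf  # Force at least one pull of every arm.
    return mean + math.sqrt(2.0 * math.log(t) / pulls)


def select_arm(means, pulls, t):
    """Pick the arm with the largest UCB1 index at round t (t >= 1).

    means[i] and pulls[i] are the empirical mean and pull count of arm i,
    assumed to include any observations received from other agents.
    """
    return max(range(len(means)), key=lambda i: ucb_index(means[i], pulls[i], t))


# An unpulled arm is always tried first.
print(select_arm([0.5, 0.0], [10, 0], t=11))
# With equal pull counts, the arm with the higher mean wins.
print(select_arm([0.9, 0.1], [100, 100], t=200))
```

Sharing observations increases the pooled pull counts, which shrinks the exploration bonus faster than an agent could manage alone.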

Robust Path Selection in Software-defined WANs using Deep Reinforcement Learning

Dec 22, 2022

Abstract:In the context of an efficient network traffic engineering process where the network continuously measures a new traffic matrix and updates the set of paths in the network, an automated process is required to quickly and efficiently identify when and what set of paths should be used. Unfortunately, the burden of finding the optimal solution for the network updating process in each given time interval is high since the computational complexity of optimization approaches using linear programming increases significantly as the size of the network increases. In this paper, we use deep reinforcement learning to derive a data-driven algorithm that does the path selection in the network considering the overhead of route computation and path updates. Our proposed scheme leverages information about past network behavior to identify a set of robust paths to be used for multiple future time intervals to avoid the overhead of updating the forwarding behavior of routers frequently. We compare the results of our approach to other traffic engineering solutions through extensive simulations across real network topologies. Our results demonstrate that our scheme outperforms traditional TE schemes such as ECMP by 40% in reducing link utilization. Our scheme yields a slightly higher link utilization (around 25%) than schemes that only minimize link utilization and ignore path updating overhead.

Quickest Change Detection in the Presence of Transient Adversarial Attacks

Jun 07, 2022

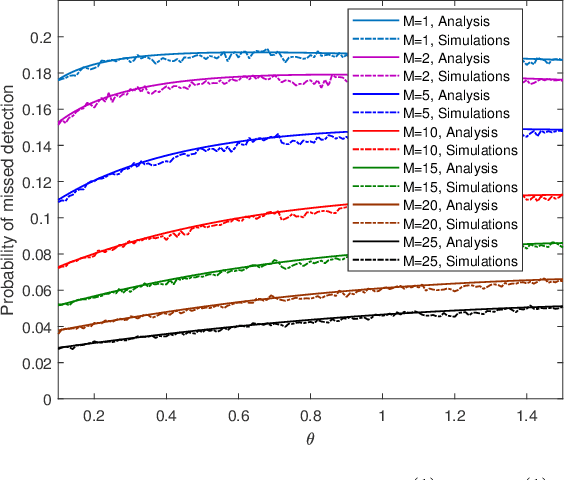

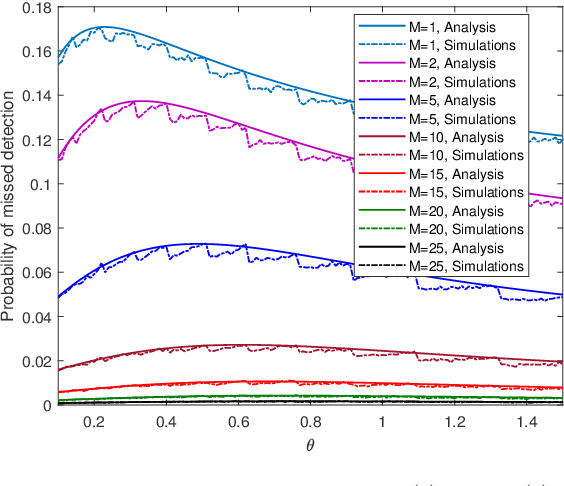

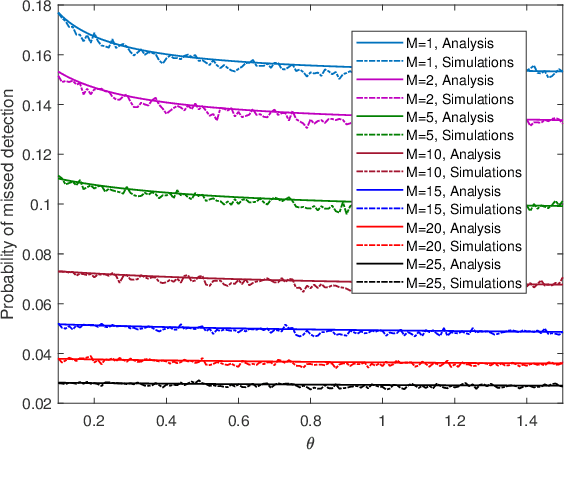

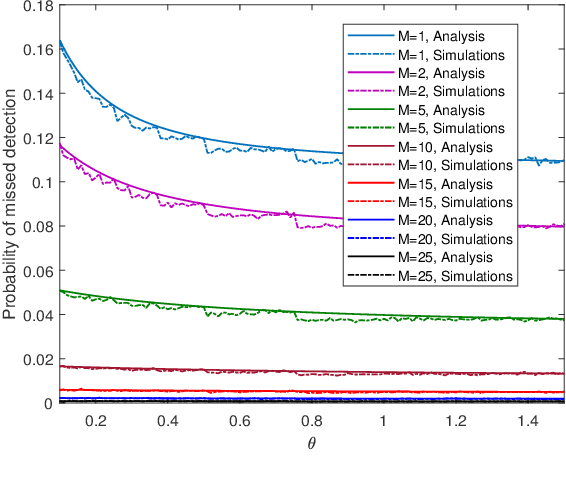

Abstract:We study a monitoring system in which the distributions of sensors' observations change from a nominal distribution to an abnormal distribution in response to an adversary's presence. The system uses the quickest change detection procedure, the Shewhart rule, to detect the adversary that uses its resources to affect the abnormal distribution, so as to hide its presence. The metric of interest is the probability of missed detection within a predefined number of time-slots after the changepoint. Assuming that the adversary's resource constraints are known to the detector, we find the number of required sensors to make the worst-case probability of missed detection less than an acceptable level. The distributions of observations are assumed to be Gaussian, and the presence of the adversary affects their mean. We also provide simulation results to support our analysis.
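To make the metric above concrete, the following sketch computes a missed-detection probability for a Shewhart-style rule under a deliberately simplified model. It assumes that the per-slot statistic is the average of `n_sensors` independent Gaussian observations, that the post-change mean `shift` is fixed rather than chosen by an adversary, and that time-slots are independent. None of these modelling choices, names, or numbers come from the paper.

```python
import math


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def missed_detection_prob(n_sensors, shift, threshold, sigma, window):
    """P(no alarm in `window` post-change slots) under the simplified model.

    An alarm is raised in a slot when the sensor average exceeds `threshold`;
    the average is Gaussian with mean `shift` and std sigma / sqrt(n_sensors).
    """
    z = (threshold - shift) * math.sqrt(n_sensors) / sigma
    return normal_cdf(z) ** window


def min_sensors(shift, threshold, sigma, window, target, max_sensors=10000):
    """Smallest sensor count meeting the target miss probability, or None."""
    for n in range(1, max_sensors + 1):
        if missed_detection_prob(n, shift, threshold, sigma, window) <= target:
            return n
    return None


print(min_sensors(shift=1.0, threshold=0.5, sigma=1.0, window=3, target=0.01))
```

The search only terminates with a finite answer when `threshold` lies below `shift`; otherwise adding sensors does not reduce the miss probability in this model.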

To Collaborate or Not in Distributed Statistical Estimation with Resource Constraints?

May 31, 2022

Abstract:We study how the amount of correlation between observations collected by distinct sensors/learners affects data collection and collaboration strategies by analyzing Fisher information and the Cramer-Rao bound. In particular, we consider a simple setting wherein two sensors sample from a bivariate Gaussian distribution, which already motivates the adoption of various strategies, depending on the correlation between the two variables and resource constraints. We identify two particular scenarios: (1) where the knowledge of the correlation between samples cannot be leveraged for collaborative estimation purposes and (2) where the optimal data collection strategy involves investing scarce resources to collaboratively sample and transfer information that is not of immediate interest and whose statistics are already known, with the sole goal of increasing the confidence on an estimate of the parameter of interest. We discuss two applications, IoT DDoS attack detection and distributed estimation in wireless sensor networks, that may benefit from our results.
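The role of correlation can be seen in a standard textbook calculation, given here as an illustration rather than as the paper's derivation. Assume $(X_1, X_2)$ is bivariate Gaussian with means $(\mu_1, \mu_2)$, known variances $\sigma_1^2, \sigma_2^2$, and known correlation $\rho$ with $|\rho| < 1$. The Fisher information matrix for the mean vector from one joint sample is the inverse covariance:

$$
I(\mu_1, \mu_2) = \Sigma^{-1} = \frac{1}{1-\rho^2}
\begin{pmatrix}
\frac{1}{\sigma_1^2} & -\frac{\rho}{\sigma_1 \sigma_2} \\
-\frac{\rho}{\sigma_1 \sigma_2} & \frac{1}{\sigma_2^2}
\end{pmatrix}.
$$

If $\mu_2$ is already known, the information about $\mu_1$ is the top-left entry, so the Cramer-Rao bound per joint sample is

$$
\operatorname{Var}(\hat{\mu}_1) \ge \sigma_1^2 (1 - \rho^2) \le \sigma_1^2,
$$

which is smaller than the bound $\sigma_1^2$ obtained from $X_1$ alone whenever $\rho \neq 0$. If $\mu_2$ is also unknown, the bound for $\mu_1$ is the top-left entry of $I^{-1} = \Sigma$, namely $\sigma_1^2$, so observing $X_2$ brings no gain for estimating $\mu_1$.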

Distributed Bandits with Heterogeneous Agents

Jan 23, 2022

Abstract:This paper tackles a multi-agent bandit setting where $M$ agents cooperate to solve the same instance of a $K$-armed stochastic bandit problem. The agents are heterogeneous: each agent has limited access to a local subset of arms, and the agents are asynchronous with different gaps between decision-making rounds. The goal for each agent is to find its optimal local arm, and agents can cooperate by sharing their observations with others. While cooperation between agents improves the performance of learning, it comes with an additional complexity of communication between agents. For this heterogeneous multi-agent setting, we propose two learning algorithms, CO-UCB and CO-AAE. We prove that both algorithms achieve order-optimal regret, which is $O\left(\sum_{i:\tilde{\Delta}_i>0} \log T/\tilde{\Delta}_i\right)$, where $\tilde{\Delta}_i$ is the minimum suboptimality gap between the reward mean of arm $i$ and any local optimal arm. In addition, through a careful selection of the valuable information for cooperation, CO-AAE achieves a low communication complexity of $O(\log T)$. Last, numerical experiments verify the efficiency of both algorithms.

Bosonic Random Walk Networks for Graph Learning

Dec 31, 2020

Abstract:The development of Graph Neural Networks (GNNs) has led to great progress in machine learning on graph-structured data. These networks operate by diffusing information across the graph nodes while capturing the structure of the graph. Recently, there has also been tremendous progress in quantum computing techniques. In this work, we explore applications of multi-particle quantum walks to diffusing information across graphs. Our model is based on learning the operators that govern the dynamics of quantum random walkers on graphs. We demonstrate the effectiveness of our method on classification and regression tasks.
