Dominik Sobania

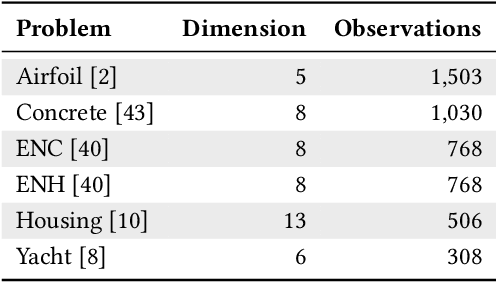

ROIDS: Robust Outlier-Aware Informed Down-Sampling

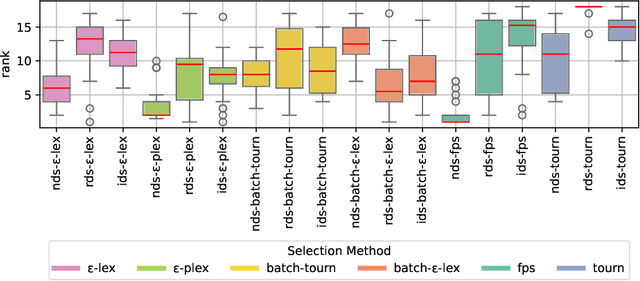

Jan 27, 2026

Abstract:Informed down-sampling (IDS) is known to improve performance in symbolic regression when combined with various selection strategies, especially tournament selection. However, recent work found that IDS's gains are not consistent across all problems. Our analysis reveals that IDS performance is worse for problems containing outliers. IDS systematically favors including outliers in subsets, which pushes GP towards finding solutions that overfit to outliers. To address this, we introduce ROIDS (Robust Outlier-Aware Informed Down-Sampling), which excludes potential outliers from the sampling process of IDS. With ROIDS it is possible to keep the advantages of IDS without overfitting to outliers and to compete on a wide range of benchmark problems. This is also reflected in our experiments in which ROIDS shows the desired behavior on all studied benchmark problems. ROIDS consistently outperforms IDS on synthetic problems with added outliers as well as on a wide range of complex real-world problems, surpassing IDS on over 80% of the real-world benchmark problems. Moreover, compared to all studied baseline approaches, ROIDS achieves the best average rank across all tested benchmark problems. This robust behavior makes ROIDS a reliable down-sampling method for selection in symbolic regression, especially when outliers may be included in the data set.
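The idea of excluding potential outliers before informed down-sampling can be illustrated with a small sketch. The abstract does not say how outliers are identified or how case distances are computed, so everything concrete here is an assumption: `iqr_inliers` uses Tukey's IQR rule on the target values as a placeholder filter, `case_vectors[j]` is taken to hold the errors of a few individuals on training case `j`, and the informed step is approximated by a greedy farthest-first traversal. All function names are hypothetical.

```python
import random
import statistics

def iqr_inliers(targets, k=1.5):
    """Placeholder outlier filter (assumption): Tukey's rule on target values."""
    q1, _, q3 = statistics.quantiles(targets, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [j for j, y in enumerate(targets) if low <= y <= high]

def farthest_first(case_vectors, candidates, size, rng):
    """Greedy farthest-first traversal: repeatedly add the candidate case
    whose minimum distance to the already chosen cases is largest."""
    def dist(a, b):
        return sum((x - y) ** 2
                   for x, y in zip(case_vectors[a], case_vectors[b])) ** 0.5
    chosen = [rng.choice(candidates)]
    remaining = [c for c in candidates if c != chosen[0]]
    while remaining and len(chosen) < size:
        nxt = max(remaining, key=lambda c: min(dist(c, s) for s in chosen))
        chosen.append(nxt)
        remaining.remove(nxt)
    return chosen

def outlier_aware_sample(case_vectors, targets, size, rng):
    """Run the informed sampling step on the non-outlier cases only."""
    return farthest_first(case_vectors, iqr_inliers(targets), size, rng)

# Toy data: case 8 is an outlier in both its target and its error vector.
targets = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 1000.0]
case_vectors = [[float(j)] for j in range(8)] + [[50.0]]
rng = random.Random(1)
plain = farthest_first(case_vectors, list(range(9)), 3, rng)
robust = outlier_aware_sample(case_vectors, targets, 3, rng)
```

In this toy setup the plain farthest-first subset always contains case 8, because it is far from every other case, while the filtered variant never does.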

Was Tournament Selection All We Ever Needed? A Critical Reflection on Lexicase Selection

Feb 25, 2025

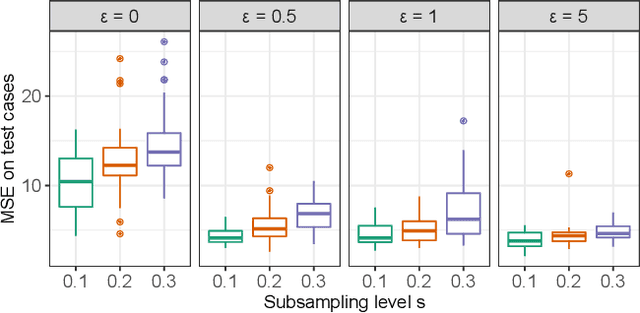

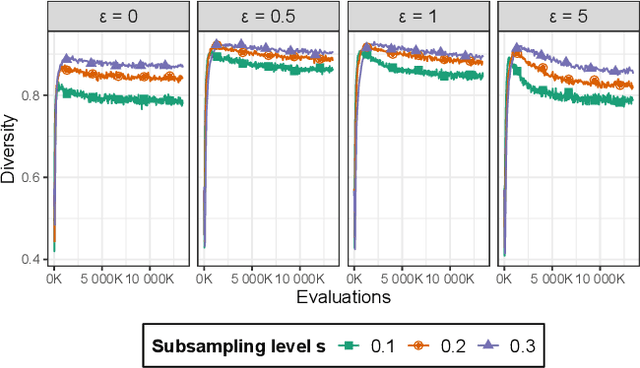

Abstract:The success of lexicase selection has led to various extensions, including its combination with down-sampling, which further increased performance. However, recent work found that down-sampling also leads to significant improvements in the performance of tournament selection. This raises the question of whether tournament selection combined with down-sampling is the better choice, given its faster running times. To address this question, we run a set of experiments comparing epsilon-lexicase and tournament selection with different down-sampling techniques on synthetic problems of varying noise levels and problem sizes as well as real-world symbolic regression problems. Overall, we find that down-sampling improves generalization and performance even when compared over the same number of generations. This means that down-sampling is beneficial even with far fewer fitness evaluations. Additionally, down-sampling successfully reduces code growth. We observe that population diversity increases for tournament selection when combined with down-sampling. Further, we find that tournament selection and epsilon-lexicase selection with down-sampling perform similarly, while tournament selection is significantly faster. We conclude that tournament selection should be further analyzed and improved in future work instead of only focusing on the improvement of lexicase variants.
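A minimal sketch of tournament selection combined with random down-sampling may help make the setup concrete. It assumes a precomputed matrix `errors[i][j]` (error of individual `i` on training case `j`) and uses the mean error over the sampled cases as fitness; the function names and the `rate` parameter are illustrative and not taken from the paper.

```python
import random

def random_down_sample(n_cases, rate, rng):
    """Pick a random subset of training-case indices (at least one)."""
    k = max(1, int(round(n_cases * rate)))
    return rng.sample(range(n_cases), k)

def tournament_select(errors, case_ids, tournament_size, rng):
    """Return the index of the tournament winner.

    Fitness is the mean error over the down-sampled cases only,
    so each individual is evaluated on fewer cases per generation.
    """
    contestants = rng.sample(range(len(errors)), tournament_size)

    def fitness(i):
        return sum(errors[i][j] for j in case_ids) / len(case_ids)

    return min(contestants, key=fitness)

# Three individuals, four training cases; individual 0 is best everywhere.
errors = [[0.0, 0.0, 0.0, 0.0],
          [1.0, 1.0, 1.0, 1.0],
          [2.0, 2.0, 2.0, 2.0]]
rng = random.Random(0)
cases = random_down_sample(4, 0.5, rng)
winner = tournament_select(errors, cases, 3, rng)
```

In a typical down-sampling setup the subset is redrawn every generation, so over a run all training cases still influence selection.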

Transformer Semantic Genetic Programming for Symbolic Regression

Jan 30, 2025

Abstract:In standard genetic programming (stdGP), solutions are varied by modifying their syntax, with uncertain effects on their semantics. Geometric-semantic genetic programming (GSGP), a popular variant of GP, effectively searches the semantic solution space using variation operations based on linear combinations, although it results in significantly larger solutions. This paper presents Transformer Semantic Genetic Programming (TSGP), a novel and flexible semantic approach that uses a generative transformer model as search operator. The transformer is trained on synthetic test problems and learns semantic similarities between solutions. Once the model is trained, it can be used to create offspring solutions with high semantic similarity also for unseen and unknown problems. Experiments on several symbolic regression problems show that TSGP generates solutions with comparable or even significantly better prediction quality than stdGP, SLIM_GSGP, DSR, and DAE-GP. Like SLIM_GSGP, TSGP is able to create new solutions that are semantically similar without creating solutions of large size. An analysis of the search dynamic reveals that the solutions generated by TSGP are semantically more similar than the solutions generated by the benchmark approaches, allowing a better exploration of the semantic solution space.
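The linear-combination operators of GSGP mentioned above can be sketched as follows. This shows the commonly described geometric semantic crossover for real-valued functions, with a random constant as the mixing weight; it is not the TSGP operator, and representing solutions as plain Python callables is a simplification for illustration.

```python
import random

def geometric_semantic_crossover(parent_a, parent_b, rng):
    """Offspring is a convex combination of the two parents.

    The child wraps both parents, which is why solution size
    grows with every application of the operator.
    """
    r = rng.random()  # mixing weight in [0, 1)

    def child(x):
        return r * parent_a(x) + (1.0 - r) * parent_b(x)

    return child

parent_a = lambda x: x
parent_b = lambda x: x * x
child = geometric_semantic_crossover(parent_a, parent_b, random.Random(42))
```

For every input, the child's output lies between the outputs of its parents, so its semantics sit on the segment connecting the parents in semantic space.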

ComfyGI: Automatic Improvement of Image Generation Workflows

Nov 21, 2024

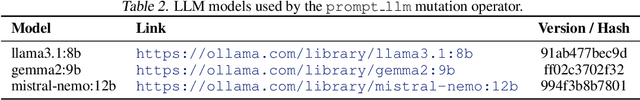

Abstract:Automatic image generation is no longer just of interest to researchers, but also to practitioners. However, current models are sensitive to the settings used and automatic optimization methods often require human involvement. To bridge this gap, we introduce ComfyGI, a novel approach to automatically improve workflows for image generation without the need for human intervention driven by techniques from genetic improvement. This enables image generation with significantly higher quality in terms of the alignment with the given description and the perceived aesthetics. On the performance side, we find that overall, the images generated with an optimized workflow are about 50% better compared to the initial workflow in terms of the median ImageReward score. These already good results are even surpassed in our human evaluation, as the participants preferred the images improved by ComfyGI in around 90% of the cases.

Lexicase-based Selection Methods with Down-sampling for Symbolic Regression Problems: Overview and Benchmark

Jul 31, 2024

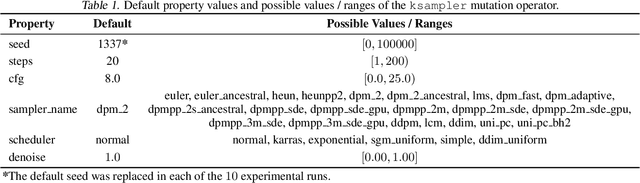

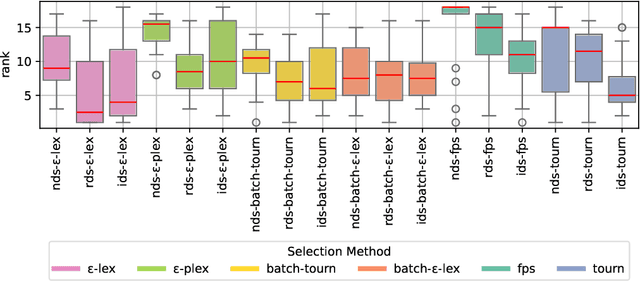

Abstract:In recent years, several new lexicase-based selection variants have emerged due to the success of standard lexicase selection in various application domains. For symbolic regression problems, variants that use an epsilon-threshold or batches of training cases, among others, have led to performance improvements. Lately, especially variants that combine lexicase selection and down-sampling strategies have received a lot of attention. This paper evaluates random as well as informed down-sampling in combination with the relevant lexicase-based selection methods on a wide range of symbolic regression problems. In contrast to most work, we not only compare the methods over a given evaluation budget, but also over a given time as time is usually limited in practice. We find that for a given evaluation budget, epsilon-lexicase selection in combination with random or informed down-sampling outperforms all other methods. Only for a rather long running time of 24h, the best performing method is tournament selection in combination with informed down-sampling. If the given running time is very short, lexicase variants using batches of training cases perform best.

Large Language Models Suffer From Their Own Output: An Analysis of the Self-Consuming Training Loop

Nov 28, 2023

Abstract:Large language models (LLMs) have become state of the art in many benchmarks and conversational LLM applications like ChatGPT are now widely used by the public. Those LLMs can be used to generate large amounts of content which is posted on the internet to various platforms. As LLMs are trained on datasets usually collected from the internet, this LLM-generated content might be used to train the next generation of LLMs. Therefore, a self-consuming training loop emerges in which new LLM generations are trained on the output from the previous generations. We empirically study this self-consuming training loop using a novel dataset to analytically and accurately measure quality and diversity of generated outputs. We find that this self-consuming training loop initially improves both quality and diversity. However, after a few generations the output inevitably degenerates in diversity. We find that the rate of degeneration depends on the proportion of real and generated data.

Enhancing Genetic Improvement Mutations Using Large Language Models

Oct 18, 2023

Abstract:Large language models (LLMs) have been successfully applied to software engineering tasks, including program repair. However, their application in search-based techniques such as Genetic Improvement (GI) is still largely unexplored. In this paper, we evaluate the use of LLMs as mutation operators for GI to improve the search process. We expand the Gin Java GI toolkit to call OpenAI's API to generate edits for the JCodec tool. We randomly sample the space of edits using 5 different edit types. We find that the number of patches passing unit tests is up to 75% higher with LLM-based edits than with standard Insert edits. Further, we observe that the patches found with LLMs are generally less diverse compared to standard edits. We ran GI with local search to find runtime improvements. Although many improving patches are found by LLM-enhanced GI, the best improving patch was found by standard GI.

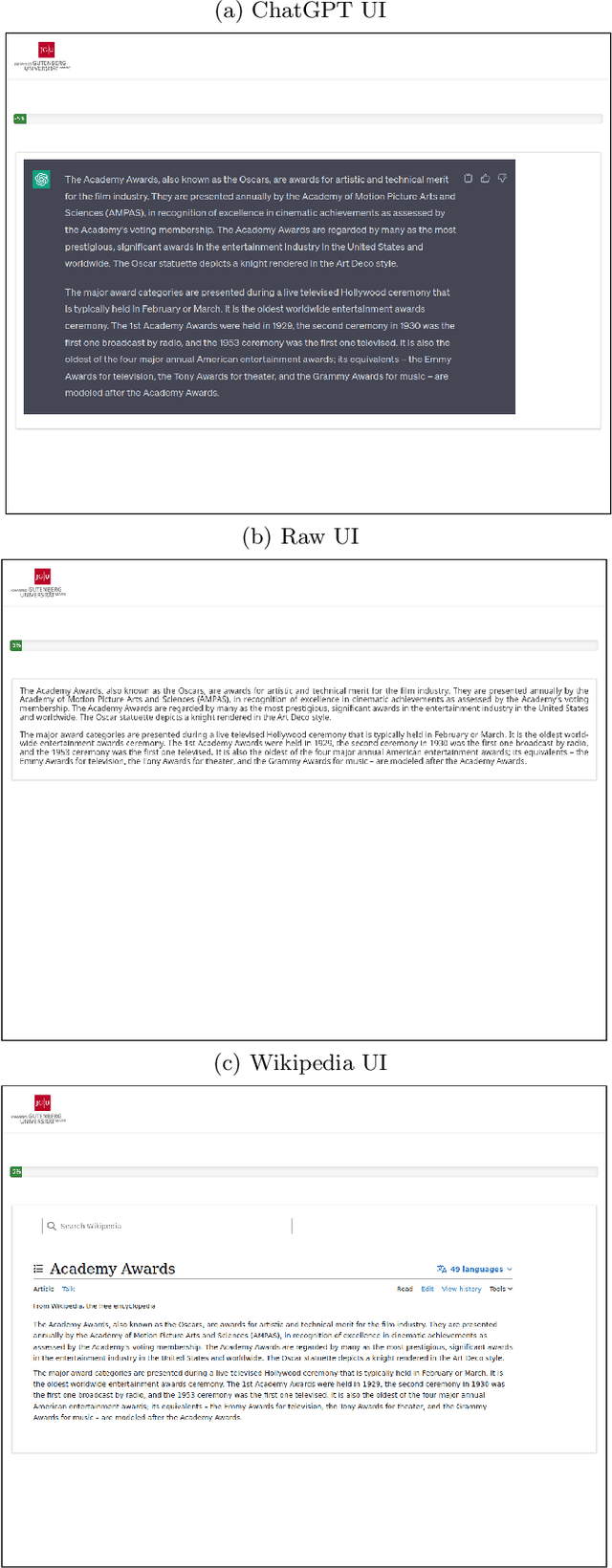

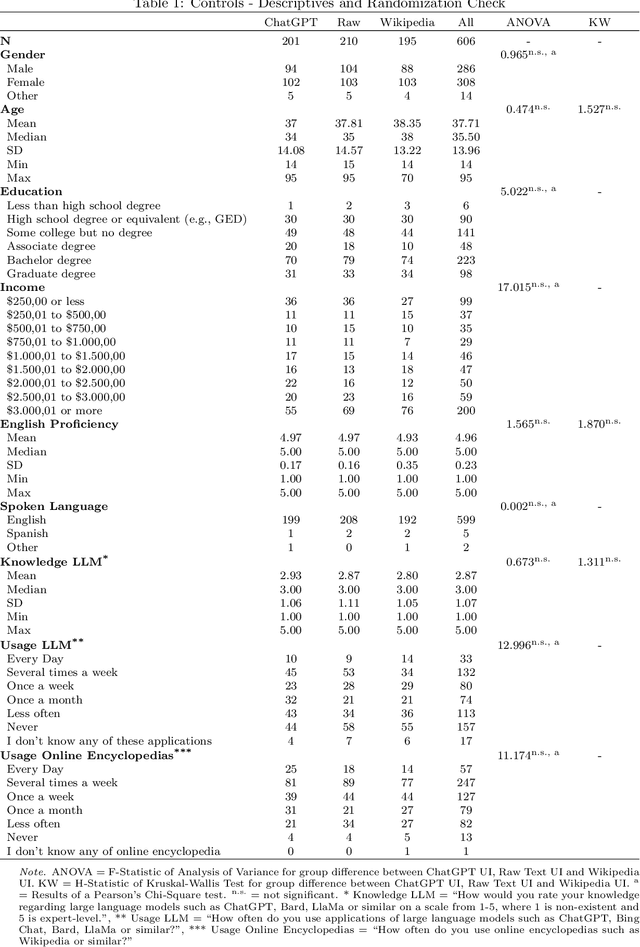

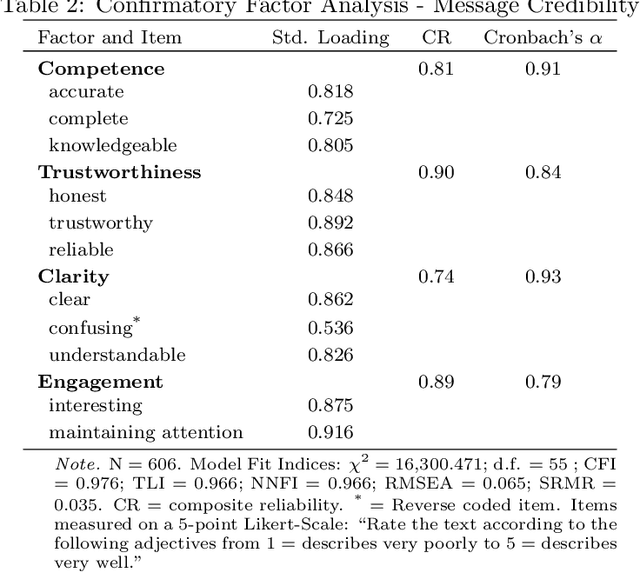

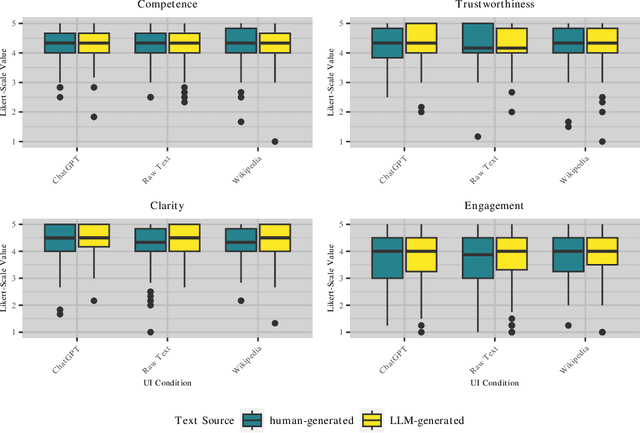

Do You Trust ChatGPT? -- Perceived Credibility of Human and AI-Generated Content

Sep 05, 2023

Abstract:This paper examines how individuals perceive the credibility of content originating from human authors versus content generated by large language models, like the GPT language model family that powers ChatGPT, in different user interface versions. Surprisingly, our results demonstrate that regardless of the user interface presentation, participants tend to attribute similar levels of credibility. While participants also do not report any different perceptions of competence and trustworthiness between human and AI-generated content, they rate AI-generated content as being clearer and more engaging. The findings from this study serve as a call for a more discerning approach to evaluating information sources, encouraging users to exercise caution and critical thinking when engaging with content generated by AI systems.

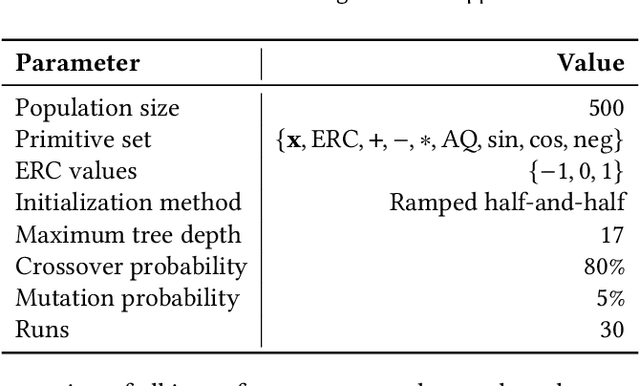

Analyzing the Interaction Between Down-Sampling and Selection

Apr 14, 2023

Abstract:Genetic programming systems often use large training sets to evaluate the quality of candidate solutions for selection. However, evaluating populations on large training sets can be computationally expensive. Down-sampling training sets has long been used to decrease the computational cost of evaluation in a wide range of application domains. Indeed, recent studies have shown that both random and informed down-sampling can substantially improve problem-solving success for GP systems that use the lexicase parent selection algorithm. We use the PushGP framework to experimentally test whether these down-sampling techniques can also improve problem-solving success in the context of two other commonly used selection methods, fitness-proportionate and tournament selection, across eight GP problems (four program synthesis and four symbolic regression). We verified that down-sampling can benefit the problem-solving success of both fitness-proportionate and tournament selection. However, the number of problems wherein down-sampling improved problem-solving success varied by selection scheme, suggesting that the impact of down-sampling depends both on the problem and choice of selection scheme. Surprisingly, we found that down-sampling was most consistently beneficial when combined with lexicase selection as compared to tournament and fitness-proportionate selection. Overall, our results suggest that down-sampling should be considered more often when solving test-based GP problems.

Down-Sampled Epsilon-Lexicase Selection for Real-World Symbolic Regression Problems

Feb 08, 2023

Abstract:Epsilon-lexicase selection is a parent selection method in genetic programming that has been successfully applied to symbolic regression problems. Recently, the combination of random subsampling with lexicase selection significantly improved performance in other genetic programming domains such as program synthesis. However, the influence of subsampling on the solution quality of real-world symbolic regression problems has not yet been studied. In this paper, we propose down-sampled epsilon-lexicase selection which combines epsilon-lexicase selection with random subsampling to improve the performance in the domain of symbolic regression. Therefore, we compare down-sampled epsilon-lexicase with traditional selection methods on common real-world symbolic regression problems and analyze its influence on the properties of the population over a genetic programming run. We find that the diversity is reduced by using down-sampled epsilon-lexicase selection compared to standard epsilon-lexicase selection. This comes along with high hyperselection rates we observe for down-sampled epsilon-lexicase selection. Further, we find that down-sampled epsilon-lexicase selection outperforms the traditional selection methods on all studied problems. Overall, with down-sampled epsilon-lexicase selection we observe an improvement of the solution quality of up to 85% in comparison to standard epsilon-lexicase selection.
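The combination of random subsampling with epsilon-lexicase selection can be sketched roughly as below. The sketch assumes a common formulation of epsilon-lexicase in which the threshold for each case is the median absolute deviation (MAD) of the population's errors on that case; whether the paper uses exactly this variant is not stated in the abstract. The `errors[i][j]` matrix, the `rate` parameter, and the function names are illustrative.

```python
import random
import statistics

def mad(values):
    """Median absolute deviation of a list of numbers."""
    med = statistics.median(values)
    return statistics.median([abs(v - med) for v in values])

def down_sampled_epsilon_lexicase(errors, rate, rng):
    """Select one parent index using only a random subset of the cases."""
    n_pop, n_cases = len(errors), len(errors[0])
    k = max(1, int(round(n_cases * rate)))
    # rng.sample returns the chosen cases in random order,
    # which also serves as the lexicase case ordering.
    cases = rng.sample(range(n_cases), k)
    pool = list(range(n_pop))
    for j in cases:
        eps = mad([errors[i][j] for i in range(n_pop)])
        best = min(errors[i][j] for i in pool)
        # Keep every candidate within eps of the best error on this case.
        pool = [i for i in pool if errors[i][j] <= best + eps]
        if len(pool) == 1:
            break
    return rng.choice(pool)

errors = [[0.0, 0.0, 0.0],
          [5.0, 5.0, 5.0],
          [9.0, 9.0, 9.0]]
parent = down_sampled_epsilon_lexicase(errors, 0.67, random.Random(3))
```

Because only the sampled cases are needed for selection, individuals have to be evaluated on just that subset in each generation.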
