Dmitry Kislyuk

Visual Product Graph: Bridging Visual Products And Composite Images For End-to-End Style Recommendations

May 27, 2025

Abstract:Retrieving semantically similar but visually distinct contents has been a critical capability in visual search systems. In this work, we aim to tackle this problem with Visual Product Graph (VPG), leveraging high-performance infrastructure for storage and state-of-the-art computer vision models for image understanding. VPG is built to be an online real-time retrieval system that enables navigation from individual products to composite scenes containing those products, along with complementary recommendations. Our system not only offers contextual insights by showcasing how products can be styled in a context, but also provides recommendations for complementary products drawn from these inspirations. We discuss the essential components for building the Visual Product Graph, along with the core computer vision model improvements across object detection, foundational visual embeddings, and other visual signals. Our system achieves a 78.8% extremely similar@1 in end-to-end human relevance evaluations, and a 6% module engagement rate. The "Ways to Style It" module, powered by the Visual Product Graph technology, is deployed in production at Pinterest.

Large-scale Reinforcement Learning for Diffusion Models

Jan 20, 2024

Abstract:Text-to-image diffusion models are a class of deep generative models that have demonstrated an impressive capacity for high-quality image generation. However, these models are susceptible to implicit biases that arise from web-scale text-image training pairs and may inaccurately model aspects of images we care about. This can result in suboptimal samples, model bias, and images that do not align with human ethics and preferences. In this paper, we present an effective scalable algorithm to improve diffusion models using Reinforcement Learning (RL) across a diverse set of reward functions, such as human preference, compositionality, and fairness over millions of images. We illustrate how our approach substantially outperforms existing methods for aligning diffusion models with human preferences. We further illustrate how this substantially improves pretrained Stable Diffusion (SD) models, generating samples that are preferred by humans 80.3% of the time over those from the base SD model while simultaneously improving both the composition and diversity of generated samples.

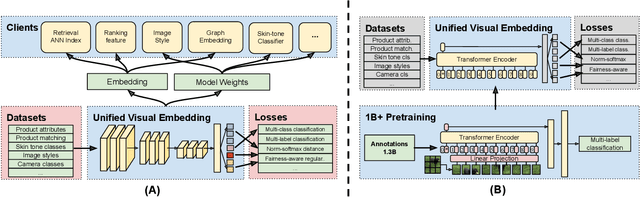

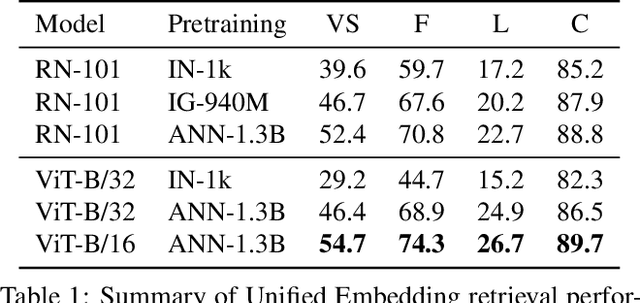

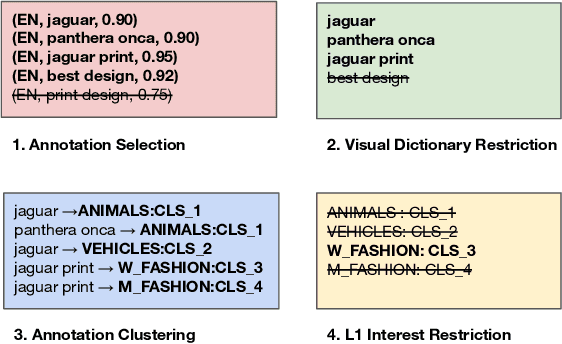

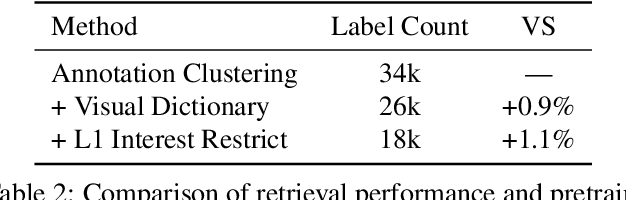

Billion-Scale Pretraining with Vision Transformers for Multi-Task Visual Representations

Aug 12, 2021

Abstract:Large-scale pretraining of visual representations has led to state-of-the-art performance on a range of benchmark computer vision tasks, yet the benefits of these techniques at extreme scale in complex production systems have been relatively unexplored. We consider the case of a popular visual discovery product, where these representations are trained with multi-task learning, from use-case specific visual understanding (e.g. skin tone classification) to general representation learning for all visual content (e.g. embeddings for retrieval). In this work, we describe how we (1) generate a dataset with over a billion images via large weakly-supervised pretraining to improve the performance of these visual representations, and (2) leverage Transformers to replace the traditional convolutional backbone, with insights into both system and performance improvements, especially at 1B+ image scale. To support this backbone model, we detail a systematic approach to deriving weakly-supervised image annotations from heterogeneous text signals, demonstrating the benefits of clustering techniques to handle the long-tail distribution of image labels. Through a comprehensive study of offline and online evaluation, we show that large-scale Transformer-based pretraining provides significant benefits to industry computer vision applications. The model is deployed in a production visual shopping system, with 36% improvement in top-1 relevance and 23% improvement in click-through volume. We conduct extensive experiments to better understand the empirical relationships between Transformer-based architectures, dataset scale, and the performance of production vision systems.

Toward Transformer-Based Object Detection

Dec 17, 2020

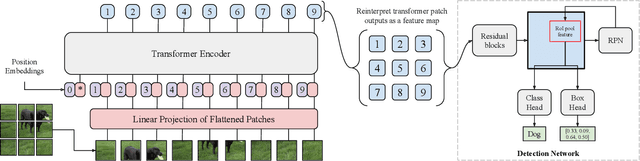

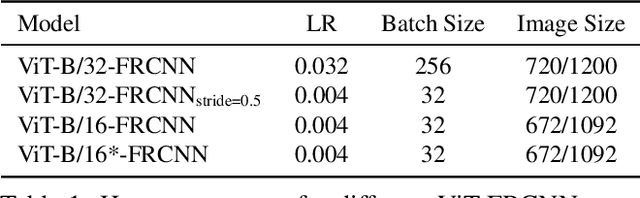

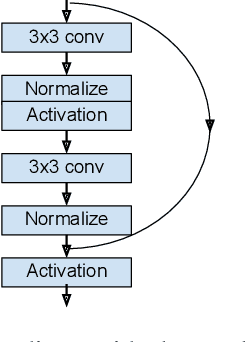

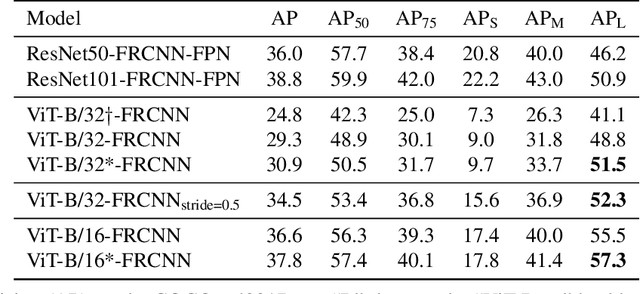

Abstract:Transformers have become the dominant model in natural language processing, owing to their ability to pretrain on massive amounts of data, then transfer to smaller, more specific tasks via fine-tuning. The Vision Transformer was the first major attempt to apply a pure transformer model directly to images as input, demonstrating that as compared to convolutional networks, transformer-based architectures can achieve competitive results on benchmark classification tasks. However, the computational complexity of the attention operator means that we are limited to low-resolution inputs. For more complex tasks such as detection or segmentation, maintaining a high input resolution is crucial to ensure that models can properly identify and reflect fine details in their output. This naturally raises the question of whether or not transformer-based architectures such as the Vision Transformer are capable of performing tasks other than classification. In this paper, we determine that Vision Transformers can be used as a backbone by a common detection task head to produce competitive COCO results. The model that we propose, ViT-FRCNN, demonstrates several known properties associated with transformers, including large pretraining capacity and fast fine-tuning performance. We also investigate improvements over a standard detection backbone, including superior performance on out-of-domain images, better performance on large objects, and a lessened reliance on non-maximum suppression. We view ViT-FRCNN as an important stepping stone toward a pure-transformer solution of complex vision tasks such as object detection.

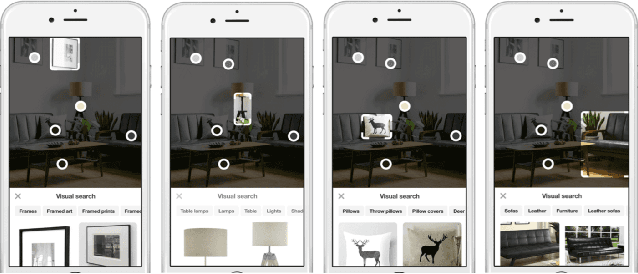

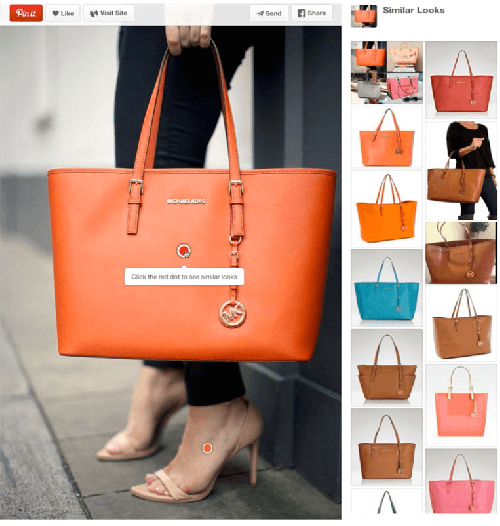

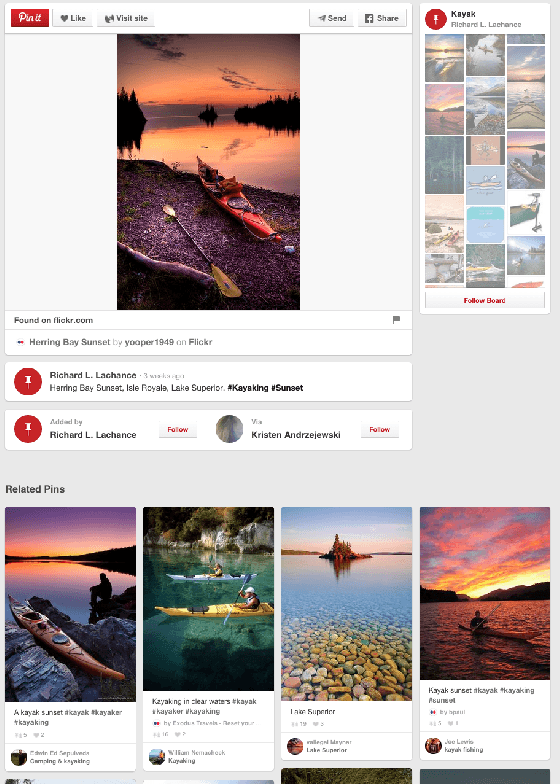

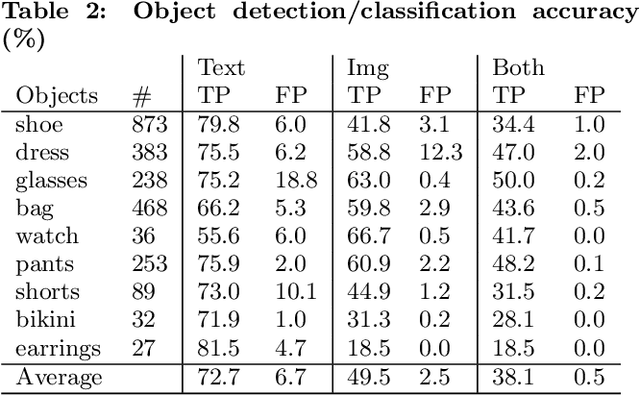

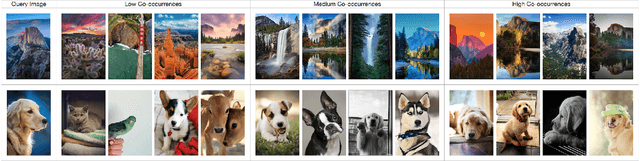

Visual Discovery at Pinterest

Mar 25, 2017

Abstract:Over the past three years Pinterest has experimented with several visual search and recommendation services, including Related Pins (2014), Similar Looks (2015), Flashlight (2016) and Lens (2017). This paper presents an overview of our visual discovery engine powering these services, and shares the rationales behind our technical and product decisions such as the use of object detection and interactive user interfaces. We conclude that this visual discovery engine significantly improves engagement in both search and recommendation tasks.

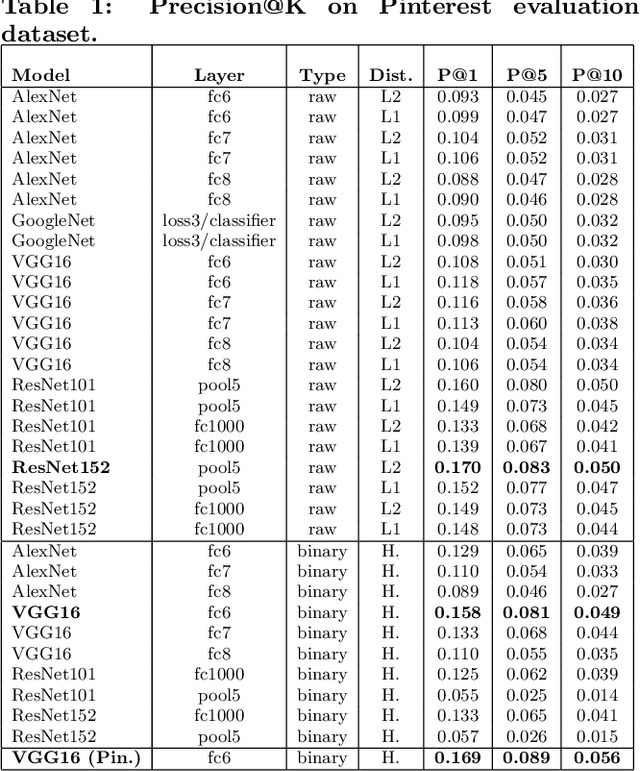

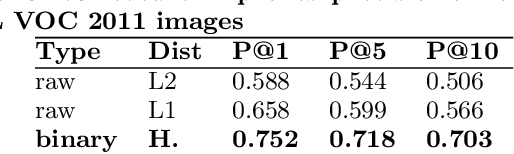

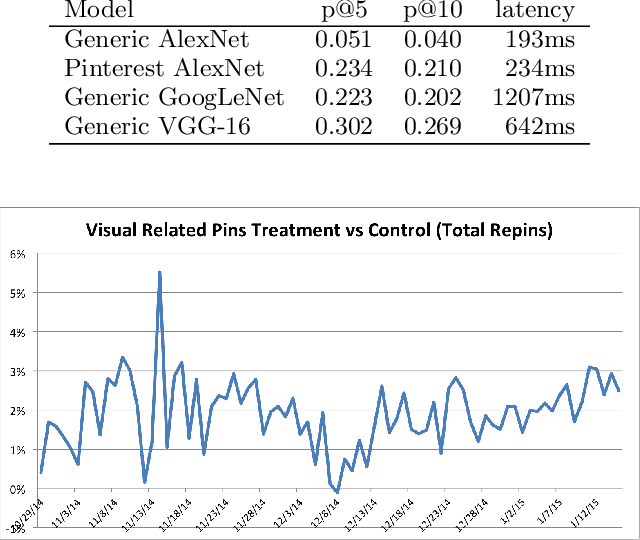

Visual Search at Pinterest

Mar 08, 2017

Abstract:We demonstrate that, with the availability of distributed computation platforms such as Amazon Web Services and open-source tools, it is possible for a small engineering team to build, launch and maintain a cost-effective, large-scale visual search system with widely available tools. We also demonstrate, through a comprehensive set of live experiments at Pinterest, that content recommendation powered by visual search improves user engagement. By sharing our implementation details and the experiences learned from launching a commercial visual search engine from scratch, we hope visual search is more widely incorporated into today's commercial applications.

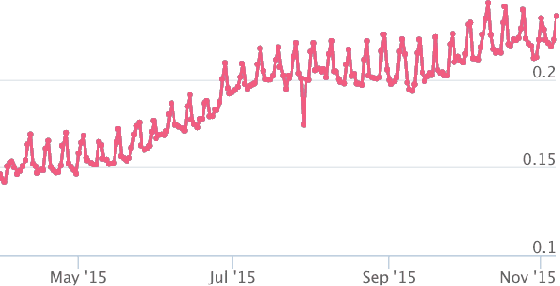

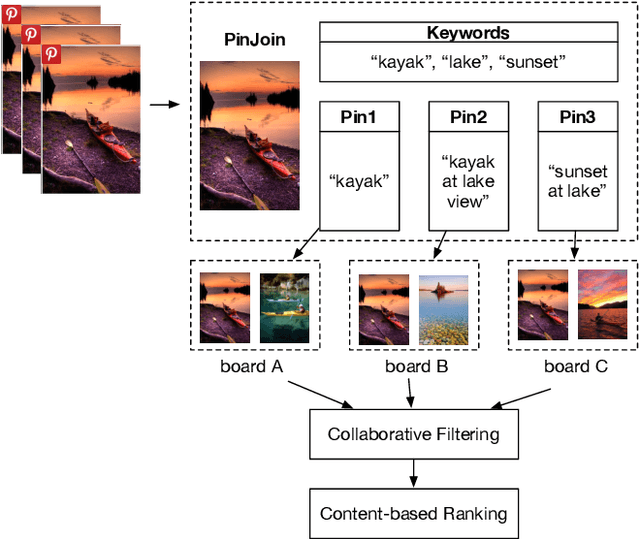

Human Curation and Convnets: Powering Item-to-Item Recommendations on Pinterest

Nov 12, 2015

Abstract:This paper presents Pinterest Related Pins, an item-to-item recommendation system that combines collaborative filtering with content-based ranking. We demonstrate that signals derived from user curation, the activity of users organizing content, are highly effective when used in conjunction with content-based ranking. This paper also demonstrates the effectiveness of visual features, such as image or object representations learned from convnets, in improving the user engagement rate of our item-to-item recommendation system.
