Dennis Bontempi

Artificial Intelligence in Medicine, Radiology and Nuclear Medicine, CARIM & GROW, Maastricht University, Department of Radiation Oncology, Brigham and Women's Hospital, Dana-Farber Cancer Institute, Harvard Medical School

MHub.ai: A Simple, Standardized, and Reproducible Platform for AI Models in Medical Imaging

Jan 15, 2026

Abstract: Artificial intelligence (AI) has the potential to transform medical imaging by automating image analysis and accelerating clinical research. However, research and clinical use are limited by the wide variety of AI implementations and architectures, inconsistent documentation, and reproducibility issues. Here, we introduce MHub.ai, an open-source, container-based platform that standardizes access to AI models with minimal configuration, promoting accessibility and reproducibility in medical imaging. MHub.ai packages models from peer-reviewed publications into standardized containers that support direct processing of DICOM and other formats, provide a unified application interface, and embed structured metadata. Each model is accompanied by publicly available reference data that can be used to confirm model operation. MHub.ai includes an initial set of state-of-the-art segmentation, prediction, and feature extraction models for different modalities. The modular framework enables adaptation of any model and supports community contributions. We demonstrate the utility of the platform in a clinical use case through comparative evaluation of lung segmentation models. To further strengthen transparency and reproducibility, we publicly release the generated segmentations and evaluation metrics and provide interactive dashboards that allow readers to inspect individual cases and reproduce or extend our analysis. By simplifying model use, MHub.ai enables side-by-side benchmarking with identical execution commands and standardized outputs, and lowers the barrier to clinical translation.
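As a rough illustration of what a side-by-side comparison of segmentation models can involve, the sketch below scores two candidate masks against a reference with the Dice coefficient. The abstract does not name the evaluation metrics, so Dice is an assumption here, chosen because it is a common overlap measure; the function name, the model names, and the toy masks are all hypothetical.

```python
def dice_coefficient(pred, ref):
    """Dice overlap between two binary masks given as flat sequences of 0/1."""
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labelled foreground in both masks.
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    if total == 0:
        # Convention (an assumption): two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / total


# Toy, made-up masks standing in for flattened segmentation volumes.
reference = [0, 1, 1, 1, 0, 0]
candidates = {
    "model_a": [0, 1, 1, 0, 0, 0],  # hypothetical model output
    "model_b": [1, 1, 1, 1, 0, 0],  # hypothetical model output
}

# Same metric, same reference, one score per model.
scores = {name: dice_coefficient(mask, reference) for name, mask in candidates.items()}
for name, score in scores.items():
    print(f"{name}: Dice = {score:.3f}")
```

Because every model is scored by the same function against the same reference, differences in the numbers reflect the masks rather than the evaluation setup, which is the point of standardized outputs.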

Foundation Artificial Intelligence Models for Health Recognition Using Face Photographs (FAHR-Face)

Jun 17, 2025

Abstract: Background: Facial appearance offers a noninvasive window into health. We built FAHR-Face, a foundation model trained on >40 million facial images, and fine-tuned it for two distinct tasks: biological age estimation (FAHR-FaceAge) and survival risk prediction (FAHR-FaceSurvival). Methods: FAHR-FaceAge underwent a two-stage, age-balanced fine-tuning on 749,935 public images; FAHR-FaceSurvival was fine-tuned on 34,389 photos of cancer patients. Model robustness (cosmetic surgery, makeup, pose, lighting) and independence (saliency mapping) were tested extensively. Both models were clinically tested in two independent cancer patient datasets, with survival analyzed by multivariable Cox models adjusted for clinical prognostic factors. Findings: For age estimation, FAHR-FaceAge had the lowest mean absolute error (5.1 years) on public datasets, outperforming benchmark models and maintaining accuracy across the full human lifespan. In cancer patients, FAHR-FaceAge outperformed a prior facial age estimation model in survival prognostication. FAHR-FaceSurvival demonstrated robust prediction of mortality, and the highest-risk quartile had more than triple the mortality of the lowest (adjusted hazard ratio 3.22; P<0.001). These findings were validated in the independent cohort, and both models showed generalizability across age, sex, race and cancer subgroups. The two algorithms provided distinct, complementary prognostic information; saliency mapping revealed that each model relied on distinct facial regions. The combination of FAHR-FaceAge and FAHR-FaceSurvival improved prognostic accuracy. Interpretation: A single foundation model can generate inexpensive, scalable facial biomarkers that capture both biological ageing and disease-related mortality risk. The foundation model enabled effective training using relatively small clinical datasets.
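For readers unfamiliar with the age-estimation metric quoted above, mean absolute error is the average of the absolute differences between predicted and true ages. The sketch below is illustrative only: the function name and the ages are made up and are not taken from the study.

```python
def mean_absolute_error(true_ages, predicted_ages):
    """Average absolute difference, in years, between paired age values."""
    if len(true_ages) != len(predicted_ages) or not true_ages:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(t - p) for t, p in zip(true_ages, predicted_ages)) / len(true_ages)


# Made-up example values, not data from the paper.
true_ages = [30, 45, 62]
predicted_ages = [34, 41, 70]
mae_years = mean_absolute_error(true_ages, predicted_ages)
print(f"MAE = {mae_years:.2f} years")
```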

Vision Foundation Models for Computed Tomography

Jan 15, 2025

Abstract: Foundation models (FMs) have shown transformative potential in radiology by performing diverse, complex tasks across imaging modalities. Here, we developed CT-FM, a large-scale 3D image-based pre-trained model designed explicitly for various radiological tasks. CT-FM was pre-trained using 148,000 computed tomography (CT) scans from the Imaging Data Commons through label-agnostic contrastive learning. We evaluated CT-FM across four categories of tasks, namely whole-body and tumor segmentation, head CT triage, medical image retrieval, and semantic understanding, showing superior performance against state-of-the-art models. Beyond quantitative success, CT-FM demonstrated the ability to cluster regions anatomically and identify similar anatomical and structural concepts across scans. Furthermore, it remained robust across test-retest settings and indicated reasonable salient regions attached to its embeddings. This study demonstrates the value of large-scale medical imaging foundation models and, by open-sourcing the model weights, code, and data, aims to support more adaptable, reliable, and interpretable AI solutions in radiology.
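To make "label-agnostic contrastive learning" more concrete, the sketch below computes an InfoNCE-style loss over a small batch of embedding pairs. The abstract does not state which contrastive objective CT-FM uses, so InfoNCE is an assumption chosen as a widely used example; the function names, the temperature value, and the toy 2D embeddings are hypothetical.

```python
import math


def _normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def info_nce_loss(anchors, positives, temperature=0.1):
    """InfoNCE-style loss: anchors[i] should match positives[i] and no other row.

    No labels are used; the pairing itself (for example, two augmented views
    of the same scan) is the only supervision signal.
    """
    a = [_normalize(v) for v in anchors]
    p = [_normalize(v) for v in positives]
    losses = []
    for i, anchor in enumerate(a):
        # Similarity of this anchor to every candidate in the batch.
        logits = [sum(x * y for x, y in zip(anchor, cand)) / temperature for cand in p]
        # Numerically stable log-sum-exp over all candidates.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Cross-entropy with the matching pair as the correct "class".
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Toy embeddings: two views per sample, either correctly paired or swapped.
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = info_nce_loss(anchors, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = info_nce_loss(anchors, [[0.0, 1.0], [1.0, 0.0]])
print(f"aligned: {aligned_loss:.5f}, swapped: {swapped_loss:.5f}")
```

The loss is small when each anchor is closest to its own positive and large when the pairs are swapped, which is the pressure that pulls matching views together in embedding space during pre-training.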

Enrichment of the NLST and NSCLC-Radiomics computed tomography collections with AI-derived annotations

May 31, 2023

Abstract: Public imaging datasets are critical for the development and evaluation of automated tools in cancer imaging. Unfortunately, many do not include annotations or image-derived features, complicating their downstream analysis. Artificial intelligence-based annotation tools have been shown to achieve acceptable performance and thus can be used to automatically annotate large datasets. As part of the effort to enrich public data available within NCI Imaging Data Commons (IDC), here we introduce AI-generated annotations for two collections of computed tomography images of the chest, NSCLC-Radiomics, and the National Lung Screening Trial. Using publicly available AI algorithms we derived volumetric annotations of thoracic organs at risk, their corresponding radiomics features, and slice-level annotations of anatomical landmarks and regions. The resulting annotations are publicly available within IDC, where the DICOM format is used to harmonize the data and achieve FAIR principles. The annotations are accompanied by cloud-enabled notebooks demonstrating their use. This study reinforces the need for large, publicly accessible curated datasets and demonstrates how AI can be used to aid in cancer imaging.

CEREBRUM: a fast and fully-volumetric Convolutional Encoder-decodeR for weakly-supervised sEgmentation of BRain strUctures from out-of-the-scanner MRI

Sep 26, 2019

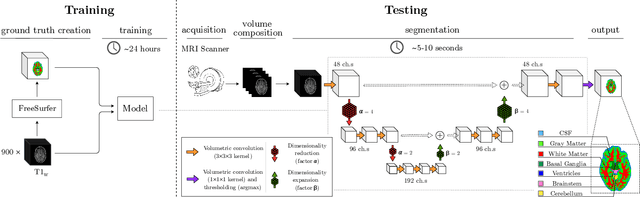

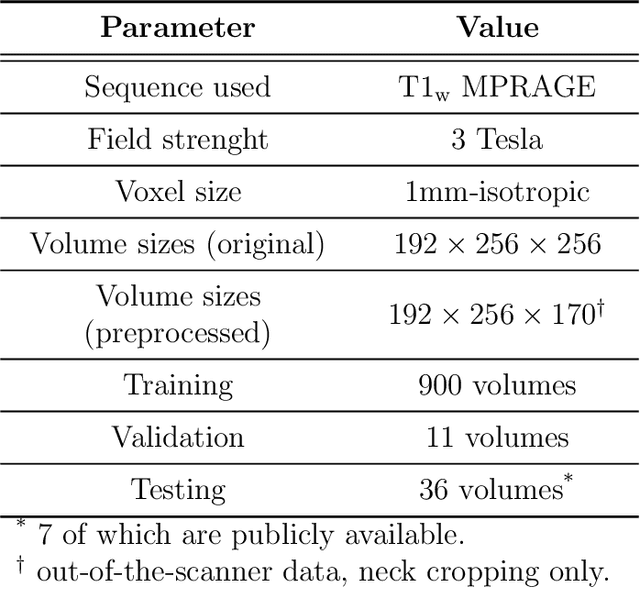

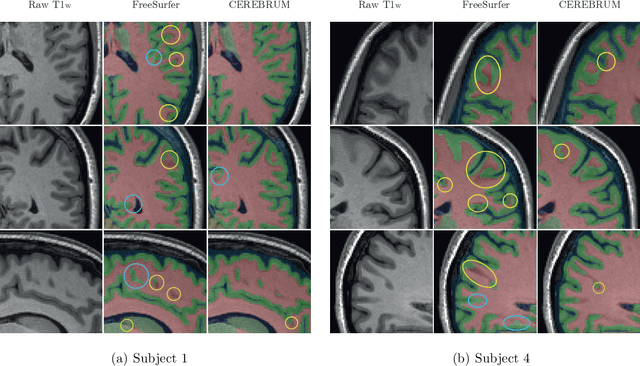

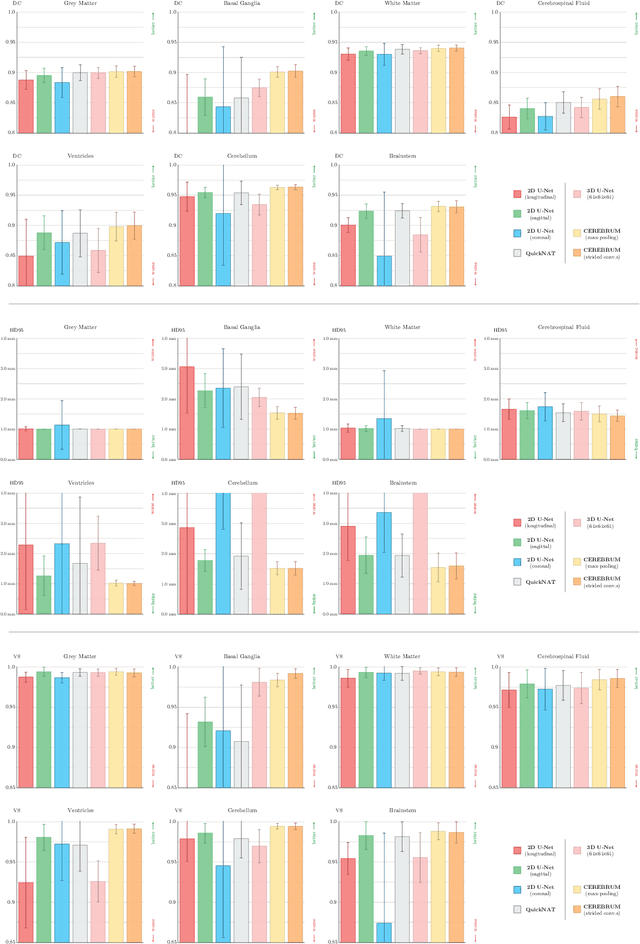

Abstract: Many functional and structural neuroimaging studies call for accurate morphometric segmentation of different brain structures starting from image intensity values of MRI scans. Current automatic (multi-) atlas-based segmentation strategies often lack accuracy on difficult-to-segment brain structures and, since these methods rely on atlas-to-scan alignment, they may require long processing times. Recently, methods based on Convolutional Neural Networks (CNNs) have made the direct analysis of out-of-the-scanner data feasible. However, current CNN-based solutions partition the test volume into 2D or 3D patches, which are processed independently. This entails a loss of global contextual information, thereby negatively impacting the segmentation accuracy. In this work, we design and test an optimised end-to-end CNN architecture that makes the exploitation of global spatial information computationally tractable, allowing a whole MRI volume to be processed at once. We adopt a weakly supervised learning strategy by exploiting a large dataset composed of 947 out-of-the-scanner (3 Tesla T1-weighted 1mm isotropic MP-RAGE 3D sequences) MR images. The resulting model is able to produce accurate multi-structure segmentation results in only a few seconds. Different quantitative measures demonstrate an improved accuracy of our solution when compared to state-of-the-art techniques. Moreover, through a randomised survey involving expert neuroscientists, we show that subjective judgements clearly prefer our solution to the widely adopted atlas-based FreeSurfer software.
