David Caruso

Meta Reality Labs Research

Benchmarking Egocentric Visual-Inertial SLAM at City Scale

Sep 30, 2025

Abstract: Precise 6-DoF simultaneous localization and mapping (SLAM) from onboard sensors is critical for wearable devices capturing egocentric data, which exhibits specific challenges, such as a wider diversity of motions and viewpoints, prevalent dynamic visual content, or long sessions affected by time-varying sensor calibration. While recent progress on SLAM has been swift, academic research is still driven by benchmarks that do not reflect these challenges or do not offer sufficiently accurate ground truth poses. In this paper, we introduce a new dataset and benchmark for visual-inertial SLAM with egocentric, multi-modal data. We record hours and kilometers of trajectories through a city center with glasses-like devices equipped with various sensors. We leverage surveying tools to obtain control points as indirect pose annotations that are metric, centimeter-accurate, and available at city scale. This makes it possible to evaluate extreme trajectories that involve walking at night or traveling in a vehicle. We show that state-of-the-art systems developed by academia are not robust to these challenges and we identify components that are responsible for this. In addition, we design tracks with different levels of difficulty to ease in-depth analysis and evaluation of less mature approaches. The dataset and benchmark are available at https://www.lamaria.ethz.ch.

Robust Indoor Localization with Ranging-IMU Fusion

Sep 15, 2023

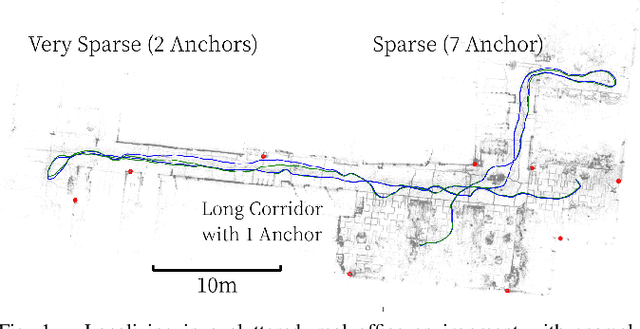

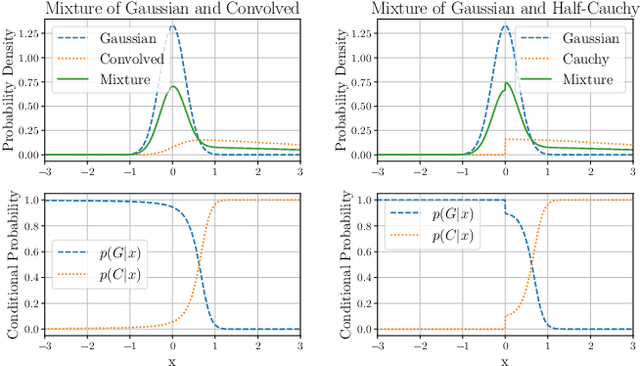

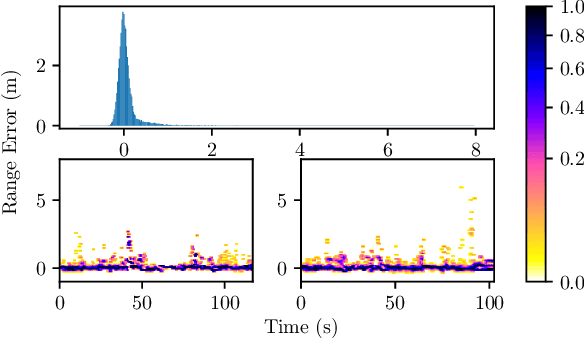

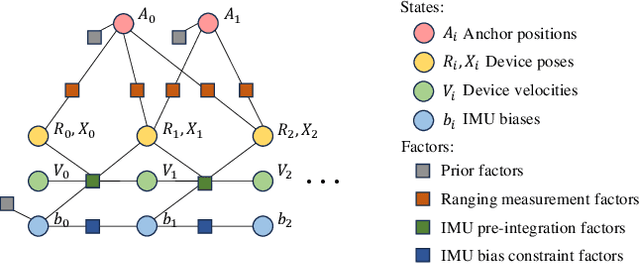

Abstract: Indoor wireless ranging localization is a promising approach for low-power and high-accuracy localization of wearable devices. A primary challenge in this domain stems from non-line-of-sight propagation of radio waves. This study tackles a fundamental issue in wireless ranging: the unpredictability of real-time multipath determination, especially in challenging conditions such as when there is no direct line of sight. We achieve this by fusing range measurements with inertial measurements obtained from a low-cost Inertial Measurement Unit (IMU). For this purpose, we introduce a novel asymmetric noise model crafted specifically for non-Gaussian multipath disturbances. Additionally, we present a novel Levenberg-Marquardt (LM)-family trust-region adaptation of the iSAM2 fusion algorithm, which is optimized for robust performance on our ranging-IMU fusion problem. We evaluate our solution in a densely occupied real office environment. Our proposed solution can achieve temporally consistent localization with an average absolute accuracy of $\sim$0.3 m in real-world settings. Furthermore, our results indicate that we can achieve comparable accuracy even with infrequent (1 Hz) range measurements.
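To make the idea of an asymmetric noise model concrete, here is a minimal sketch. It is not the model from the paper, whose exact form the abstract does not give. It assumes time-of-flight style ranging, where reflections can only lengthen the measured path, so residuals with the measured range longer than the predicted range are penalized linearly (a one-sided Huber cost), while all other residuals keep the usual quadratic Gaussian cost. The function name `asymmetric_range_cost` and the parameters `sigma` and `delta` are hypothetical.

```python
def asymmetric_range_cost(measured, predicted, sigma=0.1, delta=1.0):
    """One-sided Huber cost for a single range residual (illustrative only).

    sigma: assumed standard deviation of line-of-sight range noise, in meters.
    delta: assumed threshold, in units of sigma, above which a positive
           residual is treated as a possible multipath outlier.
    """
    # Whitened residual: positive when the measured range is too long.
    r = (measured - predicted) / sigma
    if r <= delta:
        # Negative and small positive residuals: quadratic (Gaussian) cost.
        return 0.5 * r * r
    # Large positive residuals: linear growth, so they pull less on the estimate.
    return delta * (r - 0.5 * delta)


# Same 0.5 m error magnitude, opposite signs.
cost_too_short = asymmetric_range_cost(9.5, 10.0)
cost_too_long = asymmetric_range_cost(10.5, 10.0)
```

With these assumed parameters, a range that is 0.5 m too long costs far less than one that is 0.5 m too short, which reflects the intuition that overly long ranges are plausible under multipath and overly short ones are not.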

Neural Inertial Localization

Mar 29, 2022

Abstract: This paper proposes the inertial localization problem, the task of estimating the absolute location from a sequence of inertial sensor measurements. This is an exciting and unexplored area of indoor localization research, where we present a rich dataset with 53 hours of inertial sensor data and the associated ground truth locations. We developed a solution, dubbed neural inertial localization (NILoc), which 1) uses a neural inertial navigation technique to turn inertial sensor history into a sequence of velocity vectors; then 2) employs a transformer-based neural architecture to find the device location from the sequence of velocities. We only use an IMU sensor, which is energy efficient and privacy preserving compared to WiFi, cameras, and other data sources. Our approach is significantly faster and achieves competitive results even compared with state-of-the-art methods that require a floorplan and run 20 to 30 times slower. We share our code, model and data at https://sachini.github.io/niloc.

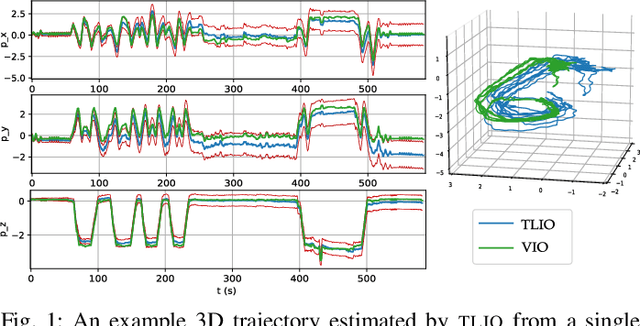

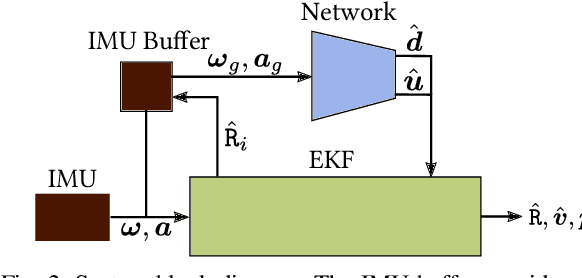

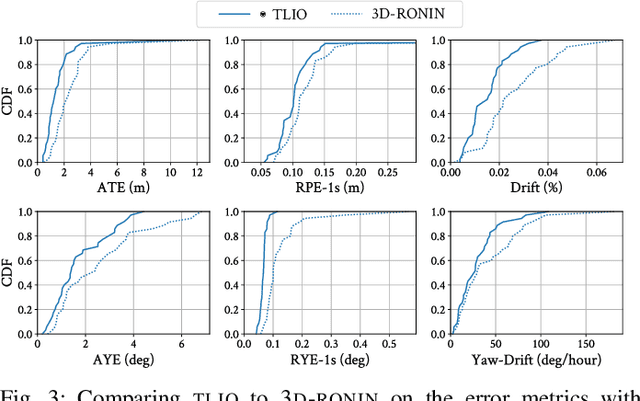

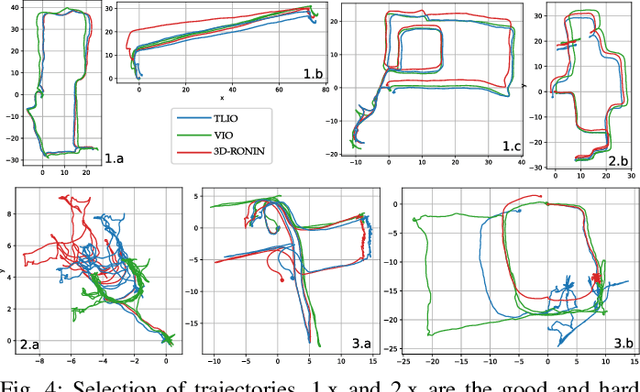

TLIO: Tight Learned Inertial Odometry

Jul 10, 2020

Abstract: In this work we propose a tightly-coupled Extended Kalman Filter framework for IMU-only state estimation. Strap-down IMU measurements provide relative state estimates based on the IMU kinematic motion model. However, the integration of measurements is sensitive to sensor bias and noise, causing significant drift within seconds. Recent research by Yan et al. (RoNIN) and Chen et al. (IONet) showed the capability of using trained neural networks to obtain accurate 2D displacement estimates from segments of IMU data and obtained good position estimates from concatenating them. This paper demonstrates a network that regresses 3D displacement estimates and their uncertainty, giving us the ability to tightly fuse the relative state measurement into a stochastic cloning EKF to solve for pose, velocity and sensor biases. We show that our network, trained with pedestrian data from a headset, can produce statistically consistent measurement and uncertainty to be used as the update step in the filter, and the tightly-coupled system outperforms velocity integration approaches in position estimates, and an AHRS attitude filter in orientation estimates.
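The sensitivity of strap-down integration to bias can be illustrated with a small sketch. It is a 1D toy example, not the TLIO filter: a stationary device reports only a constant accelerometer bias, and the samples are double-integrated with simple Euler steps. The sample rate (200 Hz), the bias (0.05 m/s^2), and the function name `integrate_position` are assumptions chosen for illustration.

```python
def integrate_position(accel_samples, dt):
    """Double-integrate 1D acceleration samples with Euler steps.

    Returns the final position in meters, starting from rest at the origin.
    """
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return position


dt = 0.005        # assumed sample period (200 Hz)
duration = 10.0   # seconds of integration
bias = 0.05       # assumed constant accelerometer bias in m/s^2

n_samples = int(round(duration / dt))
# The device is stationary, so the only "measured" acceleration is the bias.
drift = integrate_position([bias] * n_samples, dt)

# Analytically, a constant bias b gives a position error of 0.5 * b * t^2.
expected = 0.5 * bias * duration ** 2
print(f"drift after {duration:.0f} s: {drift:.3f} m (analytic {expected:.3f} m)")
```

Under these assumptions the position error grows quadratically with time and reaches roughly 2.5 m after 10 seconds, which is why an external correction, such as the learned displacement measurement described in the abstract, is needed.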
