Qi Qu

Meta Reality Labs Research

Learnable Wireless Digital Twins: Reconstructing Electromagnetic Field with Neural Representations

Sep 04, 2024

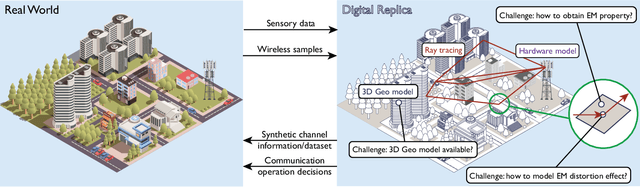

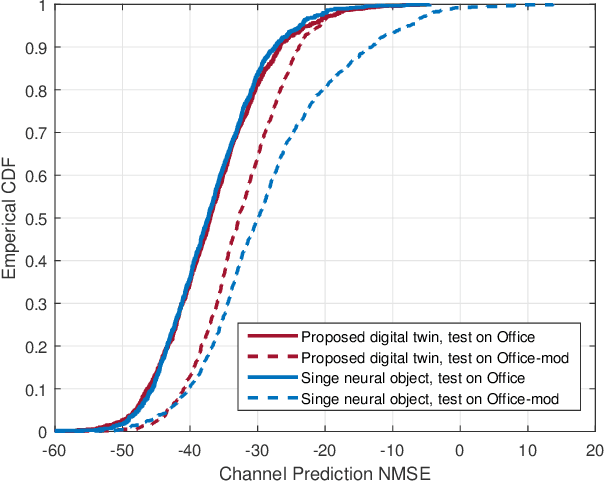

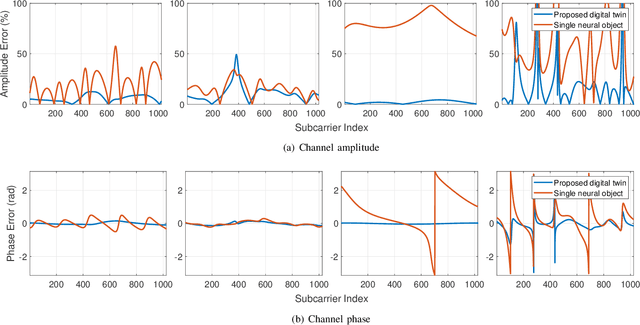

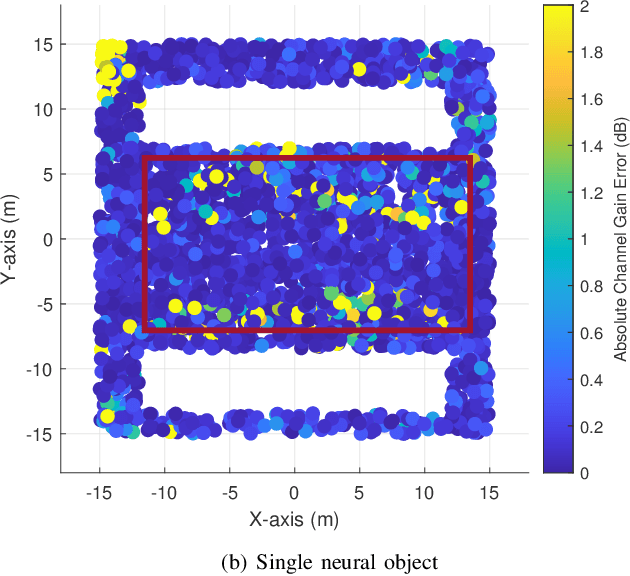

Abstract: Fully harvesting the gain of multiple-input and multiple-output (MIMO) requires accurate channel information. However, conventional channel acquisition methods mainly rely on pilot training signals, resulting in significant training overheads (time, energy, spectrum). Digital twin-aided communications have been proposed in [1] to reduce or eliminate this overhead by approximating the real world with a digital replica. Implementing a digital twin-aided communication system, however, brings new challenges. In particular, it remains open how to model the 3D environment and its associated EM properties, and how to update the environment dynamics in a coherent manner. To address these challenges, motivated by the latest advancements in computer vision, 3D reconstruction, and neural radiance fields, we propose an end-to-end deep learning framework for future generation wireless systems that can reconstruct the 3D EM field covered by a wireless access point, based on widely available crowd-sourced world-locked wireless samples between the access point and the devices. This visionary framework is grounded in classical EM theory and employs deep learning models to learn the EM properties and interaction behaviors of the objects in the environment. Simulation results demonstrate that the proposed learnable digital twin can implicitly learn the EM properties of the objects, accurately predict wireless channels, and generalize to changes in the environment, highlighting the prospect of this novel direction for future generation wireless platforms.

Can 5G NR Sidelink communications support wireless augmented reality?

Oct 03, 2023

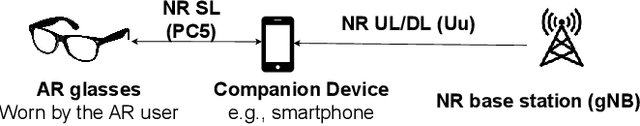

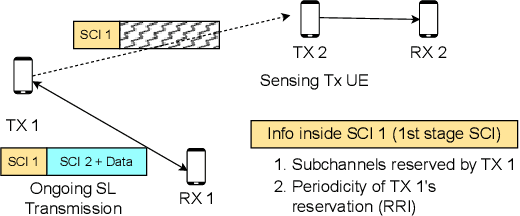

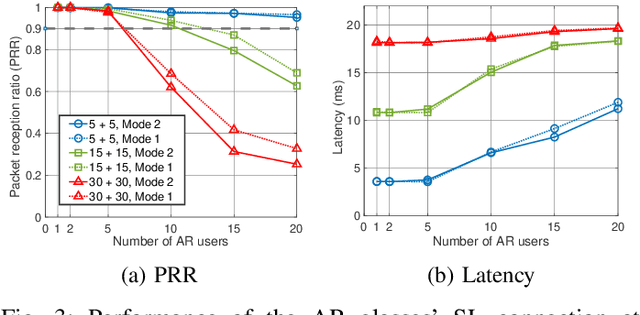

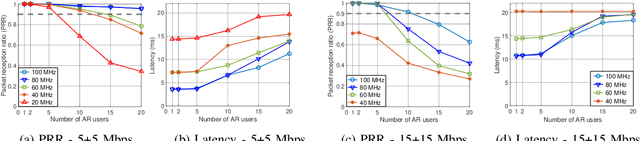

Abstract: Smart glasses that support augmented reality (AR) have the potential to become the consumer's primary medium of connecting to the future internet. For the best quality of user experience, AR glasses must have a small form factor and long battery life, while satisfying the data rate and latency requirements of AR applications. To extend the AR glasses' battery life, the computation and processing involved in AR may be offloaded to a companion device, such as a smartphone, through a wireless connection. Sidelink (SL), i.e., the D2D communication interface of 5G NR, is a potential candidate for this wireless link. In this paper, we use system-level simulations to analyze the feasibility of NR SL for supporting AR. Our simulator incorporates the PHY layer structure and MAC layer resource scheduling of 3GPP SL, standard 3GPP channel models, and MCS configurations. Our results suggest that the current SL standard specifications are insufficient for high-end AR use cases with heavy interaction but can support simpler previews and file transfers. We further propose two enhancements to SL resource allocation, which have the potential to offer significant performance improvements for AR applications.

Robust Indoor Localization with Ranging-IMU Fusion

Sep 15, 2023

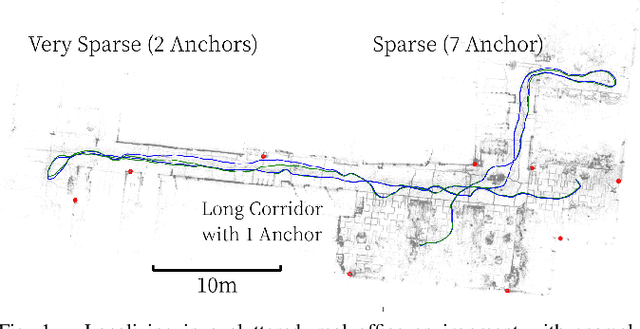

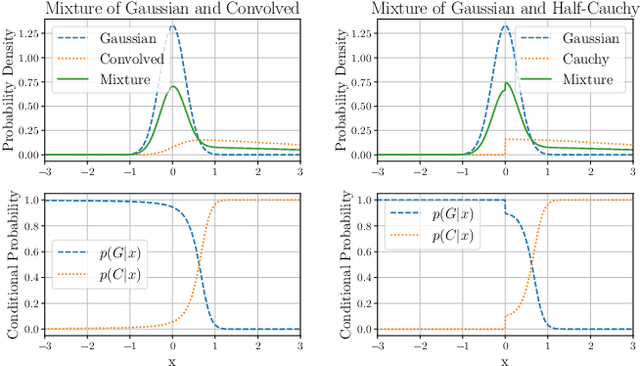

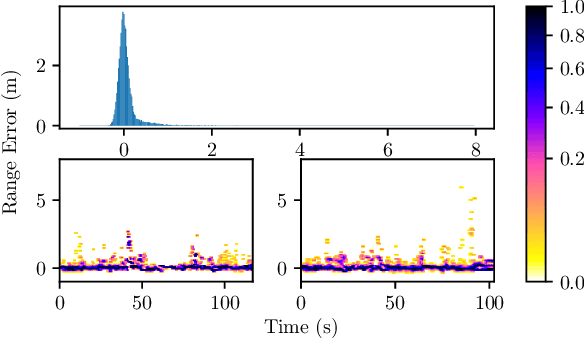

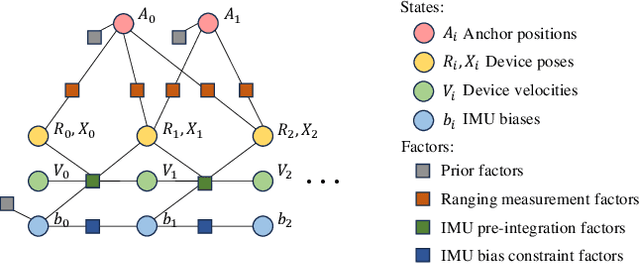

Abstract: Indoor wireless ranging localization is a promising approach for low-power and high-accuracy localization of wearable devices. A primary challenge in this domain stems from non-line-of-sight propagation of radio waves. This study tackles a fundamental issue in wireless ranging: the unpredictability of real-time multipath determination, especially in challenging conditions such as when there is no direct line of sight. We achieve this by fusing range measurements with inertial measurements obtained from a low-cost Inertial Measurement Unit (IMU). For this purpose, we introduce a novel asymmetric noise model crafted specifically for non-Gaussian multipath disturbances. Additionally, we present a novel Levenberg-Marquardt (LM)-family trust-region adaptation of the iSAM2 fusion algorithm, which is optimized for robust performance on our ranging-IMU fusion problem. We evaluate our solution in a densely occupied real office environment. Our proposed solution can achieve temporally consistent localization with an average absolute accuracy of $\sim$0.3 m in real-world settings. Furthermore, our results indicate that we can achieve comparable accuracy even with infrequent (1 Hz) range measurements.
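The idea of an asymmetric noise model for ranging can be illustrated with a small sketch. The abstract does not specify the model's form, so everything below is an assumption made for illustration: the function name `asymmetric_range_cost`, the parameters `sigma_m` and `delta_m`, and the choice of a one-sided Huber-style cost. The reasoning is that multipath can only lengthen a measured range, so positive residuals (measured minus predicted) are given a heavier, linear tail, while negative residuals keep a Gaussian-style quadratic cost.

```python
import math


def asymmetric_range_cost(residual_m, sigma_m=0.1, delta_m=0.2):
    """Cost of one range residual (measured - predicted), in metres.

    Hypothetical illustration, not the model from the paper:
    - residual <= delta_m: quadratic (Gaussian-style) cost
    - residual  > delta_m: linear growth, so long multipath-inflated
      ranges pull on the estimate less than an equally large
      too-short range would.
    """
    if residual_m <= delta_m:
        return 0.5 * (residual_m / sigma_m) ** 2
    # Linear continuation that matches value and slope at delta_m.
    return (delta_m / sigma_m ** 2) * (residual_m - 0.5 * delta_m)


# A 0.5 m overshoot (plausibly multipath) costs less than a 0.5 m undershoot.
overshoot_cost = asymmetric_range_cost(0.5)
undershoot_cost = asymmetric_range_cost(-0.5)
```

In a factor-graph setting, a cost of this shape would typically replace the symmetric Gaussian range factor; how the paper integrates its own model with iSAM2 is not described in the abstract.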

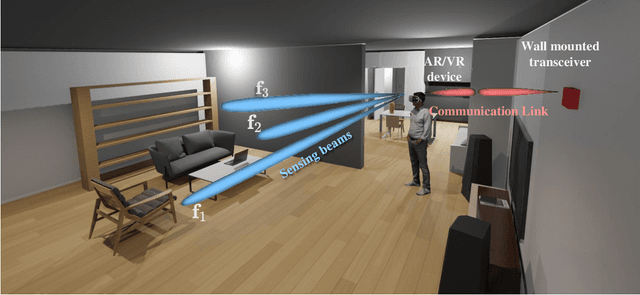

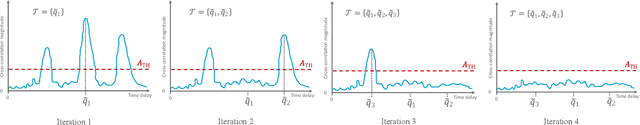

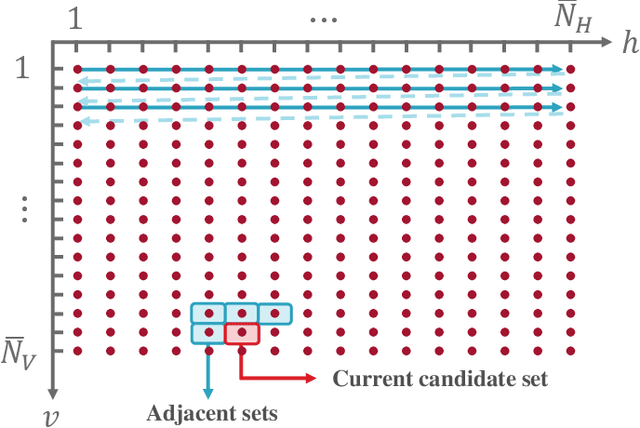

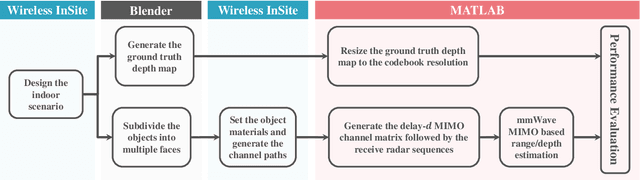

Millimeter Wave MIMO based Depth Maps for Wireless Virtual and Augmented Reality

Feb 13, 2021

Abstract: Augmented and virtual reality (AR/VR) systems are rapidly becoming key components of the wireless landscape. For an immersive AR/VR experience, these devices should be able to construct accurate depth perception of the surrounding environment. Current AR/VR devices rely heavily on RGB-D depth cameras to achieve this goal. The performance of these depth cameras, however, has clear limitations in several scenarios, such as scenes with shiny objects, dark surfaces, and abrupt color transitions. In this paper, we propose a novel solution for AR/VR depth map construction using mmWave MIMO communication transceivers. This is motivated by the deployment of advanced mmWave communication systems in future AR/VR devices for meeting the high data rate demands and by the interesting propagation characteristics of mmWave signals. Accounting for the constraints on these systems, we develop a comprehensive framework for constructing accurate and high-resolution depth maps using mmWave systems. In this framework, we develop new sensing beamforming codebook approaches that are specific to the depth map construction objective. Using these codebooks, and leveraging tools from successive interference cancellation, we develop a joint beam processing approach that can construct high-resolution depth maps using practical mmWave antenna arrays. Extensive simulation results highlight the potential of the proposed solution in building accurate depth maps. Further, these simulations show the promising gains of mmWave-based depth perception compared to RGB-based approaches in several important use cases.
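As background for the beamforming codebooks mentioned above, the following is a minimal sketch of a generic steering-vector codebook for a uniform linear array. It is not the sensing codebook proposed in the paper, whose design the abstract does not describe. The half-wavelength element spacing, the function names, and the choice of three beam directions are all assumptions made for illustration.

```python
import cmath
import math


def steering_vector(num_antennas, angle_rad):
    """Array response of a uniform linear array (assumed half-wavelength spacing)."""
    return [cmath.exp(1j * math.pi * n * math.sin(angle_rad))
            for n in range(num_antennas)]


def beam_codebook(num_antennas, angles_rad):
    """One unit-norm beamforming vector per sensing direction."""
    scale = 1.0 / math.sqrt(num_antennas)
    return [[scale * a for a in steering_vector(num_antennas, angle)]
            for angle in angles_rad]


def beam_gain(weights, angle_rad):
    """Power gain |w^H a(angle)|^2 of a beam toward a given direction."""
    response = steering_vector(len(weights), angle_rad)
    return abs(sum(w.conjugate() * a for w, a in zip(weights, response))) ** 2


# Hypothetical 8-element array with beams at -30, 0 and +30 degrees.
angles = [math.radians(deg) for deg in (-30, 0, 30)]
codebook = beam_codebook(8, angles)
broadside_gain = beam_gain(codebook[1], 0.0)
off_axis_gain = beam_gain(codebook[1], math.radians(30))
```

Each beam concentrates energy toward its own direction and suppresses others, which is what lets a receiver associate an echo's delay with a direction when sweeping the codebook across a scene.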
