Daniel Castro

Abductive reasoning as the basis to reproduce expert criteria in ECG Atrial Fibrillation identification

Feb 16, 2018

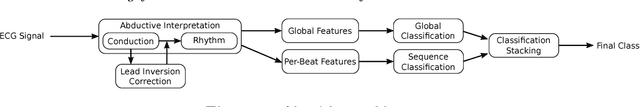

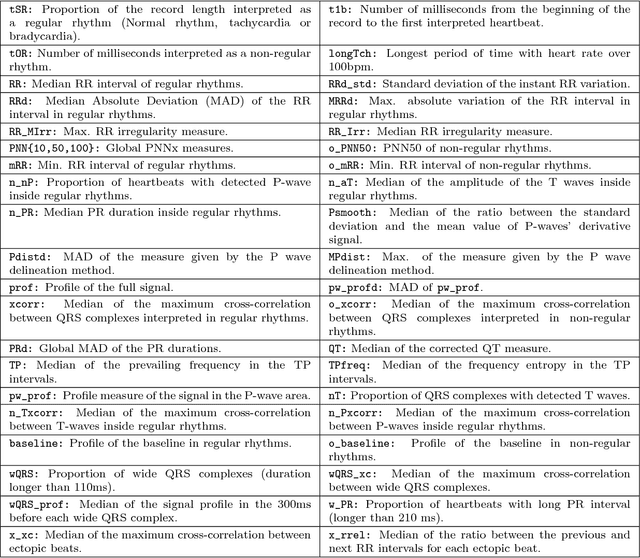

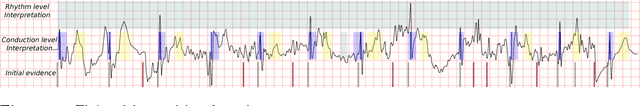

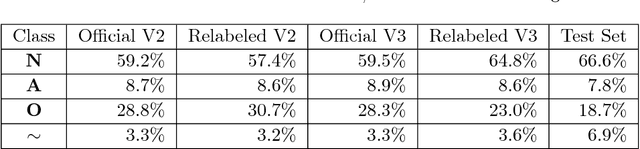

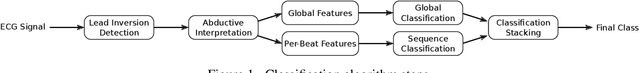

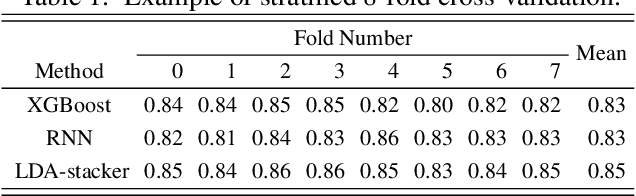

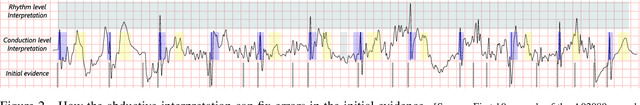

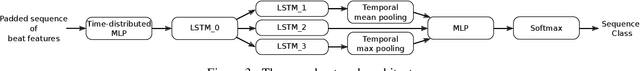

Abstract: Objective: This work aims at providing a new method for the automatic detection of atrial fibrillation, other arrhythmias and noise on short single-lead ECG signals, emphasizing the importance of the interpretability of the classification results. Approach: A morphological and rhythm description of the cardiac behavior is obtained by a knowledge-based interpretation of the signal using the *Construe* abductive framework. Then, a set of meaningful features is extracted for each individual heartbeat and as a summary of the full record. The feature distributions were used to elucidate the expert criteria underlying the labeling of the 2017 Physionet/CinC Challenge dataset, enabling a manual partial relabeling to improve the consistency of the classification rules. Finally, state-of-the-art machine learning methods are combined to provide an answer on the basis of the feature values. Main results: The proposal tied for first place in the official stage of the Challenge, with a combined $F_1$ score of 0.83, and was further improved in the follow-up stage to 0.85 with a significant simplification of the model. Significance: This approach demonstrates the potential of *Construe* to provide robust and valuable descriptions of temporal data even with significant amounts of noise and artifacts. Also, we discuss the importance of consistent classification criteria in manually labeled training datasets, and the fundamental advantages of knowledge-based approaches to formalize and validate those criteria.

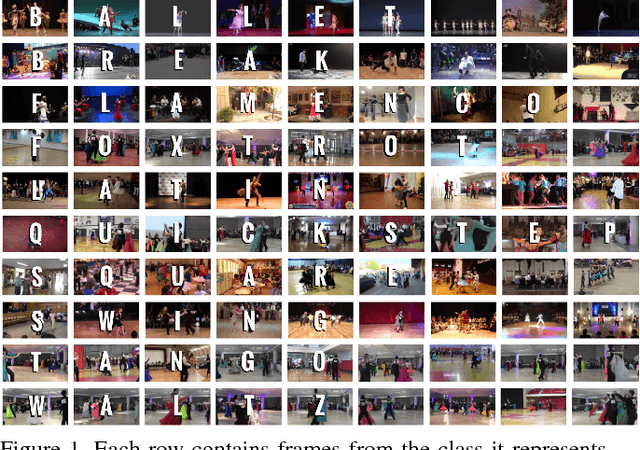

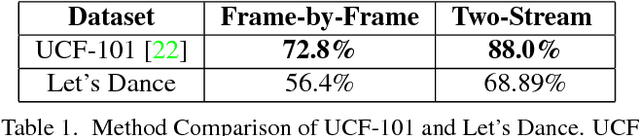

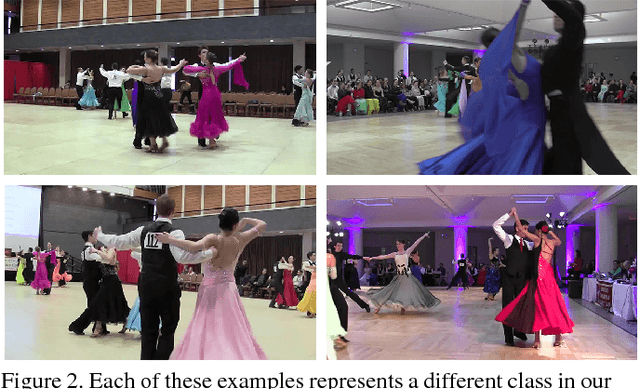

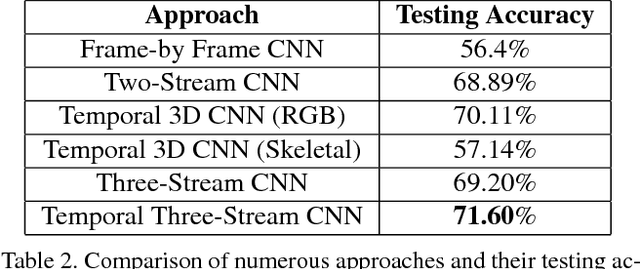

Let's Dance: Learning From Online Dance Videos

Jan 23, 2018

Abstract: In recent years, deep neural network approaches have naturally extended to the video domain, in their simplest case by aggregating per-frame classifications as a baseline for action recognition. A majority of the work in this area extends from the imaging domain, leading to visual-feature-heavy approaches on temporal data. To address this issue we introduce "Let's Dance", a 1000-video dataset (and growing) comprising 10 visually overlapping dance categories that require motion for their classification. We stress the importance of human motion as a key distinguisher in our work given that, as we show, visual information is not sufficient to classify motion-heavy categories. We compare our dataset's performance using imaging techniques with UCF-101 and demonstrate this inherent difficulty. We present a comparison of numerous state-of-the-art techniques on our dataset using three different representations (video, optical flow and multi-person pose data) in order to analyze these approaches. We discuss the motion parameterization of each of them and their value in learning to categorize online dance videos. Lastly, we release this dataset (and its three representations) for the research community to use.

Arrhythmia Classification from the Abductive Interpretation of Short Single-Lead ECG Records

Nov 10, 2017

Abstract: In this work we propose a new method for the rhythm classification of short single-lead ECG records, using a set of high-level and clinically meaningful features provided by the abductive interpretation of the records. These features include morphological and rhythm-related features that are used to build two classifiers: one that evaluates the record globally, using aggregated values for each feature; and another one that evaluates the record as a sequence, using a Recurrent Neural Network fed with the individual features for each detected heartbeat. The two classifiers are finally combined using the stacking technique, providing an answer by means of four target classes: Normal sinus rhythm, Atrial fibrillation, Other anomaly, and Noisy. The approach has been validated against the 2017 Physionet/CinC Challenge dataset, obtaining a final score of 0.83 and ranking first in the competition.

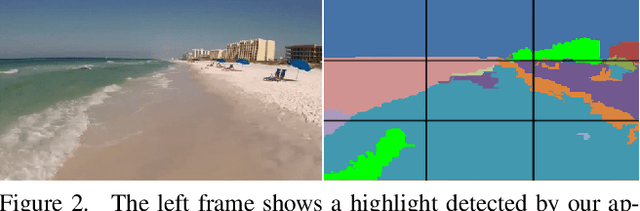

Discovering Picturesque Highlights from Egocentric Vacation Videos

Jan 18, 2016

Abstract: We present an approach for identifying picturesque highlights from large amounts of egocentric video data. Given a set of egocentric videos captured over the course of a vacation, our method analyzes the videos and looks for images that have good picturesque and artistic properties. We introduce novel techniques to automatically determine aesthetic features such as composition, symmetry and color vibrancy in egocentric videos and rank the video frames based on their photographic qualities to generate highlights. Our approach also uses contextual information such as GPS, when available, to assess the relative importance of each geographic location where the vacation videos were shot. Furthermore, we specifically leverage the properties of egocentric videos to improve our highlight detection. We demonstrate results on a new egocentric vacation dataset, which includes 26.5 hours of video taken over a 14-day vacation that spans many famous tourist destinations, and also provide results from a user study to assess our results.

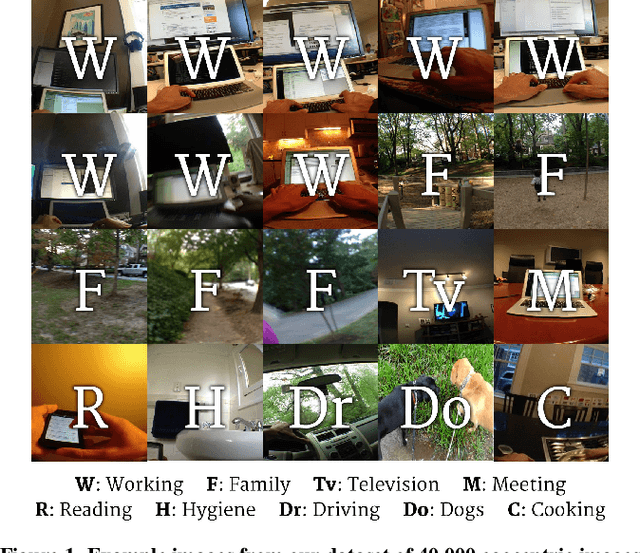

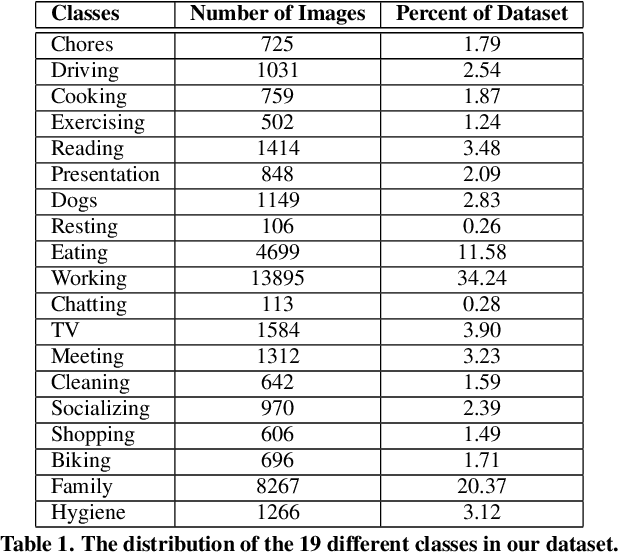

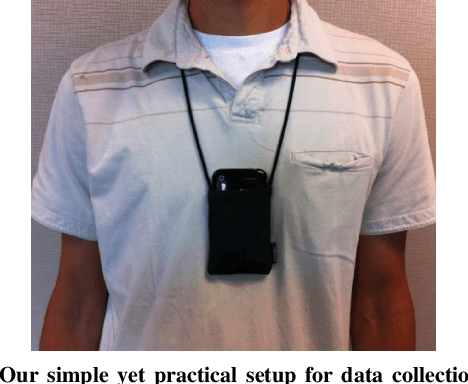

Predicting Daily Activities From Egocentric Images Using Deep Learning

Oct 06, 2015

Abstract: We present a method to analyze images taken from a passive egocentric wearable camera along with contextual information, such as time and day of week, to learn and predict everyday activities of an individual. We collected a dataset of 40,103 egocentric images over a 6-month period with 19 activity classes and demonstrate the benefit of state-of-the-art deep learning techniques for learning and predicting daily activities. Classification is conducted using a Convolutional Neural Network (CNN) with a classification method we introduce called a late fusion ensemble. This late fusion ensemble incorporates relevant contextual information and increases our classification accuracy. Our technique achieves an overall accuracy of 83.07% in predicting a person's activity across the 19 activity classes. We also demonstrate some promising results from two additional users by fine-tuning the classifier with one day of training data.

* 8 pages
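The abstract above does not say how the late fusion ensemble combines the CNN output with the contextual information. As a rough illustration of the general idea only, the sketch below shows one common form of late fusion: a weighted average of per-source class probabilities. The function names, the weights and the example probabilities are all hypothetical and are not taken from the paper.

```python
def late_fusion(prob_vectors, weights=None):
    """Fuse per-source class probabilities by weighted averaging.

    prob_vectors: list of probability lists, one per source (all the same length).
    weights: optional list of non-negative source weights (hypothetical values).
    """
    if not prob_vectors:
        raise ValueError("at least one probability vector is required")
    n_classes = len(prob_vectors[0])
    if any(len(p) != n_classes for p in prob_vectors):
        raise ValueError("all probability vectors must have the same length")
    if weights is None:
        weights = [1.0] * len(prob_vectors)
    if len(weights) != len(prob_vectors):
        raise ValueError("one weight per probability vector is required")
    total = float(sum(weights))
    # Weighted mean of each class probability across sources.
    return [
        sum(w * p[c] for w, p in zip(weights, prob_vectors)) / total
        for c in range(n_classes)
    ]


def predict(fused):
    """Return the index of the most probable class."""
    return max(range(len(fused)), key=fused.__getitem__)


# Illustrative values only: an image-based source and a context-based source.
image_probs = [0.5, 0.4, 0.1]
context_probs = [0.1, 0.7, 0.2]

fused_equal = late_fusion([image_probs, context_probs])
fused_image_heavy = late_fusion([image_probs, context_probs], weights=[9.0, 1.0])
```

With equal weights the contextual source tips the decision toward the second class; weighting the image source heavily keeps the image-only decision. A learned combiner trained on the concatenated outputs is another option that this sketch does not cover.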

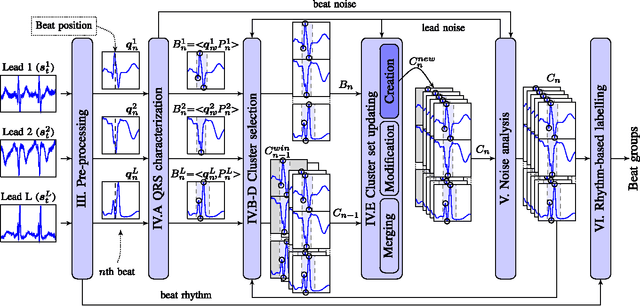

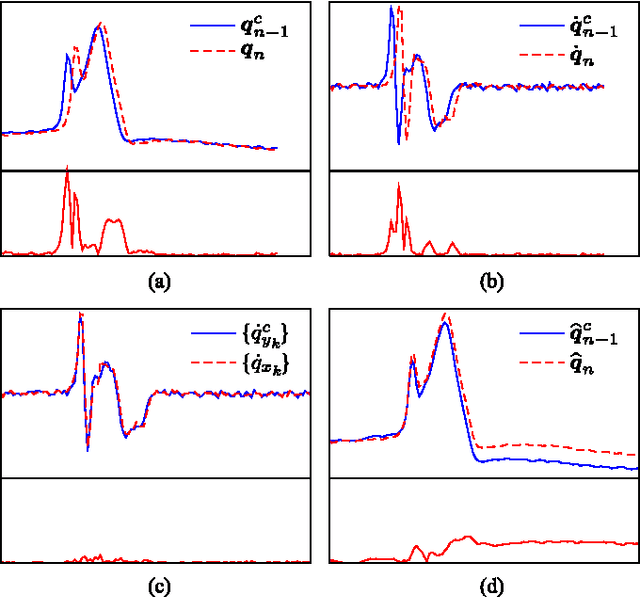

A method for context-based adaptive QRS clustering in real-time

Oct 27, 2014

Abstract: Continuous follow-up of heart condition through long-term electrocardiogram monitoring is an invaluable tool for diagnosing some cardiac arrhythmias. In this context, providing tools for quickly locating alterations of normal conduction patterns is mandatory and still remains an open issue. This work presents a real-time method for adaptive clustering of QRS complexes from multilead ECG signals that provides the set of QRS morphologies that appear during an ECG recording. The method processes the QRS complexes sequentially, grouping them into a dynamic set of clusters based on the information content of the temporal context. The clusters are represented by templates which evolve over time and adapt to the QRS morphology changes. Rules to create, merge and remove clusters are defined, along with techniques for noise detection, in order to avoid cluster proliferation. To cope with beat misalignment, Derivative Dynamic Time Warping is used. The proposed method has been validated against the MIT-BIH Arrhythmia Database and the AHA ECG Database, showing a global purity of 98.56% and 99.56%, respectively. Results show that our proposal not only provides better results than previous offline solutions but also fulfills real-time requirements.
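To make the Derivative Dynamic Time Warping step mentioned in the abstract more concrete, here is a minimal sketch of the textbook formulation: each sequence is replaced by a local derivative estimate, and classic DTW is run on the derivatives. It is not the authors' implementation; the squared-difference local cost, the unconstrained warping window and all function names are assumptions made for illustration.

```python
def derivative(seq):
    """Local derivative estimate: the average of the slope to the left
    neighbour and the slope across both neighbours. Endpoints copy the
    value of their nearest interior sample."""
    n = len(seq)
    if n < 3:
        raise ValueError("need at least 3 samples")
    d = [0.0] * n
    for i in range(1, n - 1):
        d[i] = ((seq[i] - seq[i - 1]) + (seq[i + 1] - seq[i - 1]) / 2.0) / 2.0
    d[0] = d[1]
    d[-1] = d[-2]
    return d


def dtw_distance(a, b):
    """Classic DTW with squared-difference local cost and no window constraint."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = (a[i - 1] - b[j - 1]) ** 2
            # Extend the cheapest of the three admissible predecessor cells.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def ddtw_distance(x, y):
    """DTW computed on derivative estimates instead of raw amplitudes."""
    return dtw_distance(derivative(x), derivative(y))


# Toy beat-like shapes (illustrative values, not ECG data).
beat = [0.0, 0.0, 1.0, 4.0, 1.0, 0.0, 0.0]
beat_with_offset = [v + 5.0 for v in beat]       # same shape, shifted baseline
beat_misaligned = [0.0, 1.0, 4.0, 1.0, 0.0, 0.0, 0.0]  # same shape, one sample earlier
```

Because the comparison works on slopes, a constant baseline offset does not change the distance, and the warping path absorbs part of the misalignment between beats.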
