D. A. Sasi Kiran

Spatial Relation Graph and Graph Convolutional Network for Object Goal Navigation

Aug 27, 2022

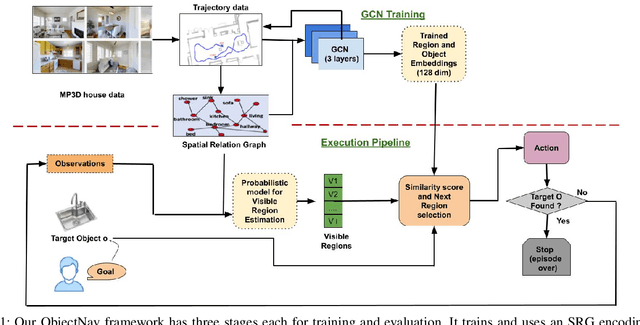

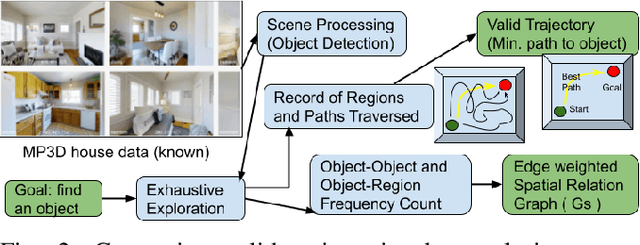

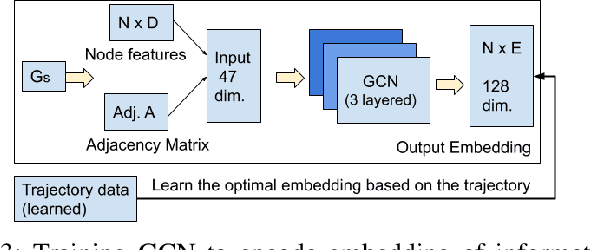

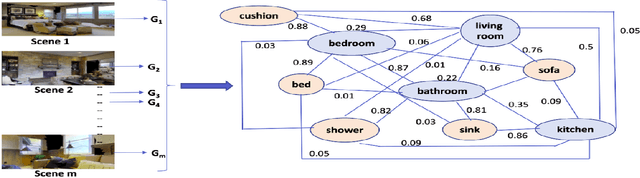

Abstract: This paper describes a framework for the object-goal navigation task, which requires a robot to find and move to the closest instance of a target object class from a random starting position. The framework uses a history of robot trajectories to learn a Spatial Relational Graph (SRG) and Graph Convolutional Network (GCN)-based embeddings for the likelihood of proximity of different semantically-labeled regions and the occurrence of different object classes in these regions. To locate a target object instance during evaluation, the robot uses Bayesian inference and the SRG to estimate the visible regions, and uses the learned GCN embeddings to rank visible regions and select the region to explore next.
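The ranking step described in this abstract can be illustrated with a minimal sketch. It assumes the standard graph-convolution rule ReLU(D^{-1/2}(A + I)D^{-1/2} H W) and a plain dot-product score against a target embedding; the abstract does not specify the layer form, the scoring function, or the features, so every name here (`gcn_layer`, `rank_regions`, the toy three-region graph) is hypothetical.

```python
import math

def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a square, non-negative adjacency matrix."""
    n = len(adj)
    # Self-loops guarantee every degree is at least 1, so no division by zero.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def gcn_layer(adj, features, weights):
    """One graph convolution: ReLU(A_norm @ H @ W)."""
    propagated = matmul(matmul(normalize_adjacency(adj), features), weights)
    return [[max(0.0, v) for v in row] for row in propagated]

def rank_regions(embeddings, target_embedding, visible):
    """Sort visible region indices by dot-product score with the target, best first."""
    def score(i):
        return sum(e * t for e, t in zip(embeddings[i], target_embedding))
    return sorted(visible, key=score, reverse=True)

# Toy graph (assumed): 0 = kitchen, 1 = living room, 2 = bedroom; edges 0-1 and 1-2.
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
region_embeddings = gcn_layer(adjacency, identity, identity)

# Hypothetical target embedding that is "kitchen-like".
target = [1.0, 0.0, 0.0]
ranking = rank_regions(region_embeddings, target, visible=[1, 2])
```

In this toy setup the living room outranks the bedroom because it is adjacent to the kitchen, which is the kind of proximity prior the SRG is meant to capture.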

Object Goal Navigation using Data Regularized Q-Learning

Aug 27, 2022

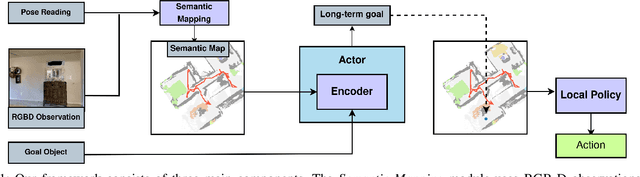

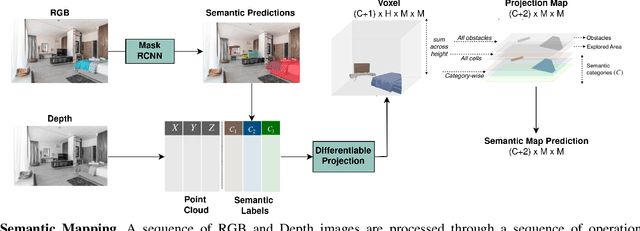

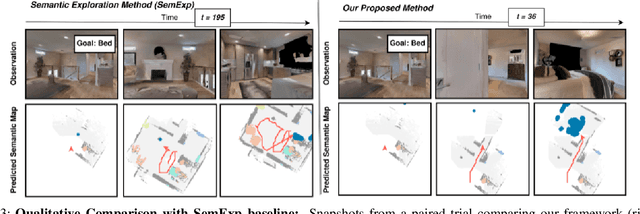

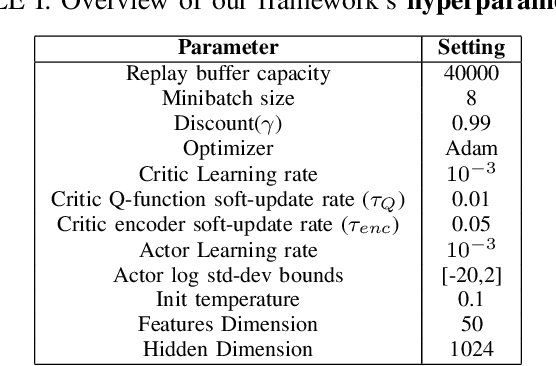

Abstract: Object Goal Navigation requires a robot to find and navigate to an instance of a target object class in a previously unseen environment. Our framework incrementally builds a semantic map of the environment over time, and then repeatedly selects a long-term goal ('where to go') based on the semantic map to locate the target object instance. Long-term goal selection is formulated as a vision-based deep reinforcement learning problem. Specifically, an Encoder Network is trained to extract high-level features from a semantic map and select a long-term goal. In addition, we incorporate data augmentation and Q-function regularization to make the long-term goal selection more effective. We report experimental results using the photo-realistic Gibson benchmark dataset in the AI Habitat 3D simulation environment to demonstrate a substantial performance improvement on standard measures in comparison with a state-of-the-art data-driven baseline.
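As a rough illustration of combining data augmentation with Q-function regularization, the sketch below averages the bootstrapped Q-target over several randomly shifted copies of the next observation. The shift augmentation, the averaging scheme, and all names (`random_shift`, `regularized_target`, `q_fn`) are assumptions made for illustration; the abstract does not state which augmentations or regularizers the framework uses.

```python
import random

def random_shift(grid, pad=1, rng=random):
    """Zero-pad a 2D grid, then crop a window of the original size at a random offset."""
    h, w = len(grid), len(grid[0])
    padded = [[0] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for i in range(h):
        for j in range(w):
            padded[i + pad][j + pad] = grid[i][j]
    # randint is inclusive at both ends, so offsets range over 0..2*pad.
    di = rng.randint(0, 2 * pad)
    dj = rng.randint(0, 2 * pad)
    return [row[dj:dj + w] for row in padded[di:di + h]]

def regularized_target(reward, next_obs, q_fn, actions, gamma=0.99, k=2,
                       augment=random_shift):
    """Average the one-step Q-target over k augmented copies of next_obs."""
    total = 0.0
    for _ in range(k):
        augmented = augment(next_obs)
        total += max(q_fn(augmented, a) for a in actions)
    return reward + gamma * total / k

# Hypothetical 3x3 semantic map and a stand-in Q-function that ignores the map.
semantic_map = [[0, 1, 0], [0, 2, 0], [0, 0, 3]]
shifted = random_shift(semantic_map)
target_value = regularized_target(
    reward=1.0, next_obs=semantic_map,
    q_fn=lambda obs, action: float(action),
    actions=[0, 1, 2], gamma=0.5, k=4,
)
```

Averaging over augmented views lowers the variance of the target, which is the usual motivation for this style of regularization when the input is an image-like map.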

Non Holonomic Collision Avoidance of Dynamic Obstacles under Non-Parametric Uncertainty: A Hilbert Space Approach

Jan 02, 2022

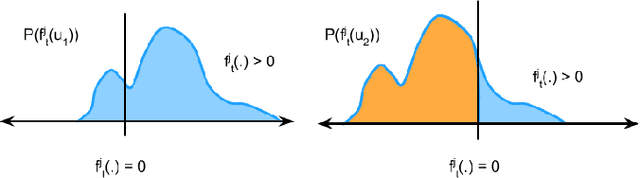

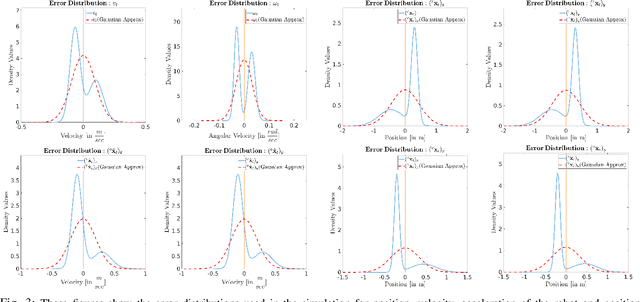

Abstract: We consider the problem of an agent/robot with non-holonomic kinematics avoiding many dynamic obstacles. State and velocity noise of both the robot and obstacles, as well as the robot's control noise, are modelled as non-parametric distributions, since Gaussian assumptions about noise models are often violated in real-world scenarios. Under these assumptions, we formulate a robust MPC that samples robot controls so as to steer the robot toward the goal state while avoiding obstacles under such non-parametric noise. In particular, the MPC incorporates a distribution matching cost that aligns the distribution of the current collision cone with a desired distribution whose samples are collision-free. This cost is posed as a distance function in the Hilbert Space, whose minimization typically results in the collision cone samples becoming collision-free. We compare against, and show a tangible performance gain over, methods that model the collision cone distribution by linearizing the Gaussian approximations of the original non-parametric state and obstacle distributions. We also show superior performance over methods that pose a chance constraint formulation of the Gaussian approximations of non-parametric noise without subjecting such approximations to further linearizations. The performance gain is shown in terms of both trajectory length and control costs, which supports the efficacy of the proposed method. To the best of our knowledge, this is the first presentation of non-holonomic collision avoidance of moving obstacles in the presence of non-parametric state, velocity and actuator noise models.
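One common way to measure a distance between two sample sets in a Hilbert space is the Maximum Mean Discrepancy (MMD) with an RBF kernel. The sketch below computes a biased estimate of the squared MMD; whether the paper's distribution matching cost takes exactly this form is an assumption, and the sample values and function names are hypothetical.

```python
import math

def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian RBF kernel between two equal-length points."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))

def mean_kernel(xs, ys, bandwidth):
    """Average kernel value over all pairs drawn from xs and ys."""
    total = sum(rbf_kernel(x, y, bandwidth) for x in xs for y in ys)
    return total / (len(xs) * len(ys))

def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of the squared MMD between two sample sets."""
    return (mean_kernel(xs, xs, bandwidth)
            + mean_kernel(ys, ys, bandwidth)
            - 2.0 * mean_kernel(xs, ys, bandwidth))

# Hypothetical 1-D samples: a desired collision-free set and two candidate sets.
desired = [(0.0,), (0.1,)]
near = [(0.5,), (0.6,)]
far = [(3.0,), (3.1,)]

cost_same = mmd_squared(desired, desired)
cost_near = mmd_squared(desired, near)
cost_far = mmd_squared(desired, far)
```

A controller minimizing such a cost would prefer controls whose resulting samples sit closer to the desired set, which here means `near` is cheaper than `far`.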
