Vanshil Shah

Non Holonomic Collision Avoidance of Dynamic Obstacles under Non-Parametric Uncertainty: A Hilbert Space Approach

Jan 02, 2022

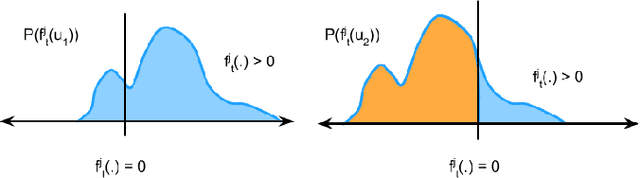

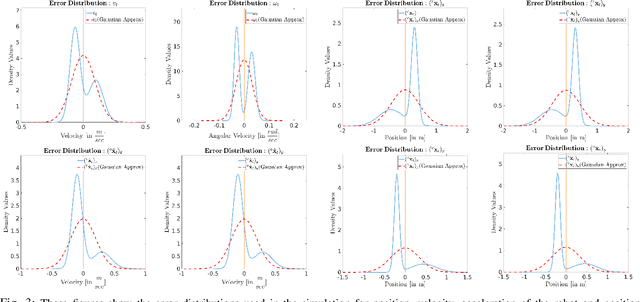

Abstract: We consider the problem of an agent/robot with non-holonomic kinematics avoiding many dynamic obstacles. State and velocity noise of both the robot and the obstacles, as well as the robot's control noise, are modelled as non-parametric distributions, since the Gaussian assumptions of noise models are often violated in real-world scenarios. Under these assumptions, we formulate a robust MPC that samples robot controls so as to align the robot with the goal state while avoiding obstacles in the presence of such non-parametric noise. In particular, the MPC incorporates a distribution matching cost that aligns the distribution of the current collision cone with a desired distribution whose samples are collision-free. This cost is posed as a distance function in the Hilbert Space, whose minimization typically results in the collision cone samples becoming collision-free. We compare against, and show tangible performance gains over, methods that model the collision cone distribution by linearizing the Gaussian approximations of the original non-parametric state and obstacle distributions. We also show superior performance over methods that pose a chance constraint formulation of the Gaussian approximations of non-parametric noise without subjecting such approximations to further linearization. The performance gain is shown in terms of both trajectory length and control cost, which supports the efficacy of the proposed method. To the best of our knowledge, this is the first presentation of non-holonomic collision avoidance of moving obstacles in the presence of non-parametric state, velocity and actuator noise models.
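The abstract describes the distribution matching cost only as a distance in a Hilbert space. One common way to realize such a distance from samples is the squared Maximum Mean Discrepancy (MMD) in a reproducing kernel Hilbert space. The sketch below is an illustration under that assumption, not the paper's implementation: the Gaussian (RBF) kernel, the bandwidth, the function names, and the 1-D toy samples standing in for collision cone values are all hypothetical choices made here.

```python
import math
import random


def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars (assumed kernel choice)."""
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def mean_kernel(xs, ys, bandwidth=1.0):
    """Average kernel value over all pairs drawn from xs and ys."""
    total = sum(rbf_kernel(a, b, bandwidth) for a in xs for b in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased sample estimate of the squared MMD between two sample sets.

    This equals the squared RKHS distance between the empirical kernel
    mean embeddings of xs and ys, so it is zero when the sets coincide.
    """
    return (
        mean_kernel(xs, xs, bandwidth)
        + mean_kernel(ys, ys, bandwidth)
        - 2.0 * mean_kernel(xs, ys, bandwidth)
    )


# Toy data (hypothetical): negative values stand in for "collision-free"
# samples, positive values for samples that violate the constraint.
rng = random.Random(0)
desired = [rng.gauss(-1.0, 0.3) for _ in range(200)]  # desired distribution
near = [rng.gauss(-1.0, 0.3) for _ in range(200)]     # similar to desired
far = [rng.gauss(2.0, 0.3) for _ in range(200)]       # far from desired

cost_near = mmd_squared(desired, near)
cost_far = mmd_squared(desired, far)
```

In this toy setup `cost_far` is larger than `cost_near`, so a controller that minimizes the cost over candidate controls would prefer controls whose resulting samples resemble the collision-free ones. A sample-based distance like this needs no Gaussian assumption on either distribution, which is the property the abstract emphasizes.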

DSLR: Dynamic to Static LiDAR Scan Reconstruction Using Adversarially Trained Autoencoder

May 26, 2021

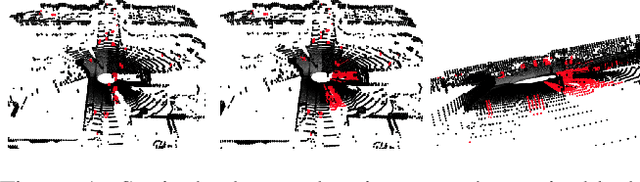

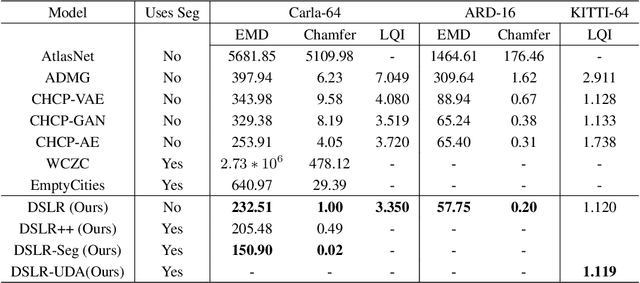

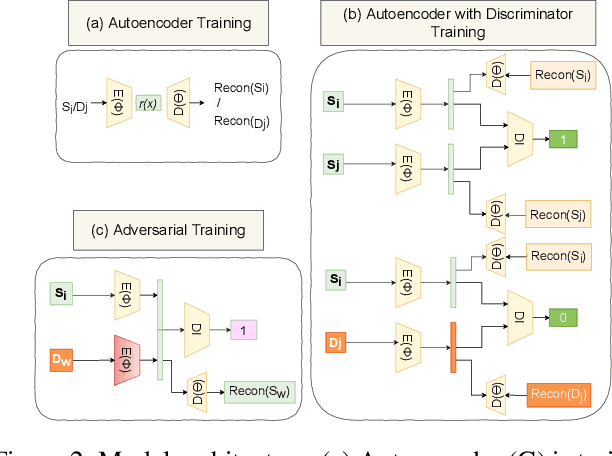

Abstract: Accurate reconstruction of static environments from LiDAR scans of scenes containing dynamic objects, which we refer to as Dynamic to Static Translation (DST), is an important area of research in Autonomous Navigation. This problem has recently been explored for visual SLAM, but to the best of our knowledge no work has attempted to address DST for LiDAR scans. The problem is of critical importance due to the widespread adoption of LiDAR in Autonomous Vehicles. We show that state-of-the-art methods developed for the visual domain perform poorly when adapted for LiDAR scans. We develop DSLR, a deep generative model which learns a mapping from a dynamic scan to its static counterpart through an adversarially trained autoencoder. Our model yields the first solution for DST on LiDAR that generates static scans without using explicit segmentation labels. DSLR cannot always be applied to real-world data due to the lack of paired dynamic-static scans. Using Unsupervised Domain Adaptation, we propose DSLR-UDA for transfer to real-world data and experimentally show that it performs well in real-world settings. Additionally, if segmentation information is available, we extend DSLR to DSLR-Seg to further improve the reconstruction quality. DSLR achieves state-of-the-art performance on simulated and real-world datasets and also shows at least a 4x improvement. We show that DSLR, unlike the existing baselines, is a practically viable model, with its reconstruction quality within the tolerable limits for tasks pertaining to autonomous navigation, such as SLAM in dynamic environments.
