Claudius Zelenka

Annotating Ambiguous Images: General Annotation Strategy for Image Classification with Real-World Biomedical Validation on Vertebral Fracture Diagnosis

Jun 21, 2023

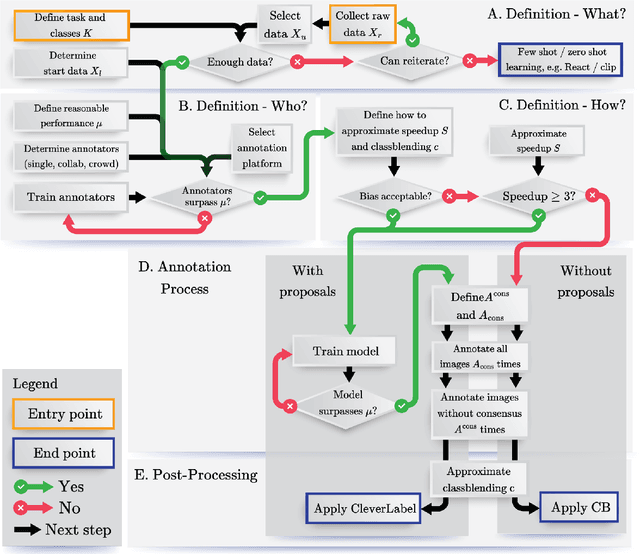

Abstract: While numerous methods exist to solve classification problems within curated datasets, these solutions often fall short in biomedical applications due to the biased or ambiguous nature of the data. These difficulties are particularly evident when inferring height reduction from vertebral data, a key component of the clinically recognized Genant score. Although strategies such as semi-supervised learning, proposal usage, and class blending may provide some resolution, a clear and superior solution remains elusive. This paper introduces a flowchart of a general strategy to address these issues. We demonstrate the application of this strategy by constructing a vertebral fracture dataset with over 300,000 annotations. This work facilitates the transition of the classification problem into clinically meaningful scores and enriches our understanding of vertebral height reduction.
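To illustrate how a measured height reduction maps onto a clinically meaningful score, here is a minimal sketch using the commonly cited thresholds of the Genant semi-quantitative grading (below 20% normal, 20-25% mild, 25-40% moderate, above 40% severe). The function name `genant_grade` and the handling of values that fall exactly on a boundary are assumptions for illustration and are not taken from the paper.

```python
def genant_grade(height_reduction: float) -> int:
    """Map a fractional vertebral height reduction (0.0 to 1.0) to a grade 0-3.

    Thresholds follow the commonly cited Genant semi-quantitative scheme.
    Boundary handling (exactly 25% or 40%) is an assumption of this sketch.
    """
    if not 0.0 <= height_reduction <= 1.0:
        raise ValueError("height_reduction must be a fraction in [0, 1]")
    if height_reduction < 0.20:
        return 0  # normal
    if height_reduction < 0.25:
        return 1  # mild
    if height_reduction <= 0.40:
        return 2  # moderate
    return 3  # severe


if __name__ == "__main__":
    for reduction in (0.10, 0.22, 0.30, 0.50):
        print(f"{reduction:.0%} height reduction -> grade {genant_grade(reduction)}")
```

Values close to a threshold are exactly the ambiguous cases the abstract refers to, since a small measurement difference changes the grade.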

Label Smarter, Not Harder: CleverLabel for Faster Annotation of Ambiguous Image Classification with Higher Quality

May 22, 2023

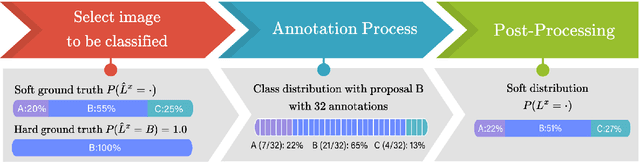

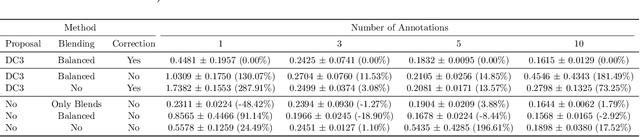

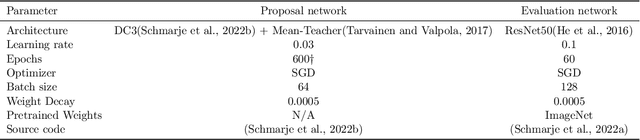

Abstract: High-quality data is crucial for the success of machine learning, but labeling large datasets is often a time-consuming and costly process. While semi-supervised learning can help mitigate the need for labeled data, label quality remains an open issue due to ambiguity and disagreement among annotators. Thus, we use proposal-guided annotations as one option that leads to more consistency between annotators. However, proposing a label increases the probability that annotators decide in favor of this specific label. This introduces a bias which we can simulate and remove. We propose a new method, CleverLabel, for Cost-effective LabEling using Validated proposal-guidEd annotations and Repaired LABELs. CleverLabel can reduce labeling costs by up to 30.0%, while achieving a relative improvement in Kullback-Leibler divergence of up to 29.8% compared to the previous state-of-the-art on a multi-domain real-world image classification benchmark. CleverLabel offers a novel solution to the challenge of efficiently labeling large datasets while also improving the label quality.
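The idea of simulating and removing a proposal bias can be sketched with a deliberately simple model. The model below is an assumption made for illustration, not the CleverLabel procedure: an annotator accepts the proposed class outright with probability `delta`, and otherwise answers according to the unbiased class distribution. The names `simulate_proposal_bias`, `remove_proposal_bias`, and `delta` are hypothetical.

```python
def simulate_proposal_bias(p, proposal, delta):
    """Return the class distribution observed when `proposal` is shown.

    Assumed model: q = delta * onehot(proposal) + (1 - delta) * p.
    """
    return [(1.0 - delta) * pk + (delta if k == proposal else 0.0)
            for k, pk in enumerate(p)]


def remove_proposal_bias(q, proposal, delta):
    """Invert the assumed model: subtract the extra mass, clip, renormalize."""
    if not 0.0 <= delta < 1.0:
        raise ValueError("delta must be in [0, 1)")
    raw = [max(0.0, qk - (delta if k == proposal else 0.0))
           for k, qk in enumerate(q)]
    total = sum(raw)
    if total == 0.0:
        # Nothing remains after the correction; fall back to the observation.
        return list(q)
    return [r / total for r in raw]


if __name__ == "__main__":
    true_dist = [0.6, 0.3, 0.1]
    biased = simulate_proposal_bias(true_dist, proposal=1, delta=0.2)
    repaired = remove_proposal_bias(biased, proposal=1, delta=0.2)
    print("biased:  ", [round(v, 3) for v in biased])
    print("repaired:", [round(v, 3) for v in repaired])
```

In practice the acceptance rate would have to be estimated from data, and the correction is only as good as the assumed bias model.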

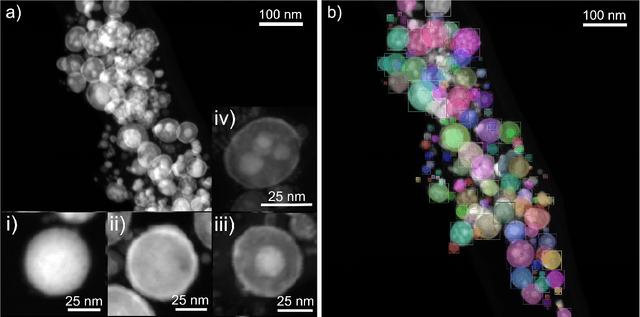

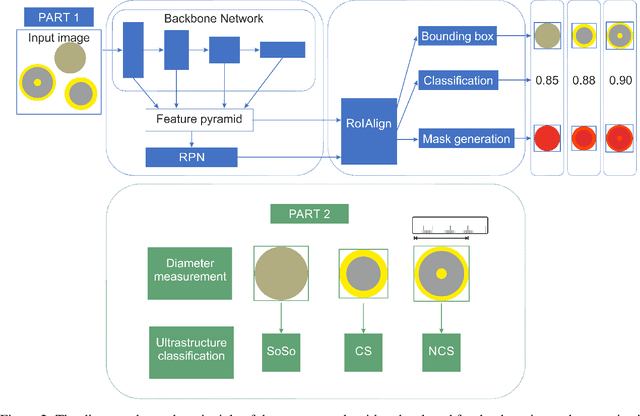

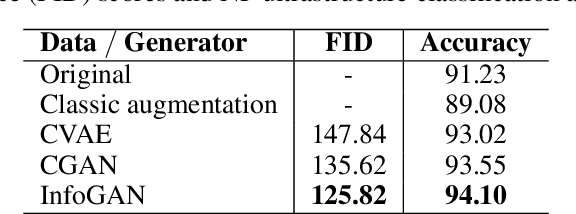

Automated Classification of Nanoparticles with Various Ultrastructures and Sizes

Jul 28, 2022

Abstract: Accurately measuring the size, morphology, and structure of nanoparticles is very important, because their properties in many applications depend strongly on these characteristics. In this paper, we present a deep-learning-based method for nanoparticle measurement and classification trained on a small data set of scanning transmission electron microscopy images. Our approach comprises two stages: localization, i.e., detection of nanoparticles, and classification, i.e., categorization of their ultrastructure. For each stage, we optimize the segmentation and classification by analyzing different state-of-the-art neural networks. We show how the generation of synthetic images, either using image processing or using various image generation neural networks, can be used to improve the results in both stages. Finally, the application of the algorithm to bimetallic nanoparticles demonstrates the automated data collection of size distributions including classification of complex ultrastructures. The developed method can be easily transferred to other material systems and nanoparticle structures.

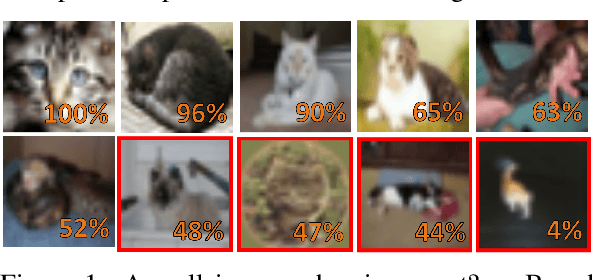

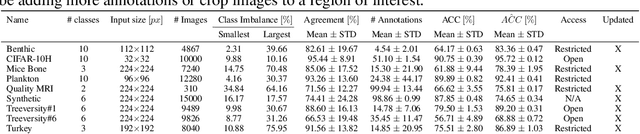

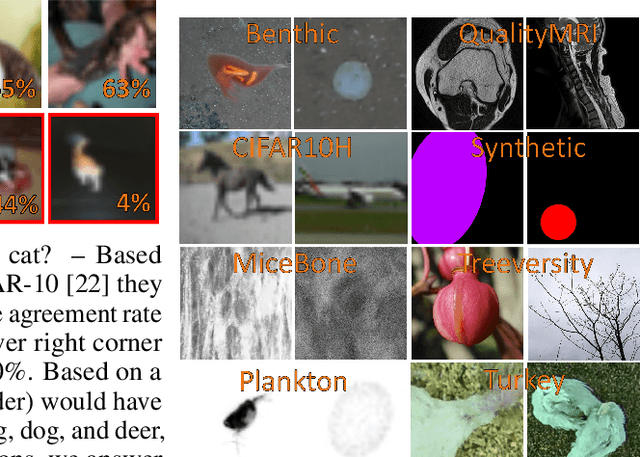

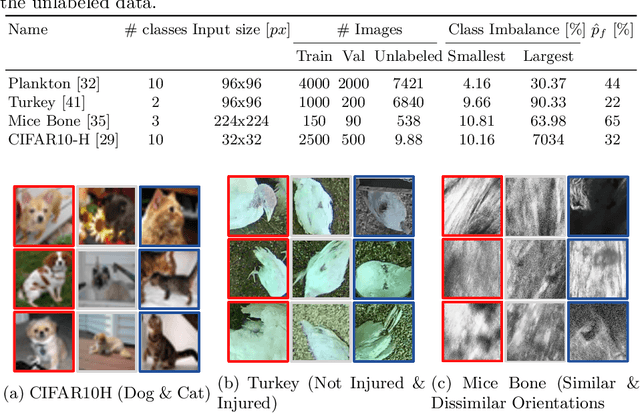

Is one annotation enough? A data-centric image classification benchmark for noisy and ambiguous label estimation

Jul 13, 2022

Abstract: High-quality data is necessary for modern machine learning. However, the acquisition of such data is difficult due to noisy and ambiguous human annotations. Aggregating such annotations to determine the label of an image leads to lower data quality. We propose a data-centric image classification benchmark with nine real-world datasets and multiple annotations per image to investigate and quantify the impact of such data quality issues. We focus on a data-centric perspective by asking how we could improve the data quality. Across thousands of experiments, we show that multiple annotations allow a better approximation of the real underlying class distribution. We identify that hard labels cannot capture the ambiguity of the data and that this might lead to the common issue of overconfident models. Based on the presented datasets, benchmark baselines, and analysis, we create multiple research opportunities for the future.
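The difference between a hard label and a soft label built from multiple annotations can be sketched as follows. This is an illustrative example, not the benchmark's code: the helper names `soft_label`, `hard_label`, and `kl_divergence` are hypothetical, and the annotations are made up.

```python
import math
from collections import Counter


def soft_label(annotations, num_classes):
    """Relative frequency of each class among the annotations of one image."""
    counts = Counter(annotations)
    n = len(annotations)
    return [counts.get(k, 0) / n for k in range(num_classes)]


def hard_label(annotations, num_classes):
    """One-hot majority vote; ties go to the class that was seen first."""
    winner = Counter(annotations).most_common(1)[0][0]
    return [1.0 if k == winner else 0.0 for k in range(num_classes)]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q), with q floored at eps to avoid division by zero."""
    return sum(pk * math.log(pk / max(qk, eps))
               for pk, qk in zip(p, q) if pk > 0.0)


if __name__ == "__main__":
    annotations = [0, 0, 1, 2]  # four annotators, three classes
    soft = soft_label(annotations, 3)
    hard = hard_label(annotations, 3)
    print("soft label:", soft)
    print("hard label:", hard)
    print("KL(soft || hard):", kl_divergence(soft, hard))
```

The hard label assigns zero probability to classes that some annotators chose, which is why its divergence from the annotation distribution is large.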

Learning Stixel-based Instance Segmentation

Jul 07, 2021

Abstract: Stixels have been successfully applied to a wide range of vision tasks in autonomous driving, recently including instance segmentation. However, due to their sparse occurrence in the image, Stixels have so far seldom served as input for Deep Learning algorithms, restricting their utility for such approaches. In this work we present StixelPointNet, a novel method to perform fast instance segmentation directly on Stixels. By regarding the Stixel representation as unstructured data similar to point clouds, architectures like PointNet are able to learn features from Stixels. We use a bounding box detector to propose candidate instances, for which the relevant Stixels are extracted from the input image. On these Stixels, a PointNet model learns binary segmentations, which we then unify throughout the whole image in a final selection step. StixelPointNet achieves state-of-the-art performance on Stixel-level, is considerably faster than pixel-based segmentation methods, and shows that with our approach the Stixel domain can be introduced to many new 3D Deep Learning tasks.

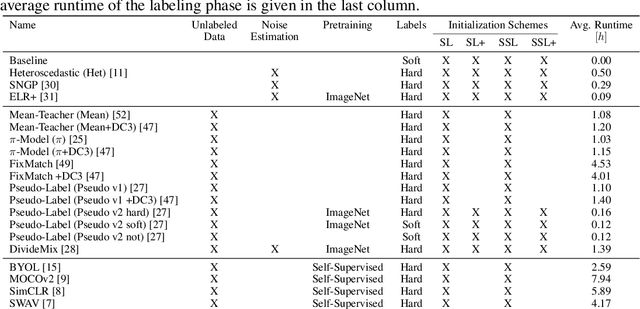

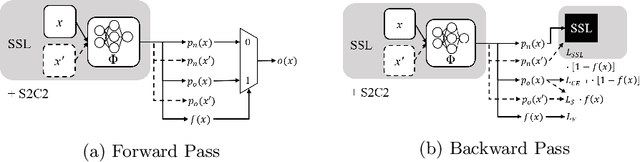

S2C2 - An orthogonal method for Semi-Supervised Learning on fuzzy labels

Jun 30, 2021

Abstract: Semi-Supervised Learning (SSL) can decrease the amount of required labeled image data and thus the cost of deep learning. Most SSL methods only consider a clear distinction between classes, but in many real-world datasets this clear distinction is not given due to intra- or interobserver variability. This variability can lead to different annotations per image. Thus, many images have ambiguous annotations and their labels need to be considered "fuzzy". This fuzziness of labels must be addressed as it will limit the performance of SSL and deep learning in general. We propose Semi-Supervised Classification & Clustering (S2C2), which can extend many deep SSL algorithms. S2C2 estimates the fuzziness of a label and applies SSL as classification to data with certain labels while creating distinct clusters for images with similar but fuzzy labels. We show that S2C2 yields a median 7.4% better F1-score for classifications and a 5.4% lower inner distance of clusters across multiple SSL algorithms and datasets, while being more interpretable due to the fuzziness estimation of our method. Overall, a combination of Semi-Supervised Learning with our method S2C2 leads to better handling of the fuzziness of labels and thus of real-world datasets.

2D and 3D Segmentation of uncertain local collagen fiber orientations in SHG microscopy

Jul 30, 2019

Abstract: Collagen fiber orientations in bones, visible with Second Harmonic Generation (SHG) microscopy, represent the inner structure and its alteration due to influences like cancer. While analyses of these orientations are valuable for medical research, it is not feasible to analyze the required large amounts of local orientations manually. Since the borders of these local orientations are uncertain, only rough regions can be segmented instead of a pixel-wise segmentation. We analyze the effect of these uncertain borders on human performance in a user study. Furthermore, we compare a variety of 2D and 3D methods, ranging from classical approaches like Fourier analysis to state-of-the-art deep neural networks, for the classification of local fiber orientations. We present a general way to use pretrained 2D weights in 3D neural networks, such as Inception-ResNet-3D, a 3D extension of Inception-ResNet-v2. In a 10-fold cross-validation, our two-stage segmentation based on Inception-ResNet-3D and transferred 2D ImageNet weights achieves human-comparable accuracy.
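One widely used way to reuse pretrained 2D convolution weights in a 3D network is kernel "inflation": the 2D kernel is repeated along the new depth axis and divided by the depth, so that a volume that is constant along depth produces the same response as the original 2D filter. The abstract does not say which scheme the authors use, so the sketch below is an assumption for illustration; `inflate_kernel` is a hypothetical name, and a single-channel kernel is used to keep the example dependency-free.

```python
def inflate_kernel(kernel2d, depth):
    """Turn a 2D kernel [kh][kw] into a 3D kernel [depth][kh][kw].

    Each depth slice holds the 2D weights scaled by 1/depth, so the slices
    sum back to the original 2D kernel.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    return [[[w / depth for w in row] for row in kernel2d]
            for _ in range(depth)]


if __name__ == "__main__":
    kernel2d = [[1.0, 2.0],
                [3.0, 4.0]]
    kernel3d = inflate_kernel(kernel2d, depth=4)
    # Summing the slices along depth recovers the pretrained 2D weights.
    recovered = [[sum(kernel3d[d][i][j] for d in range(4)) for j in range(2)]
                 for i in range(2)]
    print(recovered)
```

The scaling by 1/depth keeps activation magnitudes comparable to those of the 2D network at initialization.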

Restoration of Images with Wavefront Aberrations

Apr 02, 2017

Abstract: This contribution deals with image restoration in optical systems with coherent illumination, which is an important topic in astronomy, coherent microscopy and radar imaging. Such optical systems suffer from wavefront distortions, which are caused by imperfect imaging components and conditions. Known image restoration algorithms work well for incoherent imaging, but they fail for coherent images. In this paper a novel wavefront correction algorithm is presented, which allows image restoration under coherent conditions. In most coherent imaging systems, especially in astronomy, the wavefront deformation is known. Using this information, the proposed algorithm allows a high-quality restoration even in case of severe wavefront distortions. We present two versions of this algorithm, which are an evolution of the Gerchberg-Saxton and the Hybrid-Input-Output algorithm. The algorithm is verified on simulated and real microscopic images.
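For orientation, the classic Gerchberg-Saxton iteration that the proposed algorithm builds on can be sketched as below. This is the textbook phase-retrieval loop on a 1D signal, not the paper's wavefront-corrected variant; the naive DFT and the names `dft` and `gerchberg_saxton` are choices of this sketch, made to keep it free of external dependencies.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (the inverse divides by n)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n)
                for t in range(n))
        out.append(s / n if inverse else s)
    return out


def gerchberg_saxton(obj_amp, fourier_amp, iterations=50):
    """Estimate the object-plane phase from amplitudes in both planes.

    Returns the phase estimate and the Fourier-amplitude error recorded at
    the start of each iteration.
    """
    phase = [0.0] * len(obj_amp)
    errors = []
    for _ in range(iterations):
        # Impose the known object amplitude on the current phase estimate.
        g = [a * cmath.exp(1j * p) for a, p in zip(obj_amp, phase)]
        G = dft(g)
        errors.append(sum((abs(Gk) - A) ** 2 for Gk, A in zip(G, fourier_amp)))
        # Impose the known Fourier amplitude, keep the computed phase.
        G_fixed = [A * cmath.exp(1j * cmath.phase(Gk))
                   for Gk, A in zip(G, fourier_amp)]
        phase = [cmath.phase(v) for v in dft(G_fixed, inverse=True)]
    return phase, errors


if __name__ == "__main__":
    amp = [1.0, 0.8, 1.2, 0.5, 0.9, 1.1, 0.7, 1.0]
    true_phase = [0.3, -1.1, 2.0, 0.5, -0.7, 1.4, 0.0, -2.2]
    target = [abs(v) for v in dft([a * cmath.exp(1j * p)
                                   for a, p in zip(amp, true_phase)])]
    _, errs = gerchberg_saxton(amp, target, iterations=30)
    print("first error:", errs[0], "last error:", errs[-1])
```

The recorded error does not increase from one iteration to the next, although the loop can stagnate before reaching zero, which is one motivation for variants such as Hybrid-Input-Output.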
