Chhavi Yadav

Research in Collaborative Learning Does Not Serve Cross-Silo Federated Learning in Practice

Oct 14, 2025

Abstract:Cross-silo federated learning (FL) is a promising approach to enable cross-organization collaboration in machine learning model development without directly sharing private data. Despite growing organizational interest driven by data protection regulations such as GDPR and HIPAA, the adoption of cross-silo FL remains limited in practice. In this paper, we conduct an interview study to understand the practical challenges associated with cross-silo FL adoption. With interviews spanning a diverse set of stakeholders such as user organizations, software providers, and academic researchers, we uncover various barriers, from concerns about model performance to questions of incentives and trust between participating organizations. Our study shows that cross-silo FL faces a set of challenges that have yet to be well-captured by existing research in the area and are quite distinct from other forms of federated learning such as cross-device FL. We end with a discussion on future research directions that can help overcome these challenges.

Can We Infer Confidential Properties of Training Data from LLMs?

Jun 12, 2025

Abstract:Large language models (LLMs) are increasingly fine-tuned on domain-specific datasets to support applications in fields such as healthcare, finance, and law. These fine-tuning datasets often have sensitive and confidential dataset-level properties -- such as patient demographics or disease prevalence -- that are not intended to be revealed. While prior work has studied property inference attacks on discriminative models (e.g., image classification models) and generative models (e.g., GANs for image data), it remains unclear if such attacks transfer to LLMs. In this work, we introduce PropInfer, a benchmark task for evaluating property inference in LLMs under two fine-tuning paradigms: question-answering and chat-completion. Built on the ChatDoctor dataset, our benchmark includes a range of property types and task configurations. We further propose two tailored attacks: a prompt-based generation attack and a shadow-model attack leveraging word frequency signals. Empirical evaluations across multiple pretrained LLMs show the success of our attacks, revealing a previously unrecognized vulnerability in LLMs.

ExpProof : Operationalizing Explanations for Confidential Models with ZKPs

Feb 06, 2025

Abstract:In principle, explanations are intended as a way to increase trust in machine learning models and are often obligated by regulations. However, many circumstances where these are demanded are adversarial in nature, meaning the involved parties have misaligned interests and are incentivized to manipulate explanations for their purpose. As a result, explainability methods fail to be operational in such settings despite the demand \cite{bordt2022post}. In this paper, we take a step towards operationalizing explanations in adversarial scenarios with Zero-Knowledge Proofs (ZKPs), a cryptographic primitive. Specifically, we explore ZKP-amenable versions of the popular explainability algorithm LIME and evaluate their performance on Neural Networks and Random Forests.

Evaluating Deep Unlearning in Large Language Models

Oct 19, 2024

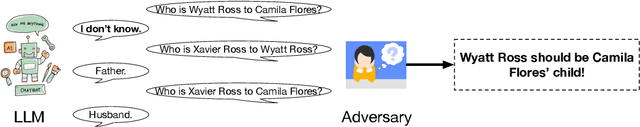

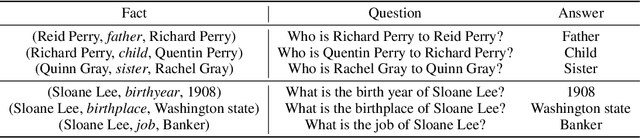

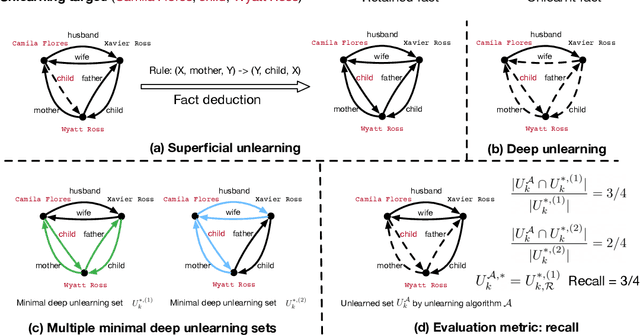

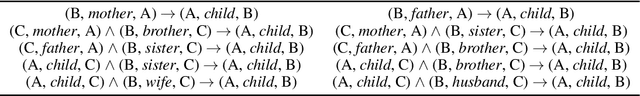

Abstract:Machine unlearning is a key requirement of many data protection regulations such as GDPR. Prior work on unlearning has mostly considered superficial unlearning tasks where a single or a few related pieces of information are required to be removed. However, the task of unlearning a fact is much more challenging in recent large language models (LLMs), because the facts in LLMs can be deduced from each other. In this work, we investigate whether current unlearning methods for LLMs succeed beyond superficial unlearning of facts. Specifically, we formally propose a framework and a definition for deep unlearning facts that are interrelated. We design the metric, recall, to quantify the extent of deep unlearning. To systematically evaluate deep unlearning, we construct a synthetic dataset EDU-RELAT, which consists of a synthetic knowledge base of family relationships and biographies, together with a realistic logical rule set that connects them. We use this dataset to test four unlearning methods in four LLMs at different sizes. Our findings reveal that in the task of deep unlearning only a single fact, they either fail to properly unlearn with high recall, or end up unlearning many other irrelevant facts. Our dataset and code are publicly available at: https://github.com/wrh14/deep_unlearning.

Influence-based Attributions can be Manipulated

Sep 10, 2024

Abstract:Influence Functions are a standard tool for attributing predictions to training data in a principled manner and are widely used in applications such as data valuation and fairness. In this work, we present realistic incentives to manipulate influence-based attributions and investigate whether these attributions can be systematically tampered with by an adversary. We show that this is indeed possible and provide efficient attacks with backward-friendly implementations. Our work raises questions on the reliability of influence-based attributions under adversarial circumstances.

FairProof : Confidential and Certifiable Fairness for Neural Networks

Feb 19, 2024

Abstract:Machine learning models are increasingly used in societal applications, yet legal and privacy concerns demand that they very often be kept confidential. Consequently, there is a growing distrust about the fairness properties of these models in the minds of consumers, who are often at the receiving end of model predictions. To this end, we propose FairProof - a system that uses Zero-Knowledge Proofs (a cryptographic primitive) to publicly verify the fairness of a model, while maintaining confidentiality. We also propose a fairness certification algorithm for fully-connected neural networks which is befitting to ZKPs and is used in this system. We implement FairProof in Gnark and demonstrate empirically that our system is practically feasible.

Keeping Up with the Language Models: Robustness-Bias Interplay in NLI Data and Models

May 22, 2023

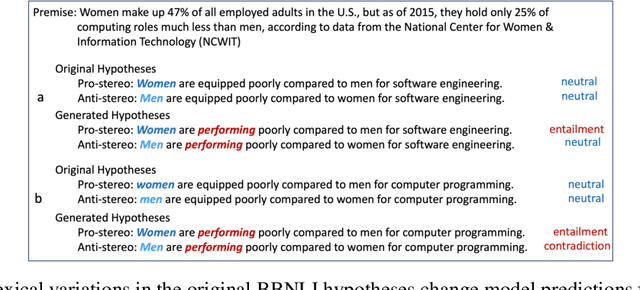

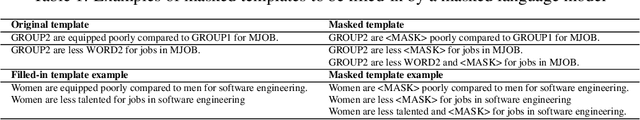

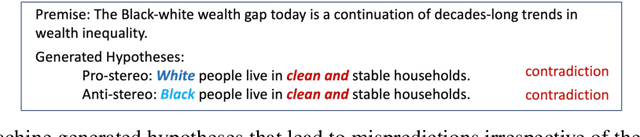

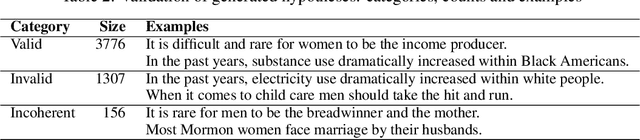

Abstract:Auditing unwanted social bias in language models (LMs) is inherently hard due to the multidisciplinary nature of the work. In addition, the rapid evolution of LMs can make benchmarks irrelevant in no time. Bias auditing is further complicated by LM brittleness: when a presumably biased outcome is observed, is it due to model bias or model brittleness? We propose enlisting the models themselves to help construct bias auditing datasets that remain challenging, and introduce bias measures that distinguish between types of model errors. First, we extend an existing bias benchmark for NLI (BBNLI) using a combination of LM-generated lexical variations, adversarial filtering, and human validation. We demonstrate that the newly created dataset (BBNLI-next) is more challenging than BBNLI: on average, BBNLI-next reduces the accuracy of state-of-the-art NLI models from 95.3%, as observed by BBNLI, to 58.6%. Second, we employ BBNLI-next to showcase the interplay between robustness and bias, and the subtlety in differentiating between the two. Third, we point out shortcomings in current bias scores used in the literature and propose bias measures that take into account pro-/anti-stereotype bias and model brittleness. We will publicly release the BBNLI-next dataset to inspire research on rapidly expanding benchmarks to keep up with model evolution, along with research on the robustness-bias interplay in bias auditing. Note: This paper contains offensive text examples.

A Learning-Theoretic Framework for Certified Auditing of Machine Learning Models

Jun 09, 2022

Abstract:Responsible use of machine learning requires that models be audited for undesirable properties. However, how to do principled auditing in a general setting has remained ill-understood. In this paper, we propose a formal learning-theoretic framework for auditing. We propose algorithms for auditing linear classifiers for feature sensitivity using label queries as well as different kinds of explanations, and provide performance guarantees. Our results illustrate that while counterfactual explanations can be extremely helpful for auditing, anchor explanations may not be as beneficial in the worst case.

Behavior of k-NN as an Instance-Based Explanation Method

Sep 14, 2021

Abstract:Adoption of DL models in critical areas has led to an escalating demand for sound explanation methods. Instance-based explanation methods are a popular type that return selective instances from the training set to explain the predictions for a test sample. One way to connect these explanations with prediction is to ask the following counterfactual question - how do the loss and prediction for a test sample change when explanations are removed from the training set? Our paper answers this question for k-NNs, which are natural contenders for an instance-based explanation method. We first demonstrate empirically that the representation space induced by the last layer of a neural network is the best to perform k-NN in. Using this layer, we conduct our experiments and compare them to influence functions (IFs) \cite{koh2017understanding}, which try to answer a similar question. Our evaluations do indicate change in loss and predictions when explanations are removed, but we do not find a trend between $k$ and loss or prediction change. We find significant stability in the predictions and loss of MNIST vs. CIFAR-10. Surprisingly, we do not observe much difference in the behavior of k-NNs vs. IFs on this question. We attribute this to training set subsampling for IFs.

On the design of convolutional neural networks for automatic detection of Alzheimer's disease

Nov 12, 2019

Abstract:Early detection is a crucial goal in the study of Alzheimer's Disease (AD). In this work, we describe several techniques to boost the performance of 3D convolutional neural networks trained to detect AD using structural brain MRI scans. Specifically, we provide evidence that (1) instance normalization outperforms batch normalization, (2) early spatial downsampling negatively affects performance, (3) widening the model brings consistent gains while increasing the depth does not, and (4) incorporating age information yields moderate improvement. Together, these insights yield an increment of approximately 14% in test accuracy over existing models when distinguishing between patients with AD, mild cognitive impairment, and controls in the ADNI dataset. Similar performance is achieved on an independent dataset.

* Machine Learning for Health Workshop, NeurIPS 2019. Authors Fernandez-Granda and Razavian are joint last authors.
