Chao Cai

Tracing Human Stress from Physiological Signals using UWB Radar

Oct 14, 2024

Abstract:Stress tracing is an important research domain that supports many applications, such as health care and stress management, and its closest related works are derived from stress detection. However, these existing works do not adequately address two important challenges facing stress detection. First, most of these studies ask users to wear physiological sensors to detect their stress states, which degrades the user experience. Second, these studies fail to effectively utilize multimodal physiological signals, which results in less satisfactory detection results. This paper formally defines the stress tracing problem, which emphasizes the continuous detection of human stress states. A novel deep stress tracing method, named DST, is presented. DST traces human stress based on physiological signals collected by a noncontact ultrawideband radar, which is friendlier to users when collecting their physiological signals. In DST, a signal extraction module is first designed to robustly extract multimodal physiological signals from the raw RF data of the radar, even in the presence of body movement. Afterward, a multimodal fusion module ensures that the extracted multimodal physiological signals can be effectively fused and utilized. Extensive experiments are conducted on three real-world datasets, including one self-collected dataset and two public datasets. Experimental results show that the proposed DST method significantly outperforms all the baselines in terms of tracing human stress states. On average, DST provides a 6.31% increase in detection accuracy across all datasets compared with the best baselines.

Adv-4-Adv: Thwarting Changing Adversarial Perturbations via Adversarial Domain Adaptation

Dec 04, 2021

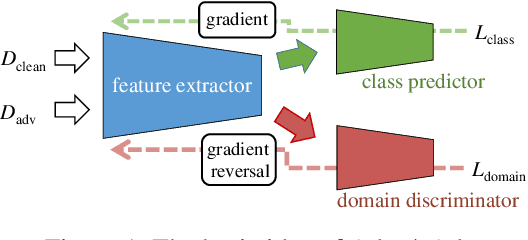

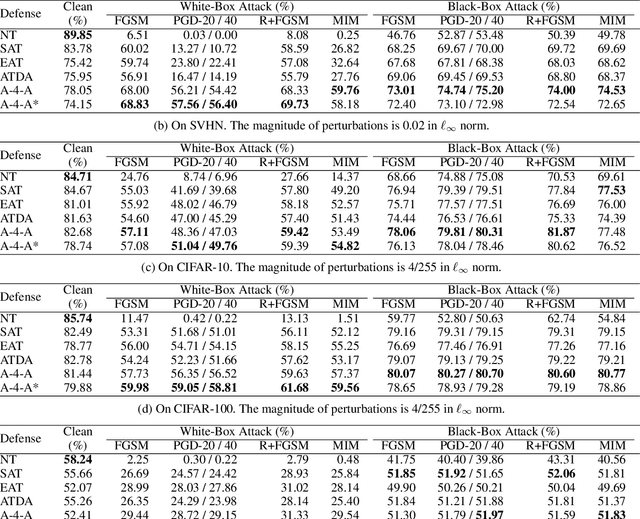

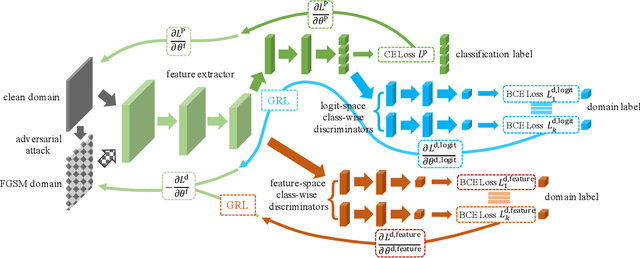

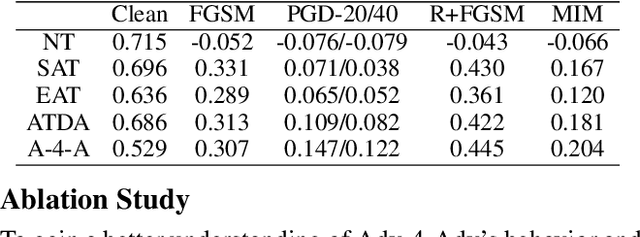

Abstract:Whereas adversarial training can be useful against specific adversarial perturbations, it has also proven ineffective in generalizing towards attacks deviating from those used for training. However, we observe that this ineffectiveness is intrinsically connected to domain adaptability, another crucial issue in deep learning for which adversarial domain adaptation appears to be a promising solution. Consequently, we propose Adv-4-Adv as a novel adversarial training method that aims to retain robustness against unseen adversarial perturbations. Essentially, Adv-4-Adv treats attacks incurring different perturbations as distinct domains, and by leveraging the power of adversarial domain adaptation, it aims to remove the domain/attack-specific features. This forces a trained model to learn a robust domain-invariant representation, which in turn enhances its generalization ability. Extensive evaluations on Fashion-MNIST, SVHN, CIFAR-10, and CIFAR-100 demonstrate that a model trained by Adv-4-Adv on samples crafted by simple attacks (e.g., FGSM) generalizes to more advanced attacks (e.g., PGD), and that its performance exceeds state-of-the-art proposals on these datasets.

MoRe-Fi: Motion-robust and Fine-grained Respiration Monitoring via Deep-Learning UWB Radar

Nov 16, 2021

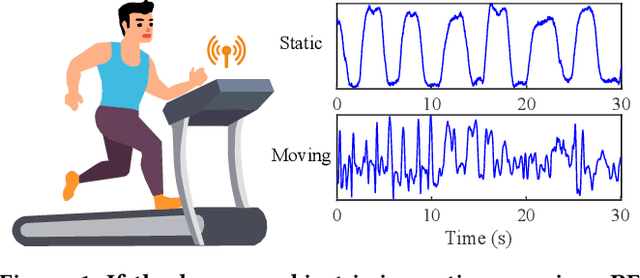

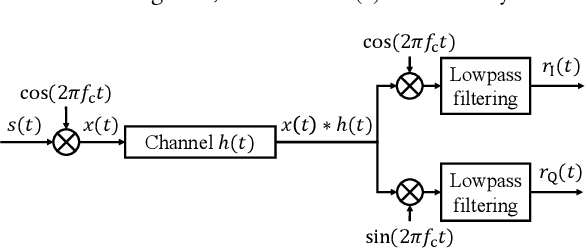

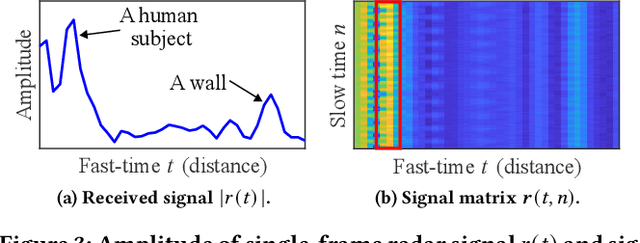

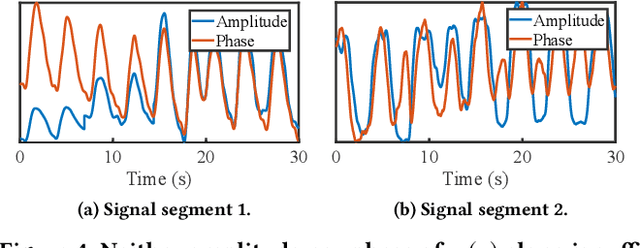

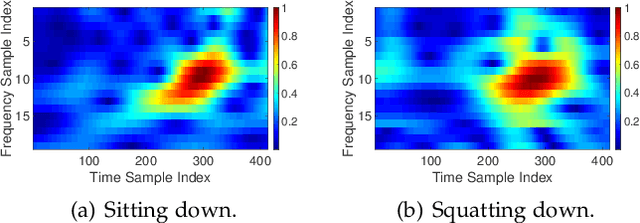

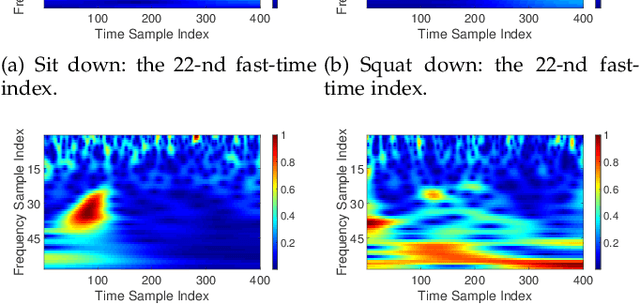

Abstract:Crucial for healthcare and biomedical applications, respiration monitoring often employs wearable sensors in practice, causing inconvenience due to their direct contact with human bodies. Therefore, researchers have been constantly searching for contact-free alternatives. Nonetheless, existing contact-free designs mostly require human subjects to remain static, largely limiting their adoption in everyday environments where body movements are inevitable. Fortunately, radio-frequency (RF) enabled contact-free sensing, though suffering from motion interference that conventional filtering cannot separate, may offer the potential to distill respiratory waveforms with the help of deep learning. To realize this potential, we introduce MoRe-Fi to conduct fine-grained respiration monitoring under body movements. MoRe-Fi leverages an IR-UWB radar to achieve contact-free sensing, and it fully exploits the complex radar signal for data augmentation. The core of MoRe-Fi is a novel variational encoder-decoder network; it aims to single out the respiratory waveforms that are modulated by body movements in a non-linear manner. Our experiments with 12 subjects and 66 hours of data demonstrate that MoRe-Fi accurately recovers respiratory waveforms despite the interference caused by body movements. We also discuss potential applications of MoRe-Fi for pulmonary disease diagnoses.

* 14 pages

V2iFi: in-Vehicle Vital Sign Monitoring via Compact RF Sensing

Oct 28, 2021

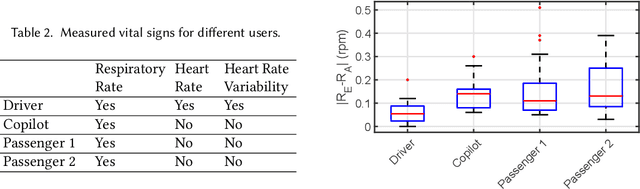

Abstract:Given the significant amount of time people spend in vehicles, health issues under driving conditions have become a major concern. Such issues may range from fatigue and asthma to stroke and even heart attack, yet they can be adequately indicated by vital signs and abnormal activities. Therefore, in-vehicle vital sign monitoring can help us predict and hence prevent these issues. Whereas existing sensor-based (including camera) methods could be used to detect these indicators, privacy concerns and system complexity both call for a convenient yet effective and robust alternative. This paper aims to develop V2iFi, an intelligent system performing monitoring tasks using a COTS impulse radio mounted on the windshield. V2iFi is capable of reliably detecting a driver's vital signs under driving conditions and in the presence of passengers, thus potentially allowing corresponding health issues to be inferred. Compared with prior work based on Wi-Fi CSI, V2iFi is able to distinguish reflected signals from multiple users, and hence provide finer-grained measurements under more realistic settings. We evaluate V2iFi both in lab environments and during real-life road tests; the results demonstrate that respiratory rate, heart rate, and heart rate variability can all be estimated accurately. Based on these estimation results, we further discuss how machine learning models can be applied on top of V2iFi so as to improve both physiological and psychological wellbeing in driving environments.

* 27 pages

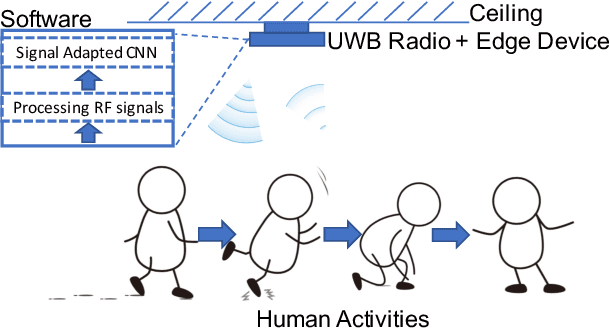

RF-Based Human Activity Recognition Using Signal Adapted Convolutional Neural Network

Oct 28, 2021

Abstract:Human Activity Recognition (HAR) plays a critical role in a wide range of real-world applications, and it is traditionally achieved via wearable sensing. Recently, to avoid the burden and discomfort caused by wearable devices, device-free approaches exploiting RF signals have arisen as a promising alternative for HAR. Most of the latest device-free approaches require training a large deep neural network model in either the time or the frequency domain, entailing extensive storage to contain the model and intensive computations to infer activities. Consequently, even with some major advances in device-free HAR, current device-free approaches are still far from practical in real-world scenarios where the computation and storage resources possessed by, for example, edge devices, are limited. Therefore, we introduce HAR-SAnet, a novel RF-based HAR framework. It adopts an original signal adapted convolutional neural network architecture: instead of feeding the handcrafted features of RF signals into a classifier, HAR-SAnet fuses them adaptively from both time and frequency domains to design an end-to-end neural network model. We apply point-wise grouped convolution and depth-wise separable convolutions to confine the model scale and to speed up inference. The experimental results show that the recognition accuracy of HAR-SAnet outperforms state-of-the-art algorithms and systems.

* 13 pages

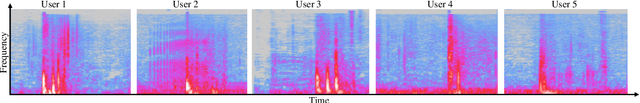

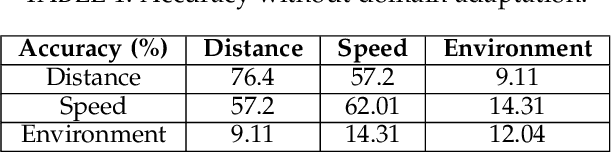

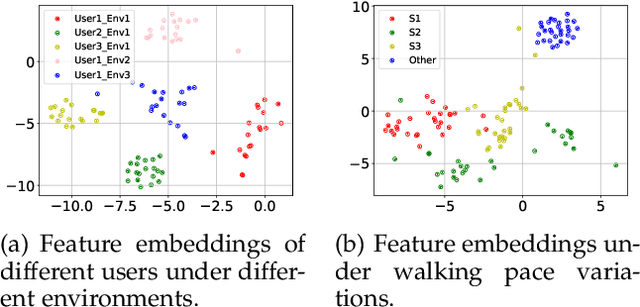

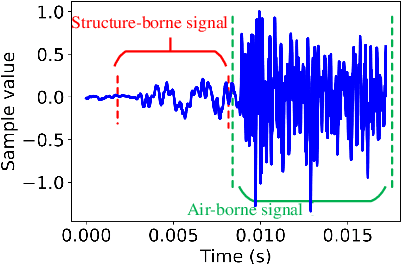

PURE: Passive mUlti-peRson idEntification via Deep Footstep Separation and Recognition

Apr 15, 2021

Abstract:Recently, passive behavioral biometrics (e.g., gesture or footstep) have become promising complements to conventional user identification methods (e.g., face or fingerprint) under special situations, yet existing sensing technologies require lengthy measurement traces and cannot identify multiple users at the same time. To this end, we propose PURE as a passive multi-person identification system leveraging deep learning enabled footstep separation and recognition. PURE passively identifies a user by deciphering the unique "footprints" in their footsteps. Different from existing gait-enabled recognition systems that incur a long sensing delay to acquire many footsteps, PURE can recognize a person from as little as one step, substantially cutting the identification latency. To make PURE adaptive to walking pace variations, environmental dynamics, and even unseen targets, we apply an adversarial learning technique to improve its domain generalisability and identification accuracy. Finally, PURE can defend itself against replay attacks, enabled by the richness of footsteps and spatial awareness. We implement a PURE prototype using commodity hardware and evaluate it in typical indoor settings. Evaluation results demonstrate a cross-domain identification accuracy of over 90%.

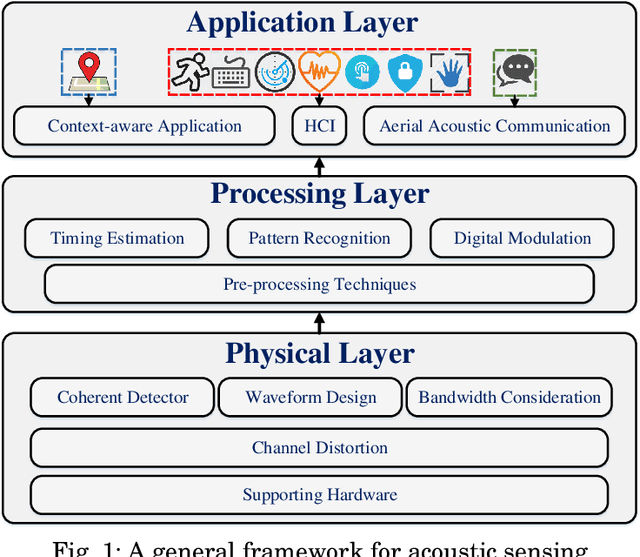

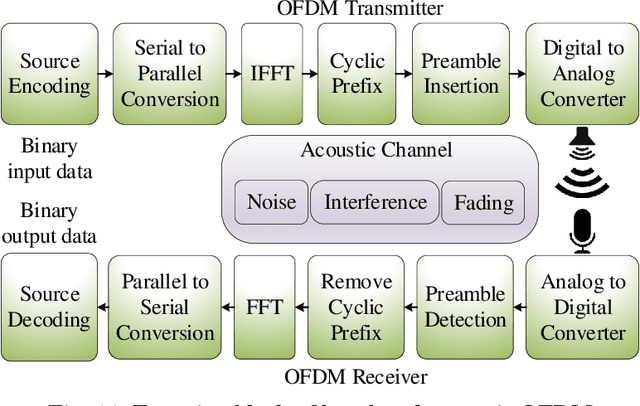

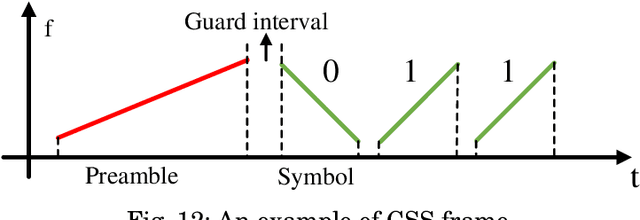

A survey on acoustic sensing

Jan 11, 2019

Abstract:The rise of the Internet-of-Things (IoT) has brought many new sensing mechanisms. Among these mechanisms, acoustic sensing has attracted much attention in recent years. Acoustic sensing exploits acoustic sensors beyond their primary uses, namely recording and playing, to enable interesting applications and new user experiences. In this paper, we present the first survey of recent advances in acoustic sensing using commodity hardware. We propose a general framework that categorizes the main building blocks of acoustic sensing systems. This framework consists of three layers, i.e., the physical layer, processing layer, and application layer. We highlight different sensing approaches in the processing layer and fundamental design considerations in the physical layer. Many existing and potential applications, including context-aware applications, human-computer interfaces, and aerial acoustic communications, are presented in depth. Challenges and future research trends are also discussed.
