Chanho Park

Perspective-Aware Reasoning in Vision-Language Models via Mental Imagery Simulation

Apr 24, 2025

Abstract: We present a framework for perspective-aware reasoning in vision-language models (VLMs) through mental imagery simulation. Perspective-taking, the ability to perceive an environment or situation from an alternative viewpoint, is a key benchmark for human-level visual understanding, essential for environmental interaction and collaboration with autonomous agents. Despite advancements in spatial reasoning within VLMs, recent research has shown that modern VLMs significantly lack perspective-aware reasoning capabilities and exhibit a strong bias toward egocentric interpretations. To bridge the gap between VLMs and human perception, we focus on the role of mental imagery, where humans perceive the world through abstracted representations that facilitate perspective shifts. Motivated by this, we propose a framework for perspective-aware reasoning, named Abstract Perspective Change (APC), that effectively leverages vision foundation models, such as object detection, segmentation, and orientation estimation, to construct scene abstractions and enable perspective transformations. Our experiments on synthetic and real-image benchmarks, compared with various VLMs, demonstrate significant improvements in perspective-aware reasoning with our framework, further outperforming fine-tuned spatial reasoning models and novel-view-synthesis-based approaches.

SHARE: Shared Memory-Aware Open-Domain Long-Term Dialogue Dataset Constructed from Movie Script

Oct 28, 2024

Abstract: Shared memories between two individuals strengthen their bond and are crucial for facilitating their ongoing conversations. This study aims to make long-term dialogue more engaging by leveraging these shared memories. To this end, we introduce a new long-term dialogue dataset named SHARE, constructed from movie scripts, which are a rich source of shared memories among various relationships. Our dialogue dataset contains the summaries of persona information and events of two individuals, as explicitly revealed in their conversation, along with implicitly extractable shared memories. We also introduce EPISODE, a long-term dialogue framework based on SHARE that utilizes shared experiences between individuals. Through experiments using SHARE, we demonstrate that shared memories between two individuals make long-term dialogues more engaging and sustainable, and that EPISODE effectively manages shared memories during dialogue. Our new dataset is publicly available at https://anonymous.4open.science/r/SHARE-AA1E/SHARE.json.

Automatic Speech Recognition System-Independent Word Error Rate Estimation

Apr 26, 2024

Abstract: Word error rate (WER) is a metric used to evaluate the quality of transcriptions produced by Automatic Speech Recognition (ASR) systems. In many applications, it is of interest to estimate WER given a pair of a speech utterance and a transcript. Previous work on WER estimation focused on building models that are trained with a specific ASR system in mind (referred to as ASR system-dependent). These are also domain-dependent and inflexible in real-world applications. In this paper, a hypothesis generation method for ASR System-Independent WER estimation (SIWE) is proposed. In contrast to prior work, the WER estimators are trained using data that simulates ASR system output. Hypotheses are generated using phonetically similar or linguistically more likely alternative words. In WER estimation experiments, the proposed method reaches a similar performance to ASR system-dependent WER estimators on in-domain data and achieves state-of-the-art performance on out-of-domain data. On the out-of-domain data, the SIWE model outperformed the baseline estimators in root mean square error and Pearson correlation coefficient by 17.58% and 18.21% relative, respectively, on Switchboard and CALLHOME. The performance was further improved when the WER of the training set was close to the WER of the evaluation dataset.
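
For readers unfamiliar with the metric that the estimators above predict, WER is conventionally computed as the word-level edit distance (substitutions, deletions, insertions) between a reference transcript and a hypothesis, divided by the number of reference words. The following is a minimal sketch of that standard definition only; it is not the SIWE estimator, and the function name `word_error_rate` is a hypothetical name chosen for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Illustrative helper (not from the paper): tokens are split on whitespace,
    and all three error types (substitution, deletion, insertion) cost 1.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words to match an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution ("sat" -> "sit") and one deletion ("the"): 2 errors / 6 words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions are counted against the reference length, WER can exceed 1.0 when the hypothesis is much longer than the reference.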

SignSGD with Federated Voting

Mar 25, 2024

Abstract: Distributed learning is commonly used for accelerating model training by harnessing the computational capabilities of multiple edge devices. However, in practical applications, the communication delay emerges as a bottleneck due to the substantial information exchange required between workers and a central parameter server. SignSGD with majority voting (signSGD-MV) is an effective distributed learning algorithm that can significantly reduce communication costs by one-bit quantization. However, due to heterogeneous computational capabilities, it fails to converge when the mini-batch sizes differ among workers. To overcome this, we propose a novel signSGD optimizer with federated voting (signSGD-FV). The idea of federated voting is to exploit learnable weights to perform weighted majority voting. The server learns the weights assigned to the edge devices in an online fashion based on their computational capabilities. Subsequently, these weights are employed to decode the signs of the aggregated local gradients in such a way as to minimize the sign decoding error probability. We provide a unified convergence rate analysis framework applicable to scenarios where the estimated weights are known to the parameter server either perfectly or imperfectly. We demonstrate that the proposed signSGD-FV algorithm has a theoretical convergence guarantee even when edge devices use heterogeneous mini-batch sizes. Experimental results show that signSGD-FV outperforms signSGD-MV, exhibiting a faster convergence rate, especially with heterogeneous mini-batch sizes.
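
The aggregation step described above can be sketched as a weighted vote over one-bit gradient signs. This is only an illustration under stated assumptions: the per-worker weights are taken as given inputs (how signSGD-FV learns them online is not specified in the abstract and is not reproduced here), and the names `sign` and `weighted_sign_vote` are hypothetical. With uniform weights the function reduces to plain majority voting as in signSGD-MV.

```python
from typing import List, Sequence


def sign(x: float) -> int:
    """Return +1, -1, or 0 (for an exact zero / tie)."""
    return 1 if x > 0 else (-1 if x < 0 else 0)


def weighted_sign_vote(
    worker_signs: Sequence[Sequence[int]],
    weights: Sequence[float],
) -> List[int]:
    """Decode each coordinate as the sign of the weighted sum of worker signs.

    worker_signs[m][d] is the sign (+1/-1) that worker m reports for
    coordinate d; weights[m] is that worker's voting weight (assumed given).
    """
    if len(worker_signs) != len(weights):
        raise ValueError("need exactly one weight per worker")
    dim = len(worker_signs[0])
    decoded = []
    for d in range(dim):
        tally = sum(w * s[d] for w, s in zip(weights, worker_signs))
        decoded.append(sign(tally))
    return decoded


if __name__ == "__main__":
    signs = [[1, -1], [1, -1], [-1, 1]]
    print(weighted_sign_vote(signs, [1.0, 1.0, 1.0]))  # uniform weights: majority vote
    print(weighted_sign_vote(signs, [1.0, 1.0, 3.0]))  # third worker outweighs the others
```

In the second call the heavily weighted third worker overrides the two-worker majority, which is the behavior that distinguishes weighted voting from an unweighted count.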

SignSGD with Federated Defense: Harnessing Adversarial Attacks through Gradient Sign Decoding

Feb 02, 2024

Abstract: Distributed learning is an effective approach to accelerate model training using multiple workers. However, substantial communication delays emerge between workers and a parameter server due to massive costs associated with communicating gradients. SignSGD with majority voting (signSGD-MV) is a simple yet effective optimizer that reduces communication costs through one-bit quantization, yet the convergence rates considerably decrease as adversarial workers increase. In this paper, we show that the convergence rate is invariant as the number of adversarial workers increases, provided that the number of adversarial workers is smaller than that of benign workers. The key idea showing this counter-intuitive result is our novel signSGD with federated defense (signSGD-FD). Unlike the traditional approaches, signSGD-FD exploits the gradient information sent by adversarial workers with the proper weights, which are obtained through gradient sign decoding. Experimental results demonstrate signSGD-FD achieves superior convergence rates over traditional algorithms in various adversarial attack scenarios.

Posterior Distillation Sampling

Nov 23, 2023

Abstract: We introduce Posterior Distillation Sampling (PDS), a novel optimization method for parametric image editing based on diffusion models. Existing optimization-based methods, which leverage the powerful 2D prior of diffusion models to handle various parametric images, have mainly focused on generation. Unlike generation, editing requires a balance between conforming to the target attribute and preserving the identity of the source content. Recent 2D image editing methods have achieved this balance by leveraging the stochastic latent encoded in the generative process of diffusion models. To extend the editing capabilities of diffusion models shown in pixel space to parameter space, we reformulate the 2D image editing method into an optimization form named PDS. PDS matches the stochastic latents of the source and the target, enabling the sampling of targets in diverse parameter spaces that align with a desired attribute while maintaining the source's identity. We demonstrate that this optimization resembles running a generative process with the target attribute, but aligning this process with the trajectory of the source's generative process. Extensive editing results in Neural Radiance Fields and Scalable Vector Graphics representations demonstrate that PDS is capable of sampling targets to fulfill the aforementioned balance across various parameter spaces.

Fast Word Error Rate Estimation Using Self-Supervised Representations For Speech And Text

Oct 12, 2023

Abstract: The quality of automatic speech recognition (ASR) is typically measured by word error rate (WER). WER estimation is a task aiming to predict the WER of an ASR system, given a speech utterance and a transcription. This task has gained increasing attention as advanced ASR systems are trained on large amounts of data. In this case, WER estimation becomes necessary in many scenarios, for example, selecting training data with unknown transcription quality or estimating the testing performance of an ASR system without ground truth transcriptions. Facing large amounts of data, the computation efficiency of a WER estimator becomes essential in practical applications. However, previous works usually did not consider it as a priority. In this paper, a Fast WER estimator (Fe-WER) using self-supervised learning representation (SSLR) is introduced. The estimator is built upon SSLR aggregated by average pooling. The results show that Fe-WER outperformed the e-WER3 baseline on Ted-Lium3 by 19.69% and 7.16% relative in root mean square error and Pearson correlation coefficient, respectively. Moreover, the estimation weighted by duration was 10.43% when the target was 10.88%. Lastly, the inference speed was about 4x in terms of a real-time factor.

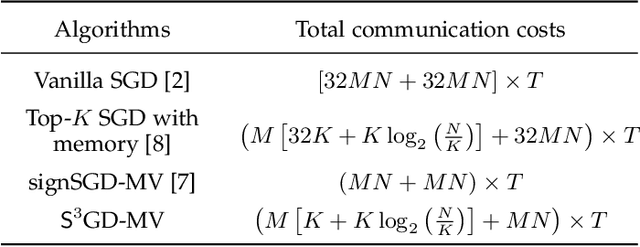

Sparse-SignSGD with Majority Vote for Communication-Efficient Distributed Learning

Feb 15, 2023

Abstract: The training efficiency of complex deep learning models can be significantly improved through the use of distributed optimization. However, this process is often hindered by a large amount of communication cost between workers and a parameter server during iterations. To address this bottleneck, in this paper, we present a new communication-efficient algorithm that offers the synergistic benefits of both sparsification and sign quantization, called ${\sf S}^3$GD-MV. The workers in ${\sf S}^3$GD-MV select the top-$K$ magnitude components of their local gradient vector and only send the signs of these components to the server. The server then aggregates the signs and returns the results via a majority vote rule. Our analysis shows that, under certain mild conditions, ${\sf S}^3$GD-MV can converge at the same rate as signSGD while significantly reducing communication costs, if the sparsification parameter $K$ is properly chosen based on the number of workers and the size of the deep learning model. Experimental results using both independent and identically distributed (IID) and non-IID datasets demonstrate that the ${\sf S}^3$GD-MV attains higher accuracy than signSGD, significantly reducing communication costs. These findings highlight the potential of ${\sf S}^3$GD-MV as a promising solution for communication-efficient distributed optimization in deep learning.
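
The two steps described above (workers keep only the signs of their top-$K$ magnitude gradient components, and the server aggregates by majority vote) can be sketched roughly as follows. This is an illustrative sketch, not the authors' implementation: the function names `top_k_signs` and `majority_vote` are hypothetical, unselected coordinates are assumed to be sent as 0, and ties in the vote are assumed to decode to 0.

```python
from typing import List, Sequence


def top_k_signs(gradient: Sequence[float], k: int) -> List[int]:
    """Keep the signs of the k largest-magnitude components; zero out the rest."""
    if not 0 <= k <= len(gradient):
        raise ValueError("k must be between 0 and the gradient dimension")
    # Indices sorted by decreasing magnitude; ties keep their original order.
    order = sorted(range(len(gradient)), key=lambda i: abs(gradient[i]), reverse=True)
    message = [0] * len(gradient)
    for i in order[:k]:
        message[i] = 1 if gradient[i] > 0 else (-1 if gradient[i] < 0 else 0)
    return message


def majority_vote(messages: Sequence[Sequence[int]]) -> List[int]:
    """Server side: per-coordinate sign of the summed worker messages."""
    totals = [sum(column) for column in zip(*messages)]
    return [1 if t > 0 else (-1 if t < 0 else 0) for t in totals]


if __name__ == "__main__":
    # Made-up local gradients for three workers, purely for illustration.
    local_gradients = [
        [0.1, -2.0, 0.5, 3.0],
        [-1.0, -0.2, 0.3, 2.0],
        [0.9, -1.5, 0.1, -0.2],
    ]
    messages = [top_k_signs(g, k=2) for g in local_gradients]
    print(messages)
    print(majority_vote(messages))
```

Each worker here transmits only $K$ signs (plus their positions) instead of a full-precision gradient vector, which is where the communication saving comes from.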

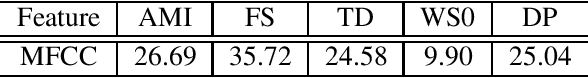

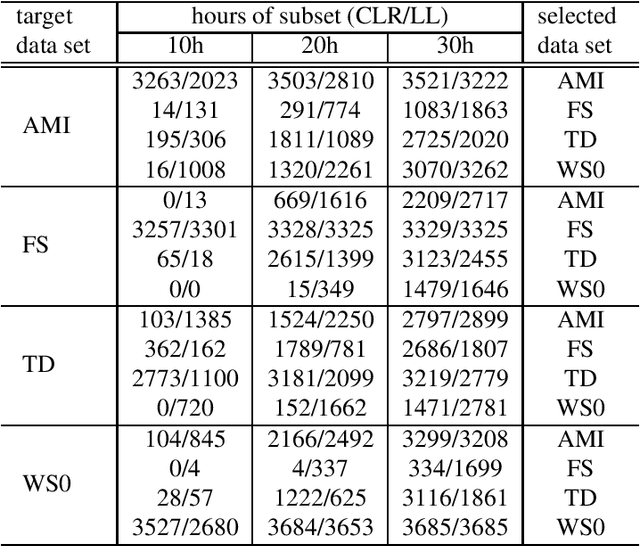

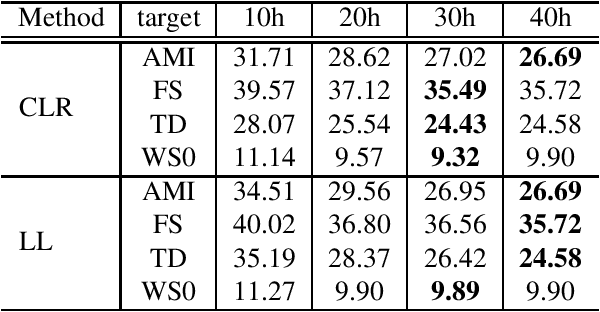

Unsupervised data selection for Speech Recognition with contrastive loss ratios

Jul 25, 2022

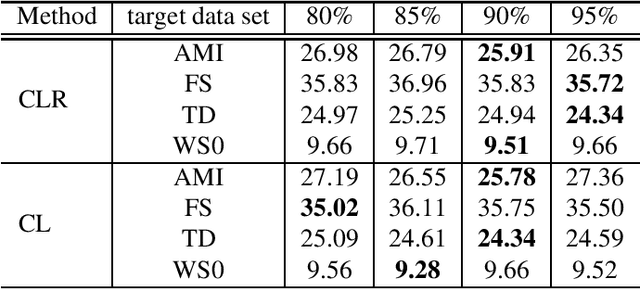

Abstract: This paper proposes an unsupervised data selection method by using a submodular function based on contrastive loss ratios of target and training data sets. A model using a contrastive loss function is trained on both sets. Then the ratio of frame-level losses for each model is used by a submodular function. By using the submodular function, a training set for automatic speech recognition matching the target data set is selected. Experiments show that models trained on the data sets selected by the proposed method outperform the selection method based on log-likelihoods produced by GMM-HMM models, in terms of word error rate (WER). When selecting a fixed amount, e.g. 10 hours of data, the difference between the results of two methods on Tedtalks was 20.23% WER relative. The method can also be used to select data with the aim of minimising negative transfer, while maintaining or improving on performance of models trained on the whole training set. Results show that the WER on the WSJCAM0 data set was reduced by 6.26% relative when selecting 85% from the whole data set.

* 5 pages, accepted by ICASSP 2022

Bayesian AirComp with Sign-Alignment Precoding for Wireless Federated Learning

Sep 14, 2021

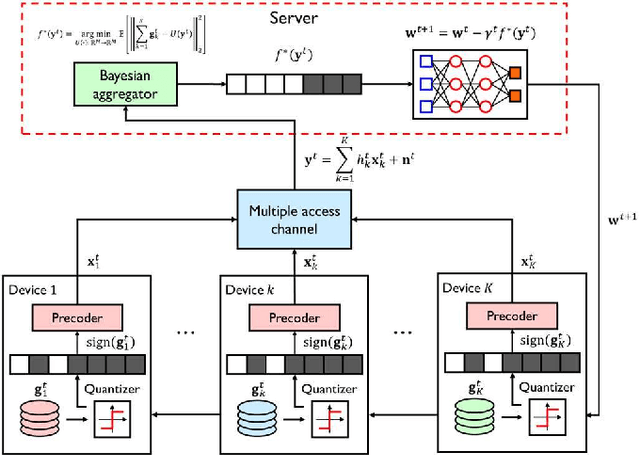

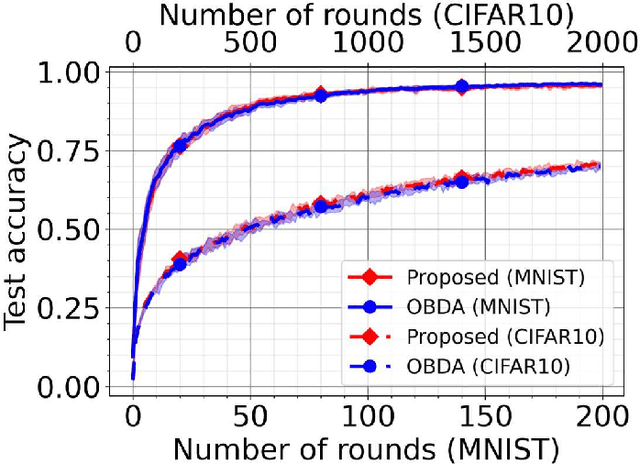

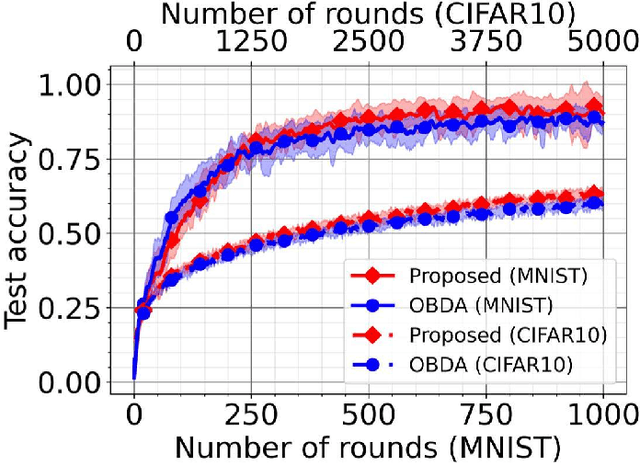

Abstract: In this paper, we consider the problem of wireless federated learning based on the sign stochastic gradient descent (signSGD) algorithm via a multiple access channel. When sending the sign information of its locally computed gradient, each mobile device needs to apply precoding to circumvent wireless fading effects. In practice, however, acquiring perfect knowledge of channel state information (CSI) at all mobile devices is infeasible. In this paper, we present a simple yet effective precoding method with limited channel knowledge, called sign-alignment precoding. The idea of sign-alignment precoding is to protect against sign-flipping errors caused by wireless fading. Under the Gaussian prior assumption on the local gradients, we also derive the mean squared error (MSE)-optimal aggregation function called Bayesian over-the-air computation (BayAirComp). Our key finding is that one-bit precoding with BayAirComp aggregation can provide a better learning performance than the existing precoding method even using perfect CSI with AirComp aggregation.
