Changyun Wen

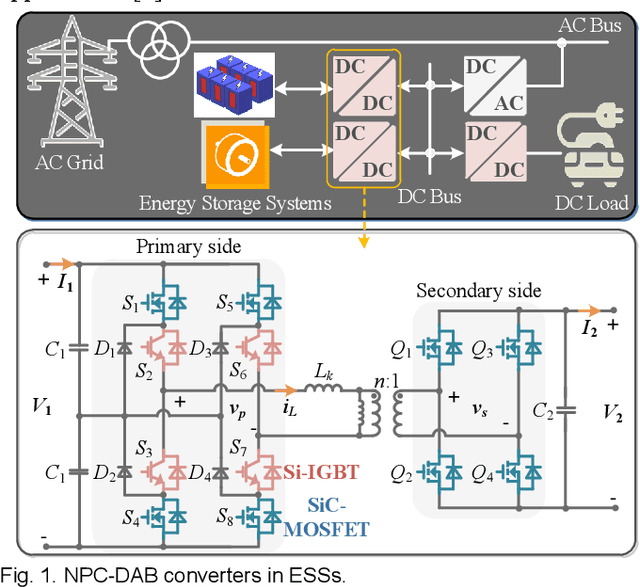

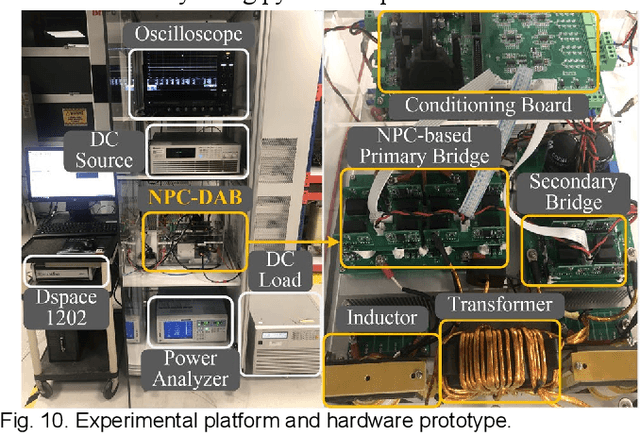

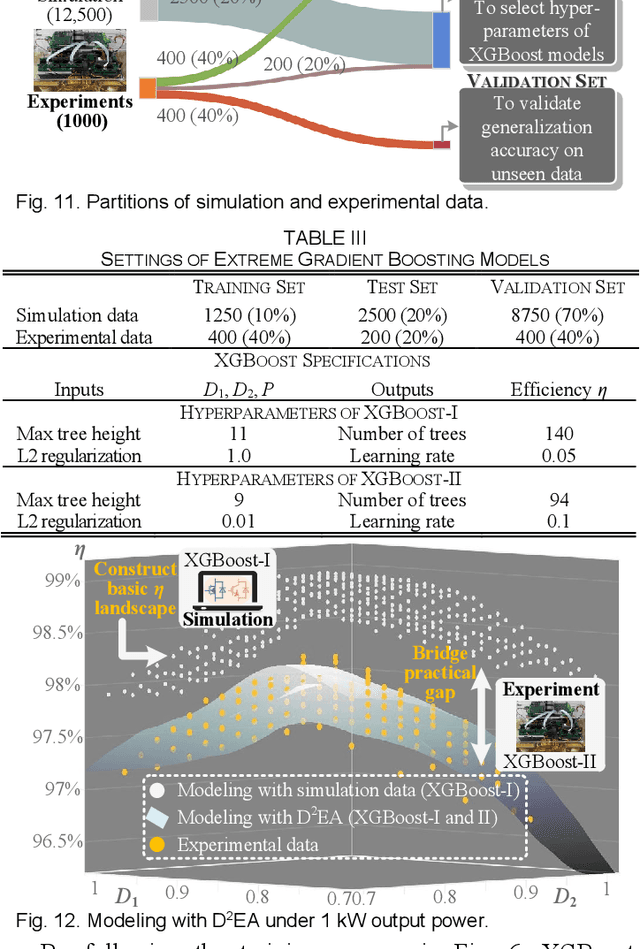

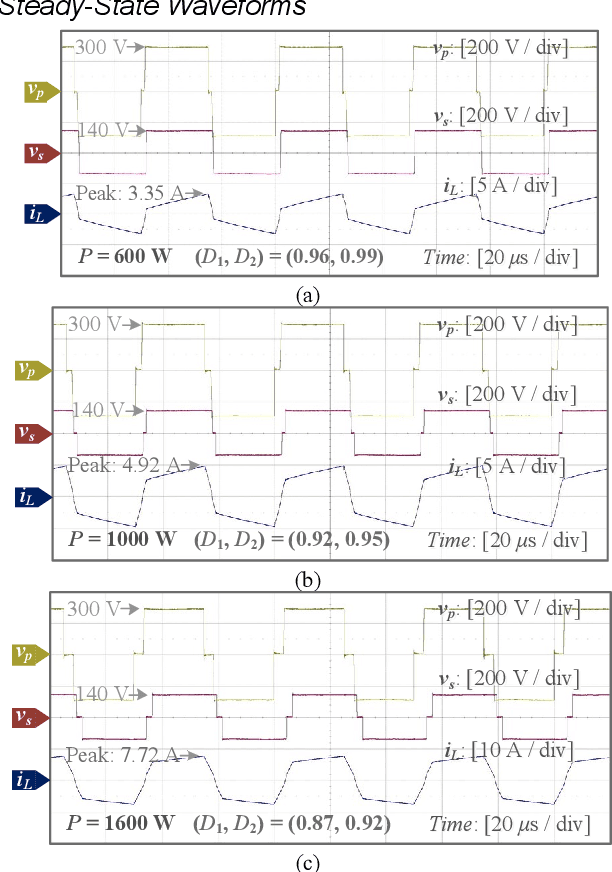

Data-Driven Modeling with Experimental Augmentation for the Modulation Strategy of the Dual-Active-Bridge Converter

Aug 03, 2023

Abstract: For the performance modeling of power converters, the mainstream approaches are essentially knowledge-based and suffer from a heavy manpower burden and low modeling accuracy. Recently emerging data-driven techniques greatly reduce the reliance on human effort by modeling automatically from simulation data. However, model discrepancy may occur due to unmodeled parasitics, deficient thermal and magnetic models, unpredictable ambient conditions, etc. Such inaccurate data-driven models, based purely on simulation, cannot represent practical performance in the physical world, which hinders their application in power converter modeling. To alleviate model discrepancy and improve accuracy in practice, this paper proposes a novel data-driven modeling approach with experimental augmentation (D2EA) that leverages both simulation data and experimental data. In D2EA, simulation data establishes the basic functional landscape, and experimental data matches it to actual real-world performance. The D2EA approach is instantiated for the efficiency optimization of a hybrid modulation strategy for the neutral-point-clamped dual-active-bridge (NPC-DAB) converter. The proposed D2EA approach achieves 99.92% efficiency modeling accuracy, and its feasibility is comprehensively validated in 2-kW hardware experiments, where a peak efficiency of 98.45% is attained. Overall, D2EA is data-light, achieves highly accurate and practical data-driven models in one shot, and is readily scalable to other applications.

* 11 pages

Color Mapping Functions For HDR Panorama Imaging: Weighted Histogram Averaging

Nov 14, 2021

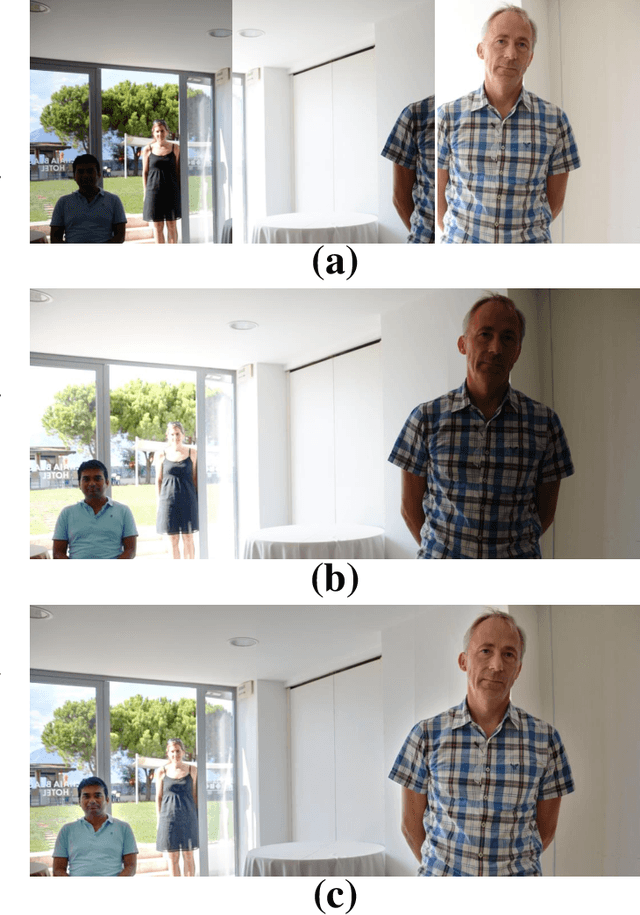

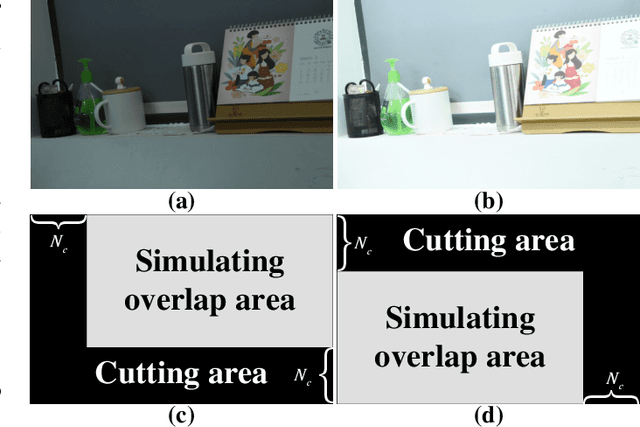

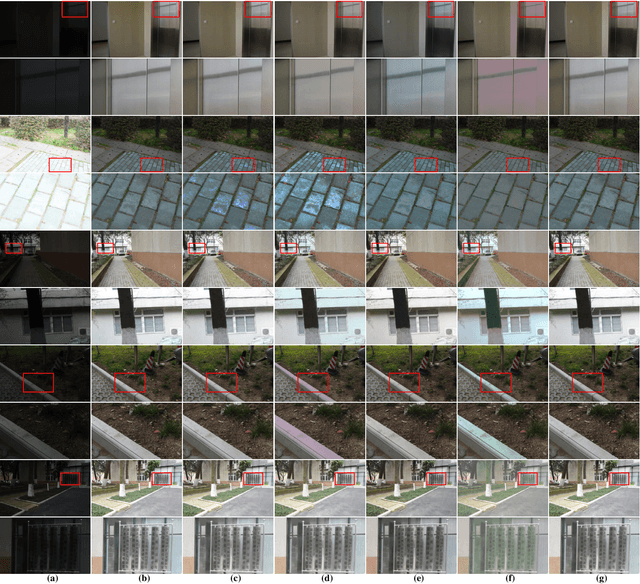

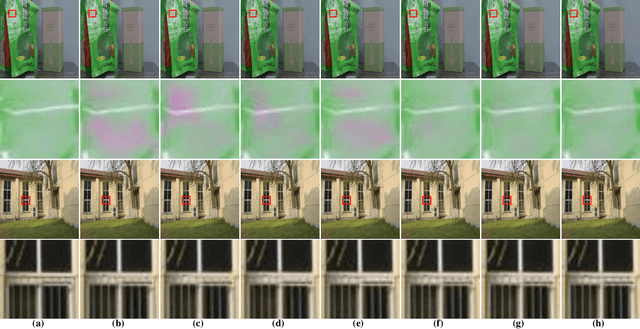

Abstract: It is challenging to stitch multiple images with different exposures due to possible color distortion and loss of details in the brightest and darkest regions of the input images. In this paper, a novel color mapping algorithm is first proposed by introducing a new concept of weighted histogram averaging (WHA). The proposed WHA algorithm leverages the correspondence between the histogram bins of two images, which is built up by using the non-decreasing property of the color mapping functions (CMFs). The WHA algorithm is then adopted to synthesize a set of differently exposed panorama images. The intermediate panorama images are finally fused via a state-of-the-art multi-scale exposure fusion (MEF) algorithm to produce the final panorama image. Extensive experiments indicate that the proposed WHA algorithm significantly surpasses the related state-of-the-art color mapping methods. The proposed high dynamic range (HDR) stitching algorithm based on MEF also preserves details in the brightest and darkest regions of the input images well. The related materials will be publicly accessible at https://github.com/yilun-xu/WHA for reproducible research.
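
As a rough illustration of why the non-decreasing property of a CMF ties histogram bins together, the sketch below builds a lookup table by classic cumulative-histogram matching. This is not the WHA algorithm from the paper, only a simpler baseline; the function names and the `levels` parameter are assumptions made for the example.

```python
def cumulative_histogram(pixels, levels=256):
    """Return the normalized cumulative histogram of integer intensities."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    total = len(pixels)
    cdf, running = [], 0
    for c in counts:
        running += c
        cdf.append(running / total)
    return cdf


def color_mapping_function(src_pixels, ref_pixels, levels=256):
    """Build a non-decreasing lookup table from source to reference levels.

    Assumption: both pixel lists come from the same scene region, so that
    equal cumulative fractions correspond to the same scene content.
    """
    src_cdf = cumulative_histogram(src_pixels, levels)
    ref_cdf = cumulative_histogram(ref_pixels, levels)
    lut, j = [], 0
    for i in range(levels):
        # Advance j until the reference CDF reaches the source CDF at level i.
        # j never moves backwards, so the table is non-decreasing.
        while j < levels - 1 and ref_cdf[j] < src_cdf[i]:
            j += 1
        lut.append(j)
    return lut


# Toy usage: a darker exposure mapped onto a brighter one.
dark = [0, 1, 2, 3]
bright = [10, 20, 30, 40]
lut = color_mapping_function(dark, bright, levels=64)
mapped = [lut[p] for p in dark]
```

In practice the table would be estimated per color channel from the overlapping area of two differently exposed images.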

Deep Joint Demosaicing and High Dynamic Range Imaging within a Single Shot

Nov 14, 2021

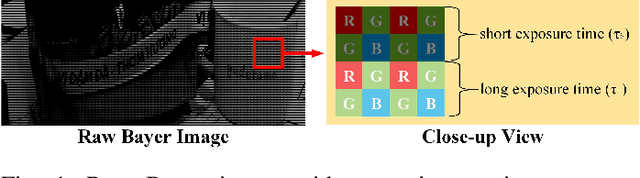

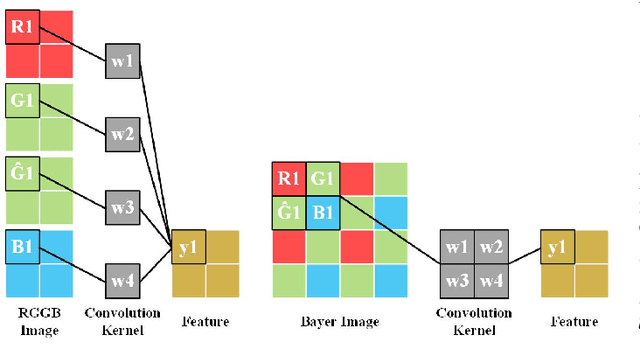

Abstract: Spatially varying exposure (SVE) is a promising choice for high-dynamic-range (HDR) imaging (HDRI). SVE-based HDRI, which is called single-shot HDRI, is an efficient solution for avoiding ghosting artifacts. However, it is very challenging to restore a full-resolution HDR image from a real-world image with SVE because: a) only one-third of the pixels with varying exposures are captured by the camera in a Bayer pattern, and b) some of the captured pixels are over- or under-exposed. For the former challenge, a spatially varying convolution (SVC) is designed to process Bayer images with varying exposures. For the latter, an exposure-guidance method is proposed to counter the interference from over- and under-exposed pixels. Finally, a joint demosaicing and HDRI deep learning framework is formulated to include the two novel components and to realize end-to-end single-shot HDRI. Experiments indicate that the proposed end-to-end framework avoids the problem of cumulative errors and surpasses the related state-of-the-art methods.
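
The "one-third" figure can be seen in a minimal sketch of Bayer sampling: each sensor pixel records only one of the three color channels. The RGGB layout and the helper names below are assumptions for illustration; the abstract does not specify the sensor layout, and the SVE exposure pattern is not modeled here.

```python
def bayer_channel(row, col):
    """Channel index (0=R, 1=G, 2=B) sampled at (row, col), assuming RGGB."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def mosaic(rgb):
    """Keep one channel per pixel of an H x W x 3 nested-list image."""
    return [
        [px[bayer_channel(r, c)] for c, px in enumerate(row)]
        for r, row in enumerate(rgb)
    ]


# Toy 2x2 image: every pixel is (R, G, B) = (1, 2, 3).
image = [[(1, 2, 3), (1, 2, 3)], [(1, 2, 3), (1, 2, 3)]]
raw = mosaic(image)

# Fraction of the full-color samples that the sensor actually records.
kept = sum(len(row) for row in raw)
total = sum(len(px) for row in image for px in row)
fraction = kept / total
```

Demosaicing has to estimate the two missing channels at every pixel, which is why restoring a full-resolution image from such data is hard.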

Stealthy False Data Injection Attack Detection in Smart Grids with Uncertainties: A Deep Transfer Learning Based Approach

Apr 09, 2021

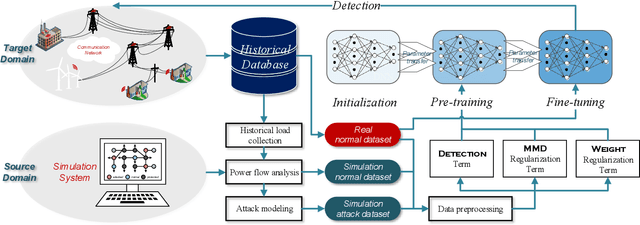

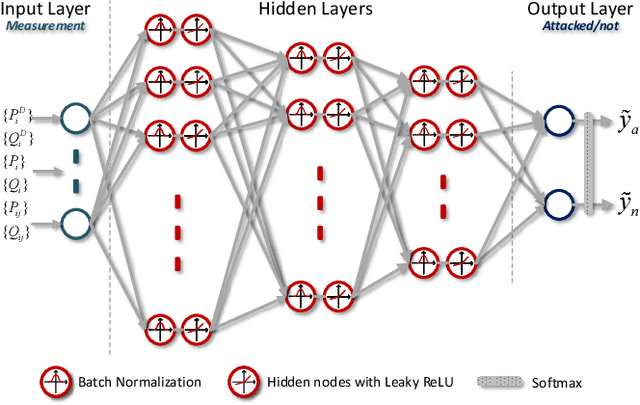

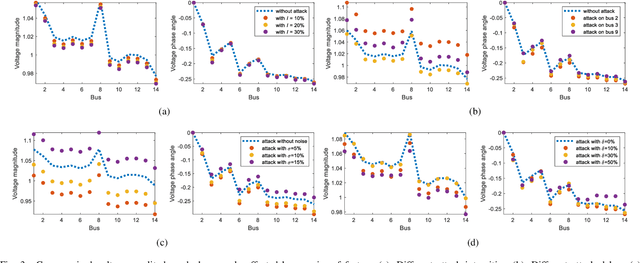

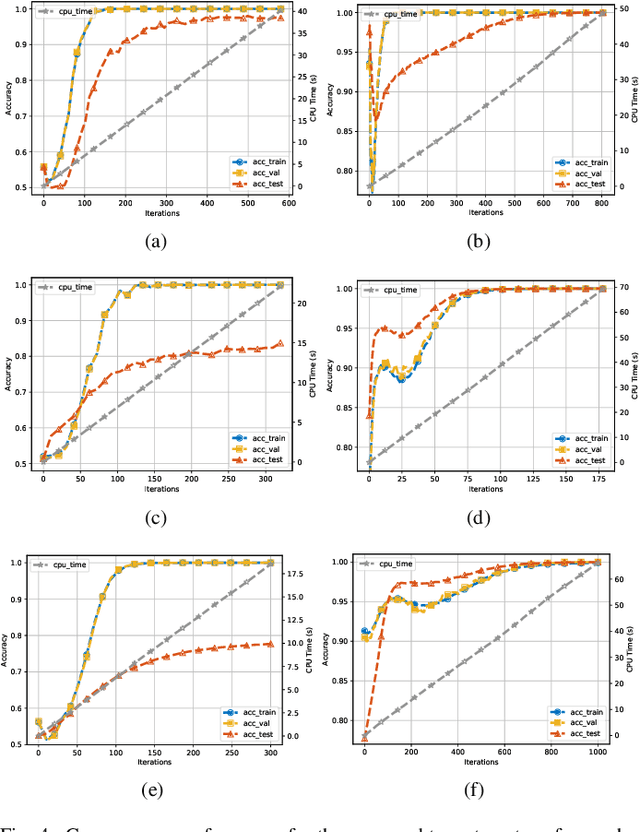

Abstract: Most traditional false data injection attack (FDIA) detection approaches rely on static system parameters or a single known snapshot of dynamic ones. However, such a setting significantly weakens the practicality of these approaches, because in practical smart grids the system parameters are dynamic and, owing to uncertainties, cannot be accurately known during operation. In this paper, we propose an FDIA detection mechanism from the perspective of transfer learning. Specifically, the known initial/approximate system is treated as a source domain, which provides abundant simulated normal and attack data. The unknown real-world running system is taken as a target domain, where sufficient real normal data are collected for tracking the latest system states online. The designed transfer strategy, which aims at making full use of the data at hand, is divided into two optimization stages. In the first stage, a deep neural network (DNN) is built by simultaneously optimizing several well-designed terms with both simulated data and real data; in the second stage, it is fine-tuned with real data. Several case studies on the IEEE 14-bus power system verify the effectiveness of the proposed mechanism.

Online Active Proposal Set Generation for Weakly Supervised Object Detection

Jan 20, 2021

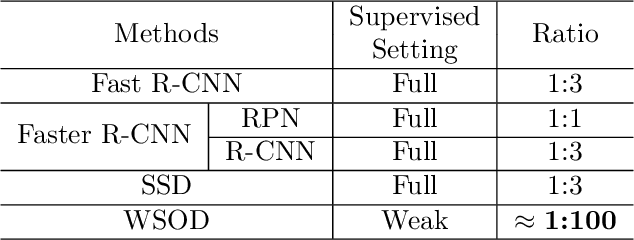

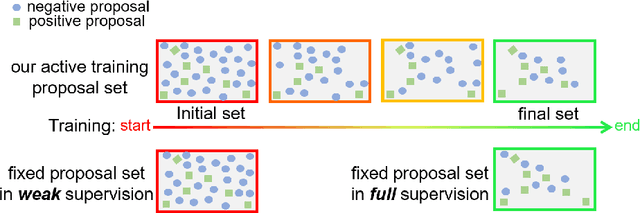

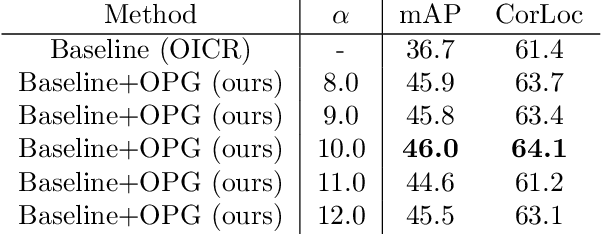

Abstract: To reduce the manpower spent on box-level annotations, many weakly supervised object detection methods, which require only image-level annotations, have been proposed recently. The training process in these methods is formulated in two steps. They first train a neural network under weak supervision to generate pseudo ground truths (PGTs). Then, these PGTs are used to train another network under full supervision. Compared with fully supervised methods, the training process in weakly supervised methods is more complex and time-consuming. Furthermore, an overwhelming number of negative proposals are involved in the first step. This is neglected by most methods, which biases the training network towards negative proposals and thus degrades the quality of the PGTs, limiting the training network performance in the second step. Online proposal sampling is an intuitive solution to these issues. However, lacking adequate labeling, simple online proposal sampling may leave the training network stuck in local minima. To solve this problem, we propose an Online Active Proposal Set Generation (OPG) algorithm. Our OPG algorithm consists of two parts: Dynamic Proposal Constraint (DPC) and Proposal Partition (PP). DPC dynamically determines the proposal sampling strategy according to the current training state. PP scores each proposal, partitions the proposals into different sets, and generates an active proposal set for network optimization. In experiments, our proposed OPG shows consistent and significant improvement on both the PASCAL VOC 2007 and 2012 datasets, yielding performance comparable to the state-of-the-art results.

Feature Flow: In-network Feature Flow Estimation for Video Object Detection

Sep 21, 2020

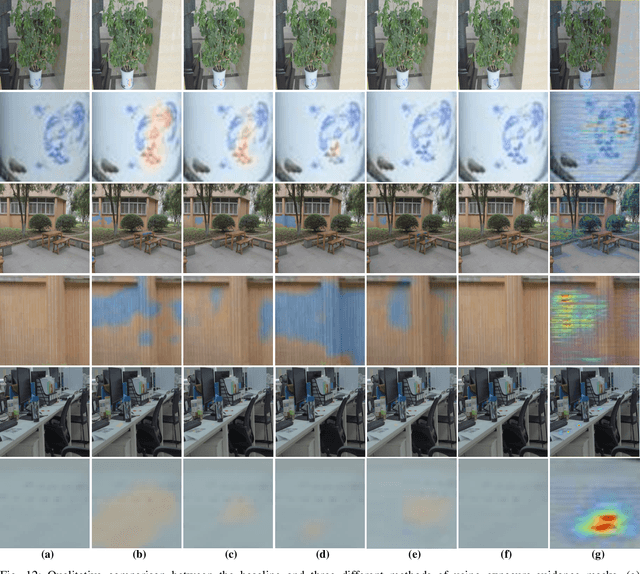

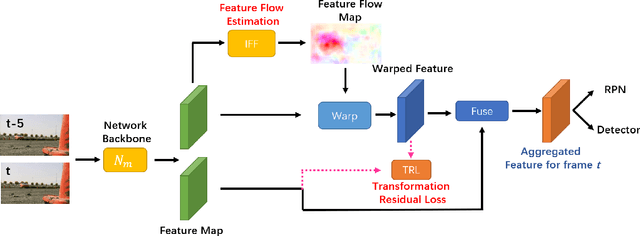

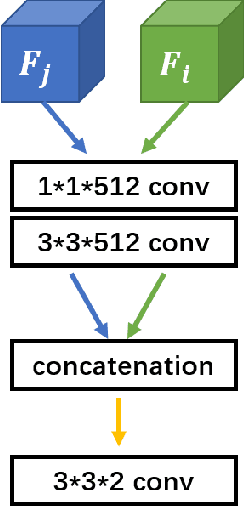

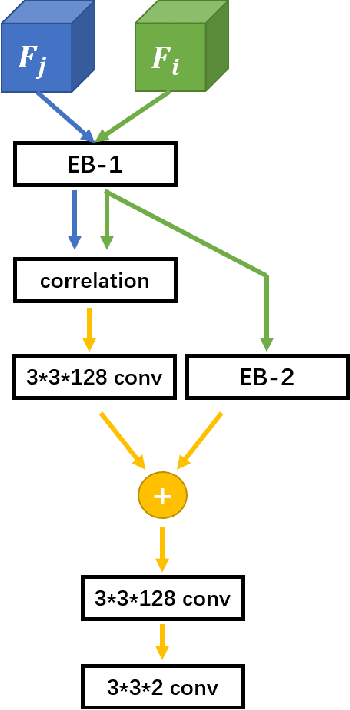

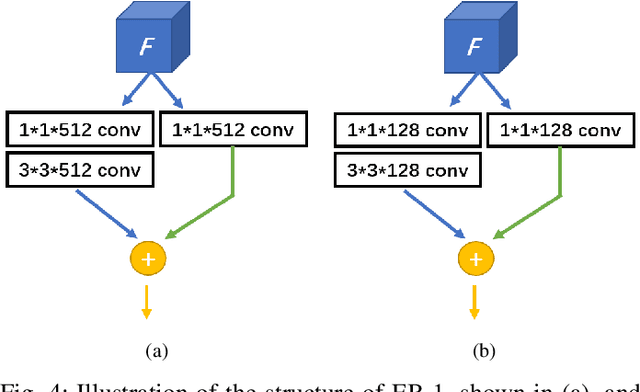

Abstract: Optical flow, which expresses pixel displacement, is widely used in many computer vision tasks to provide pixel-level motion information. However, with the remarkable progress of convolutional neural networks, recent state-of-the-art approaches solve problems directly at the feature level. Since the displacement of a feature vector is not consistent with the pixel displacement, a common approach is to forward optical flow to a neural network and fine-tune this network on the task dataset. With this method, the fine-tuned network is expected to produce tensors encoding feature-level motion information. In this paper, we rethink this de facto paradigm and analyze its drawbacks in the video object detection task. To mitigate these issues, we propose a novel network (IFF-Net) with an In-network Feature Flow estimation module (IFF module) for video object detection. Without resorting to pre-training on any additional dataset, our IFF module is able to directly produce feature flow, which indicates the feature displacement. Our IFF module consists of a shallow module that shares features with the detection branches. This compact design enables our IFF-Net to accurately detect objects while maintaining a fast inference speed. Furthermore, we propose a transformation residual loss (TRL) based on self-supervision, which further improves the performance of our IFF-Net. Our IFF-Net outperforms existing methods and sets a new state of the art on ImageNet VID.

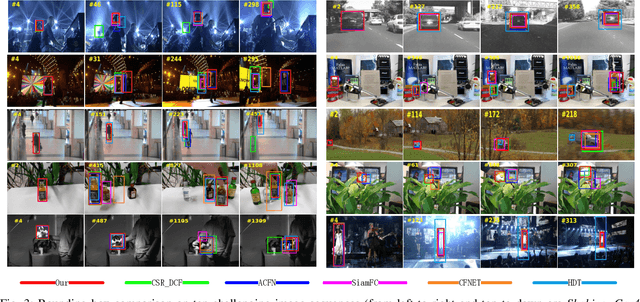

High Speed Tracking With A Fourier Domain Kernelized Correlation Filter

Nov 08, 2018

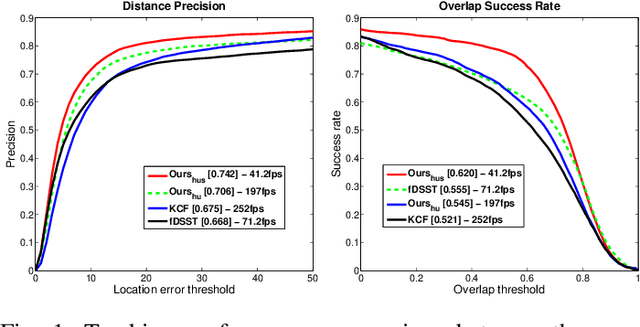

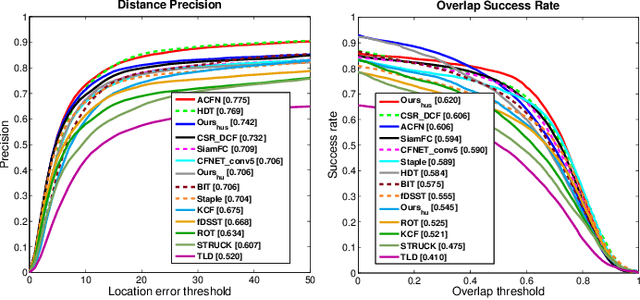

Abstract: It is challenging to design a high speed tracking approach using the l1-norm due to its non-differentiability. In this paper, a new kernelized correlation filter is introduced by leveraging the sparsity attribute of l1-norm based regularization to design a high speed tracker. We combine the l1-norm and l2-norm based regularizations in one Huber-type loss function, and then formulate an optimization problem in the Fourier domain for fast computation, which enables the tracker to adaptively ignore the noisy features produced by occlusion and illumination variation while keeping the advantages of l2-norm based regression. This is possible because, by the convolution theorem, correlation in the spatial domain corresponds to an element-wise product in the Fourier domain, so the l1-norm optimization problem can be decomposed into multiple sub-optimization problems in the Fourier domain. However, the optimized variables in the Fourier domain are complex, which makes using the l1-norm impossible if the real and imaginary parts of the variables cannot be separated. Our proposed optimization problem is formulated in such a way that the real and imaginary parts are indeed well separated. As such, the proposed optimization problem can be solved efficiently, with the optimal values obtained independently through closed-form solutions. Extensive experiments on two large benchmark datasets demonstrate that the proposed tracking algorithm significantly improves the tracking accuracy of the original kernelized correlation filter (KCF) with little sacrifice in tracking speed. Moreover, it outperforms the state-of-the-art approaches in terms of accuracy, efficiency, and robustness.
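
The correlation property that this abstract relies on can be checked numerically. The sketch below is not the tracker itself; it only compares a direct circular cross-correlation of two real signals with the same quantity computed as an element-wise product in the Fourier domain. A naive O(n^2) DFT is used to stay within the standard library, and all function names are assumptions for the example.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (or its inverse) of a sequence."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(
            x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
            for t in range(n)
        )
        out.append(s / n if inverse else s)
    return out


def circular_correlation_direct(x, y):
    """r[k] = sum_t x[t] * y[(t + k) mod n], computed in the spatial domain."""
    n = len(x)
    return [sum(x[t] * y[(t + k) % n] for t in range(n)) for k in range(n)]


def circular_correlation_fourier(x, y):
    """Same correlation via conj(X) * Y, element by element, for real x, y."""
    X, Y = dft(x), dft(y)
    product = [a.conjugate() * b for a, b in zip(X, Y)]
    return [v.real for v in dft(product, inverse=True)]


x = [1.0, 2.0, 3.0, 4.0]
y = [0.0, 1.0, 0.0, 0.0]
direct = circular_correlation_direct(x, y)
fourier = circular_correlation_fourier(x, y)
```

Because each Fourier coefficient of the result depends only on the matching coefficients of the inputs, a problem posed in this domain splits into independent per-frequency pieces.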

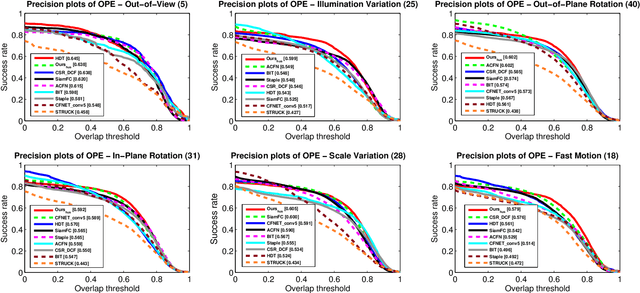

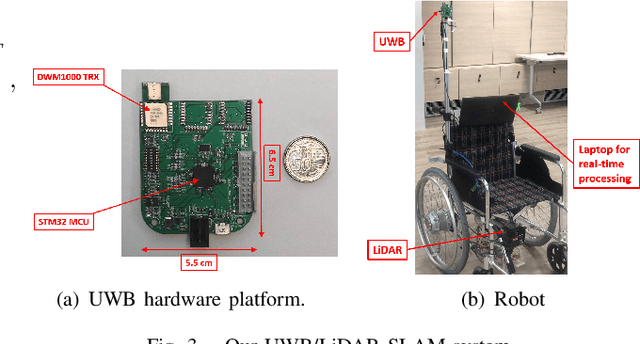

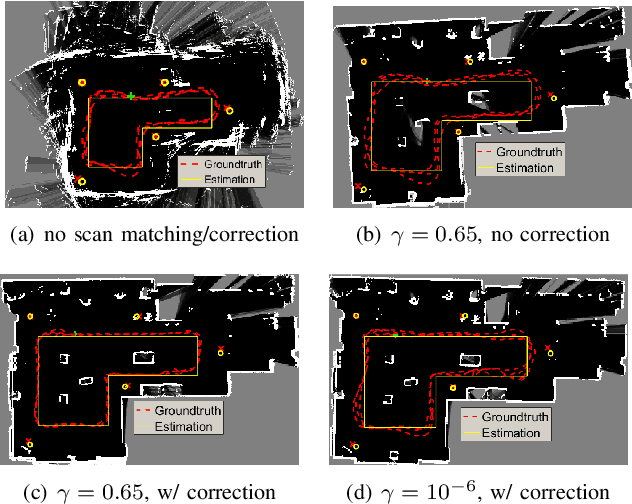

UWB/LiDAR Fusion For Cooperative Range-Only SLAM

Nov 07, 2018

Abstract: We equip a mobile robot with an ultra-wideband (UWB) node and a 2D LiDAR sensor (a.k.a. a 2D laser rangefinder), and place UWB beacon nodes at unknown locations in an unknown environment. All UWB nodes can range to one another, thus forming a cooperative sensor network. We propose to fuse the peer-to-peer ranges measured between UWB nodes with laser scanning information, i.e., the ranges measured between the robot and nearby objects/obstacles, for simultaneous localization of the robot and all UWB beacons together with LiDAR mapping. The fusion is motivated by two facts: 1) LiDAR may improve UWB-only localization accuracy, as it gives a more precise and comprehensive picture of the surrounding environment; 2) on the other hand, UWB ranging measurements may remove the error accumulated in the LiDAR-based SLAM algorithm. Our experiments demonstrate that UWB/LiDAR fusion enables drift-free SLAM in real time based on ranging measurements only.
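
To give a feel for localization from ranges alone, here is a much simplified sketch: a 2D position is recovered from distances to beacons by linearized least squares. It assumes the beacon positions are already known and the ranges are noise-free, which is not the setting of the paper (there the beacon locations are unknown and estimated jointly). The function name and the example coordinates are hypothetical.

```python
def locate_2d(anchors, ranges):
    """Estimate (x, y) from ranges to known 2D anchors (needs >= 3 anchors).

    Subtracting the first range equation from the others removes the
    quadratic term and leaves linear equations
        2 (p_i - p_0) . p = |p_i|^2 - |p_0|^2 - (d_i^2 - d_0^2),
    which are solved through the 2x2 normal equations.
    """
    (x0, y0), d0 = anchors[0], ranges[0]
    s_xx = s_xy = s_yy = t_x = t_y = 0.0
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        b = (xi**2 + yi**2 - x0**2 - y0**2) - (di**2 - d0**2)
        s_xx += ax * ax
        s_xy += ax * ay
        s_yy += ay * ay
        t_x += ax * b
        t_y += ay * b
    det = s_xx * s_yy - s_xy**2
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear or too few")
    x = (s_yy * t_x - s_xy * t_y) / det
    y = (s_xx * t_y - s_xy * t_x) / det
    return x, y


# Hypothetical beacon layout and exact ranges to the point (1, 2).
beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
truth = (1.0, 2.0)
dists = [((bx - truth[0])**2 + (by - truth[1])**2) ** 0.5 for bx, by in beacons]
estimate = locate_2d(beacons, dists)
```

With noisy ranges the same linear system gives an initial guess that a nonlinear refinement or a filter would typically improve.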
