Cassie Meeker

Stow: Robotic Packing of Items into Fabric Pods

May 07, 2025

Abstract: This paper presents a compliant manipulation system capable of placing items onto densely packed shelves. The wide diversity of items and strict business requirements for high production rates and low defect generation have prevented warehouse robotics from performing this task. Our innovations in hardware, perception, decision-making, motion planning, and control have enabled this system to perform over 500,000 stows in a large e-commerce fulfillment center. The system achieves human levels of packing density and speed while prioritizing work on overhead shelves to enhance the safety of humans working alongside the robots.

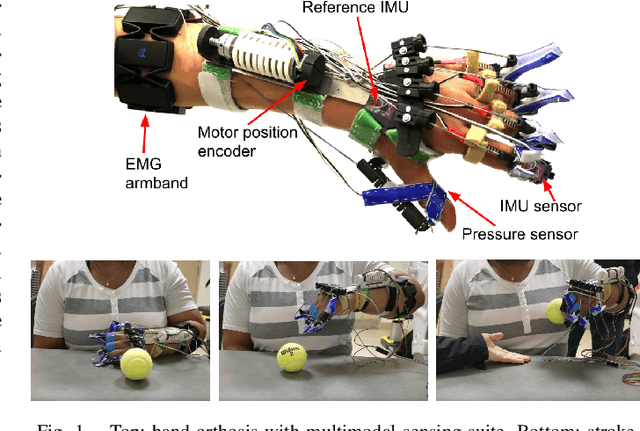

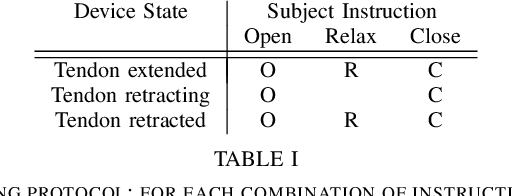

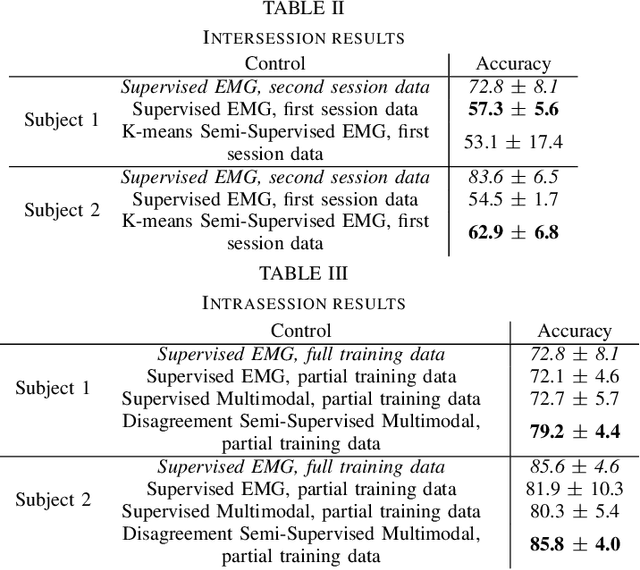

Semi-Supervised Intent Inferral Using Ipsilateral Biosignals on a Hand Orthosis for Stroke Subjects

Oct 30, 2020

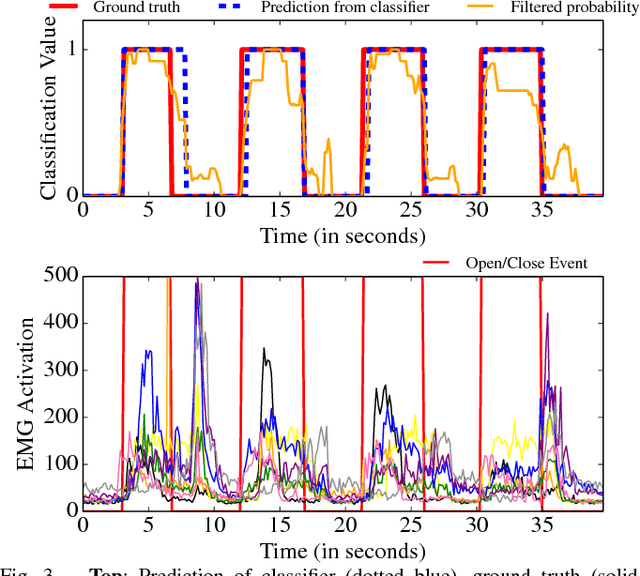

Abstract: In order to provide therapy in a functional context, controls for wearable orthoses need to be robust and intuitive. We have previously introduced an intuitive, user-driven, EMG-based orthotic control, but the process of training a control that is robust to concept drift (changes in the input signal) places a substantial burden on the user. In this paper, we explore semi-supervised learning as a paradigm for wearable orthotic controls. We are the first to use semi-supervised learning for an orthotic application. We propose a K-means semi-supervision algorithm and a disagreement-based semi-supervision algorithm. This is an exploratory study designed to determine the feasibility of semi-supervised learning as a control paradigm for wearable orthotics. In offline experiments with stroke subjects, we show that these algorithms have the potential to reduce the training burden placed on the user, and that they merit further study.
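The abstract does not spell out the K-means semi-supervision algorithm. As a rough illustration of the general idea only (seed one centroid per intent class from a small labeled set, then let K-means propagate labels to unlabeled samples), here is a minimal Python sketch. The function `seeded_kmeans_pseudolabels`, the class names `"open"`/`"relax"`, and the two-dimensional toy features are hypothetical; the authors' algorithm may differ.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _mean(points: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def seeded_kmeans_pseudolabels(
    labeled: Dict[str, List[Vector]],
    unlabeled: List[Vector],
    iterations: int = 10,
) -> List[str]:
    """Pseudo-label unlabeled samples (hypothetical sketch, not the authors' code)."""
    # One centroid per intent class, seeded from the small labeled set.
    centroids = {label: _mean(pts) for label, pts in labeled.items()}
    assignments: List[str] = []
    for _ in range(iterations):
        # Assignment step: each unlabeled sample joins its nearest centroid.
        assignments = [
            min(centroids, key=lambda c: _dist2(x, centroids[c])) for x in unlabeled
        ]
        # Update step: recompute each centroid from its labeled seeds plus its members.
        updated = {
            label: _mean(seeds + [x for x, a in zip(unlabeled, assignments) if a == label])
            for label, seeds in labeled.items()
        }
        if updated == centroids:  # converged: centroids no longer move
            break
        centroids = updated
    return assignments


labeled = {"open": [(1.0, 0.9)], "relax": [(0.1, 0.1)]}
unlabeled = [(0.9, 1.0), (0.2, 0.0), (0.05, 0.15), (1.1, 0.8)]
print(seeded_kmeans_pseudolabels(labeled, unlabeled))  # ['open', 'relax', 'relax', 'open']
```

The pseudo-labeled samples could then be added to the training set of a supervised intent classifier, which is one way such a scheme might reduce the number of labeled examples a user has to provide.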

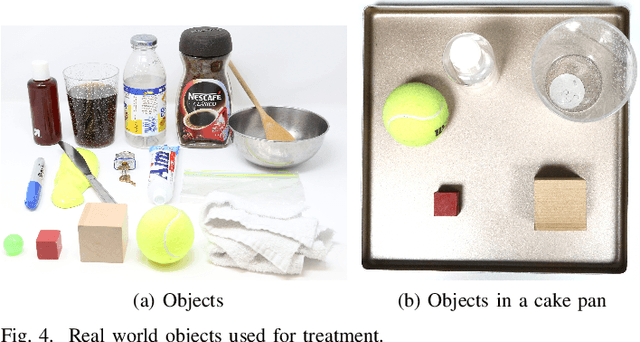

A Continuous Teleoperation Subspace with Empirical and Algorithmic Mapping Algorithms for Non-Anthropomorphic Hands

Nov 21, 2019

Abstract: Teleoperation is a valuable tool for robotic manipulators in highly unstructured environments. However, finding an intuitive mapping between a human hand and a non-anthropomorphic robot hand can be difficult, due to the hands' dissimilar kinematics. In this paper, we seek to create a mapping between the human hand and a fully actuated, non-anthropomorphic robot hand that is intuitive enough to enable effective real-time teleoperation, even for novice users. To accomplish this, we propose a low-dimensional teleoperation subspace which can be used as an intermediary for mapping between hand pose spaces. We present two different methods to define the teleoperation subspace: an empirical definition, which requires a person to define hand motions in an intuitive, hand-specific way, and an algorithmic definition, which is kinematically independent, and uses objects to define the subspace. We use each of these definitions to create a teleoperation mapping for different hands. We validate both the empirical and algorithmic mappings with teleoperation experiments controlled by novices and performed on two kinematically distinct hands. The experiments show that the proposed subspace is relevant to teleoperation, intuitive enough to enable control by novices, and can generalize to non-anthropomorphic hands with different kinematic configurations.
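To make the "subspace as intermediary" idea concrete, here is a minimal Python sketch that assumes both projections are plain linear maps: human joint angles are projected into a low-dimensional subspace, and the subspace point is then mapped into the robot's joint space and clamped to joint limits. The matrices, joint counts, and limits are hypothetical placeholders; how the paper actually obtains the mappings (empirically or algorithmically) is outside this sketch.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def teleop_map(
    human_pose: Sequence[float],
    human_to_subspace: Matrix,
    subspace_to_robot: Matrix,
    robot_lo: Sequence[float],
    robot_hi: Sequence[float],
) -> List[float]:
    """Map a human hand pose to robot joint targets via a shared subspace (sketch)."""
    # Project the human joint angles into the low-dimensional teleoperation subspace.
    subspace = matvec(human_to_subspace, human_pose)
    # Map the subspace coordinates into the robot hand's own joint space.
    robot = matvec(subspace_to_robot, subspace)
    # Clamp each joint target to the robot's joint limits.
    return [min(max(q, lo), hi) for q, lo, hi in zip(robot, robot_lo, robot_hi)]


# Hypothetical example: 4 human joints -> 2-D subspace -> 3 robot joints.
h2s = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
s2r = [[1.0, 0.0], [0.0, 2.0], [1.0, -1.0]]
print(teleop_map([1.0, 0.5, 0.25, 0.75], h2s, s2r, [0.0] * 3, [1.0, 0.5, 1.0]))
# [0.75, 0.5, 0.25]
```

Because the human and robot sides only share the subspace, swapping in a kinematically different robot hand means replacing `subspace_to_robot` while the human-side projection stays the same.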

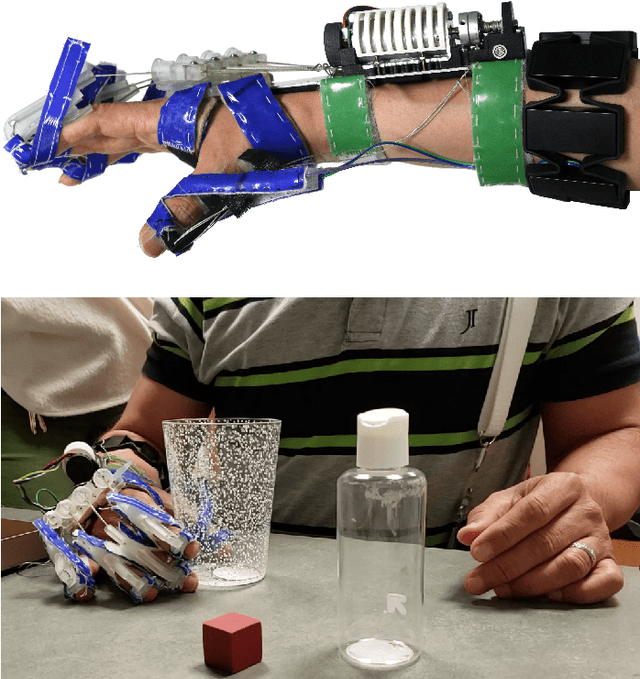

User-Driven Functional Movement Training with a Wearable Hand Robot after Stroke

Nov 18, 2019

Abstract: We studied the performance of a robotic orthosis designed to assist the paretic hand after stroke. This orthosis is designed to be wearable and fully user-controlled, allowing it to serve two possible roles: as a rehabilitation device, designed to be integrated in device-mediated rehabilitation exercises to improve performance of the affected upper limb without device assistance; or as an assistive device, designed to be integrated into daily wear to improve performance of the affected limb with grasping tasks. We present the clinical outcomes of a study designed as a feasibility test for these hypotheses. Eleven participants with chronic stroke engaged in a month-long training protocol using the orthosis. Individuals were evaluated using standard outcome measures, both with and without orthosis assistance. Fugl-Meyer scores (unassisted) showed improvement focused specifically at the distal joints of the upper limb, and Action Research Arm Test (ARAT) scores (unassisted) also showed a positive trend. These results suggest the possibility of using our orthosis as a rehabilitative device for the hand. Assisted ARAT and Box and Block Test scores showed that the device can function in an assistive role for participants with minimal functional use of their hand at baseline. We believe these results highlight the potential for wearable and user-driven robotic hand orthoses to extend the use and training of the affected upper limb after stroke.

EMG-Controlled Non-Anthropomorphic Hand Teleoperation Using a Continuous Teleoperation Subspace

Mar 06, 2019

Abstract: We present a method for EMG-driven teleoperation of non-anthropomorphic robot hands. EMG sensors are appealing as a wearable, inexpensive, and unobtrusive way to gather information about the teleoperator's hand pose. However, mapping from EMG signals to the pose space of a non-anthropomorphic hand presents multiple challenges. We present a method that first projects from forearm EMG into a subspace relevant to teleoperation. To increase robustness, we use a model which combines continuous and discrete predictors along different dimensions of this subspace. We then project from the teleoperation subspace into the pose space of the robot hand. Our method is effective and intuitive, as it enables novice users to teleoperate pick-and-place tasks faster and more robustly than state-of-the-art EMG teleoperation methods when applied to a non-anthropomorphic, multi-DOF robot hand.
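The idea of combining continuous and discrete predictors along different subspace dimensions can be sketched as follows. This is a hypothetical illustration, not the paper's model: each dimension is assigned either a regressor (whose output is clipped to an assumed normalized range of [0, 1]) or a classifier whose class index selects one of a few fixed positions on that axis. The predictor functions and level values below are placeholders.

```python
from typing import Callable, Dict, List, Sequence

Features = Sequence[float]


def hybrid_subspace_predict(
    features: Features,
    continuous: Dict[int, Callable[[Features], float]],
    discrete: Dict[int, Callable[[Features], int]],
    levels: Dict[int, Sequence[float]],
    dims: int,
) -> List[float]:
    """Predict a subspace point with one predictor type per dimension (hypothetical)."""
    point: List[float] = []
    for d in range(dims):
        if d in continuous:
            # Continuous regressor output, clipped to the assumed range [0, 1].
            point.append(min(max(continuous[d](features), 0.0), 1.0))
        elif d in discrete:
            # Discrete classifier selects one of a few fixed positions on this axis.
            point.append(levels[d][discrete[d](features)])
        else:
            raise ValueError(f"no predictor configured for dimension {d}")
    return point


# Placeholder predictors standing in for models trained on forearm EMG features.
continuous = {0: lambda f: 0.5 * f[0] + 0.5 * f[1]}
discrete = {1: lambda f: 1 if f[0] > 0.4 else 0}
levels = {1: [0.0, 1.0]}
print(hybrid_subspace_predict([0.5, 0.25], continuous, discrete, levels, dims=2))
# [0.375, 1.0]
```

The resulting subspace point would then be projected into the robot hand's pose space, as the abstract describes.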

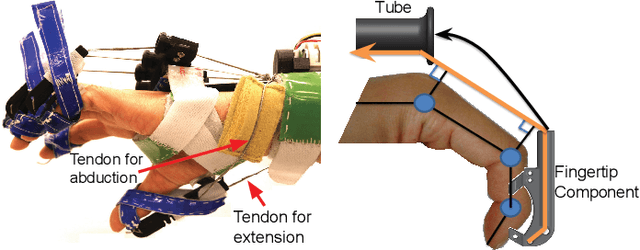

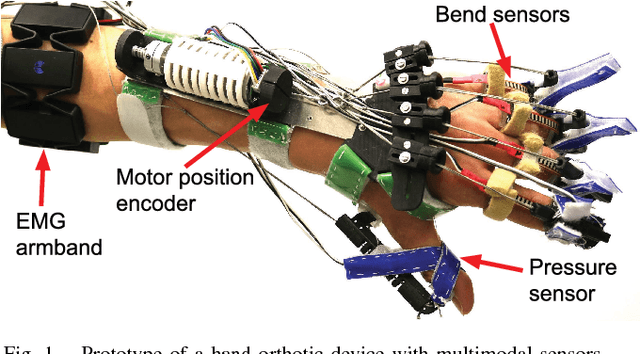

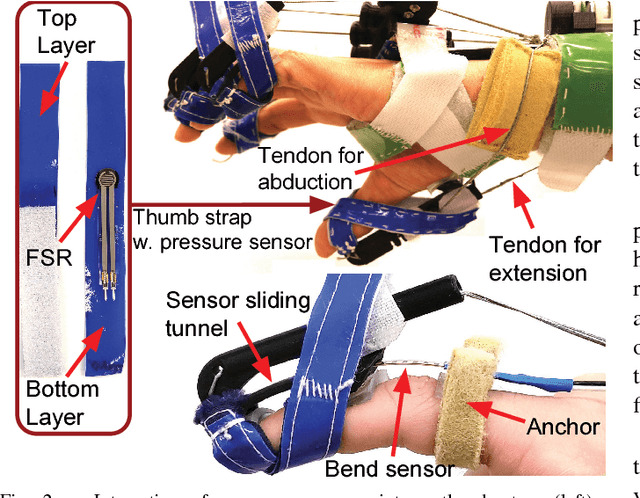

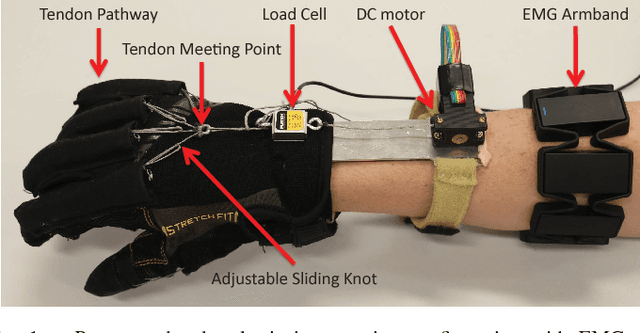

Multimodal Sensing and Interaction for a Robotic Hand Orthosis

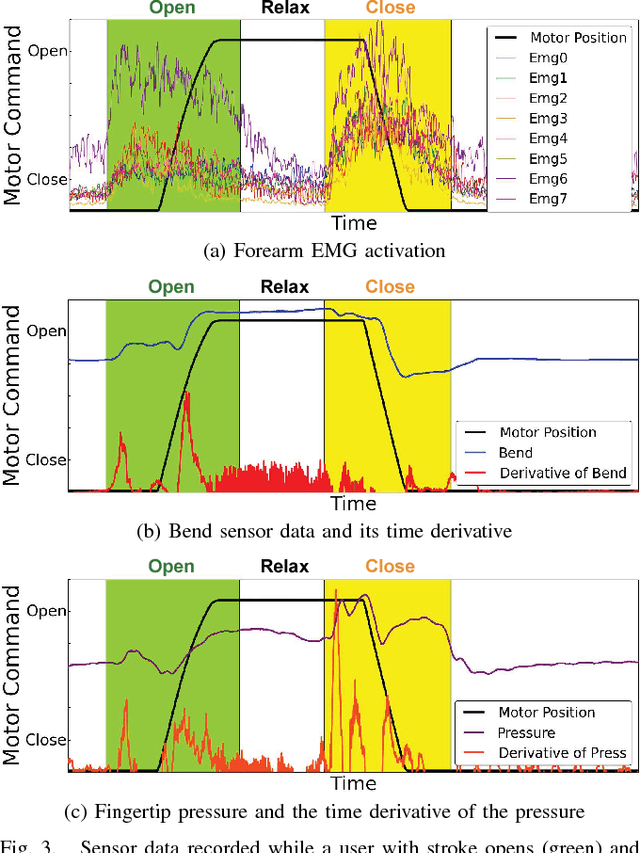

Dec 18, 2018

Abstract: Wearable robotic hand rehabilitation devices can allow greater freedom and flexibility than their workstation-like counterparts. However, the field is generally lacking effective methods by which the user can operate the device: such controls must be effective, intuitive, and robust to the wide range of possible impairment patterns. Even when focusing on a specific condition, such as stroke, the variety of encountered upper limb impairment patterns means that a single sensing modality, such as electromyography (EMG), might not be sufficient to enable controls for a broad range of users. To address this significant gap, we introduce a multimodal sensing and interaction paradigm for an active hand orthosis. In our proof-of-concept implementation, EMG is complemented by other sensing modalities, such as finger bend and contact pressure sensors. We propose multimodal interaction methods that utilize this sensory data as input, and show they can enable tasks for stroke survivors who exhibit different impairment patterns. We believe that robotic hand orthoses developed as multimodal sensory platforms will help address some of the key challenges in physical interaction with the user.

* 8 pages, 9 figures. IEEE Robotics and Automation Letters. Preprint version. Accepted December 2018.

Intuitive Hand Teleoperation by Novice Operators Using a Continuous Teleoperation Subspace

Feb 12, 2018

Abstract: Human-in-the-loop manipulation is useful when autonomous grasping cannot deal sufficiently well with corner cases or cannot operate fast enough. Using the teleoperator's hand as an input device can provide an intuitive control method, but requires mapping between pose spaces that may not be similar. We propose a low-dimensional and continuous teleoperation subspace which can be used as an intermediary for mapping between different hand pose spaces. We present an algorithm to project between pose space and teleoperation subspace. We use a non-anthropomorphic robot to demonstrate experimentally that teleoperation subspaces can effectively and intuitively enable teleoperation. In experiments, novice users completed pick-and-place tasks significantly faster using teleoperation subspace mapping than they did using state-of-the-art teleoperation methods.

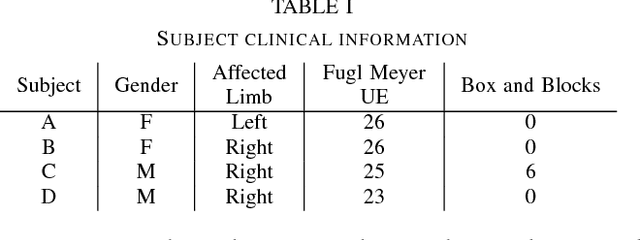

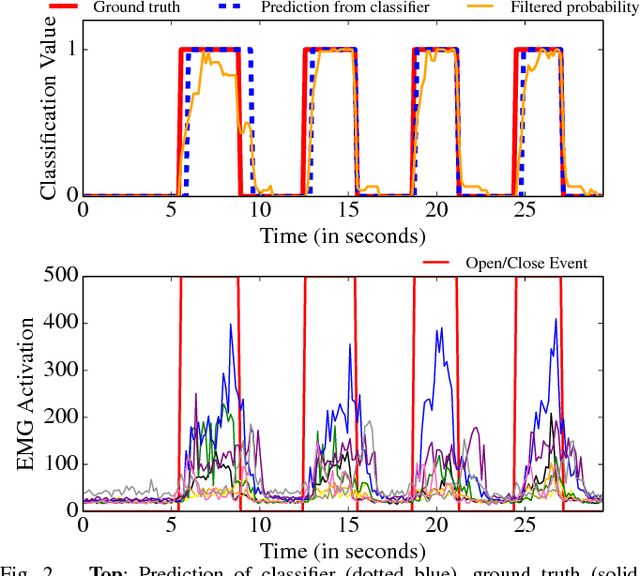

EMG Pattern Classification to Control a Hand Orthosis for Functional Grasp Assistance after Stroke

Feb 01, 2018

Abstract: Wearable orthoses can function both as assistive devices, which allow the user to live independently, and as rehabilitation devices, which allow the user to regain use of an impaired limb. To be fully wearable, such devices must have intuitive controls, and to improve quality of life, the device should enable the user to perform Activities of Daily Living. In this context, we explore the feasibility of using electromyography (EMG) signals to control a wearable exotendon device to enable pick-and-place tasks. We use an easy-to-don, commodity forearm EMG band with 8 sensors to create an EMG pattern classification control for an exotendon device. With this control, we are able to detect a user's intent to open the hand, and can thus enable extension and pick-and-place tasks. In experiments with stroke survivors, we explore the accuracy of this control in both non-functional and functional tasks. Our results support the feasibility of developing wearable devices with intuitive controls which provide a functional context for rehabilitation.
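A typical EMG pattern-classification pipeline windows the multichannel signal, extracts a per-channel amplitude feature, and classifies the feature vector into an intent. The sketch below assumes root-mean-square (RMS) features and, for brevity, a nearest-centroid classifier; the feature choice, the classifier, the class names, and the toy windows are all hypothetical and may differ from what the paper used. Only the 8-channel count comes from the abstract.

```python
import math
from typing import Dict, List, Sequence

NUM_CHANNELS = 8  # the commodity forearm band described in the abstract has 8 sensors


def rms_features(window: Sequence[Sequence[float]]) -> List[float]:
    """Root-mean-square amplitude per channel over one window of EMG samples."""
    if not window:
        raise ValueError("window must contain at least one sample")
    n = len(window)
    return [
        math.sqrt(sum(sample[ch] ** 2 for sample in window) / n)
        for ch in range(NUM_CHANNELS)
    ]


def train_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each class into a single centroid."""
    return {
        label: [sum(col) / len(col) for col in zip(*feats)]
        for label, feats in examples.items()
    }


def classify(features: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the label of the nearest centroid (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((f - c) ** 2 for f, c in zip(features, centroids[label])),
    )


# Hypothetical training windows: "open" activates channels 0-3, "relax" is quiet.
open_window = [[1.0] * 4 + [0.0] * 4, [-1.0] * 4 + [0.0] * 4]
relax_window = [[0.0] * 8, [0.0] * 8]
centroids = train_centroids(
    {"open": [rms_features(open_window)], "relax": [rms_features(relax_window)]}
)
print(classify(rms_features([[0.8] * 4 + [0.1] * 4]), centroids))  # open
```

In an orthosis controller, a detected "open" intent would trigger the extension motor; any real system would also need per-user calibration and smoothing across consecutive windows.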
