Cagri Ozcinar

Responsible AI: The Good, The Bad, The AI

Jan 28, 2026

Abstract:The rapid proliferation of artificial intelligence across organizational contexts has generated profound strategic opportunities while introducing significant ethical and operational risks. Despite growing scholarly attention to responsible AI, extant literature remains fragmented and often adopts either an optimistic stance emphasizing value creation or an excessively cautious perspective fixated on potential harms. This paper addresses this gap by presenting a comprehensive examination of AI's dual nature through the lens of strategic information systems. Drawing upon a systematic synthesis of the responsible AI literature and grounded in paradox theory, we develop the Paradox-based Responsible AI Governance (PRAIG) framework that articulates: (1) the strategic benefits of AI adoption, (2) the inherent risks and unintended consequences, and (3) governance mechanisms that enable organizations to navigate these tensions. Our framework advances theoretical understanding by conceptualizing responsible AI governance as the dynamic management of paradoxical tensions between value creation and risk mitigation. We provide formal propositions demonstrating that trade-off approaches amplify rather than resolve these tensions, and we develop a taxonomy of paradox management strategies with specified contingency conditions. For practitioners, we offer actionable guidance for developing governance structures that neither stifle innovation nor expose organizations to unacceptable risks. The paper concludes with a research agenda for advancing responsible AI governance scholarship.

A Lightweight Modular Framework for Constructing Autonomous Agents Driven by Large Language Models: Design, Implementation, and Applications in AgentForge

Jan 19, 2026

Abstract:The emergence of LLMs has catalyzed a paradigm shift in autonomous agent development, enabling systems capable of reasoning, planning, and executing complex multi-step tasks. However, existing agent frameworks often suffer from architectural rigidity, vendor lock-in, and prohibitive complexity that impedes rapid prototyping and deployment. This paper presents AgentForge, a lightweight, open-source Python framework designed to democratize the construction of LLM-driven autonomous agents through a principled modular architecture. AgentForge introduces three key innovations: (1) a composable skill abstraction that enables fine-grained task decomposition with formally defined input-output contracts, (2) a unified LLM backend interface supporting seamless switching between cloud-based APIs and local inference engines, and (3) a declarative YAML-based configuration system that separates agent logic from implementation details. We formalize the skill composition mechanism as a directed acyclic graph (DAG) and prove its expressiveness for representing arbitrary sequential and parallel task workflows. Comprehensive experimental evaluation across four benchmark scenarios demonstrates that AgentForge achieves competitive task completion rates while reducing development time by 62% compared to LangChain and 78% compared to direct API integration. Latency measurements confirm sub-100ms orchestration overhead, rendering the framework suitable for real-time applications. The modular design facilitates extension: we demonstrate the integration of six built-in skills and provide comprehensive documentation for custom skill development. AgentForge addresses a critical gap in the LLM agent ecosystem by providing researchers and practitioners with a production-ready foundation for constructing, evaluating, and deploying autonomous agents without sacrificing flexibility or performance.
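As a rough illustration of composing skills as a DAG, the sketch below runs hypothetical skills in dependency order using Python's standard `graphlib` module. It is not AgentForge's API: the function `run_skill_dag`, the skill names, and the dictionary-based contracts are all assumptions made for this example.

```python
from graphlib import TopologicalSorter


def run_skill_dag(skills, dependencies, initial_inputs):
    """Run each skill once all of its upstream skills have finished.

    skills:        name -> callable that receives the dict of results so far
    dependencies:  name -> set of skill names that must run first
    (Both structures are hypothetical, not taken from AgentForge.)
    """
    results = dict(initial_inputs)
    # static_order() yields every node after all of its predecessors and
    # raises graphlib.CycleError if the graph is not acyclic.
    for name in TopologicalSorter(dependencies).static_order():
        results[name] = skills[name](results)
    return results


# Toy workflow: "count" and "echo" both depend only on "fetch", so they form
# two independent branches; "merge" joins them.
skills = {
    "fetch": lambda r: r["query"].upper(),
    "count": lambda r: len(r["fetch"]),
    "echo": lambda r: r["fetch"] + "!",
    "merge": lambda r: f'{r["echo"]} ({r["count"]})',
}
dependencies = {
    "fetch": set(),
    "count": {"fetch"},
    "echo": {"fetch"},
    "merge": {"count", "echo"},
}

results = run_skill_dag(skills, dependencies, {"query": "hello"})
print(results["merge"])
```

This version executes skills one at a time. Because "count" and "echo" share no dependency on each other, a scheduler could dispatch them concurrently without changing the result.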

Dynamic Nested Hierarchies: Pioneering Self-Evolution in Machine Learning Architectures for Lifelong Intelligence

Nov 18, 2025

Abstract:Contemporary machine learning models, including large language models, exhibit remarkable capabilities in static tasks yet falter in non-stationary environments due to rigid architectures that hinder continual adaptation and lifelong learning. Building upon the nested learning paradigm, which decomposes models into multi-level optimization problems with fixed update frequencies, this work proposes dynamic nested hierarchies as the next evolutionary step in advancing artificial intelligence and machine learning. Dynamic nested hierarchies empower models to autonomously adjust the number of optimization levels, their nesting structures, and update frequencies during training or inference, inspired by neuroplasticity to enable self-evolution without predefined constraints. This innovation addresses the anterograde amnesia in existing models, facilitating true lifelong learning by dynamically compressing context flows and adapting to distribution shifts. Through rigorous mathematical formulations, theoretical proofs of convergence, expressivity bounds, and sublinear regret in varying regimes, alongside empirical demonstrations of superior performance in language modeling, continual learning, and long-context reasoning, dynamic nested hierarchies establish a foundational advancement toward adaptive, general-purpose intelligence.

A Mathematical Framework for AI Singularity: Conditions, Bounds, and Control of Recursive Improvement

Nov 08, 2025

Abstract:AI systems improve by drawing on more compute, data, energy, and better training methods. This paper asks a precise, testable version of the "runaway growth" question: under what measurable conditions could capability escalate without bound in finite time, and under what conditions can that be ruled out? We develop an analytic framework for recursive self-improvement that links capability growth to resource build-out and deployment policies. Physical and information-theoretic limits from power, bandwidth, and memory define a service envelope that caps instantaneous improvement. An endogenous growth model couples capital to compute, data, and energy and defines a critical boundary separating superlinear from subcritical regimes. We derive decision rules that map observable series (facility power, IO bandwidth, training throughput, benchmark losses, and spending) into yes/no certificates for runaway versus nonsingular behavior. The framework yields falsifiable tests based on how fast improvement accelerates relative to its current level, and it provides safety controls that are directly implementable in practice, such as power caps, throughput throttling, and evaluation gates. Analytical case studies cover capped-power, saturating-data, and investment-amplified settings, illustrating when the envelope binds and when it does not. The approach is simulation-free and grounded in measurements engineers already collect. Limitations include dependence on the chosen capability metric and on regularity diagnostics; future work will address stochastic dynamics, multi-agent competition, and abrupt architectural shifts. Overall, the results replace speculation with testable conditions and deployable controls for certifying or precluding an AI singularity.
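To make the notion of "escalating without bound in finite time" concrete, the sketch below uses the textbook power-law growth equation dC/dt = a·C^p. This is an assumed stand-in, not the paper's endogenous growth model: the symbols `c0`, `a`, and `p` and both function names are introduced here for illustration only. For p > 1 the solution C(t) = (c0^(1-p) − a(p−1)t)^(1/(1−p)) diverges at t* = c0^(1-p) / (a(p−1)); for p ≤ 1 it stays finite for all finite t.

```python
def blow_up_time(c0, a, p):
    """Finite blow-up time of dC/dt = a * C**p with C(0) = c0, or None.

    Illustrative textbook model only; c0, a and p are assumed symbols.
    """
    if c0 <= 0 or a <= 0:
        raise ValueError("c0 and a must be positive")
    if p <= 1:
        # Linear or sublinear feedback: growth is at most exponential,
        # so capability remains finite at every finite time.
        return None
    return c0 ** (1 - p) / (a * (p - 1))


def capability_at(t, c0, a, p):
    """Closed-form C(t) for p > 1, valid only before the blow-up time."""
    t_star = blow_up_time(c0, a, p)
    if t_star is None:
        raise ValueError("closed form below assumes p > 1")
    if not 0 <= t < t_star:
        raise ValueError("t must lie in [0, blow-up time)")
    return (c0 ** (1 - p) - a * (p - 1) * t) ** (1 / (1 - p))


print(blow_up_time(1.0, 1.0, 2.0))        # superlinear feedback
print(blow_up_time(1.0, 1.0, 1.0))        # exponential growth, no blow-up
print(capability_at(0.5, 1.0, 1.0, 2.0))  # halfway to the blow-up time
```

In this toy model the exponent p plays the role of a critical boundary: the same observable growth rate yields a finite-time singularity only when improvement scales faster than linearly with the current capability level.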

Spherical Vision Transformer for 360-degree Video Saliency Prediction

Aug 24, 2023

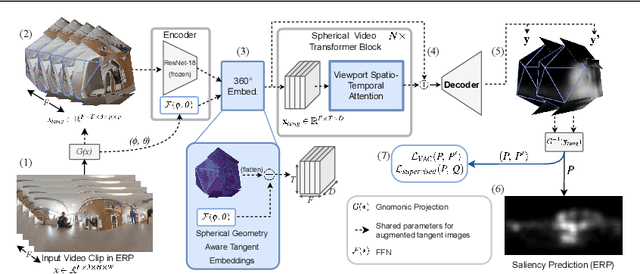

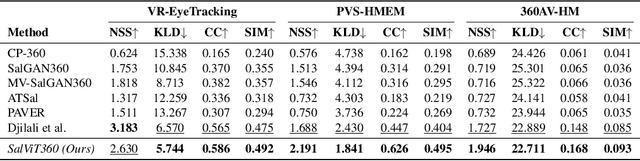

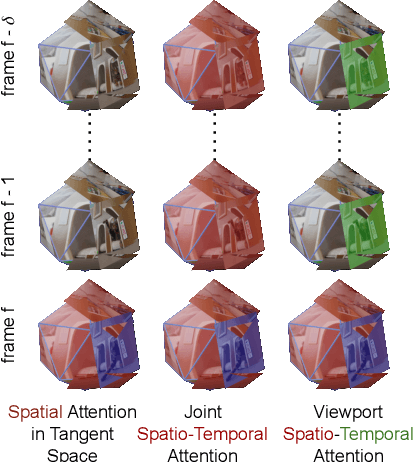

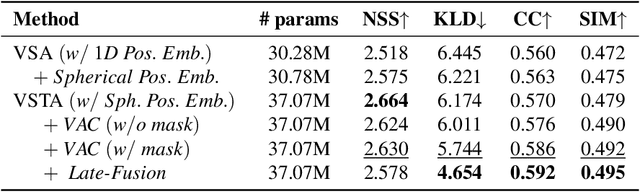

Abstract:The growing interest in omnidirectional videos (ODVs), which capture the full field-of-view (FOV), has made 360-degree saliency prediction increasingly important in computer vision. However, predicting where humans look in 360-degree scenes presents unique challenges, including spherical distortion, high resolution, and limited labelled data. We propose a novel vision-transformer-based model for omnidirectional videos named SalViT360 that leverages tangent image representations. We introduce a spherical geometry-aware spatiotemporal self-attention mechanism that is capable of effective omnidirectional video understanding. Furthermore, we present a consistency-based unsupervised regularization term for projection-based 360-degree dense-prediction models to reduce artefacts in the predictions that occur after inverse projection. Our approach is the first to employ tangent images for omnidirectional saliency prediction, and our experimental results on three ODV saliency datasets demonstrate its effectiveness compared to the state-of-the-art.
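Tangent image representations are commonly built with the gnomonic projection, which maps points on the sphere onto a plane touching it at a chosen centre. The sketch below shows that standard projection for a unit sphere; it is a generic illustration and assumes nothing about how SalViT360 samples or sizes its tangent images. The function name `gnomonic_project` is hypothetical.

```python
import math


def gnomonic_project(lat, lon, lat0, lon0):
    """Project a unit-sphere point onto the plane tangent at (lat0, lon0).

    All angles are in radians. Returns plane coordinates (x, y), with the
    tangent point itself mapping to the origin.
    """
    dlon = lon - lon0
    # Cosine of the angular distance between the point and the tangent point.
    cos_c = (math.sin(lat0) * math.sin(lat)
             + math.cos(lat0) * math.cos(lat) * math.cos(dlon))
    if cos_c <= 0:
        raise ValueError("point is not on the hemisphere facing the plane")
    x = math.cos(lat) * math.sin(dlon) / cos_c
    y = (math.cos(lat0) * math.sin(lat)
         - math.sin(lat0) * math.cos(lat) * math.cos(dlon)) / cos_c
    return x, y


# A point 45 degrees east of a tangent point on the equator lands at
# x = tan(45 degrees) on the tangent plane.
x, y = gnomonic_project(0.0, math.pi / 4, 0.0, 0.0)
print(x, y)
```

Distortion grows with distance from the tangent point, which is why a sphere is usually covered by many small tangent patches instead of a few large ones.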

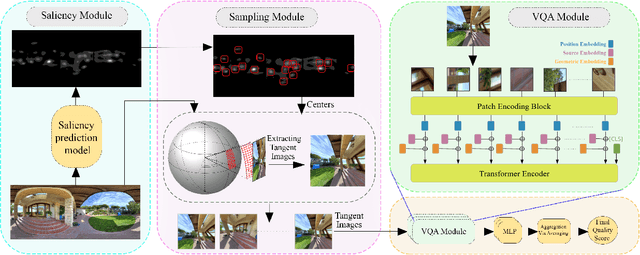

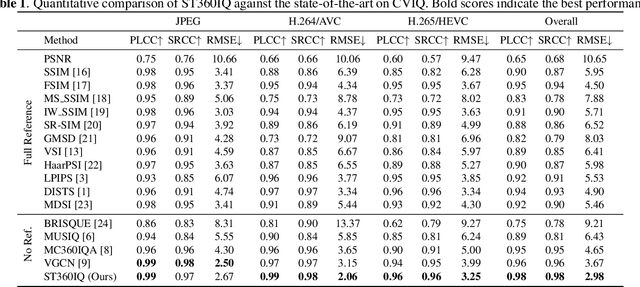

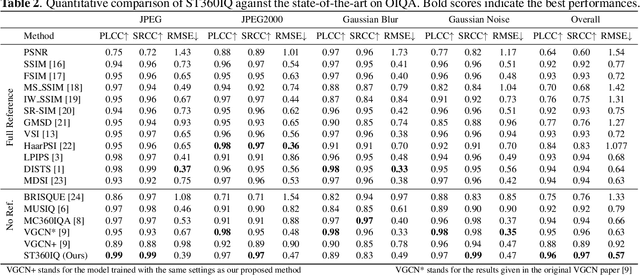

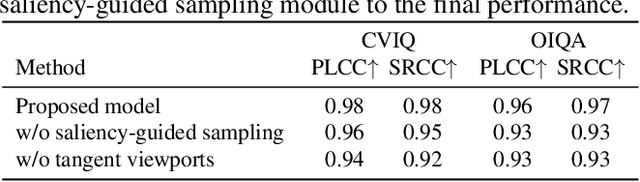

ST360IQ: No-Reference Omnidirectional Image Quality Assessment with Spherical Vision Transformers

Mar 13, 2023

Abstract:Omnidirectional images, also known as 360 images, can deliver immersive and interactive visual experiences. As their popularity has increased dramatically in recent years, evaluating the quality of 360 images has become a problem of interest since it provides insights for capturing, transmitting, and consuming this new media. However, directly adapting quality assessment methods proposed for standard natural images to omnidirectional data poses certain challenges. These models need to deal with very high-resolution data and implicit distortions due to the spherical form of the images. In this study, we present a method for no-reference 360 image quality assessment. Our proposed ST360IQ model extracts tangent viewports from the salient parts of the input omnidirectional image and employs a vision-transformer-based module that processes saliency-selective patches/tokens and estimates a quality score from each viewport. Then, it aggregates these scores to give a final quality score. Our experiments on two benchmark datasets, OIQA and CVIQ, demonstrate that, compared to the state-of-the-art, our approach yields quality predictions that correlate with human-perceived image quality. The code is available at https://github.com/Nafiseh-Tofighi/ST360IQ

Ensemble approach for detection of depression using EEG features

Mar 07, 2021

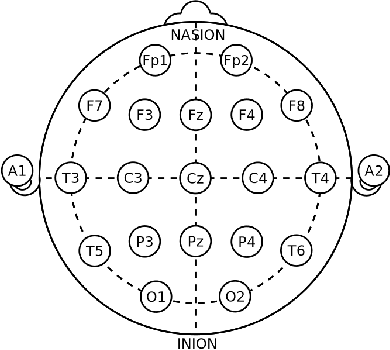

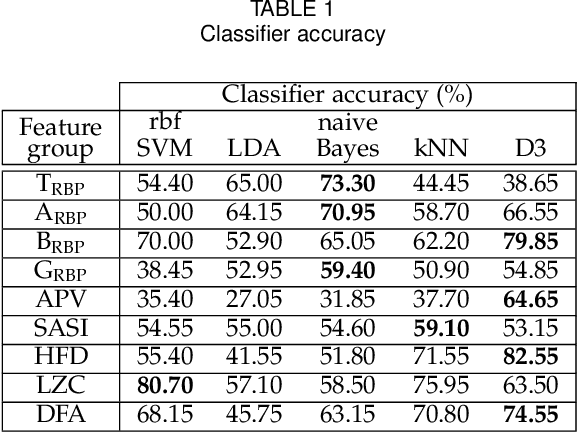

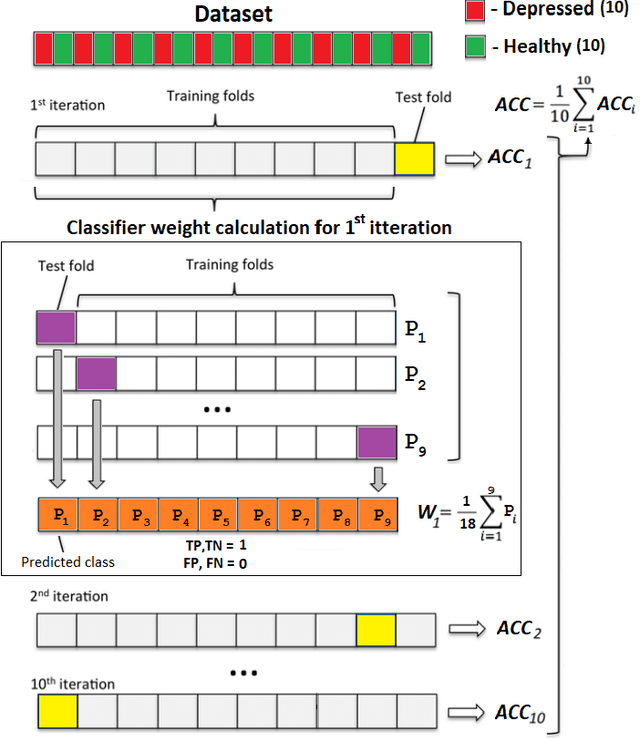

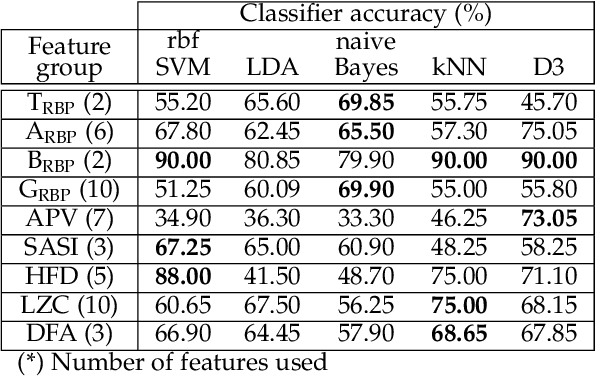

Abstract:Depression is a public health issue which severely affects one's well-being and causes negative social and economic effects for society. To raise awareness of these problems, this publication aims to determine if long-lasting effects of depression can be determined from electroencephalographic (EEG) signals. The article contains an accuracy comparison for SVM, LDA, NB, kNN and D3 binary classifiers which were trained using linear (relative band powers, APV, SASI) and non-linear (HFD, LZC, DFA) EEG features. The age- and gender-matched dataset consisted of 10 healthy subjects and 10 subjects with a depression diagnosis at some point in their lifetime. Several of the proposed feature selection and classifier combinations reached an accuracy of 90%, where all models were evaluated using 10-fold cross-validation and averaged over 100 repetitions with random sample permutations.
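The evaluation protocol described above, 10-fold cross-validation averaged over 100 random permutations, can be sketched with the standard library alone. Everything below is illustrative: the one-dimensional synthetic features, the 1-nearest-neighbour classifier, and the function names are assumptions for this example and are not the paper's data, features, or models.

```python
import random
from statistics import mean


def nearest_neighbour(train_x, train_y, test_x):
    """Toy 1-NN classifier on scalar features (stand-in for a real model)."""
    preds = []
    for x in test_x:
        nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
        preds.append(train_y[nearest])
    return preds


def repeated_kfold_accuracy(samples, labels, fit_predict,
                            k=10, repetitions=100, seed=0):
    """Mean accuracy of k-fold cross-validation over shuffled repetitions."""
    rng = random.Random(seed)
    n = len(samples)
    scores = []
    for _ in range(repetitions):
        order = list(range(n))
        rng.shuffle(order)  # a new random sample permutation per repetition
        correct = 0
        for fold in range(k):
            test_idx = order[fold::k]  # every k-th shuffled index
            held_out = set(test_idx)
            train_idx = [i for i in order if i not in held_out]
            preds = fit_predict([samples[i] for i in train_idx],
                                [labels[i] for i in train_idx],
                                [samples[i] for i in test_idx])
            correct += sum(p == labels[i] for p, i in zip(preds, test_idx))
        scores.append(correct / n)
    return mean(scores)


# Synthetic, trivially separable data with 10 samples per class.
features = [0.1 * i for i in range(10)] + [10 + 0.1 * i for i in range(10)]
labels = [0] * 10 + [1] * 10
mean_accuracy = repeated_kfold_accuracy(features, labels, nearest_neighbour)
print(mean_accuracy)
```

With 20 samples and 10 folds, each fold holds out only two samples, so a single repetition gives a coarse accuracy estimate. Averaging over many permutations reduces the dependence on any one partition.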

Quality Assessment of Super-Resolved Omnidirectional Image Quality Using Tangential Views

Jan 25, 2021

Abstract:Omnidirectional images (ODIs), also known as 360-degree images, enable viewers to explore all directions of a given 360-degree scene from a fixed point. Designing an immersive imaging system with ODIs is challenging, as such systems require very high-resolution coverage of the entire 360 viewing space to provide an enhanced quality of experience (QoE). Despite remarkable progress on single image super-resolution (SISR) methods with deep-learning techniques, no study of quality assessment for super-resolved ODIs exists to analyze the quality of such SISR techniques. This paper proposes an objective, full-reference quality assessment framework which studies quality measurement for ODIs generated by GAN-based and CNN-based SISR methods. The quality assessment framework utilizes tangential views to cope with the spherical nature of a given ODI. The generated tangential views are distortion-free and can be efficiently scaled to high-resolution spherical data for SISR quality measurement. We extensively evaluate two state-of-the-art SISR methods using widely used full-reference SISR quality metrics adapted to our designed framework. In addition, our study reveals that most objective metrics show high performance for CNN-based SISR, while subjective tests favor GAN-based architectures.

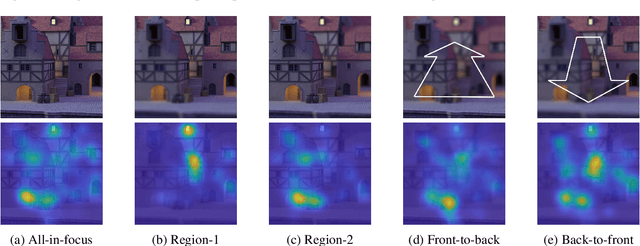

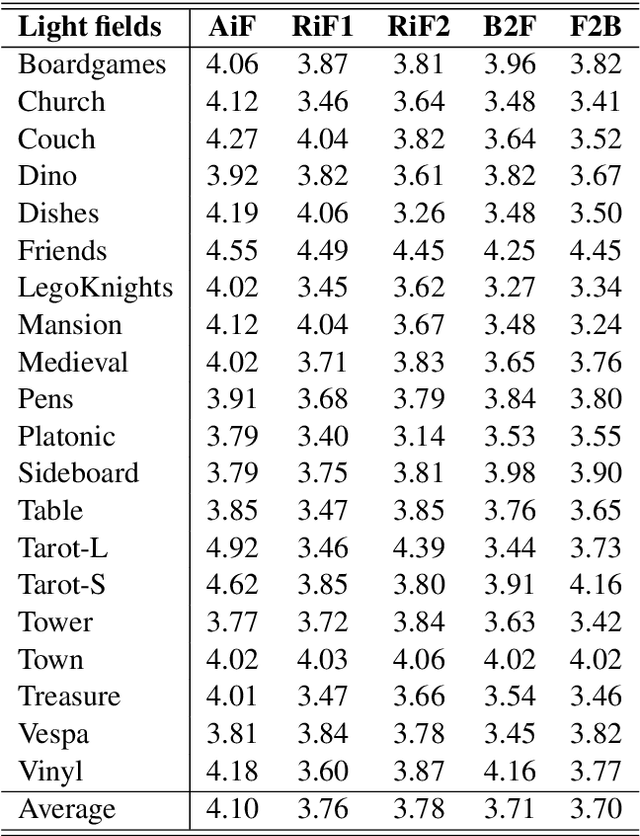

A Study on Visual Perception of Light Field Content

Aug 07, 2020

Abstract:The effective design of visual computing systems depends heavily on the anticipation of visual attention, or saliency. While visual attention is well investigated for conventional 2D images and video, it is nevertheless a very active research area for emerging immersive media. In particular, visual attention of light fields (light rays of a scene captured by a grid of cameras or micro lenses) has only recently become a focus of research. As they may be rendered and consumed in various ways, a primary challenge that arises is the definition of what visual perception of light field content should be. In this work, we present a visual attention study on light field content. We conducted perception experiments displaying them to users in various ways and collected corresponding visual attention data. Our analysis highlights characteristics of user behaviour in light field imaging applications. The light field data set and attention data are provided with this paper.

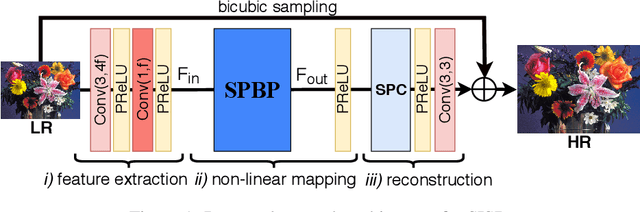

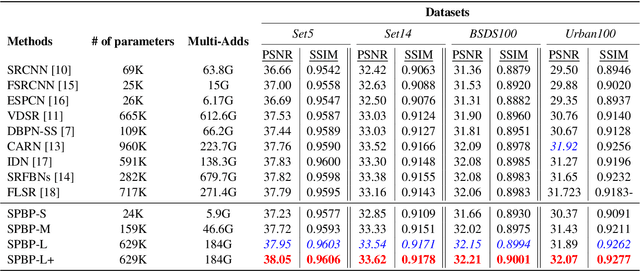

Sub-Pixel Back-Projection Network For Lightweight Single Image Super-Resolution

Aug 03, 2020

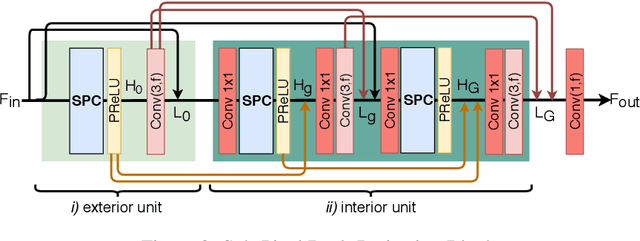

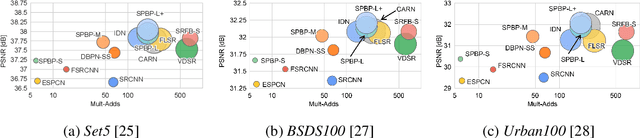

Abstract:Convolutional neural network (CNN)-based methods have achieved great success for single-image super-resolution (SISR). However, most models attempt to improve reconstruction accuracy while increasing the number of model parameters required. To tackle this problem, in this paper, we study reducing the number of parameters and computational cost of CNN-based SISR methods while maintaining the accuracy of super-resolution reconstruction performance. To this end, we introduce a novel network architecture for SISR, which strikes a good trade-off between reconstruction quality and low computational complexity. Specifically, we propose an iterative back-projection architecture using sub-pixel convolution instead of deconvolution layers. We evaluate the computational cost and reconstruction accuracy of our proposed model with extensive quantitative and qualitative evaluations. Experimental results reveal that our proposed method uses fewer parameters and reduces the computational cost while maintaining reconstruction accuracy against state-of-the-art SISR methods over four well-known SR benchmark datasets. Code is available at "https://github.com/supratikbanerjee/SubPixel-BackProjection_SuperResolution".
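Sub-pixel convolution upsamples by letting an ordinary convolution produce r² times as many channels and then rearranging those channels into an r-times larger spatial grid. The sketch below shows only that rearrangement step (often called pixel shuffle) on nested lists. It is a generic illustration, assuming the common channel ordering in which input channel c·r² + i·r + j fills sub-pixel offset (i, j); it is not the paper's network, and the function name is hypothetical.

```python
def pixel_shuffle(feature_maps, r):
    """Rearrange a (C*r*r, H, W) nested list into (C, H*r, W*r).

    Assumed ordering: input channel c*r*r + i*r + j supplies the value at
    row offset i and column offset j inside each r-by-r output cell.
    """
    channels_in = len(feature_maps)
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    channels_out = channels_in // (r * r)
    out = [[[0] * (width * r) for _ in range(height * r)]
           for _ in range(channels_out)]
    for c in range(channels_out):
        for h in range(height):
            for w in range(width):
                for i in range(r):
                    for j in range(r):
                        source = feature_maps[c * r * r + i * r + j]
                        out[c][h * r + i][w * r + j] = source[h][w]
    return out


# Four 1x1 channels become one 2x2 channel when r = 2.
low_res = [[[1]], [[2]], [[3]], [[4]]]
high_res = pixel_shuffle(low_res, 2)
print(high_res)
```

Because the rearrangement has no parameters of its own, all learned weights sit in the preceding convolution, which operates at the lower resolution. That is one reason this form of upsampling tends to be cheaper than a deconvolution layer applied at the output resolution.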
